Vivek Hazari

DiPietro-Hazari Kappa: A Novel Metric for Assessing Labeling Quality via Annotation

Sep 17, 2022

Abstract: Data is a key component of modern machine learning, but statistics for assessing data label quality remain sparse in the literature. Here, we introduce DiPietro-Hazari Kappa, a novel statistical metric for assessing the quality of suggested dataset labels in the context of human annotation. Rooted in the classical Fleiss's Kappa measure of inter-annotator agreement, the DiPietro-Hazari Kappa quantifies the empirical annotator agreement differential that was attained above random chance. We offer a thorough theoretical examination of Fleiss's Kappa before turning to our derivation of DiPietro-Hazari Kappa. Finally, we conclude with a matrix formulation and a set of procedural instructions for easy computational implementation.
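For readers unfamiliar with the classical measure this abstract builds on, the following is a minimal sketch of Fleiss's Kappa only. The abstract does not state the DiPietro-Hazari Kappa formula, so no attempt is made to reproduce it here. The function name `fleiss_kappa` and the input layout (one row per item, one column per category, each cell holding the number of annotators who chose that category) are assumptions made for illustration, not the authors' implementation.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Classical Fleiss's Kappa (illustrative sketch, hypothetical helper).

    counts[i][j] is the number of annotators who assigned item i to
    category j. Every item must be rated by the same number of annotators.
    """
    num_items = len(counts)
    if num_items == 0:
        raise ValueError("need at least one item")

    n = sum(counts[0])  # annotators per item
    if n < 2:
        raise ValueError("need at least two annotators per item")
    if any(sum(row) != n for row in counts):
        raise ValueError("every item must have the same number of ratings")

    num_categories = len(counts[0])

    # Observed agreement: for each item, the fraction of annotator pairs
    # that agree, averaged over all items.
    per_item = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    p_observed = sum(per_item) / num_items

    # Chance agreement: sum of squared overall category proportions.
    proportions = [
        sum(row[j] for row in counts) / (num_items * n)
        for j in range(num_categories)
    ]
    p_chance = sum(p * p for p in proportions)

    if p_chance == 1.0:
        # All ratings fall in a single category; kappa is undefined.
        raise ValueError("kappa is undefined when only one category is used")

    # Agreement attained above chance, normalized by the maximum attainable.
    return (p_observed - p_chance) / (1.0 - p_chance)


# Three items, three annotators each, two categories, full agreement.
ratings = [[3, 0], [0, 3], [3, 0]]
print(fleiss_kappa(ratings))  # prints 1.0
```

A value of 1 indicates complete agreement, while values at or below 0 indicate agreement no better than chance.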

Robin: A Novel Online Suicidal Text Corpus of Substantial Breadth and Scale

Sep 13, 2022

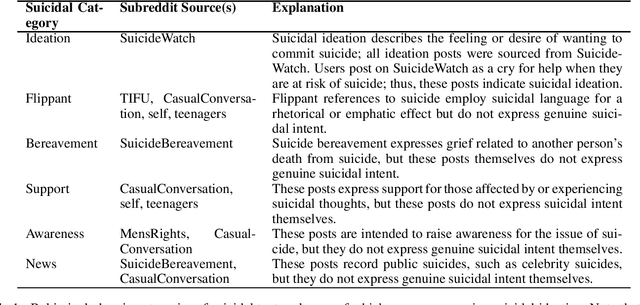

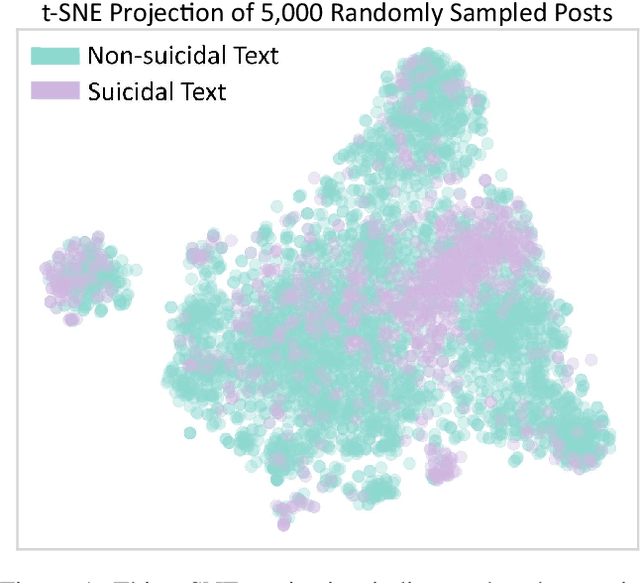

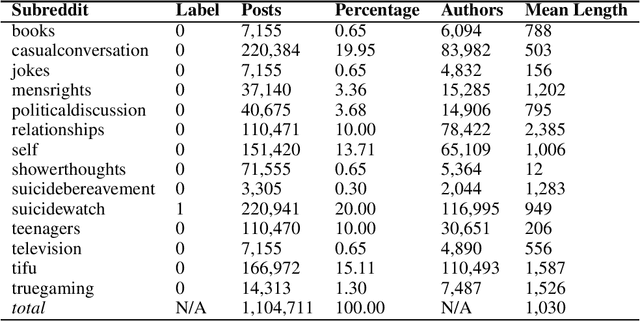

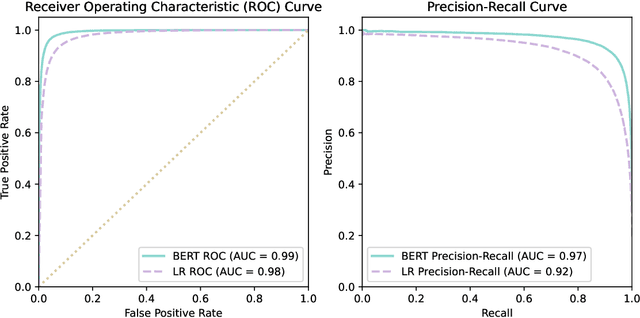

Abstract: Suicide is a major public health crisis. With more than 20,000,000 suicide attempts each year, the early detection of suicidal intent has the potential to save hundreds of thousands of lives. Traditional mental health screening methods are time-consuming, costly, and often inaccessible to disadvantaged populations; online detection of suicidal intent using machine learning offers a viable alternative. Here we present Robin, the largest non-keyword-generated suicidal corpus to date, consisting of over 1.1 million online forum postings. In addition to its unprecedented size, Robin is specially constructed to include various categories of suicidal text, such as suicide bereavement and flippant references, better enabling models trained on Robin to learn the subtle nuances of text expressing suicidal ideation. Experimental results achieve state-of-the-art performance for the classification of suicidal text, both with traditional methods like logistic regression (F1=0.85) and with large-scale pre-trained language models like BERT (F1=0.92). Finally, we release the Robin dataset publicly as a machine learning resource with the potential to drive the next generation of suicidal sentiment research.
