Virginia Poli

Physical Scales Matter: The Role of Receptive Fields and Advection in Satellite-Based Thunderstorm Nowcasting with Convolutional Neural Networks

Apr 14, 2025

Abstract: The focus of nowcasting development is transitioning from physically motivated advection methods to purely data-driven Machine Learning (ML) approaches. Nevertheless, recent work indicates that incorporating advection into the ML value chain has improved skill for radar-based precipitation nowcasts. However, the generality of this approach and the underlying causes remain unexplored. This study investigates the generality by probing the approach on satellite-based thunderstorm nowcasts for the first time. Resorting to a scale argument, we then put forth an explanation of when and why skill improvements can be expected. In essence, advection guarantees that thunderstorm patterns relevant for nowcasting are contained in the receptive field at long lead times. To test our hypotheses, we train ResU-Nets solving segmentation tasks with lightning observations as ground truth. The inputs to the Baseline Neural Network (BNN) are short time series of multispectral satellite imagery and lightning observations, whereas the Advection-Informed Neural Network (AINN) additionally receives the Lagrangian persistence nowcast of all input channels at the desired lead time. Overall, we find only a minor skill improvement of the AINN over the BNN when considering fully averaged scores. However, assessing skill conditioned on lead time and wind speed, we demonstrate that our scale argument correctly predicts the onset of skill improvement of the AINN over the BNN after 2 h lead time. We confirm that advection generally becomes more important with longer lead times and higher wind speeds. Our work accentuates the importance of considering and incorporating the underlying physical scales when designing ML-based forecasting models.
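The scale argument in this abstract can be illustrated with a back-of-the-envelope calculation. The sketch below is not taken from the paper: the function names, the half-width criterion, and all numerical values (receptive field size, pixel size, wind speed) are assumptions chosen only for illustration. It estimates how far a pattern travels at a constant wind speed and the lead time at which that displacement would exceed the half-width of a network's receptive field.

```python
def advection_displacement_km(wind_speed_ms: float, lead_time_h: float) -> float:
    """Distance (km) a pattern travels when advected at a constant speed."""
    return wind_speed_ms * 3.6 * lead_time_h  # m/s -> km/h, then times hours


def critical_lead_time_h(
    receptive_field_px: int, pixel_size_km: float, wind_speed_ms: float
) -> float:
    """Lead time (h) at which the displacement equals the receptive-field half-width.

    Assumption: a pattern is 'visible' to an output pixel as long as it starts
    within half the receptive-field width of that pixel.
    """
    if wind_speed_ms <= 0:
        return float("inf")  # no motion: the pattern never leaves the field
    half_width_km = 0.5 * receptive_field_px * pixel_size_km
    return half_width_km / (wind_speed_ms * 3.6)


# Hypothetical values, not from the paper.
displacement = advection_displacement_km(wind_speed_ms=10.0, lead_time_h=2.0)
onset = critical_lead_time_h(receptive_field_px=100, pixel_size_km=3.0, wind_speed_ms=10.0)
print(f"displacement after 2 h: {displacement:.0f} km")
print(f"critical lead time: {onset:.2f} h")
```

Under these assumed values, doubling the wind speed halves the critical lead time, which is consistent with the abstract's statement that advection matters more at longer lead times and higher wind speeds.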

GPTCast: a weather language model for precipitation nowcasting

Jul 02, 2024

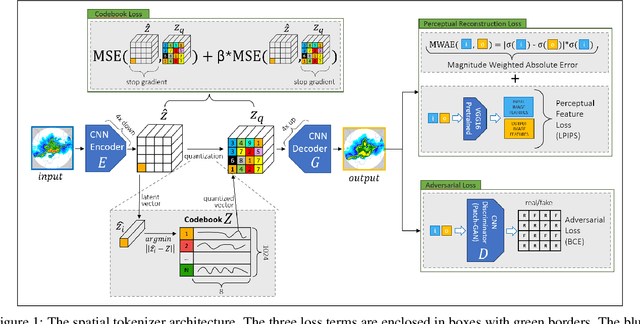

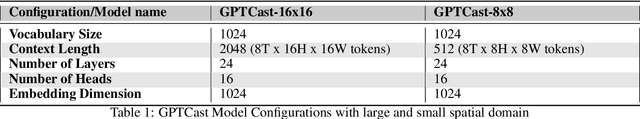

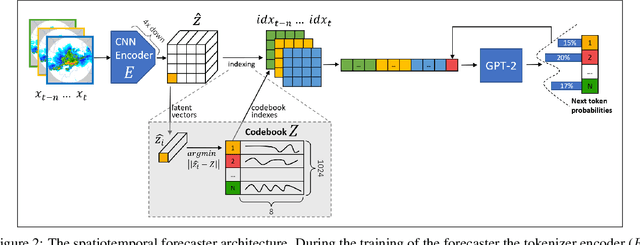

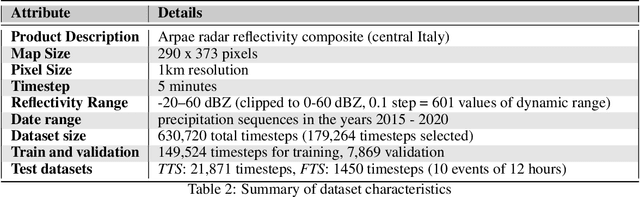

Abstract: This work introduces GPTCast, a generative deep-learning method for ensemble nowcasting of radar-based precipitation, inspired by advancements in large language models (LLMs). We employ a GPT model as a forecaster to learn spatiotemporal precipitation dynamics using tokenized radar images. The tokenizer is based on a Quantized Variational Autoencoder featuring a novel reconstruction loss tailored for the skewed distribution of precipitation that promotes faithful reconstruction of high rainfall rates. The approach produces realistic ensemble forecasts and provides probabilistic outputs with accurate uncertainty estimation. The model is trained without resorting to randomness; all variability is learned solely from the data and exposed by the model at inference for ensemble generation. We train and test GPTCast using a 6-year radar dataset over the Emilia-Romagna region in Northern Italy, showing superior results compared to state-of-the-art ensemble extrapolation methods.
