Elena Tomasi

GPTCast: a weather language model for precipitation nowcasting

Jul 02, 2024

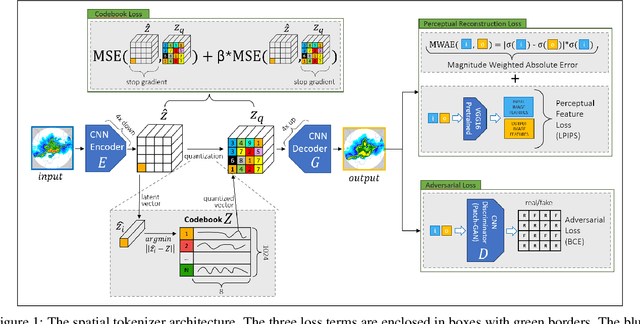

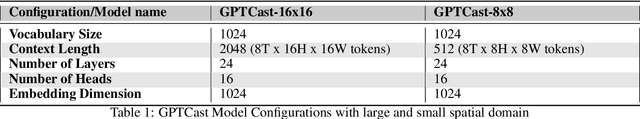

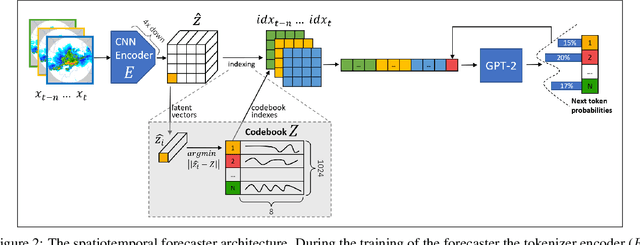

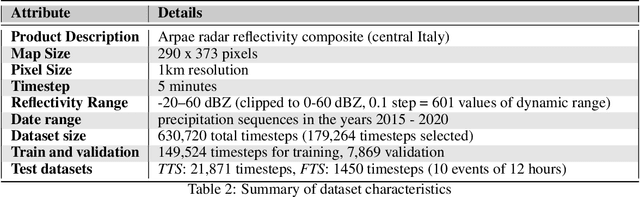

Abstract: This work introduces GPTCast, a generative deep-learning method for ensemble nowcasting of radar-based precipitation, inspired by advancements in large language models (LLMs). We employ a GPT model as a forecaster to learn spatiotemporal precipitation dynamics using tokenized radar images. The tokenizer is based on a Quantized Variational Autoencoder featuring a novel reconstruction loss tailored for the skewed distribution of precipitation that promotes faithful reconstruction of high rainfall rates. The approach produces realistic ensemble forecasts and provides probabilistic outputs with accurate uncertainty estimation. The model is trained without resorting to randomness: all variability is learned solely from the data and exposed by the model at inference for ensemble generation. We train and test GPTCast using a 6-year radar dataset over the Emilia-Romagna region in Northern Italy, showing superior results compared to state-of-the-art ensemble extrapolation methods.
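The ensemble idea in the abstract above (variability exposed only at inference) can be illustrated with a minimal sketch, assuming the forecaster outputs a categorical distribution over the next token and that ensemble members differ only in how tokens are sampled from it. The names `toy_model`, `sample_token`, and `generate_ensemble` are hypothetical and are not taken from GPTCast; the toy model is a stand-in for a trained GPT.

```python
import random

def sample_token(probs, rng):
    """Draw one token index from a categorical distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate_ensemble(next_token_probs, context, steps, members, seed=0):
    """Roll out `members` token sequences autoregressively from one context.

    Assumption: randomness enters only here, at sampling time.
    """
    rng = random.Random(seed)
    ensemble = []
    for _ in range(members):
        seq = list(context)
        for _ in range(steps):
            probs = next_token_probs(seq)        # distribution given the sequence so far
            seq.append(sample_token(probs, rng))
        ensemble.append(seq[len(context):])      # keep only the forecast tokens
    return ensemble

def toy_model(seq):
    """Hypothetical stand-in for a trained forecaster over a 4-token vocabulary.

    It favours repeating the last token (a crude persistence prior).
    """
    probs = [0.1, 0.1, 0.1, 0.1]
    probs[seq[-1]] = 0.7
    return probs

forecasts = generate_ensemble(toy_model, context=[0, 1], steps=5, members=3)
```

Each member is one plausible token sequence. In a real pipeline the tokens would be decoded back to radar images by the tokenizer's decoder, and the spread across members would provide the probabilistic output.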

Can AI be enabled to dynamical downscaling? Training a Latent Diffusion Model to mimic km-scale COSMO-CLM downscaling of ERA5 over Italy

Jun 19, 2024

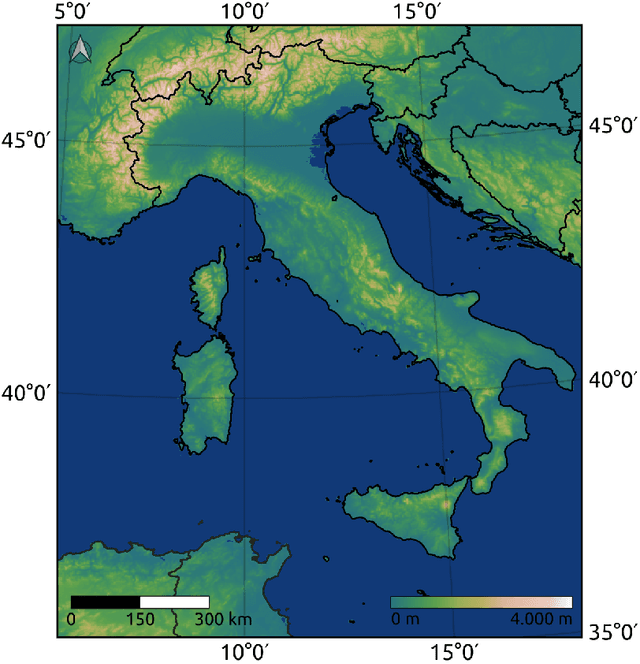

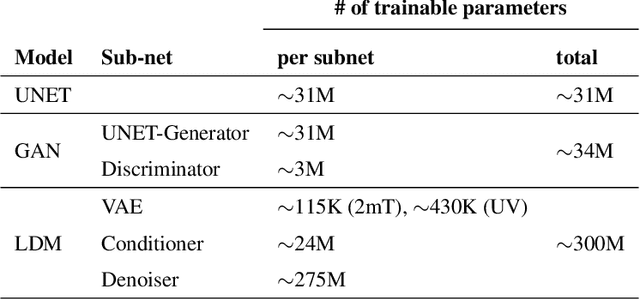

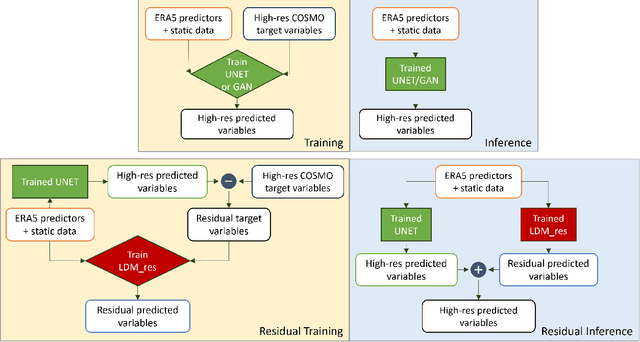

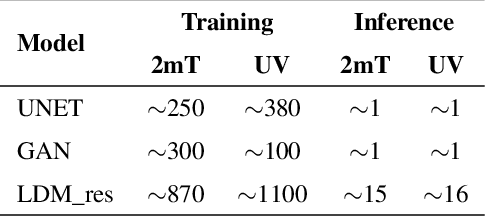

Abstract: Downscaling techniques are one of the most prominent applications of Deep Learning (DL) in Earth System Modeling. A robust DL downscaling model can generate high-resolution fields from coarse-scale numerical model simulations, avoiding the time- and resource-intensive application of regional/local models. Additionally, generative DL models have the potential to provide uncertainty information by generating ensemble-like scenario pools, a task that is computationally prohibitive for traditional numerical simulations. In this study, we apply a Latent Diffusion Model (LDM) to downscale ERA5 data over Italy up to a resolution of 2 km. The high-resolution target data consists of results from a high-resolution dynamical downscaling performed with COSMO-CLM. Our goal is to demonstrate that recent advancements in generative modeling enable DL-based models to deliver results comparable to those of numerical dynamical downscaling models, given the same input data (i.e., ERA5 data), preserving the realism of fine-scale features and flow characteristics. The training and testing database consists of hourly data from 2000 to 2020. The target variables of this study are 2-m temperature and 10-m horizontal wind components. A selection of predictors from ERA5 is used as input to the LDM, and a residual approach against a reference UNET is leveraged in applying the LDM. The performance of the generative LDM is compared with reference baselines of increasing complexity: quadratic interpolation of ERA5, a UNET, and a Generative Adversarial Network (GAN) built on the same reference UNET. Results highlight the improvements introduced by the LDM architecture and the residual approach over these baselines. The models are evaluated on a yearly test dataset, assessing the models' performance through deterministic metrics, spatial distribution of errors, and reconstruction of frequency and power spectra distributions.
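One plausible reading of the "residual approach against a reference UNET" mentioned in the abstract above is sketched here. It assumes the generative model is trained on the difference between the high-resolution target and the UNET prediction, and that the final field is the UNET prediction plus a generated residual. The abstract does not spell out these details, so the function names and the tiny 2x2 fields are purely illustrative.

```python
def residual_target(hi_res, unet_pred):
    """Training target under the assumed scheme: target minus UNET prediction."""
    return [[h - u for h, u in zip(h_row, u_row)]
            for h_row, u_row in zip(hi_res, unet_pred)]

def reconstruct(unet_pred, residual):
    """Final downscaled field: UNET prediction plus a (generated) residual."""
    return [[u + r for u, r in zip(u_row, r_row)]
            for u_row, r_row in zip(unet_pred, residual)]

# Illustrative 2x2 temperature fields in kelvin (made-up values).
target = [[280.5, 281.0], [279.0, 282.5]]
unet   = [[280.0, 281.5], [279.5, 282.0]]

res = residual_target(target, unet)   # what the generative model would learn
field = reconstruct(unet, res)        # a perfect residual recovers the target
```

Under this reading, the deterministic UNET supplies the large-scale structure, and the generative model only has to capture the fine-scale detail the UNET misses. Sampling several residuals would give an ensemble-like set of fields.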
