Vinny Cahill

Geographically-aware Transformer-based Traffic Forecasting for Urban Motorway Digital Twins

Feb 05, 2026

Abstract: The operational effectiveness of digital-twin technology in motorway traffic management depends on the availability of a continuous flow of high-resolution real-time traffic data. To function as a proactive decision-making support layer within traffic management, a digital twin must also incorporate predicted traffic conditions in addition to real-time observations. Due to the spatio-temporal complexity and the time-variant, non-linear nature of traffic dynamics, predicting motorway traffic remains a difficult problem. Sequence-based deep-learning models offer clear advantages over classical machine learning and statistical models in capturing long-range temporal dependencies in time-series traffic data, yet limitations in forecasting accuracy and model complexity point to the need for further improvements. To improve motorway traffic forecasting, this paper introduces a Geographically-aware Transformer-based Traffic Forecasting (GATTF) model, which exploits the geographical relationships between distributed sensors using their mutual information (MI). The model has been evaluated using real-time data from the Geneva motorway network in Switzerland and results confirm that incorporating geographical awareness through MI enhances the accuracy of GATTF forecasting compared to a standard Transformer, without increasing model complexity.
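The abstract above relies on mutual information (MI) between sensor readings as a measure of how strongly two sensors are related. As a rough illustration only, the sketch below computes a simple histogram-based (plug-in) MI estimate between two time series. The estimator, the bin count, the function names, and the synthetic sensor data are all assumptions made for this example; the abstract does not say how the paper estimates MI.

```python
import math
import random
from collections import Counter


def _bin_indices(values, bins):
    """Map each value to an equal-width bin index in [0, bins - 1]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant series
    return [min(int((v - lo) / width), bins - 1) for v in values]


def mutual_information(x, y, bins=8):
    """Plug-in (histogram) estimate of MI, in nats, between two series."""
    if len(x) != len(y) or not x:
        raise ValueError("series must be non-empty and of equal length")
    bx, by = _bin_indices(x, bins), _bin_indices(y, bins)
    n = len(x)
    joint = Counter(zip(bx, by))  # joint bin counts
    cx, cy = Counter(bx), Counter(by)  # marginal bin counts
    # Sum p(i, j) * log(p(i, j) / (p(i) * p(j))) over occupied joint bins.
    return sum(
        (c / n) * math.log(c * n / (cx[i] * cy[j]))
        for (i, j), c in joint.items()
    )


# Synthetic, hypothetical sensor data: a downstream sensor that tracks an
# upstream one with noise, and an unrelated sensor on another road.
random.seed(0)
upstream = [random.gauss(60.0, 10.0) for _ in range(2000)]
downstream = [u + random.gauss(0.0, 2.0) for u in upstream]
unrelated = [random.gauss(60.0, 10.0) for _ in range(2000)]

mi_related = mutual_information(upstream, downstream)
mi_unrelated = mutual_information(upstream, unrelated)
print(f"related: {mi_related:.3f} nats, unrelated: {mi_unrelated:.3f} nats")
```

In this toy setting the related pair scores a much higher MI than the unrelated pair, which is the kind of signal a forecasting model could use to weight nearby sensors.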

Continual Reinforcement Learning for Cyber-Physical Systems: Lessons Learned and Open Challenges

Nov 19, 2025

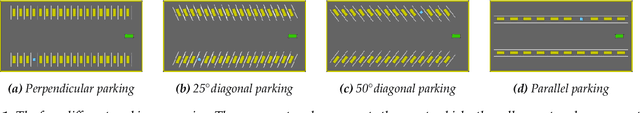

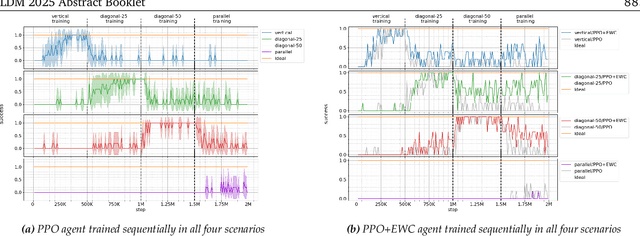

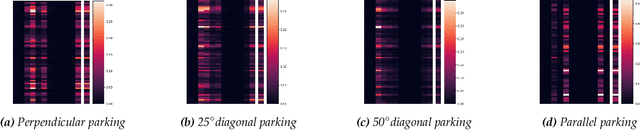

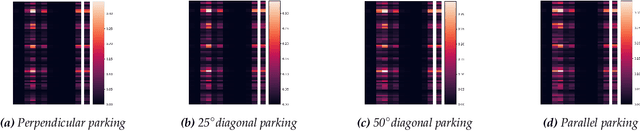

Abstract: Continual learning (CL) is a branch of machine learning that aims to enable agents to adapt and generalise previously learned abilities so that these can be reapplied to new tasks or environments. This is particularly useful in multi-task settings or in non-stationary environments, where the dynamics can change over time, and is especially relevant to cyber-physical systems such as autonomous driving. However, despite recent advances in CL, successfully applying it to reinforcement learning (RL) is still an open problem. This paper highlights open challenges in continual RL (CRL) based on experiments in an autonomous driving environment. In this environment, the agent must learn to successfully park in four different scenarios corresponding to parking spaces oriented at varying angles. The agent is trained in these four scenarios one after another, representing a CL environment, using Proximal Policy Optimisation (PPO). These experiments exposed a number of open challenges in CRL: finding suitable abstractions of the environment, oversensitivity to hyperparameters, catastrophic forgetting, and efficient use of neural network capacity. Based on these identified challenges, we present open research questions that must be addressed to create robust CRL systems. In addition, the identified challenges call into question the suitability of neural networks for CL. We also identify the need for interdisciplinary research, in particular between computer science and neuroscience.

Applying Neural Monte Carlo Tree Search to Unsignalized Multi-intersection Scheduling for Autonomous Vehicles

Oct 24, 2024

Abstract: Dynamic scheduling of access to shared resources by autonomous systems is a challenging problem, characterized as being NP-hard. The complexity of this task leads to a combinatorial explosion of possibilities in highly dynamic systems where arriving requests must be continuously scheduled subject to strong safety and time constraints. An example of such a system is an unsignalized intersection, where automated vehicles' access to potential conflict zones must be dynamically scheduled. In this paper, we apply Neural Monte Carlo Tree Search (NMCTS) to the challenging task of scheduling platoons of vehicles crossing unsignalized intersections. Crucially, we introduce a transformation model that maps successive sequences of potentially conflicting road-space reservation requests from platoons of vehicles into a series of board-game-like problems and use NMCTS to search for solutions representing optimal road-space allocation schedules in the context of past allocations. To optimize search, we incorporate a prioritized re-sampling method with parallel NMCTS (PNMCTS) to improve the quality of training data. To optimize training, a curriculum learning strategy is used to train the agent to schedule progressively more complex boards culminating in overlapping boards that represent busy intersections. In a busy single four-way unsignalized intersection simulation, PNMCTS solved 95% of unseen scenarios, reducing crossing time by 43% in light and 52% in heavy traffic versus first-in, first-out control. In a 3x3 multi-intersection network, the proposed method maintained free-flow in light traffic when all intersections are under control of PNMCTS and outperformed state-of-the-art RL-based traffic-light controllers in average travel time by 74.5% and total throughput by 16% in heavy traffic.

Drama: Mamba-Enabled Model-Based Reinforcement Learning Is Sample and Parameter Efficient

Oct 11, 2024

Abstract: Model-based reinforcement learning (RL) offers a solution to the data inefficiency that plagues most model-free RL algorithms. However, learning a robust world model often demands complex and deep architectures, which are expensive to compute and train. Within the world model, dynamics models are particularly crucial for accurate predictions, and various dynamics-model architectures have been explored, each with its own set of challenges. Currently, recurrent neural network (RNN) based world models face issues such as vanishing gradients and difficulty in capturing long-term dependencies effectively. In contrast, use of transformers suffers from the well-known issues of self-attention mechanisms, where both memory and computational complexity scale as $O(n^2)$, with $n$ representing the sequence length. To address these challenges we propose a state space model (SSM) based world model, specifically based on Mamba, that achieves $O(n)$ memory and computational complexity while effectively capturing long-term dependencies and facilitating the use of longer training sequences efficiently. We also introduce a novel sampling method to mitigate the suboptimality caused by an incorrect world model in the early stages of training, combining it with the aforementioned technique to achieve a normalised score comparable to other state-of-the-art model-based RL algorithms using only a 7 million trainable parameter world model. This model is accessible and can be trained on an off-the-shelf laptop. Our code is available at https://github.com/realwenlongwang/drama.git.
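To make the $O(n^2)$ versus $O(n)$ contrast in the abstract above concrete, the sketch below runs a minimal scalar linear state-space recurrence, $h_t = a h_{t-1} + b x_t$, $y_t = c h_t$, in a single pass over the sequence, and compares its step count with the number of pairwise scores that full self-attention computes. This is a toy illustration under simplifying assumptions, not Mamba and not the Drama world model: Mamba uses input-dependent (selective) parameters and a hardware-aware scan, and the function names and the parameters `a`, `b`, `c` here are hypothetical.

```python
def ssm_scan(xs, a=0.5, b=1.0, c=1.0):
    """Run a scalar linear SSM over xs with one state update per input."""
    h = 0.0  # hidden state; a fixed-size summary of the whole history
    ys = []
    for x in xs:
        h = a * h + b * x  # state update: constant work per step
        ys.append(c * h)   # readout
    return ys


def ssm_step_count(n):
    """State updates needed for a length-n sequence: linear in n."""
    return n


def attention_score_count(n):
    """Pairwise query-key scores in full self-attention: quadratic in n."""
    return n * n


# An impulse followed by zeros decays geometrically through the state.
print(ssm_scan([1.0, 0.0, 0.0]))

for n in (64, 512, 4096):
    print(n, ssm_step_count(n), attention_score_count(n))
```

The recurrence carries only a fixed-size state between steps, so memory during a sequential pass does not grow with sequence length, whereas the attention score matrix grows with the square of the sequence length.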
