Vinicius Lima

Advancing Crime Linkage Analysis with Machine Learning: A Comprehensive Review and Framework for Data-Driven Approaches

Oct 30, 2024

Abstract: Crime linkage is the process of analyzing criminal behavior data to determine whether a pair or group of crime cases are connected or belong to a series of offenses. This domain has been extensively studied by researchers in sociology, psychology, and statistics. More recently, it has drawn interest from computer scientists, especially with advances in artificial intelligence. Despite this, the literature indicates that work in this latter discipline is still in its early stages. This study aims to understand the challenges faced by machine learning approaches in crime linkage and to support foundational knowledge for future data-driven methods. To achieve this goal, we conducted a comprehensive survey of the main literature on the topic and developed a general framework for crime linkage processes, thoroughly describing each step. Our goal was to unify insights from diverse fields into a shared terminology to enhance the research landscape for those intrigued by this subject.

Hotspot Prediction of Severe Traffic Accidents in the Federal District of Brazil

Dec 28, 2023

Abstract: Traffic accidents are one of the biggest challenges in a society where commuting is so important. What triggers an accident can depend on several subjective parameters and varies across regions, cities, and countries. It is therefore important to understand those parameters in order to provide a knowledge base that supports decisions on preventing future cases. The literature presents several works in which machine learning algorithms are used to predict accidents or accident severity, evaluated on city-level datasets. This work attempts to add to the diversity of research by focusing mainly on the concentration of accidents and on how machine learning can be used to predict hotspots. This approach proved to be a useful technique for authorities to understand the nuances of accident concentration behavior. For the first time, data from the Federal District of Brazil collected by forensic traffic accident analysts were used and combined with data on local weather conditions to predict collision hotspots. Of the five algorithms we considered, two performed well: Multi-layer Perceptron and Random Forest, with the latter being the best at 98% accuracy. We find that weather parameters are less important than the accident location, indicating that local intervention is important to reduce the number of accidents.

* Published at The Twelfth International Conference on Smart Cities, Systems, Devices and Technologies SMART 2023 https://www.thinkmind.org/index.php?view=article&articleid=smart_2023_1_60_40032

Graph Reinforcement Learning for Wireless Control Systems: Large-Scale Resource Allocation over Interference Channels

Jan 24, 2022

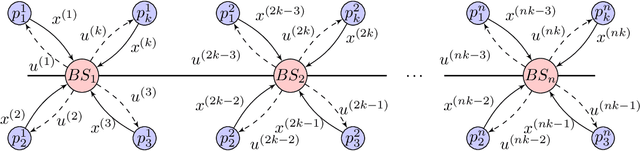

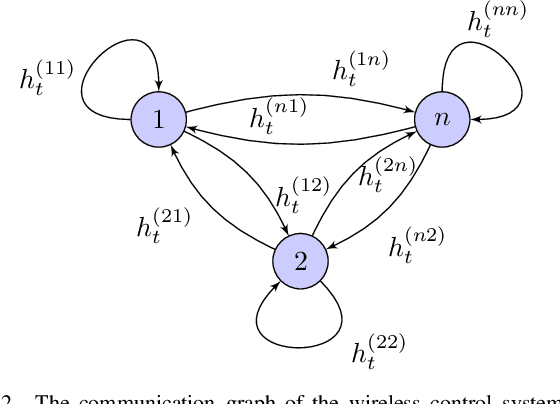

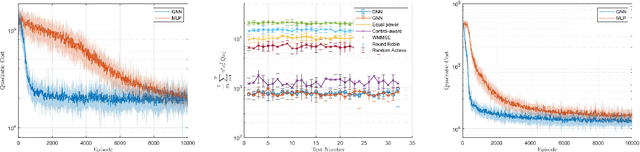

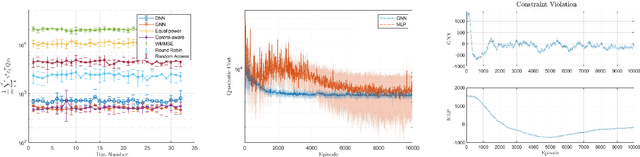

Abstract: Modern control systems routinely employ wireless networks to exchange information between spatially distributed plants, actuators and sensors. With wireless networks defined by random, rapidly changing transmission conditions that challenge assumptions commonly held in the design of control systems, proper allocation of communication resources is essential to achieve reliable operation. Designing resource allocation policies, however, is challenging, which has motivated recent works to exploit deep learning and deep reinforcement learning techniques to design resource allocation and scheduling policies for wireless control systems. As the number of learnable parameters in a neural network grows with the size of the input signal, deep reinforcement learning algorithms may fail to scale, limiting the immediate generalization of such scheduling and resource allocation policies to large-scale systems. The interference and fading patterns among plants and controllers in the network, on the other hand, induce a time-varying communication graph that can be used to construct policy representations based on graph neural networks (GNNs), with the number of learnable parameters now independent of the number of plants in the network. That invariance to the number of nodes is key to designing scalable and transferable resource allocation policies, which can be trained with reinforcement learning. Through extensive numerical experiments we show that the proposed graph reinforcement learning approach yields policies that not only outperform baseline solutions and deep reinforcement learning based policies in large-scale systems, but that can also be transferred across networks of varying size.
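The claim that a GNN's parameter count does not depend on the number of nodes can be illustrated with a minimal sketch. The code below is not the paper's architecture: it assumes a single polynomial graph filter followed by a tanh nonlinearity, and the names `graph_filter`, `ring` and the coefficient list `h` are hypothetical. The same three coefficients are applied to a 4-node and a 50-node graph.

```python
import math

def graph_filter(S, x, h):
    """Compute tanh(sum_k h[k] * S^k x) for graph matrix S and node signal x.

    The learnable parameters are the len(h) filter taps only; their number
    does not depend on the number of nodes len(x).
    """
    n = len(x)
    z = list(x)                      # S^0 x
    y = [h[0] * v for v in z]
    for hk in h[1:]:
        # One more hop of neighbor aggregation: z <- S z
        z = [sum(S[i][j] * z[j] for j in range(n)) for i in range(n)]
        y = [yi + hk * zi for yi, zi in zip(y, z)]
    return [math.tanh(v) for v in y]

def ring(n):
    """Hypothetical interference graph: each node is coupled to its two ring neighbors."""
    S = [[0.0] * n for _ in range(n)]
    for i in range(n):
        S[i][(i + 1) % n] = 0.5
        S[i][(i - 1) % n] = 0.5
    return S

# Assumed filter taps; in a trained policy these would be learned.
h = [0.5, 0.3, 0.2]

# The same parameters are reused on networks of different size.
small = graph_filter(ring(4), [1.0, 0.0, 0.0, 0.0], h)
large = graph_filter(ring(50), [1.0] + [0.0] * 49, h)
```

Because the filter only aggregates over neighbors, the three taps in `h` can be moved unchanged from the small graph to the large one, which is the property the abstract relies on for transfer across network sizes.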