Victoria Florence

Self-Supervised Robot In-hand Object Learning

Apr 02, 2019

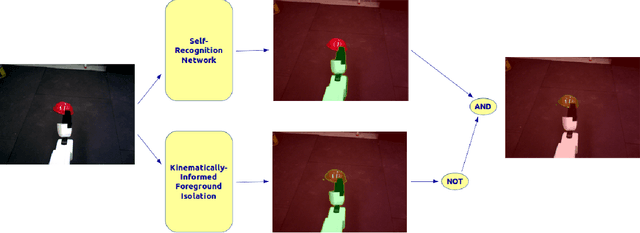

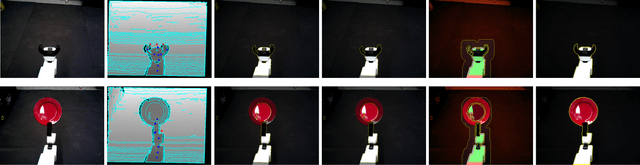

Abstract: To complete tasks in a new environment, robots must be able to recognize unseen, unique objects. Fully supervised methods have made great strides on the object segmentation task, but they require many examples of each object class and do not scale to unseen environments. In this work, we present a method that acquires pixelwise object labels for manipulable in-hand objects with no human supervision. Our two-step approach first performs a foreground-background segmentation informed by robot kinematics, then uses a self-recognition network to separate the robot from the object in the foreground. We achieve 49.4% mIoU on a difficult and varied assortment of items.
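For readers unfamiliar with the metric, the following is a minimal sketch of how a mean intersection-over-union (mIoU) score can be computed from a predicted and a ground-truth label mask. It is not taken from the paper: the function name `mean_iou`, the label convention (0 for background, 1 for object), and the choice to average over classes are assumptions made for illustration, and the abstract does not state how its 49.4% figure is averaged.

```python
def mean_iou(pred, truth, num_classes):
    """Mean intersection-over-union across classes.

    pred, truth: equally sized 2D lists of integer class labels.
    Classes absent from both masks are skipped (assumed convention).
    """
    ious = []
    for c in range(num_classes):
        inter = 0  # pixels labeled c in both masks
        union = 0  # pixels labeled c in at least one mask
        for p_row, t_row in zip(pred, truth):
            for p, t in zip(p_row, t_row):
                in_pred = (p == c)
                in_truth = (t == c)
                inter += int(in_pred and in_truth)
                union += int(in_pred or in_truth)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical 2x2 masks: 0 = background, 1 = object.
pred = [[0, 0],
        [1, 1]]
truth = [[0, 1],
         [1, 1]]
score = mean_iou(pred, truth, num_classes=2)
```

In this toy case the background IoU is 1/2 and the object IoU is 2/3, so `score` is their average, 7/12.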

Video Object Segmentation-based Visual Servo Control and Object Depth Estimation on a Mobile Robot Platform

Mar 20, 2019

Abstract: To be useful in everyday environments, robots must be able to identify and locate unstructured, real-world objects. In recent years, video object segmentation has made significant progress on densely separating such objects from the background in real and challenging videos. This paper addresses the problem of identifying generic objects and locating them in 3D from a mobile robot platform equipped with an RGB camera. We achieve this by introducing a video object segmentation-based approach to visual servo control and active perception. We validate our approach in experiments using an HSR platform, which subsequently identifies, locates, and grasps objects from the YCB object dataset. We also develop a new Hadamard-Broyden update formulation, which enables HSR to automatically learn the relationship between actuators and visual features without any camera calibration. Using a variety of learned actuator-camera configurations, HSR also tracks people and other dynamic articulated objects.
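As background for the update mentioned in the abstract, the classical Broyden rank-one update is shown here. This is the standard textbook form only, not the paper's Hadamard-Broyden formulation, which the abstract does not spell out. The symbols are assumptions chosen for illustration: $J_k$ is the current estimate of the Jacobian relating actuator changes to visual-feature changes, $\Delta x_k$ is the actuator change at step $k$, and $\Delta y_k$ is the observed change in visual features.

$$
J_{k+1} = J_k + \frac{\left(\Delta y_k - J_k\,\Delta x_k\right)\Delta x_k^{\top}}{\Delta x_k^{\top}\,\Delta x_k}
$$

The update corrects $J_k$ just enough that the new estimate reproduces the latest observation, $J_{k+1}\,\Delta x_k = \Delta y_k$, which is why such a relationship can be learned online from motion alone.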
