Self-Supervised Robot In-hand Object Learning

Apr 02, 2019

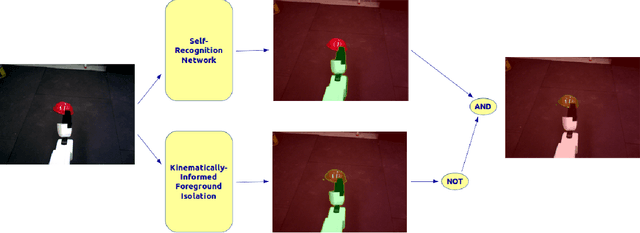

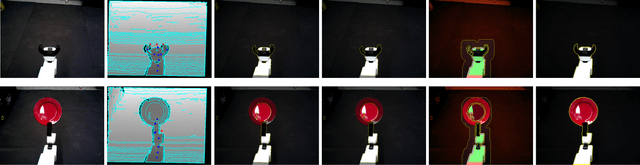

To complete tasks in a new environment, robots must be able to recognize unseen, unique objects. Fully supervised methods have made great strides on object segmentation, but they require many examples of each object class and do not scale to unseen environments. In this work, we present a method that acquires pixelwise object labels for manipulable in-hand objects with no human supervision. Our two-step approach first performs a foreground-background segmentation informed by robot kinematics, then uses a self-recognition network to separate the robot from the object within the foreground. The method achieves 49.4% mIoU on a difficult and varied assortment of items.
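As a rough illustration of the two-step idea and the reported metric, the sketch below combines a foreground mask with a robot mask to obtain an object mask, then scores it with intersection-over-union. This is not the authors' implementation: the function names (`object_mask`, `iou`, `mean_iou`) are hypothetical, masks are assumed to be plain nested lists of booleans, and mIoU is assumed here to be the mean of per-example IoU scores, which the abstract does not specify.

```python
from typing import List, Sequence

Mask = List[List[bool]]  # assumed representation: rows of per-pixel booleans


def object_mask(foreground: Mask, robot: Mask) -> Mask:
    """Keep foreground pixels that are not labeled as robot (hypothetical helper)."""
    return [
        [f and not r for f, r in zip(f_row, r_row)]
        for f_row, r_row in zip(foreground, robot)
    ]


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection-over-union of two binary masks of equal shape."""
    inter = 0
    union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            inter += int(p and t)
            union += int(p or t)
    # Two empty masks agree perfectly; treat that case as IoU = 1 (an assumption).
    return inter / union if union else 1.0


def mean_iou(preds: Sequence[Mask], truths: Sequence[Mask]) -> float:
    """Mean of per-example IoU scores (assumed averaging scheme)."""
    scores = [iou(p, t) for p, t in zip(preds, truths)]
    return sum(scores) / len(scores)


# Toy 2x3 example: the left column is the robot, the middle column is the object.
foreground = [[True, True, False], [True, True, False]]
robot = [[True, False, False], [True, False, False]]
truth = [[False, True, True], [False, True, False]]

predicted = object_mask(foreground, robot)
score = mean_iou([predicted], [truth])
```

In the toy example the predicted object mask overlaps the ground truth on two pixels out of a union of three, so a single missed pixel already lowers the score noticeably on a small object.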
