Vernon Austil

Learning to Guide a Saturation-Based Theorem Prover

Jun 07, 2021

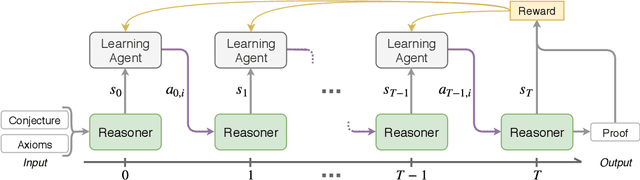

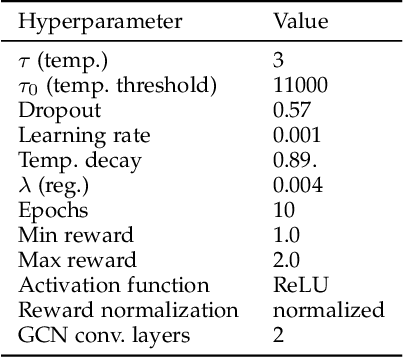

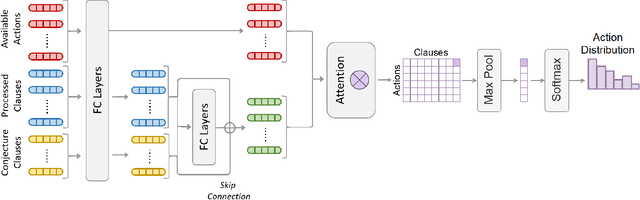

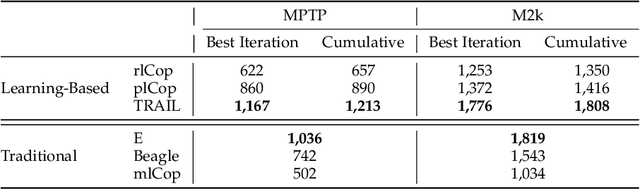

Abstract:Traditional automated theorem provers have relied on manually tuned heuristics to guide how they perform proof search. Recently, however, there has been a surge of interest in the design of learning mechanisms that can be integrated into theorem provers to improve their performance automatically. In this work, we introduce TRAIL, a deep learning-based approach to theorem proving that characterizes core elements of saturation-based theorem proving within a neural framework. TRAIL leverages (a) an effective graph neural network for representing logical formulas, (b) a novel neural representation of the state of a saturation-based theorem prover in terms of processed clauses and available actions, and (c) a novel representation of the inference selection process as an attention-based action policy. We show through a systematic analysis that these components allow TRAIL to significantly outperform previous reinforcement learning-based theorem provers on two standard benchmark datasets (up to 36% more theorems proved). In addition, to the best of our knowledge, TRAIL is the first reinforcement learning-based approach to exceed the performance of a state-of-the-art traditional theorem prover on a standard theorem proving benchmark (solving up to 17% more problems).

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge