Vanessa Borst

WoundAIssist: A Patient-Centered Mobile App for AI-Assisted Wound Care With Physicians in the Loop

Jun 06, 2025

Abstract:The rising prevalence of chronic wounds, especially in aging populations, presents a significant healthcare challenge due to prolonged hospitalizations, elevated costs, and reduced patient quality of life. Traditional wound care is resource-intensive, requiring frequent in-person visits that strain both patients and healthcare professionals (HCPs). Therefore, we present WoundAIssist, a patient-centered, AI-driven mobile application designed to support telemedical wound care. WoundAIssist enables patients to regularly document wounds at home via photographs and questionnaires, while physicians remain actively engaged in the care process through remote monitoring and video consultations. A distinguishing feature is an integrated lightweight deep learning model for on-device wound segmentation, which, combined with patient-reported data, enables continuous monitoring of wound healing progression. Developed through an iterative, user-centered process involving both patients and domain experts, WoundAIssist prioritizes a user-friendly design, particularly for elderly patients. A conclusive usability study with patients and dermatologists reported excellent usability, good app quality, and favorable perceptions of the AI-driven wound recognition. Our main contribution is two-fold: (I) the implementation and (II) evaluation of WoundAIssist, an easy-to-use yet comprehensive telehealth solution designed to bridge the gap between patients and HCPs. Additionally, we synthesize design insights for remote patient monitoring apps, derived from over three years of interdisciplinary research, that may inform the development of similar digital health tools across clinical domains.

TSRM: A Lightweight Temporal Feature Encoding Architecture for Time Series Forecasting and Imputation

Apr 26, 2025

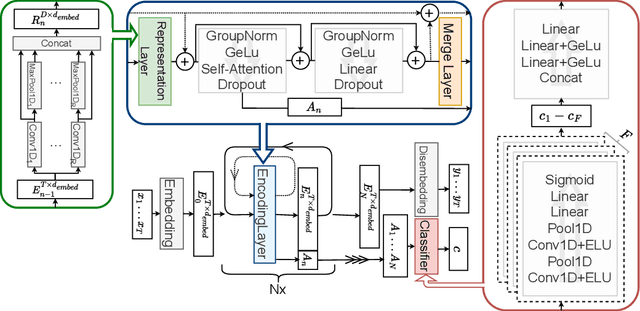

Abstract:We introduce a temporal feature encoding architecture called Time Series Representation Model (TSRM) for multivariate time series forecasting and imputation. The architecture is structured around CNN-based representation layers, each dedicated to an independent representation learning task and designed to capture diverse temporal patterns, followed by an attention-based feature extraction layer and a merge layer, designed to aggregate extracted features. The architecture is fundamentally based on a configuration that is inspired by a Transformer encoder, with self-attention mechanisms at its core. The TSRM architecture outperforms state-of-the-art approaches on most of the seven established benchmark datasets considered in our empirical evaluation for both forecasting and imputation tasks. At the same time, it significantly reduces complexity in the form of learnable parameters. The source code is available at https://github.com/RobertLeppich/TSRM.

WoundAmbit: Bridging State-of-the-Art Semantic Segmentation and Real-World Wound Care

Apr 08, 2025

Abstract:Chronic wounds affect a large population, particularly the elderly and diabetic patients, who often exhibit limited mobility and co-existing health conditions. Automated wound monitoring via mobile image capture can reduce in-person physician visits by enabling remote tracking of wound size. Semantic segmentation is key to this process, yet wound segmentation remains underrepresented in medical imaging research. To address this, we benchmark state-of-the-art deep learning models from general-purpose vision, medical imaging, and top methods from public wound challenges. For fair comparison, we standardize training, data augmentation, and evaluation, conducting cross-validation to minimize partitioning bias. We also assess real-world deployment aspects, including generalization to an out-of-distribution wound dataset, computational efficiency, and interpretability. Additionally, we propose a reference object-based approach to convert AI-generated masks into clinically relevant wound size estimates, and evaluate this, along with mask quality, for the best models based on physician assessments. Overall, the transformer-based TransNeXt showed the highest levels of generalizability. Despite variations in inference times, all models processed at least one image per second on the CPU, which is deemed adequate for the intended application. Interpretability analysis typically revealed prominent activations in wound regions, emphasizing focus on clinically relevant features. Expert evaluation showed high mask approval for all analyzed models, with VWFormer and ConvNeXtS backbone performing the best. Size retrieval accuracy was similar across models, and predictions closely matched expert annotations. Finally, we demonstrate how our AI-driven wound size estimation framework, WoundAmbit, can be integrated into a custom telehealth system. Our code will be made available on GitHub upon publication.
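
The abstract does not spell out how the reference object-based size conversion works. As a rough illustration only, and not the authors' method, the sketch below assumes that the image contains a reference object of known physical area, that both the wound and the reference object have been segmented into binary masks, and that both lie roughly in the same plane facing the camera, so a single area-per-pixel scale applies. The function name `wound_area_cm2` and its parameters are hypothetical.

```python
def count_foreground(mask):
    """Count non-zero pixels in a binary mask given as a list of rows."""
    return sum(1 for row in mask for value in row if value)


def wound_area_cm2(wound_mask, reference_mask, reference_area_cm2):
    """Estimate wound area from a wound mask and a reference-object mask.

    Assumption (not from the abstract): both masks come from the same image,
    and the reference object has a known physical area in square centimetres.
    """
    reference_pixels = count_foreground(reference_mask)
    if reference_pixels == 0:
        raise ValueError("reference object not found in the image")
    # Physical area covered by one pixel, derived from the reference object.
    cm2_per_pixel = reference_area_cm2 / reference_pixels
    return count_foreground(wound_mask) * cm2_per_pixel


# Toy example: a 4-pixel reference marker known to cover 1.0 cm^2
# and a 6-pixel wound region in the same 4x4 image.
reference = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
wound = [
    [0, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
estimated_area = wound_area_cm2(wound, reference, reference_area_cm2=1.0)
```

In this toy case each pixel corresponds to 0.25 cm², so the six wound pixels give an estimate of 1.5 cm². A real pipeline would also need to handle perspective distortion and curved body surfaces, which this sketch ignores.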

Early Explorations of Lightweight Models for Wound Segmentation on Mobile Devices

Jul 11, 2024

Abstract:The aging population poses numerous challenges to healthcare, including the increase in chronic wounds in the elderly. The current approach to wound assessment by therapists based on photographic documentation is subjective, highlighting the need for computer-aided wound recognition from smartphone photos. This offers objective and convenient therapy monitoring, while being accessible to patients from their home at any time. However, despite research in mobile image segmentation, there is a lack of focus on mobile wound segmentation. To address this gap, we conduct initial research on three lightweight architectures to investigate their suitability for smartphone-based wound segmentation. Using public datasets and UNet as a baseline, our results are promising, with both ENet and TopFormer, as well as the larger UNeXt variant, showing comparable performance to UNet. Furthermore, we deploy the models into a smartphone app for visual assessment of live segmentation, where results demonstrate the effectiveness of TopFormer in distinguishing wounds from wound-coloured objects. While our study highlights the potential of transformer models for mobile wound segmentation, future work should aim to further improve the mask contours.

Time Series Representation Models

May 28, 2024

Abstract:Time series analysis remains a major challenge due to its sparse characteristics, high dimensionality, and inconsistent data quality. Recent advancements in transformer-based techniques have enhanced capabilities in forecasting and imputation; however, these methods are still resource-heavy, lack adaptability, and face difficulties in integrating both local and global attributes of time series. To tackle these challenges, we propose a new architectural concept for time series analysis based on introspection. Central to this concept is the self-supervised pretraining of Time Series Representation Models (TSRMs), which once learned can be easily tailored and fine-tuned for specific tasks, such as forecasting and imputation, in an automated and resource-efficient manner. Our architecture is equipped with a flexible and hierarchical representation learning process, which is robust against missing data and outliers. It can capture and learn both local and global features of the structure, semantics, and crucial patterns of a given time series category, such as heart rate data. Our learned time series representation models can be efficiently adapted to a specific task, such as forecasting or imputation, without manual intervention. Furthermore, our architecture's design supports explainability by highlighting the significance of each input value for the task at hand. Our empirical study using four benchmark datasets shows that, compared to investigated state-of-the-art baseline methods, our architecture improves imputation and forecasting errors by up to 90.34% and 71.54%, respectively, while reducing the required trainable parameters by up to 92.43%. The source code is available at https://github.com/RobertLeppich/TSRM.
