Van-Thach Do

Geometry-Aware Coverage Path Planning on Complex 3D Surfaces

Mar 07, 2023

Abstract: This paper presents a new approach to obtaining nearly complete coverage paths (CP) with low overlap on general 3D surfaces, using mesh models that are either given or reconstructed from actual scenes. The CP is obtained by segmenting the mesh model into a given number of clusters using constrained centroidal Voronoi tessellation (CCVT) and efficiently finding the shortest path through the cluster centroids under the geodesic metric. We introduce a new cost function that balances uniform cluster areas against a restriction on the variation of triangle normals during the construction of CCVTs. The obtained clusters can be used to construct high-quality viewpoints (VP) for visual coverage tasks. Here, we use the planned VPs as cleaning configurations to perform residual powder removal in additive manufacturing using manipulator robots. Self-occlusion of VPs and the need for collision-free robot configurations are addressed by integrating a proposed optimization-based strategy, which finds a set of candidate rays for each VP, into the motion planning phase. CP planning benchmarks and physical experiments are conducted to demonstrate the effectiveness of the proposed approach. We show that our approach can compute the CPs and VPs of various mesh models with a massive number of triangles within a reasonable time.
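To give a feel for the cluster-then-order idea in the abstract above, here is a minimal sketch. It is not the paper's CCVT method: it assumes plain Euclidean distance between triangle centroids instead of the geodesic metric, a hypothetical `normal_weight` penalty on normal deviation in place of the paper's cost function (which also balances cluster areas), and a greedy nearest-neighbour ordering instead of a true shortest-path solver. The names `cluster_triangles` and `greedy_order` are illustrative only.

```python
import numpy as np


def cluster_triangles(centroids, normals, k, normal_weight=0.5, iters=20, seed=0):
    """Lloyd-style clustering of mesh triangles (illustrative, not the paper's CCVT).

    centroids: (n, 3) triangle centroids; normals: (n, 3) unit triangle normals.
    Each triangle joins the site minimising squared Euclidean distance plus
    normal_weight * (1 - cos(angle between triangle normal and site normal)).
    """
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(centroids), size=k, replace=False)
    sites = centroids[idx].copy()
    site_normals = normals[idx].copy()
    labels = np.zeros(len(centroids), dtype=int)
    for _ in range(iters):
        # Squared distance from every triangle to every site, shape (n, k).
        d2 = ((centroids[:, None, :] - sites[None, :, :]) ** 2).sum(axis=2)
        # Normal deviation in [0, 2]: 0 when normals agree, 2 when opposite.
        deviation = 1.0 - normals @ site_normals.T
        labels = np.argmin(d2 + normal_weight * deviation, axis=1)
        for c in range(k):
            members = labels == c
            if members.any():
                sites[c] = centroids[members].mean(axis=0)
                mean_normal = normals[members].mean(axis=0)
                length = np.linalg.norm(mean_normal)
                if length > 1e-12:
                    site_normals[c] = mean_normal / length
    return labels, sites


def greedy_order(sites, start=0):
    """Visit every site once, always moving to the nearest unvisited site."""
    remaining = set(range(len(sites))) - {start}
    order = [start]
    while remaining:
        last = sites[order[-1]]
        nxt = min(remaining, key=lambda j: np.linalg.norm(sites[j] - last))
        order.append(nxt)
        remaining.remove(nxt)
    return order
```

A call such as `labels, sites = cluster_triangles(centroids, normals, k=50)` followed by `greedy_order(sites)` yields a rough visiting order over the cluster centres. On curved surfaces, Euclidean distance can connect points that are far apart along the surface, which is one reason a geodesic metric is the more appropriate choice.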

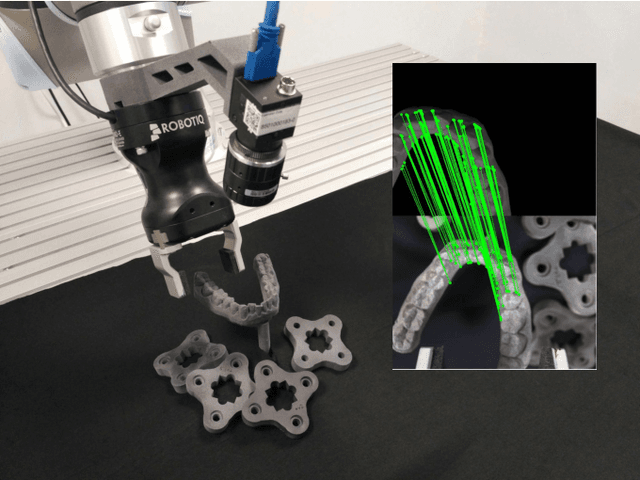

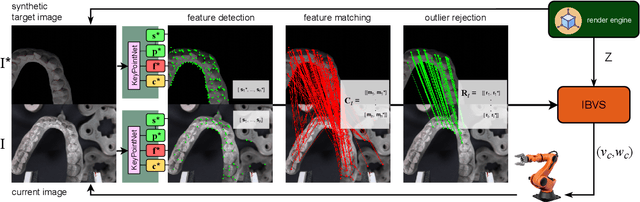

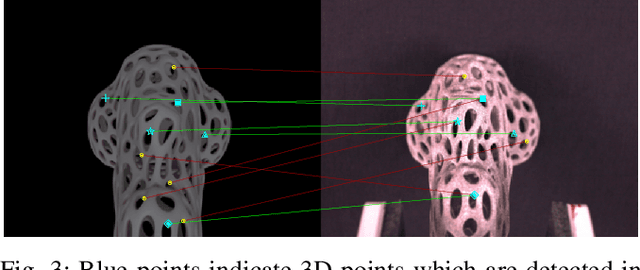

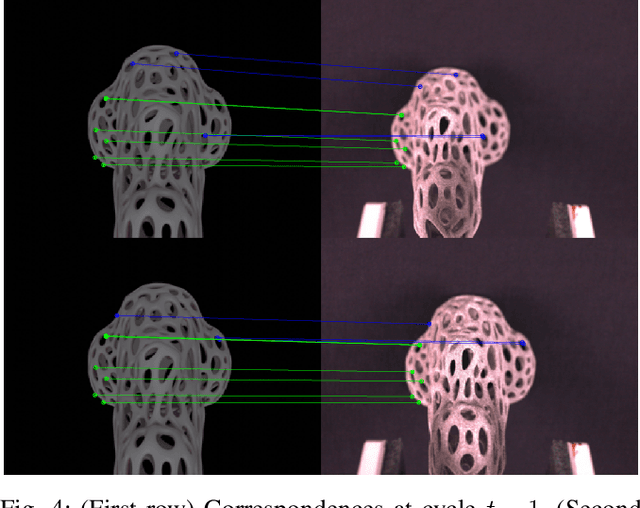

DFBVS: Deep Feature-Based Visual Servo

Jan 20, 2022

Abstract: Classical Visual Servoing (VS) approaches rely on handcrafted visual features, which limit their generalizability. Recently, a number of approaches, some based on Deep Neural Networks, have been proposed to overcome this limitation by directly comparing the entire target and current camera images. However, by getting rid of the visual features altogether, those approaches require the target and current images to be essentially similar, which precludes generalization to unknown, cluttered scenes. Here we propose to perform VS based on visual features, as in classical VS approaches, but, contrary to the latter, we leverage recent breakthroughs in Deep Learning to automatically extract and match the visual features. By doing so, our approach enjoys the advantages of both worlds: (i) because our approach is based on visual features, it is able to steer the robot towards the object of interest even in the presence of significant distraction in the background; (ii) because the features are automatically extracted and matched, our approach can easily and automatically generalize to unseen objects and scenes. In addition, we propose to use a render engine to synthesize the target image, which offers a further level of generalization. We demonstrate these advantages in a robotic grasping task, where the robot is able to steer, with high accuracy, towards the object to grasp, based simply on an image of the object rendered from the camera view corresponding to the desired robot grasping pose.
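For readers unfamiliar with feature-based servoing, the sketch below shows the textbook image-based control law for matched point features, v = -λ L⁺ (s - s*), where L is the stacked interaction matrix. It is an assumption that this illustrates the general idea well; the abstract does not state which control law the paper uses. The sketch also assumes that the matched features are already expressed in normalized image coordinates and that a depth estimate is available for each one. The function names and the `gain` parameter are illustrative.

```python
import numpy as np


def interaction_matrix(x, y, Z):
    """Interaction matrix of one normalized image point (x, y) at depth Z.

    Maps the camera twist (vx, vy, vz, wx, wy, wz) to the point's image velocity.
    """
    return np.array([
        [-1.0 / Z, 0.0, x / Z, x * y, -(1.0 + x * x), y],
        [0.0, -1.0 / Z, y / Z, 1.0 + y * y, -x * y, -x],
    ])


def ibvs_velocity(current, target, depths, gain=0.5):
    """Camera twist that drives matched features from `current` towards `target`.

    current, target: (n, 2) matched feature positions (normalized coordinates).
    depths: length-n depth estimates for the current features (an assumption;
    in practice these are often only approximate).
    """
    current = np.asarray(current, dtype=float)
    target = np.asarray(target, dtype=float)
    L = np.vstack([interaction_matrix(x, y, Z)
                   for (x, y), Z in zip(current, depths)])
    error = (current - target).reshape(-1)
    # Least-squares solution via the Moore-Penrose pseudo-inverse.
    return -gain * np.linalg.pinv(L) @ error
```

In such a loop, the feature extractor and matcher would supply `current` and `target` at every iteration, and the returned six-vector would be sent to the robot as a camera velocity command. At least four well-spread points are usually used so that the stacked matrix is well conditioned.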
