Víctor Samuel Pérez-Díaz

From Neurons to Neutrons: A Case Study in Interpretability

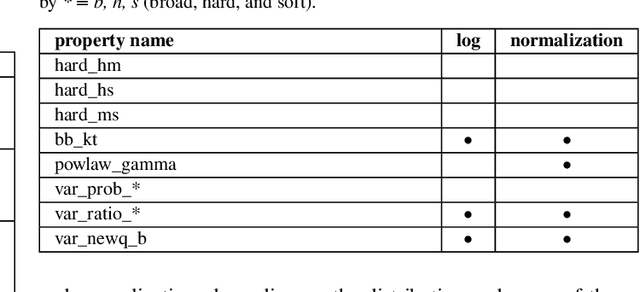

May 27, 2024

Abstract: Mechanistic Interpretability (MI) promises a path toward fully understanding how neural networks make their predictions. Prior work demonstrates that even when trained to perform simple arithmetic, models can implement a variety of algorithms (sometimes concurrently) depending on initialization and hyperparameters. Does this mean neuron-level interpretability techniques have limited applicability? We argue that high-dimensional neural networks can learn low-dimensional representations of their training data that are useful beyond simply making good predictions. Such representations can be understood through the mechanistic interpretability lens and provide insights that are surprisingly faithful to human-derived domain knowledge. This indicates that such approaches to interpretability can be useful for deriving a new understanding of a problem from models trained to solve it. As a case study, we extract nuclear physics concepts by studying models trained to reproduce nuclear data.

Unsupervised Machine Learning for the Classification of Astrophysical X-ray Sources

Jan 22, 2024

Abstract: The automatic classification of X-ray detections is a necessary step in extracting astrophysical information from compiled catalogs of astrophysical sources. Classification is useful for the study of individual objects, statistics for population studies, as well as for anomaly detection, i.e., the identification of new unexplored phenomena, including transients and spectrally extreme sources. Despite the importance of this task, classification remains challenging in X-ray astronomy due to the lack of optical counterparts and representative training sets. We develop an alternative methodology that employs an unsupervised machine learning approach to provide probabilistic classes to Chandra Source Catalog sources with a limited number of labeled sources, and without ancillary information from optical and infrared catalogs. We provide a catalog of probabilistic classes for 8,756 sources, comprising a total of 14,507 detections, and demonstrate the success of the method at identifying emission from young stellar objects, as well as distinguishing between small-scale and large-scale compact accretors with a significant level of confidence. We investigate the consistency between the distribution of features among classified objects and well-established astrophysical hypotheses such as the unified AGN model. This provides interpretability to the probabilistic classifier. Code and tables are available publicly through GitHub. We provide a web playground for readers to explore our final classification at https://umlcaxs-playground.streamlit.app.
