Utkarsh Singh

Uncooled Poisson Bolometer for High-Speed Event-Based Long-wave Thermal Imaging

Jan 26, 2026

Abstract: Event-based vision provides high-speed, energy-efficient sensing for applications such as autonomous navigation and motion tracking. However, implementing this technology in the long-wave infrared remains a significant challenge. Traditional infrared sensors are hindered by slow thermal response times or the heavy power requirements of cryogenic cooling. Here, we introduce the first event-based infrared detector operating in a Poisson-counting regime. This is realized with a spintronic Poisson bolometer capable of broadband detection from 0.8-14 $\mu\text{m}$. In this regime, infrared signals are detected through statistically resolvable changes in stochastic switching events. This approach enables room-temperature operation with high timing resolution. Our device achieves a maximum event rate of 1,250 Hz, surpassing the temporal resolution of conventional uncooled microbolometers by a factor of 4. Power consumption is kept low at 0.2 $\mu$W per pixel. This work establishes an operating principle for infrared sensing and demonstrates a pathway toward high-speed, energy-efficient, event-driven thermal imaging.
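The idea of a "statistically resolvable change" in a stochastic event count can be illustrated with a minimal sketch. It assumes the switching events in a fixed window are Poisson distributed around a known baseline rate and uses a normal approximation to score the deviation. The function names, the window length, and the threshold of 3 standard deviations are hypothetical choices for illustration, not the readout scheme of the paper.

```python
import math


def poisson_z_score(count: int, baseline_rate_hz: float, window_s: float) -> float:
    """Deviation of an observed event count from the Poisson expectation,
    in units of the Poisson standard deviation sqrt(expected count)."""
    expected = baseline_rate_hz * window_s
    if expected <= 0:
        raise ValueError("baseline rate and window must be positive")
    return (count - expected) / math.sqrt(expected)


def is_resolvable(count: int, baseline_rate_hz: float, window_s: float,
                  threshold: float = 3.0) -> bool:
    """Flag a window whose count differs from the baseline by at least
    `threshold` standard deviations (hypothetical default of 3)."""
    return abs(poisson_z_score(count, baseline_rate_hz, window_s)) >= threshold


if __name__ == "__main__":
    # Assumed baseline of 200 events/s observed for 0.5 s: 100 events expected.
    print(is_resolvable(140, 200.0, 0.5))  # large excess of events
    print(is_resolvable(110, 200.0, 0.5))  # within ordinary Poisson scatter
```

Because the Poisson standard deviation grows only as the square root of the expected count, longer windows resolve smaller rate changes at the cost of timing resolution.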

Physics-Integrated Inference for Signal Recovery in Non-Gaussian Regimes

Jan 26, 2026

Abstract: High-performance room-temperature sensing is often limited by non-stationary $1/f$ fluctuations and non-Gaussian stochasticity. In spintronic devices, thermally activated Néel switching creates heavy-tailed noise that masks weak signals, defeating linear filters optimized for Gaussian statistics. Here, we introduce a physics-integrated inference framework that decouples signal morphology from stochastic transients using a hierarchical 1D CNN-GRU topology. By learning the temporal signatures of Néel relaxation, this architecture reduces the Noise Equivalent Differential Temperature (NEDT) of spintronic Poisson bolometers by a factor of six (233.78 mK to 40.44 mK), effectively elevating room-temperature sensitivity toward cryogenic limits. We demonstrate the framework's universality across the electromagnetic and biological spectrum, achieving a 9-fold error suppression in radar tracking, a 40% uncertainty reduction in LiDAR, and a 15.56 dB SNR enhancement in ECG. This hardware-inference coupling recovers deterministic signals from fluctuation-dominated regimes, enabling near-ideal detection limits in noisy edge environments.

Car Drag Coefficient Prediction from 3D Point Clouds Using a Slice-Based Surrogate Model

Jan 05, 2026

Abstract: The automotive industry's pursuit of enhanced fuel economy and performance necessitates efficient aerodynamic design. However, traditional evaluation methods such as computational fluid dynamics (CFD) and wind tunnel testing are resource intensive, hindering rapid iteration in the early design stages. Machine learning-based surrogate models offer a promising alternative, yet many existing approaches suffer from high computational complexity, limited interpretability, or insufficient accuracy for detailed geometric inputs. This paper introduces a novel lightweight surrogate model for predicting the aerodynamic drag coefficient ($C_d$) based on sequential slice-wise processing of the 3D vehicle geometry. Inspired by medical imaging, 3D point clouds of vehicles are decomposed into an ordered sequence of 2D cross-sectional slices along the stream-wise axis. Each slice is encoded by a lightweight PointNet2D module, and the sequence of slice embeddings is processed by a bidirectional LSTM to capture longitudinal geometric evolution. The model, trained and evaluated on the DrivAerNet++ dataset, achieves a high coefficient of determination ($R^2 > 0.9528$) and a low mean absolute error (MAE $\approx 6.046 \times 10^{-3}$) in $C_d$ prediction. With an inference time of approximately 0.025 seconds per sample on a consumer-grade GPU, our approach provides fast, accurate, and interpretable aerodynamic feedback, facilitating more agile and informed automotive design exploration.

* 14 pages, 5 figures. Published in: Bramer M., Stahl F. (eds) Artificial Intelligence XLII. SGAI 2025. Lecture Notes in Computer Science, vol 16302. Springer, Cham
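The slice decomposition described in the abstract above can be sketched as follows. This is a minimal illustration under stated assumptions: the stream-wise axis is taken to be x, the slices are equal-width bins between the minimum and maximum x, and each slice keeps only the (y, z) coordinates. The function name `slice_point_cloud` and the binning rule are hypothetical; the paper may slice differently.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Point2D = Tuple[float, float]


def slice_point_cloud(points: List[Point3D], num_slices: int) -> List[List[Point2D]]:
    """Split a 3D point cloud into an ordered list of 2D cross-sections.

    Assumes x is the stream-wise axis and uses equal-width bins along it.
    Each returned slice holds the (y, z) coordinates of the points in its bin.
    """
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    slices: List[List[Point2D]] = [[] for _ in range(num_slices)]
    if not points:
        return slices
    xs = [p[0] for p in points]
    x_min, x_max = min(xs), max(xs)
    span = x_max - x_min
    for x, y, z in points:
        if span == 0.0:
            idx = 0  # degenerate cloud: every point shares one x value
        else:
            # Clamp so the point at x_max falls in the last bin.
            idx = min(int((x - x_min) / span * num_slices), num_slices - 1)
        slices[idx].append((y, z))
    return slices


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (2.0, 2.0, 2.0), (4.0, 3.0, 3.0)]
    for i, s in enumerate(slice_point_cloud(cloud, 4)):
        print(i, s)
```

In a pipeline like the one the abstract describes, each 2D slice would then be passed to a per-slice encoder and the ordered embeddings to a sequence model; those stages are omitted here.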

Lightweight Transformers for Zero-Shot and Fine-Tuned Text-to-SQL Generation Using Spider

Aug 06, 2025

Abstract: Text-to-SQL translation enables non-expert users to query relational databases using natural language, with applications in education and business intelligence. This study evaluates three lightweight transformer models - T5-Small, BART-Small, and GPT-2 - on the Spider dataset, focusing on low-resource settings. We developed a reusable, model-agnostic pipeline that tailors schema formatting to each model's architecture, training them across 1000 to 5000 iterations and evaluating on 1000 test samples using Logical Form Accuracy (LFAcc), BLEU, and Exact Match (EM) metrics. Fine-tuned T5-Small achieves the highest LFAcc (27.8%), outperforming BART-Small (23.98%) and GPT-2 (20.1%), highlighting encoder-decoder models' superiority in schema-aware SQL generation. Despite resource constraints limiting performance, our pipeline's modularity supports future enhancements, such as advanced schema linking or alternative base models. This work underscores the potential of compact transformers for accessible text-to-SQL solutions in resource-scarce environments.
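As a rough illustration of an exact-match style metric for generated SQL, the sketch below compares predictions with references after a simple string normalization. This is an assumption-laden simplification: the normalization (trim, drop a trailing semicolon, collapse whitespace, lowercase) and the function names are hypothetical, and the abstract does not specify how EM was computed. Note that lowercasing also alters string literals, which a stricter metric would preserve.

```python
import re
from typing import Sequence


def normalize_sql(query: str) -> str:
    """Normalize a SQL string for comparison (simplified, string level only)."""
    q = query.strip().rstrip(";").strip()
    q = re.sub(r"\s+", " ", q)  # collapse runs of whitespace
    return q.lower()


def exact_match_rate(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of predictions equal to their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        return 0.0
    hits = sum(
        normalize_sql(p) == normalize_sql(r)
        for p, r in zip(predictions, references)
    )
    return hits / len(references)


if __name__ == "__main__":
    preds = ["SELECT name FROM singer;", "select count(*) from t"]
    refs = ["select  name from singer", "SELECT count(*) FROM u"]
    print(exact_match_rate(preds, refs))
```

A string-level comparison like this is strict: two queries that return identical results but order their clauses differently count as a mismatch.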

Coherent Feed Forward Quantum Neural Network

Feb 01, 2024

Abstract: Quantum machine learning, focusing on quantum neural networks (QNNs), remains a vastly uncharted field of study. Current QNN models primarily employ variational circuits on an ansatz or a quantum feature map, often requiring multiple entanglement layers. This methodology not only increases the computational cost of the circuit beyond what is practical on near-term quantum devices but also misleadingly labels these models as neural networks, given their divergence from the structure of a typical feed-forward neural network (FFNN). Moreover, the circuit depth and qubit needs of these models scale poorly with the number of data features, resulting in an efficiency challenge for real-world machine-learning tasks. We introduce a bona fide QNN model, which seamlessly aligns with the versatility of a traditional FFNN in terms of its adaptable intermediate layers and nodes, without intermediate measurements, so that our entire model is coherent. This model stands out with its reduced circuit depth and number of requisite C-NOT gates to outperform prevailing QNN models. Furthermore, the qubit count in our model remains unaffected by the data's feature quantity. We test our proposed model on various benchmarking datasets such as the diagnostic breast cancer (Wisconsin) and credit card fraud detection datasets. We compare the outcomes of our model with the existing QNN methods to showcase the advantageous efficacy of our approach, even with a reduced requirement on quantum resources. Our model paves the way for the application of quantum neural networks to practically relevant machine learning problems.

memorAIs: an Optical Character Recognition and Rule-Based Medication Intake Reminder-Generating Solution

Dec 11, 2023

Abstract: Memory-based medication non-adherence is an unsolved problem that is responsible for considerable disease burden in the United States. Digital medication intake reminder solutions with minimal onboarding requirements that are usable at the point of medication acquisition may help to alleviate this problem by offering a low-barrier way to help people remember to take their medications. In this paper, we propose memorAIs, a digital medication intake reminder solution that mitigates onboarding friction by leveraging optical character recognition strategies for text extraction from medication bottles and rule-based expressions for text processing to create configured medication reminders as local device calendar invitations. We describe our ideation and development process, as well as limitations of the current implementation. memorAIs was the winner of the Patient Safety award at the 2023 Columbia University DivHacks Hackathon, presented by the Patient Safety Technology Challenge, sponsored by the Pittsburgh Regional Health Initiative.

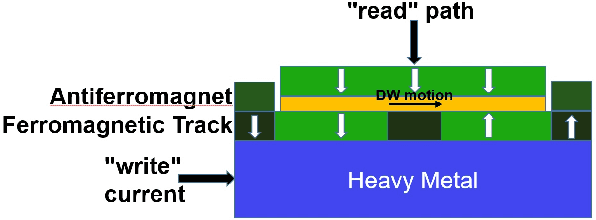

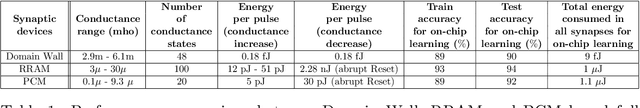

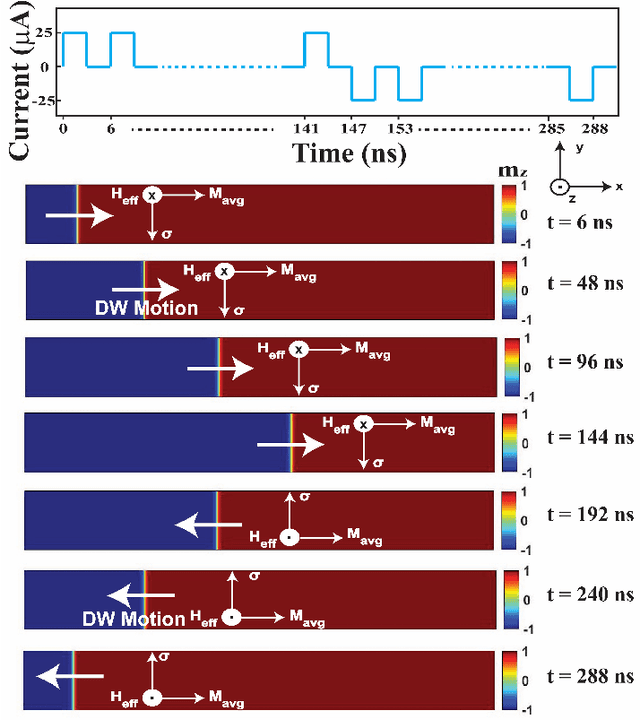

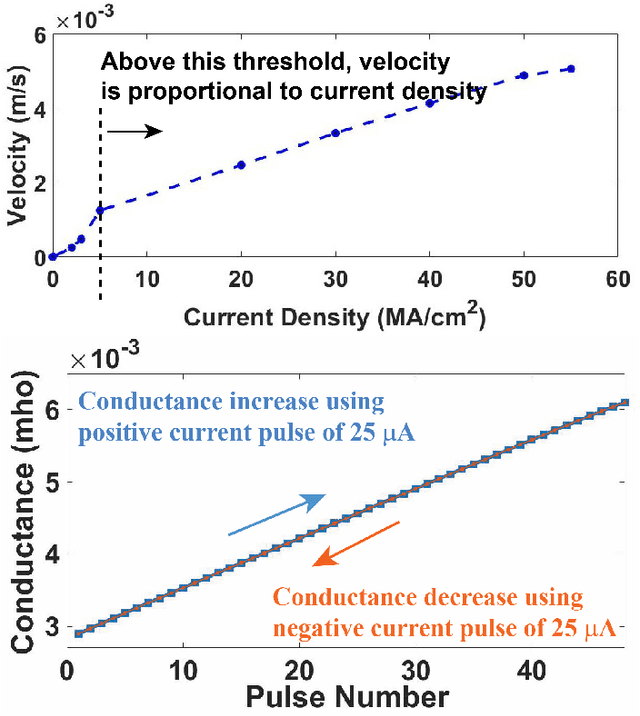

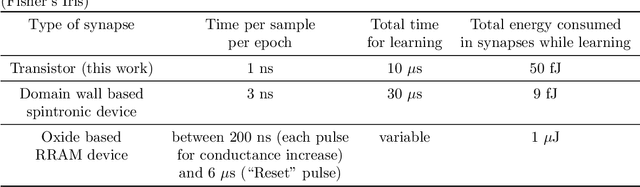

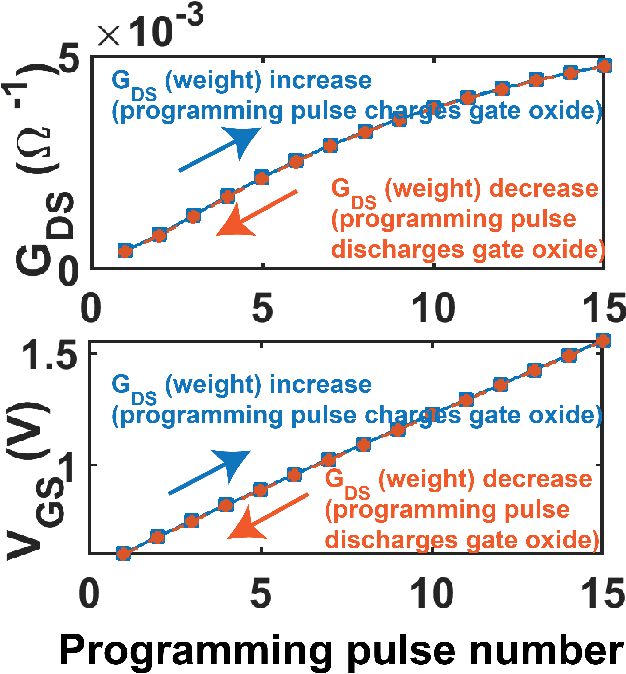

Comparing domain wall synapse with other Non Volatile Memory devices for on-chip learning in Analog Hardware Neural Network

Oct 28, 2019

Abstract: Resistive Random Access Memory (RRAM) and Phase Change Memory (PCM) devices have been popularly used as synapses in crossbar array based analog Neural Network (NN) circuits to achieve more energy and time efficient data classification compared to conventional computers. Here we demonstrate the advantages of the recently proposed spin orbit torque driven Domain Wall (DW) device as a synapse compared to the RRAM and PCM devices with respect to on-chip learning (training in hardware) in such NNs. The synaptic characteristic of the DW synapse, obtained by us from micromagnetic modeling, turns out to be much more linear and symmetric (between positive and negative update) than that of RRAM and PCM synapses. This makes the design of peripheral analog circuits for on-chip learning much easier in a DW synapse based NN compared to that for RRAM and PCM synapses. We next incorporate the DW synapse as a Verilog-A model in the crossbar array based NN circuit we design in the SPICE circuit simulator. Successful on-chip learning is demonstrated through SPICE simulations on the popular Fisher's Iris dataset. Time and energy required for learning turn out to be orders of magnitude lower for the DW synapse based NN circuit compared to that for RRAM and PCM synapse based NN circuits.

On-chip learning in a conventional silicon MOSFET based Analog Hardware Neural Network

Jul 01, 2019

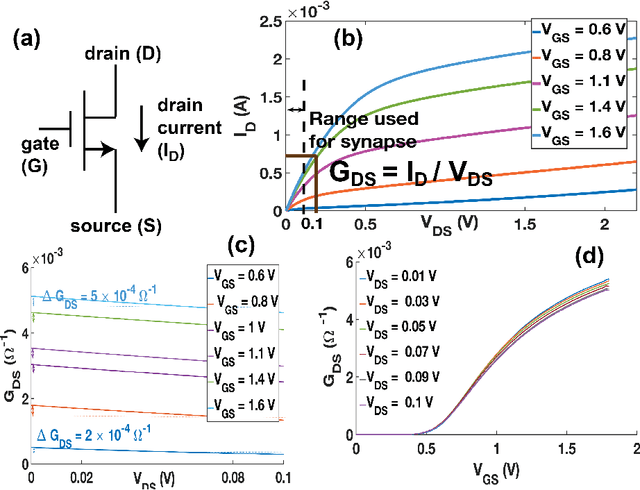

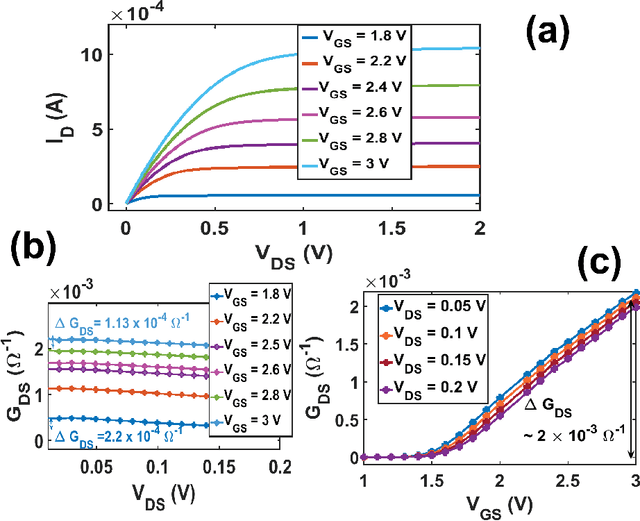

Abstract: On-chip learning in a crossbar array based analog hardware Neural Network (NN) has been shown to have major advantages in terms of speed and energy compared to training an NN on a traditional computer. However, analog hardware NN proposals and implementations thus far have mostly involved Non Volatile Memory (NVM) devices like Resistive Random Access Memory (RRAM), Phase Change Memory (PCM), spintronic devices or floating gate transistors as synapses. Fabricating systems based on RRAM, PCM or spintronic devices requires in-house laboratory facilities and cannot be done through merchant foundries, unlike conventional silicon based CMOS chips. Floating gate transistors need large voltage pulses for weight update, making on-chip learning in such systems energy inefficient. This paper proposes and implements, through SPICE simulations, on-chip learning in an analog hardware NN using only conventional silicon based MOSFETs (without any floating gate) as synapses, since they are easy to fabricate. We first model the synaptic characteristic of our single transistor synapse using the SPICE circuit simulator and benchmark it against experimentally obtained current-voltage characteristics of a transistor. Next we design a Fully Connected Neural Network (FCNN) crossbar array using such transistor synapses. We also design analog peripheral circuits for neuron and synaptic weight update calculation, needed for on-chip learning, again using conventional transistors. Simulating the entire system in the SPICE simulator, we obtain high training and test accuracy on the standard Fisher's Iris dataset, widely used in machine learning. We also compare the speed and energy performance of our transistor based implementation of an analog hardware NN with some previous implementations of NNs with NVM devices and show comparable performance with respect to on-chip learning.
