Torsten Schlett

Fairness measures for biometric quality assessment

Aug 21, 2024

Abstract: Quality assessment algorithms measure the quality of a captured biometric sample. Since sample quality strongly affects the recognition performance of a biometric system, it is essential to process only samples of sufficient quality and to discard low-quality samples. Even though quality assessment algorithms are not intended to yield very different quality scores across demographic groups, quality score discrepancies are possible, resulting in different discard ratios. To ensure that quality assessment algorithms do not take demographic characteristics into account when assessing sample quality, and consequently that they perform equally for all individuals, it is crucial to develop a fairness measure. In this work we propose and compare multiple fairness measures for evaluating quality components across demographic groups. The proposed measures could serve as candidates for an upcoming standard in this important field.
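The abstract ties quality score discrepancies to differing discard ratios across demographic groups, but it does not state the formulas of the proposed measures. The sketch below assumes a single fixed quality threshold and known group labels, computes per-group discard ratios, and summarizes them with two simple disparity statistics (largest gap and max/min ratio). These summaries and the function names are illustrative assumptions, not the paper's proposed fairness measures.

```python
from collections import defaultdict


def discard_ratios(scores, groups, threshold):
    """Fraction of samples per group whose quality score falls below threshold."""
    totals = defaultdict(int)
    discarded = defaultdict(int)
    for score, group in zip(scores, groups):
        totals[group] += 1
        if score < threshold:
            discarded[group] += 1
    return {g: discarded[g] / totals[g] for g in totals}


def disparity(ratios):
    """Illustrative summaries (assumed, not from the paper): absolute gap and max/min ratio."""
    values = list(ratios.values())
    lo, hi = min(values), max(values)
    gap = hi - lo
    if hi == 0:
        return gap, 1.0  # no group has any discards: treat as parity
    ratio = hi / lo if lo > 0 else float("inf")
    return gap, ratio


ratios = discard_ratios([80, 35, 60, 20, 40, 55], ["A", "A", "A", "B", "B", "B"], 50)
gap, ratio = disparity(ratios)
```

Here group B loses two of three samples at threshold 50 while group A loses one, so the gap is 1/3 and the ratio is 2. A perfectly "fair" algorithm in this narrow sense would yield equal discard ratios per group.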

Double Trouble? Impact and Detection of Duplicates in Face Image Datasets

Jan 25, 2024

Abstract: Various face image datasets intended for facial biometrics research were created via web scraping, i.e. the collection of images publicly available on the internet. This work presents an approach to detect both exactly and nearly identical face image duplicates using file and image hashes. The approach is extended through the use of face image preprocessing. Additional steps based on face recognition and face image quality assessment models reduce false positives and facilitate the deduplication of the face images for both intra- and inter-subject duplicate sets. The presented approach is applied to five datasets, namely LFW, TinyFace, Adience, CASIA-WebFace, and C-MS-Celeb (a cleaned MS-Celeb-1M variant). Duplicates are detected within every dataset, with hundreds to hundreds of thousands of duplicates for all except LFW. Face recognition and quality assessment experiments indicate that duplicate removal has only a minor impact on the results. The final deduplication data is publicly available.
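The abstract names file hashes for exact duplicates and image hashes for near duplicates, without specifying the hash functions here. As a minimal sketch, assuming SHA-256 (via `hashlib`) as the file hash and a simple average hash (aHash) as the image hash, duplicates can be grouped by identical digests and near duplicates by a Hamming distance threshold. Image decoding and downscaling are omitted (the image is assumed to be an already downscaled grayscale grid), and the preprocessing and model-based false-positive filtering from the paper are not reproduced. All function names and the `max_distance` default are hypothetical.

```python
import hashlib
from collections import defaultdict


def file_digest(data: bytes) -> str:
    """Exact-duplicate key: SHA-256 over the raw file bytes."""
    return hashlib.sha256(data).hexdigest()


def exact_duplicate_sets(files):
    """Group names whose bytes hash identically; files maps name -> bytes."""
    by_digest = defaultdict(list)
    for name, data in files.items():
        by_digest[file_digest(data)].append(name)
    return [sorted(names) for names in by_digest.values() if len(names) > 1]


def average_hash(gray):
    """Average hash of a small grayscale image given as rows of 0-255 values.
    Each bit is 1 where the pixel is brighter than the image mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def near_duplicate_pairs(hashes, max_distance=5):
    """Pairs of image names whose hashes differ in at most max_distance bits."""
    names = sorted(hashes)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if hamming(hashes[a], hashes[b]) <= max_distance:
                pairs.append((a, b))
    return pairs
```

The file hash only catches byte-identical copies; the image hash also matches re-encoded or slightly altered copies, which is why a perceptual hash of this kind tends to produce false positives that later filtering steps must remove.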

Considerations on the Evaluation of Biometric Quality Assessment Algorithms

Mar 23, 2023

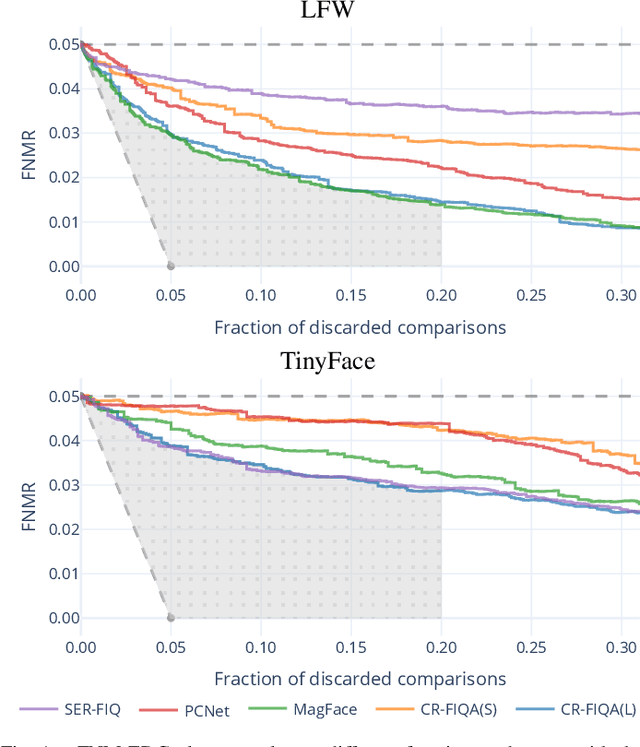

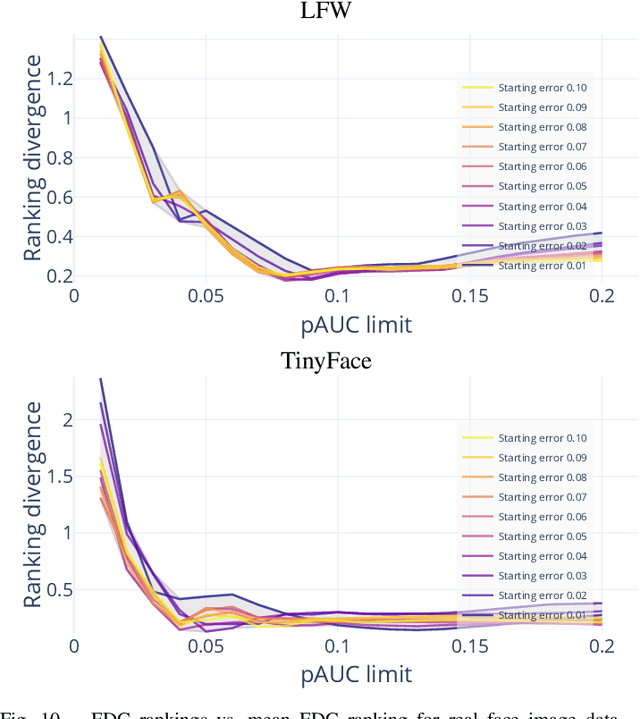

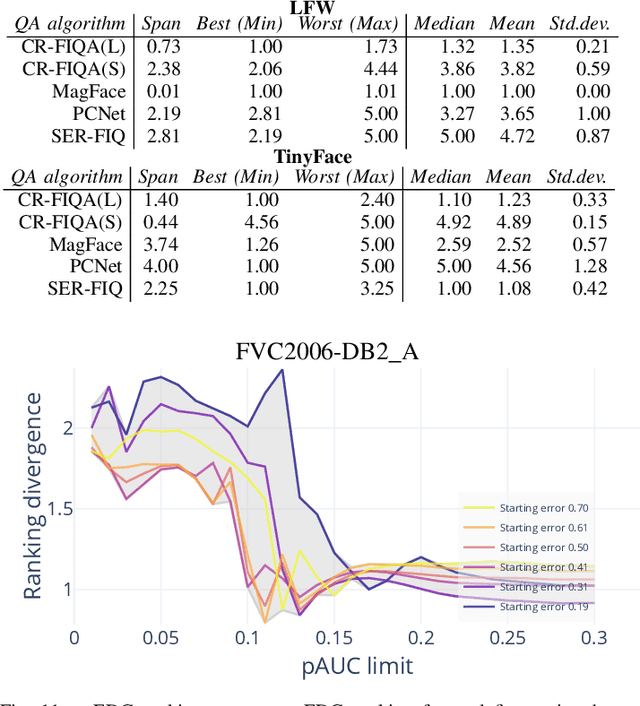

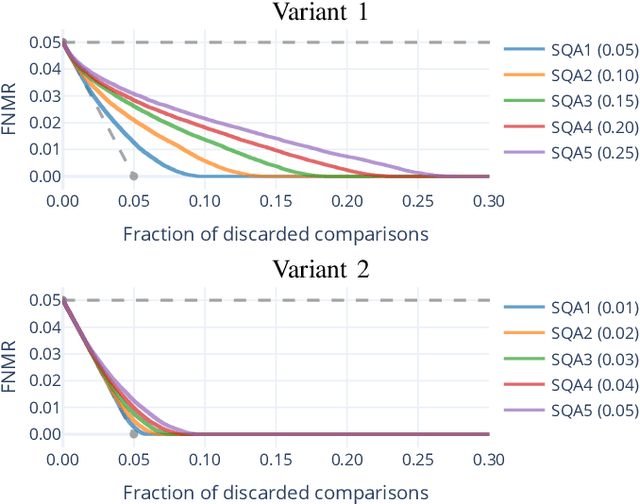

Abstract: Quality assessment algorithms can be used to estimate the utility of a biometric sample for the purpose of biometric recognition. "Error versus Discard Characteristic" (EDC) plots, and "partial Area Under Curve" (pAUC) values of curves therein, are generally used by researchers to evaluate the predictive performance of such quality assessment algorithms. An EDC curve depends on an error type such as the "False Non Match Rate" (FNMR), a quality assessment algorithm, a biometric recognition system, a set of comparisons each corresponding to a biometric sample pair, and a comparison score threshold corresponding to a starting error. To compute an EDC curve, comparisons are progressively discarded based on the associated samples' lowest quality scores, and the error is computed for the remaining comparisons. Additionally, a discard fraction limit or range must be selected to compute pAUC values, which can then be used to quantitatively rank quality assessment algorithms. This paper discusses and analyses various details for this kind of quality assessment algorithm evaluation, including general EDC properties, interpretability improvements for pAUC values based on a hard lower error limit and a soft upper error limit, the use of relative instead of discrete rankings, stepwise vs. linear curve interpolation, and normalisation of quality scores to a [0, 100] integer range. We also analyse the stability of quantitative quality assessment algorithm rankings based on pAUC values across varying pAUC discard fraction limits and starting errors, concluding that higher pAUC discard fraction limits should be preferred. The analyses are conducted both with synthetic data and with real data for a face image quality assessment scenario, with a focus on general modality-independent conclusions for EDC evaluations.
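The EDC procedure described above (fix a comparison score threshold from a starting error, discard comparisons by the lower quality score of each pair, recompute the error on what remains, then integrate up to a discard fraction limit) can be sketched as follows. This is a minimal illustration under stated assumptions: only mated comparisons are used (so the error is FNMR), a higher comparison score means more similar, the threshold is taken from the sorted mated scores so that roughly `start_fnmr` of them fall below it, comparisons are discarded one at a time, and the pAUC uses stepwise interpolation without the normalisation or error limits the paper discusses. Function names are hypothetical.

```python
def edc_fnmr(scores, qualities, start_fnmr):
    """EDC points (discard_fraction, fnmr) for a non-empty list of mated comparisons.

    scores: comparison scores (higher = more similar), one per mated pair
    qualities: (q1, q2) sample quality scores per comparison
    start_fnmr: FNMR at discard fraction 0, used to fix the score threshold
    """
    n = len(scores)
    # Fix the threshold so that about start_fnmr of the mated scores lie below it.
    ranked = sorted(scores)
    k = int(round(start_fnmr * n))
    threshold = ranked[k] if k < n else float("inf")
    # Each comparison is ranked by the lower quality score of its two samples.
    pair_quality = [min(q) for q in qualities]
    order = sorted(range(n), key=lambda i: pair_quality[i])
    errors = sum(1 for s in scores if s < threshold)
    points = [(0.0, errors / n)]
    # Discard lowest-quality comparisons first; always keep at least one.
    for discarded, i in enumerate(order[:-1], start=1):
        if scores[i] < threshold:
            errors -= 1
        points.append((discarded / n, errors / (n - discarded)))
    return points


def pauc_stepwise(points, discard_limit):
    """Area under a stepwise EDC curve from discard fraction 0 to discard_limit."""
    area = 0.0
    sentinel = [(float("inf"), None)]
    for (x0, y0), (x1, _) in zip(points, points[1:] + sentinel):
        if x0 >= discard_limit:
            break
        area += y0 * (min(x1, discard_limit) - x0)
    return area


pts = edc_fnmr([0.1, 0.9, 0.8, 0.2], [(10, 50), (90, 80), (70, 60), (40, 45)], 0.5)
area = pauc_stepwise(pts, 0.5)
```

In this toy example the two false non-matches belong to the two lowest-quality comparisons, so the FNMR falls from 0.5 to 0 after half the comparisons are discarded; a quality algorithm with better predictive power yields a smaller pAUC.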

Effect of Lossy Compression Algorithms on Face Image Quality and Recognition

Feb 24, 2023

Abstract: Lossy face image compression can degrade the image quality and the utility for the purpose of face recognition. This work investigates the effect of lossy image compression on a state-of-the-art face recognition model and on multiple face image quality assessment models. The analysis is conducted over a range of specific image target sizes. Four compression types are considered, namely JPEG, JPEG 2000, downscaled PNG, and notably the new JPEG XL format. Frontal color images from the ColorFERET database were used in a Region Of Interest (ROI) variant and a portrait variant. We primarily conclude that JPEG XL allows for superior mean and worst-case face recognition performance, especially at lower target sizes (below approximately 5 kB for the ROI variant), while there appears to be no critical advantage among the compression types at higher target sizes. Quality assessments from modern models correlate well overall with the compression effect on face recognition performance.
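The abstract states that compression was applied at specific target sizes but not how each codec was driven to meet them. One common approach, assumed here rather than taken from the paper, is a binary search over an integer encoder setting for the largest value whose output still fits the byte budget. The `encode` callable is hypothetical; in practice it might wrap an image library's encoder for a given quality level (or, for downscaled PNG, a scale step). The search relies on the assumption that output size grows monotonically with the setting.

```python
from typing import Callable, Optional, Tuple


def max_quality_within(encode: Callable[[int], bytes], target_bytes: int,
                       lo: int = 1, hi: int = 100) -> Optional[Tuple[int, bytes]]:
    """Highest setting q in [lo, hi] with len(encode(q)) <= target_bytes.

    Assumes output size is non-decreasing in q. Returns (q, data), or None
    if even the lowest setting exceeds the target size.
    """
    best = None
    while lo <= hi:
        mid = (lo + hi) // 2
        data = encode(mid)
        if len(data) <= target_bytes:
            best = (mid, data)  # fits: try a higher setting
            lo = mid + 1
        else:
            hi = mid - 1  # too large: try a lower setting
    return best


# Hypothetical stand-in encoder whose output grows by 50 bytes per quality step.
fake_encode = lambda q: b"x" * (q * 50)
result = max_quality_within(fake_encode, 2475)
```

With the stand-in encoder, a 2475-byte budget admits quality 49 (2450 bytes) but not 50 (2500 bytes). A binary search needs only about seven encoder calls for a 1 to 100 range, which matters when sweeping many images and target sizes.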

Face Image Quality Assessment: A Literature Survey

Sep 02, 2020

Abstract: The performance of face analysis and recognition systems depends on the quality of the acquired face data, which is influenced by numerous factors. Automatically assessing the quality of face data in terms of biometric utility can thus be useful to filter out low-quality data. This survey provides an overview of the face quality assessment literature in the framework of face biometrics, with a focus on face recognition based on visible wavelength face images as opposed to, e.g., depth or infrared quality assessment. A trend towards deep learning-based methods is observed, including notable conceptual differences among the recent approaches. Besides image selection, face image quality assessment can also be used in a variety of other application scenarios, which are discussed herein. Open issues and challenges are pointed out, among other things highlighting the importance of comparability for algorithm evaluations, and the challenge for future work to create deep learning approaches that are interpretable in addition to providing accurate utility predictions.

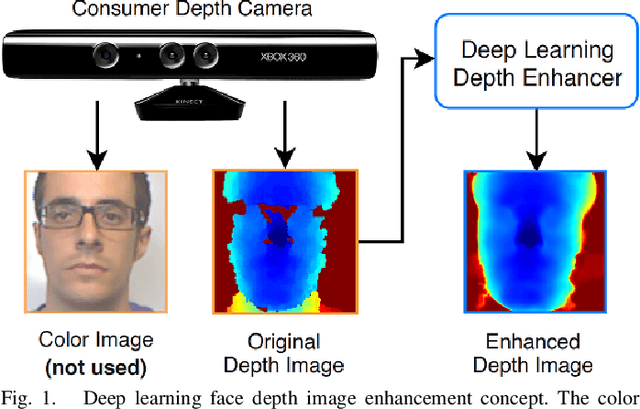

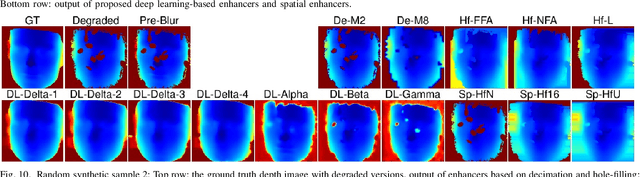

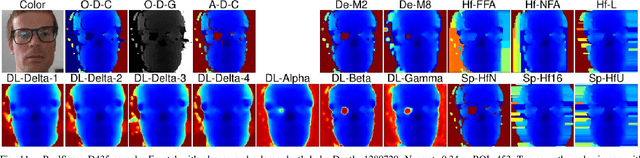

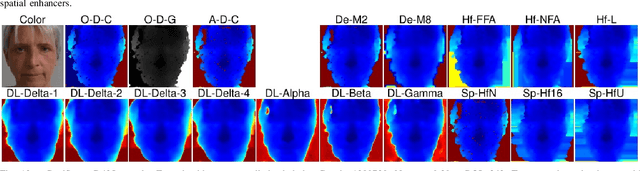

Deep Learning-based Single Image Face Depth Data Enhancement

Jun 19, 2020

Abstract: Face recognition can benefit from the use of depth data captured with low-cost cameras, in particular for presentation attack detection. However, depth video output from these capture devices can contain defects such as holes, as well as general depth inaccuracies. This work proposes a deep learning-based face depth enhancement method. The trained artificial neural networks use U-Net-like architectures and are compared against general enhancer types. All tested enhancer types use only depth data as input, unlike methods that enhance depth based on additional input data such as visible light color images. Due to an apparent lack of real-world camera datasets with suitable properties, face depth ground truth images and degraded versions thereof are synthesized with the help of PRNet, both for the deep learning training and for an experimental quantitative evaluation of all enhancer types. Generated enhancer output samples are also presented for real camera data, namely custom RealSense D435 depth images and Kinect v1 data from the KinectFaceDB. It is concluded that the deep learning enhancement approach is superior to the tested general enhancers, without overly falsifying depth data when non-face input is provided.
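For orientation, the simplest kind of depth-only enhancement addresses the holes mentioned above. The sketch below is a generic hole-filling baseline assumed for illustration; it is not the paper's U-Net method and not necessarily one of its "general enhancer types". It assumes holes are encoded as depth 0 and valid depths are positive, and it repeatedly replaces each hole pixel with the mean of its valid 4-neighbours until nothing changes. The function name and parameters are hypothetical.

```python
def fill_depth_holes(depth, invalid=0, max_iters=100):
    """Iteratively fill invalid depth pixels with the mean of valid 4-neighbours.

    depth: list of rows of numbers; pixels equal to `invalid` are holes.
    Returns a new image; holes still unreachable after max_iters remain.
    """
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]  # leave the input untouched
    for _ in range(max_iters):
        updates = {}
        for y in range(h):
            for x in range(w):
                if out[y][x] != invalid:
                    continue
                neighbours = [
                    out[ny][nx]
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                    if 0 <= ny < h and 0 <= nx < w and out[ny][nx] != invalid
                ]
                if neighbours:
                    updates[(y, x)] = sum(neighbours) / len(neighbours)
        if not updates:
            break  # no hole has a valid neighbour left to borrow from
        # Apply all updates at once so the result does not depend on scan order.
        for (y, x), value in updates.items():
            out[y][x] = value
    return out


filled = fill_depth_holes([[10, 10, 10], [10, 0, 10], [10, 10, 10]])
```

A filler like this smooths holes inward from their borders but cannot recover facial structure or correct general depth inaccuracies, which is the gap a learned, face-specific enhancer aims to close.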
