Tony Zheng

E-CARE: An Efficient LLM-based Commonsense-Augmented Framework for E-Commerce

Nov 06, 2025

Abstract:Finding relevant products given a user query plays a pivotal role in an e-commerce platform, as it can spark shopping behaviors and result in revenue gains. The challenge lies in accurately predicting the correlation between queries and products. Recently, mining the cross-features between queries and products based on the commonsense reasoning capacity of Large Language Models (LLMs) has shown promising performance. However, such methods suffer from high costs due to intensive real-time LLM inference during serving, as well as human annotations and potential Supervised Fine-Tuning (SFT). To boost efficiency while leveraging the commonsense reasoning capacity of LLMs for various e-commerce tasks, we propose the Efficient Commonsense-Augmented Recommendation Enhancer (E-CARE). During inference, models augmented with E-CARE can access commonsense reasoning with only a single LLM forward pass per query by utilizing a commonsense reasoning factor graph that encodes most of the reasoning schema from powerful LLMs. Experiments on two downstream tasks show an improvement of up to 12.1% in precision@5.
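For readers unfamiliar with the precision@5 metric quoted in the abstract, the following is a minimal sketch of the standard definition: the fraction of the top-k ranked items that are relevant. The function name `precision_at_k` and the toy product IDs are illustrative assumptions, not taken from the paper, and the paper's exact evaluation protocol may differ.

```python
def precision_at_k(ranked_ids, relevant_ids, k=5):
    """Fraction of the top-k ranked items that appear in the relevant set.

    Standard definition: hits in the top k, divided by k.
    Illustrative helper, not the paper's evaluation code.
    """
    if k <= 0:
        raise ValueError("k must be a positive integer")
    top_k = ranked_ids[:k]
    hits = sum(1 for item in top_k if item in relevant_ids)
    return hits / k


# Toy example (hypothetical product IDs): two of the top five are relevant.
ranking = ["a", "b", "c", "d", "e", "f"]
relevant = {"a", "c", "f"}
score = precision_at_k(ranking, relevant, k=5)
```

Here `score` is 0.4, since "a" and "c" fall in the top five while "f" is ranked sixth.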

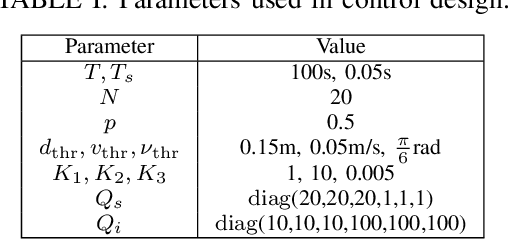

Data-Driven Optimization for Deposition with Degradable Tools

May 26, 2023

Abstract:We present a data-driven optimization approach for robot-controlled deposition with a degradable tool. Existing methods assume that the tool tip does not change or is replaced frequently. Errors can accumulate over time as the tool wears away, which leads to poor performance when tool degradation is unaccounted for during deposition. In the proposed approach, we utilize visual and force feedback to update the unknown model parameters of our tool tip. Subsequently, we solve a constrained finite-time optimal control problem for tracking a reference deposition profile, where our robot plans with the learned tool degradation dynamics. We focus on a robotic drawing problem as an illustrative example. Using real-world experiments, we show that the error between target and actual deposition decreases when learned degradation models are used in the control design.

Safe Human-Robot Collaborative Transportation via Trust-Driven Role Adaptation

Jul 13, 2022

Abstract:We study a human-robot collaborative transportation task in the presence of obstacles. The task for each agent is to carry a rigid object to a common target position, while safely avoiding obstacles and satisfying the compliance and actuation constraints of the other agent. The human and the robot do not share the same local view of the environment. The human either assists the robot, when they deem the robot's actions safe based on their perception of the environment, or actively leads the task. Using estimated human inputs, the robot plans a trajectory for the transported object by solving a constrained finite-time optimal control problem. Sensors on the robot measure the inputs applied by the human. The robot then applies a weighted combination of the human's applied inputs and its own planned inputs, where the weights are chosen based on the robot's trust in its estimates of the human's inputs. This allows for a dynamic leader-follower role adaptation of the robot throughout the task. Furthermore, under a low value of trust, if the robot approaches any obstacle potentially unknown to the human, it triggers a safe stopping policy, maintaining the safety of the system and signaling a required change in the human's intent. With experimental results, we demonstrate the efficacy of the proposed approach.
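The weighted combination of inputs described in the abstract can be sketched as a convex blend of two input vectors. This is only an illustration under stated assumptions: the function name `blend_inputs`, the single scalar weight `human_weight`, and the convex form are hypothetical, and the abstract does not specify how the trust value maps to the weights.

```python
def blend_inputs(u_human, u_robot, human_weight):
    """Convex combination of measured human inputs and planned robot inputs.

    human_weight in [0, 1] is assumed to be derived from the robot's trust
    value; that mapping is not given in the abstract and is left out here.
    """
    if not 0.0 <= human_weight <= 1.0:
        raise ValueError("human_weight must lie in [0, 1]")
    if len(u_human) != len(u_robot):
        raise ValueError("input vectors must have the same length")
    return [
        human_weight * h + (1.0 - human_weight) * r
        for h, r in zip(u_human, u_robot)
    ]


# Toy example: a weight of 0.25 on the human's input, 0.75 on the robot's plan.
applied = blend_inputs([1.0, 0.0], [0.0, 2.0], human_weight=0.25)
```

With a weight of 1.0 the robot purely follows the human's applied input, and with 0.0 it purely executes its own plan; intermediate values give the leader-follower adaptation a continuous range in this simplified picture.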

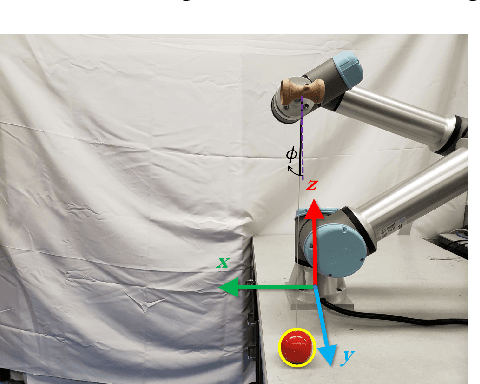

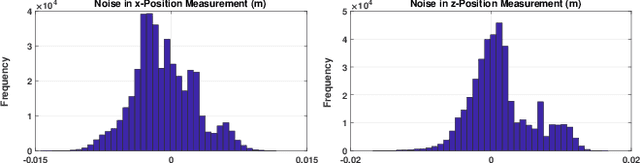

Learning to Play Cup-and-Ball with Noisy Camera Observations

Jul 19, 2020

Abstract:Playing the cup-and-ball game is an intriguing task for robotics research since it abstracts important problem characteristics including system nonlinearity, contact forces, and precise positioning as a terminal goal. In this paper, we present a learning model-based control strategy for the cup-and-ball game, where a Universal Robots UR5e manipulator arm learns to catch a ball in one of the cups on a Kendama. Our control problem is divided into two sub-tasks, namely $(i)$ swinging the ball up in a constrained motion, and $(ii)$ catching the free-falling ball. The swing-up trajectory is computed offline and applied in open loop to the arm. Subsequently, a convex optimization problem is solved online during the ball's free fall to control the manipulator and catch the ball. The controller utilizes noisy position feedback of the ball from an Intel RealSense D435 depth camera. We propose a novel iterative framework, where data is used to learn the support of the camera noise distribution iteratively in order to update the control policy. The probability of a catch with a fixed policy is computed empirically with a user-specified number of roll-outs. Our design guarantees that the probability of a catch increases in the limit, as the learned support nears the true support of the camera noise distribution. High-fidelity MuJoCo simulations and preliminary experimental results support our theoretical analysis.
