Tongling Wang

Generating Pseudo-labels Adaptively for Few-shot Model-Agnostic Meta-Learning

Jul 09, 2022

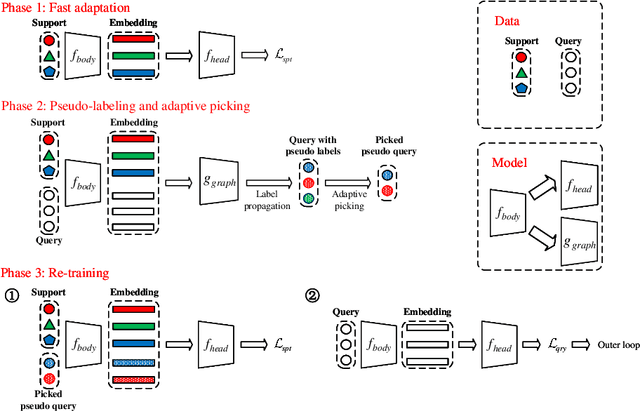

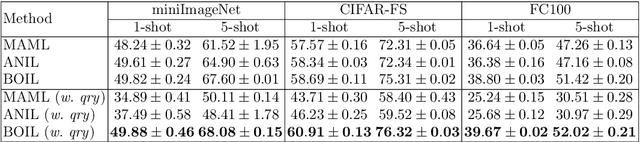

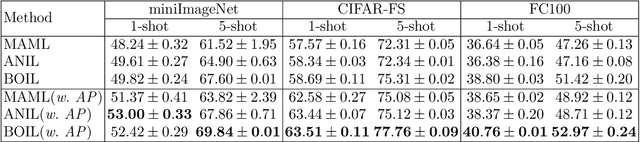

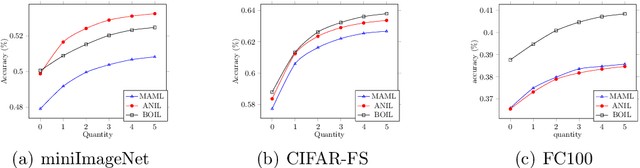

Abstract: Model-Agnostic Meta-Learning (MAML) is a well-known few-shot learning method that has inspired many follow-up efforts, such as ANIL and BOIL. However, as an inductive method, MAML cannot fully utilize the information in the query set, which limits its potential for better generalization. To address this issue, we propose a simple yet effective method that generates pseudo-labels adaptively and can boost the performance of the MAML family. The proposed methods, dubbed Generative Pseudo-label based MAML (GP-MAML), GP-ANIL and GP-BOIL, leverage statistics of the query set to improve performance on new tasks. Specifically, we adaptively assign pseudo-labels to query samples, pick a subset of them, and then re-train the model using the picked query samples together with the support set. The GP series can also use information from the pseudo-labeled query set to re-train the network during meta-testing, whereas some transductive methods, such as Transductive Propagation Network (TPN), struggle to achieve this.
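The select-then-retrain step described in the abstract can be illustrated with a minimal sketch. The abstract does not state the adaptive selection rule, so the fixed confidence threshold below is only a stand-in assumption, and the function names `select_pseudo_labeled` and `augment_support` are hypothetical rather than taken from the paper.

```python
import numpy as np


def select_pseudo_labeled(query_probs, threshold=0.9):
    """Pick query samples whose top predicted probability meets the threshold.

    ASSUMPTION: a fixed confidence threshold stands in for the paper's
    adaptive rule, which the abstract does not specify.
    Returns (indices of picked samples, their pseudo-labels).
    """
    query_probs = np.asarray(query_probs, dtype=float)
    pseudo_labels = query_probs.argmax(axis=1)  # most likely class per sample
    confidence = query_probs.max(axis=1)        # probability of that class
    picked = np.flatnonzero(confidence >= threshold)
    return picked, pseudo_labels[picked]


def augment_support(support_x, support_y, query_x, query_probs, threshold=0.9):
    """Append the picked, pseudo-labeled query samples to the support set."""
    picked, labels = select_pseudo_labeled(query_probs, threshold)
    aug_x = np.concatenate([support_x, query_x[picked]], axis=0)
    aug_y = np.concatenate([support_y, labels], axis=0)
    return aug_x, aug_y


# Toy 2-way task: 2 support samples, 3 query samples, 3 features each.
support_x = np.zeros((2, 3))
support_y = np.array([0, 1])
query_x = np.ones((3, 3))
query_probs = np.array([[0.95, 0.05], [0.60, 0.40], [0.10, 0.90]])

aug_x, aug_y = augment_support(support_x, support_y, query_x, query_probs)
```

In this toy task the second query sample falls below the threshold and is left out, so the augmented set holds four samples. The model would then be re-trained (the inner-loop adaptation in MAML terms) on `aug_x` and `aug_y` instead of the support set alone.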
