Tommaso Menara

Representation Learning for Context-Dependent Decision-Making

May 12, 2022

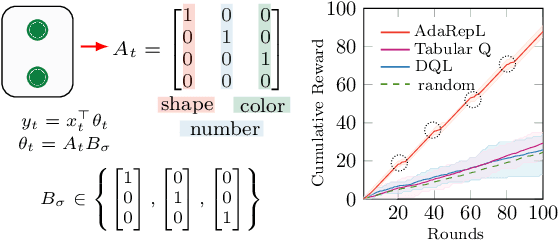

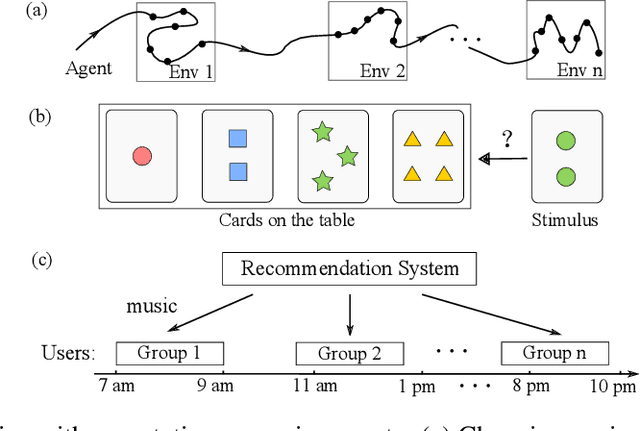

Abstract: Humans are capable of adjusting to changing environments flexibly and quickly. Empirical evidence has revealed that representation learning plays a crucial role in endowing humans with such a capability. Inspired by this observation, we study representation learning in the sequential decision-making scenario with contextual changes. We propose an online algorithm that is able to learn and transfer context-dependent representations and show that it significantly outperforms existing algorithms that do not learn representations adaptively. As a case study, we apply our algorithm to the Wisconsin Card Sorting Task, a well-established test for the mental flexibility of humans in sequential decision-making. By comparing our algorithm with the standard Q-learning and deep Q-learning algorithms, we demonstrate the benefits of adaptive representation learning.

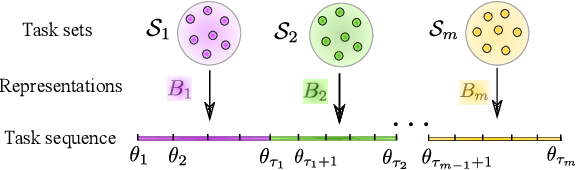

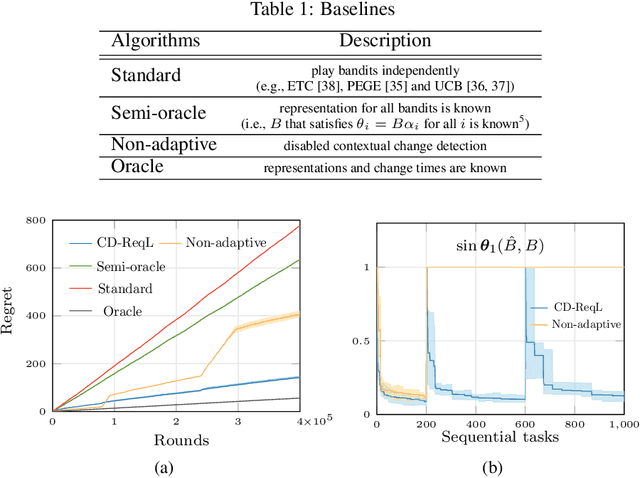

Non-Stationary Representation Learning in Sequential Linear Bandits

Jan 13, 2022

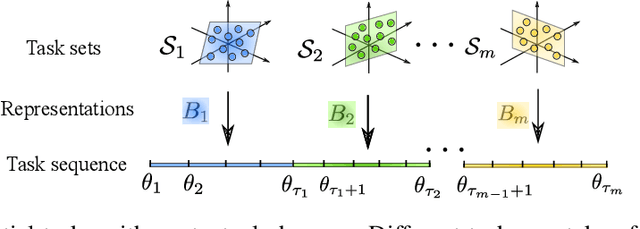

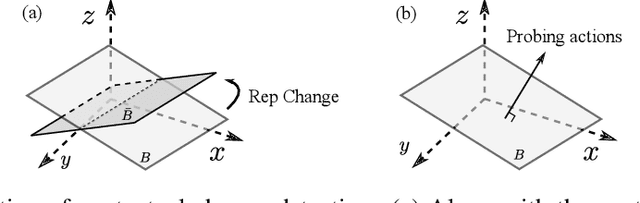

Abstract: In this paper, we study representation learning for multi-task decision-making in non-stationary environments. We consider the framework of sequential linear bandits, where the agent performs a series of tasks drawn from distinct sets associated with different environments. The embeddings of tasks in each set share a low-dimensional feature extractor called a representation, and representations differ across sets. We propose an online algorithm that facilitates efficient decision-making by learning and transferring non-stationary representations in an adaptive fashion. We prove that our algorithm significantly outperforms existing algorithms that treat tasks independently. We also conduct experiments using both synthetic and real data to validate our theoretical insights and demonstrate the efficacy of our algorithm.
