Tom Vermeire

How to choose an Explainability Method? Towards a Methodical Implementation of XAI in Practice

Jul 09, 2021

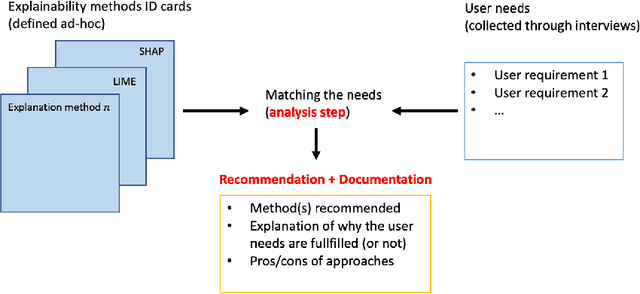

Abstract: Explainability is becoming an important requirement for organizations that use automated decision-making, due to regulatory initiatives and a shift in public awareness. Various and significantly different algorithmic methods to provide this explainability have been introduced in the field, but the existing literature in the machine learning community has paid little attention to the stakeholder, whose needs are instead studied in the human-computer interface community. As a result, organizations that want or need to provide this explainability are confronted with the problem of selecting an appropriate method for their use case. In this paper, we argue there is a need for a methodology to bridge the gap between stakeholder needs and explanation methods. We present our ongoing work on creating this methodology to help data scientists in the process of providing explainability to stakeholders. In particular, our contributions include documents used to characterize XAI methods and user requirements (shown in the Appendix), which our methodology builds upon.

Explainable Image Classification with Evidence Counterfactual

Apr 16, 2020

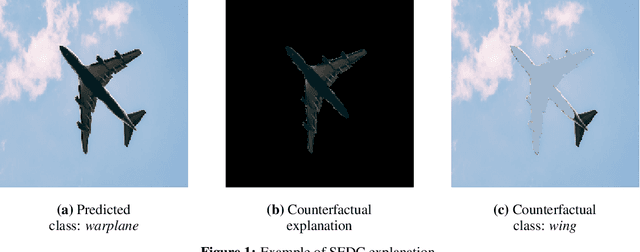

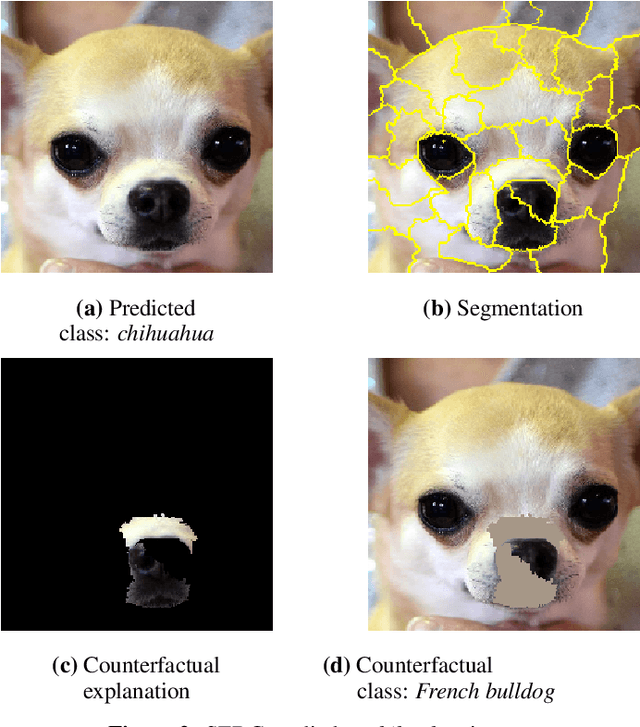

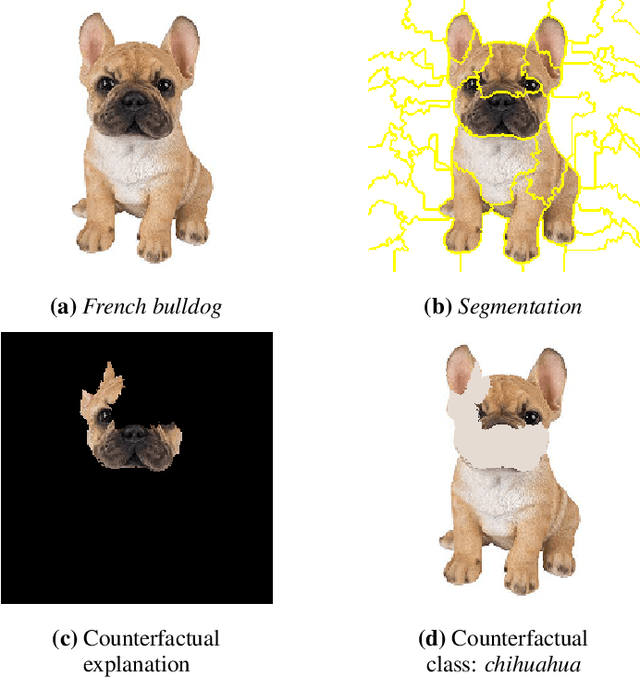

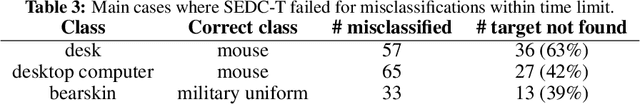

Abstract: The complexity of state-of-the-art modeling techniques for image classification impedes the ability to explain model predictions in an interpretable way. Existing explanation methods generally create importance rankings in terms of pixels or pixel groups. However, the resulting explanations lack an optimal size, do not consider feature dependence and are only related to one class. Counterfactual explanation methods are considered promising to explain complex model decisions, since they are associated with a high degree of human interpretability. In this paper, SEDC is introduced as a model-agnostic instance-level explanation method for image classification to obtain visual counterfactual explanations. For a given image, SEDC searches for a small set of segments whose removal alters the classification. As image classification tasks are typically multiclass problems, SEDC-T is proposed as an alternative method that allows specifying a target counterfactual class. We compare SEDC(-T) with popular feature importance methods such as LRP, LIME and SHAP, and we describe how the mentioned importance ranking issues are addressed. Moreover, concrete examples and experiments illustrate the potential of our approach (1) to obtain trust and insight, and (2) to obtain input for model improvement by explaining misclassifications.
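The search idea described in the abstract (find a small set of segments whose removal changes the predicted class) can be illustrated with a minimal sketch. This is not the authors' SEDC implementation: it is a simplified greedy search, and the names `greedy_counterfactual`, `predict_scores`, `max_removed` and the toy classifier `toy_scores` are hypothetical, introduced only for illustration. The classifier is assumed to be a black box that takes the set of removed segment indices and returns one score per class.

```python
from typing import Callable, FrozenSet, Optional, Sequence


def greedy_counterfactual(
    n_segments: int,
    predict_scores: Callable[[FrozenSet[int]], Sequence[float]],
    max_removed: Optional[int] = None,
) -> Optional[FrozenSet[int]]:
    """Greedy sketch: return a set of segment indices whose removal
    changes the predicted class, or None if none is found."""
    removed: FrozenSet[int] = frozenset()
    base_scores = predict_scores(removed)
    # Class predicted for the unmodified image.
    original_class = max(range(len(base_scores)), key=base_scores.__getitem__)
    limit = n_segments if max_removed is None else min(max_removed, n_segments)

    while len(removed) < limit:
        best_candidate = None
        best_score = float("inf")
        for seg in range(n_segments):
            if seg in removed:
                continue
            candidate = removed | {seg}
            scores = predict_scores(candidate)
            predicted = max(range(len(scores)), key=scores.__getitem__)
            if predicted != original_class:
                # Removing these segments flips the class: counterfactual found.
                return candidate
            # Otherwise remember the removal that hurts the original class most.
            if scores[original_class] < best_score:
                best_score = scores[original_class]
                best_candidate = candidate
        removed = best_candidate
    return None


# Hypothetical toy model: class 0 score is the summed weight of the
# segments still present; class 1 has a constant score.
WEIGHTS = [0.1, 0.5, 0.3, 0.1]


def toy_scores(removed: FrozenSet[int]) -> Sequence[float]:
    class0 = sum(w for i, w in enumerate(WEIGHTS) if i not in removed)
    return [class0, 0.45]


explanation = greedy_counterfactual(4, toy_scores)
print(sorted(explanation) if explanation is not None else None)
```

On this toy model no single segment flips the prediction, so the search first commits to the most influential segment and then finds a second one that completes the counterfactual. A target-class variant in the spirit of SEDC-T could, under the same assumptions, stop only when the predicted class equals a specified target.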
