Tom Bäckström

Performance and Complexity Trade-off Optimization of Speech Models During Training

Jan 21, 2026

Abstract: In speech machine learning, neural network models are typically designed by choosing an architecture with fixed layer sizes and structure. These models are then trained to maximize performance on metrics aligned with the task's objective. While the overall architecture is usually guided by prior knowledge of the task, the sizes of individual layers are often chosen heuristically. However, this approach does not guarantee an optimal trade-off between performance and computational complexity; consequently, post hoc methods such as weight quantization or model pruning are typically employed to reduce computational cost. This occurs because stochastic gradient descent (SGD) methods can only optimize differentiable functions, while factors influencing computational complexity, such as layer sizes and floating-point operations per second (FLOP/s), are non-differentiable and require modifying the model structure during training. We propose a reparameterization technique based on feature noise injection that enables joint optimization of performance and computational complexity during training using SGD-based methods. Unlike traditional pruning methods, our approach allows the model size to be dynamically optimized for a target performance-complexity trade-off, without relying on heuristic criteria to select which weights or structures to remove. We demonstrate the effectiveness of our method through three case studies, including a synthetic example and two practical real-world applications: voice activity detection and audio anti-spoofing. The code related to our work is publicly available to encourage further research.

DiVeQ: Differentiable Vector Quantization Using the Reparameterization Trick

Sep 30, 2025

Abstract: Vector quantization is common in deep models, yet its hard assignments block gradients and hinder end-to-end training. We propose DiVeQ, which treats quantization as adding an error vector that mimics the quantization distortion, keeping the forward pass hard while letting gradients flow. We also present a space-filling variant (SF-DiVeQ) that assigns to a curve constructed by the lines connecting codewords, resulting in less quantization error and full codebook usage. Both methods train end-to-end without requiring auxiliary losses or temperature schedules. On VQ-VAE compression and VQGAN generation across various data sets, they improve reconstruction and sample quality over alternative quantization approaches.

Privacy Disclosure of Similarity in Speech and Language Processing

Aug 07, 2025

Abstract: Speaker, author, and other biometric identification applications often compare a sample's similarity to a database of templates to determine the identity. Given that data may be noisy and similarity measures can be inaccurate, such a comparison may not reliably identify the true identity as the most similar. Still, even the similarity rank based on an inaccurate similarity measure can disclose private information about the true identity. We propose a methodology for quantifying the privacy disclosure of such a similarity rank by estimating its probability distribution. It is based on determining the histogram of the similarity rank of the true speaker, or when data is scarce, modeling the histogram with the beta-binomial distribution. We express the disclosure in terms of entropy (bits), such that the disclosure from independent features is additive. Our experiments demonstrate that all tested speaker and author characterizations contain personally identifying information (PII) that can aid in identification, with embeddings from speaker recognition algorithms containing the most information, followed by phone embeddings, linguistic embeddings, and fundamental frequency. Our initial experiments show that the disclosure of PII increases with the length of test samples, but it is bounded by the length of database templates. The provided metric, similarity rank disclosure, provides a way to compare the disclosure of PII between biometric features and merge them to aid identification. It can thus aid in the holistic evaluation of threats to privacy in speech and other biometric technologies.
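As a rough illustration of measuring disclosure in bits from a rank histogram, the sketch below assumes one plausible reading of the abstract: disclosure is the drop from a uniform prior over the N possible ranks (log2 N bits) to the entropy of the empirical rank distribution. The function names `rank_entropy_bits` and `rank_disclosure_bits` are hypothetical, and the paper's exact definition may differ.

```python
import math


def rank_entropy_bits(rank_counts):
    """Shannon entropy (in bits) of an empirical rank histogram."""
    total = sum(rank_counts)
    if total <= 0:
        raise ValueError("histogram must contain at least one observation")
    entropy = 0.0
    for count in rank_counts:
        if count > 0:
            p = count / total
            entropy -= p * math.log2(p)
    return entropy


def rank_disclosure_bits(rank_counts):
    """Assumed disclosure: uniform-prior entropy minus rank entropy.

    rank_counts[k] is how often the true identity was observed at
    similarity rank k among len(rank_counts) possible ranks.
    """
    prior_bits = math.log2(len(rank_counts))
    return prior_bits - rank_entropy_bits(rank_counts)


# A flat histogram reveals nothing; a histogram concentrated on one
# rank reveals the full log2(N) bits.
uninformative = rank_disclosure_bits([5, 5, 5, 5])
fully_informative = rank_disclosure_bits([10, 0, 0, 0])
```

Under this assumed definition, the disclosures of two independent features can simply be summed, which matches the additivity property stated in the abstract.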

Good practices for evaluation of machine learning systems

Dec 04, 2024

Abstract: Many development decisions affect the results obtained from ML experiments: training data, features, model architecture, hyperparameters, test data, etc. Among these aspects, arguably the most important design decisions are those that involve the evaluation procedure. This procedure is what determines whether the conclusions drawn from the experiments will or will not generalize to unseen data and whether they will be relevant to the application of interest. If the data is incorrectly selected, the wrong metric is chosen for evaluation, or the significance of the comparisons between models is overestimated, conclusions may be misleading or result in suboptimal development decisions. To avoid such problems, the evaluation protocol should be very carefully designed before experimentation starts. In this work we discuss the main aspects involved in the design of the evaluation protocol: data selection, metric selection, and statistical significance. This document is not meant to be an exhaustive tutorial on each of these aspects. Instead, the goal is to explain the main guidelines that should be followed in each case. We include examples taken from the speech processing field, and provide a list of common mistakes related to each aspect.

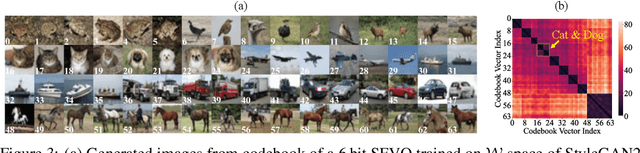

Unsupervised Panoptic Interpretation of Latent Spaces in GANs Using Space-Filling Vector Quantization

Oct 27, 2024

Abstract: Generative adversarial networks (GANs) learn a latent space whose samples can be mapped to real-world images. Such latent spaces are difficult to interpret. Some earlier supervised methods aim to create an interpretable latent space or discover interpretable directions, but they require data labels or annotated synthesized samples for training. In contrast, we propose using a modification of vector quantization called space-filling vector quantization (SFVQ), which quantizes the data on a piece-wise linear curve. SFVQ can capture the underlying morphological structure of the latent space and thus make it interpretable. We apply this technique to model the latent space of pretrained StyleGAN2 and BigGAN networks on various datasets. Our experiments show that the SFVQ curve yields a general interpretable model of the latent space that determines which part of the latent space corresponds to what specific generative factors. Furthermore, we demonstrate that each line of SFVQ's curve can potentially refer to an interpretable direction for applying intelligible image transformations. We also show that the points located on an SFVQ line can be used for controllable data augmentation.
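To make "quantizing on a piece-wise linear curve" concrete, here is a minimal geometric sketch of the assignment step only: a point is mapped to its nearest location on the polyline that connects consecutive codewords. It assumes the codewords are already ordered along the curve and says nothing about how SFVQ learns them; the function names `project_to_segment` and `quantize_to_curve` are hypothetical.

```python
def project_to_segment(x, a, b):
    """Closest point to x on the segment from a to b.

    Returns (point, t, squared_distance), where t in [0, 1] is the
    position along the segment (0 at a, 1 at b).
    """
    ab = [bi - ai for ai, bi in zip(a, b)]
    ax = [xi - ai for ai, xi in zip(a, x)]
    denom = sum(d * d for d in ab)
    if denom == 0.0:
        t = 0.0  # degenerate segment: both endpoints coincide
    else:
        t = sum(p * q for p, q in zip(ax, ab)) / denom
        t = max(0.0, min(1.0, t))  # clamp onto the segment
    point = [ai + t * d for ai, d in zip(a, ab)]
    dist2 = sum((xi - pi) ** 2 for xi, pi in zip(x, point))
    return point, t, dist2


def quantize_to_curve(x, codewords):
    """Map x to the nearest point on the polyline through codewords.

    Returns (segment_index, t, point); the first segment wins ties.
    """
    if len(codewords) < 2:
        raise ValueError("need at least two codewords to form a curve")
    best = None
    for i in range(len(codewords) - 1):
        point, t, dist2 = project_to_segment(x, codewords[i], codewords[i + 1])
        if best is None or dist2 < best[3]:
            best = (i, t, point, dist2)
    return best[0], best[1], best[2]


# Hypothetical 2-D curve with three ordered codewords.
curve = [[0.0, 0.0], [2.0, 0.0], [2.0, 2.0]]
segment, position, quantized = quantize_to_curve([1.0, 0.5], curve)
```

The returned pair (segment index, position along the segment) gives every quantized point a one-dimensional coordinate along the curve, which is one way to think about walking along an SFVQ line as described in the abstract.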

Privacy in Speech Technology

May 09, 2023

Abstract: Speech technology for communication, accessing information and services has rapidly improved in quality. It is convenient and appealing because speech is the primary mode of communication for humans. Such technology, however, also presents proven threats to privacy. Speech is a tool for communication and it will thus inherently contain private information. Importantly, however, it also contains a wealth of side information, such as information related to health, emotions, affiliations, and relationships, all of which are private. Exposing such private information can lead to serious threats such as price gouging, harassment, extortion, and stalking. This paper is a tutorial on privacy issues related to speech technology, modeling their threats, approaches for protecting users' privacy, measuring the performance of privacy-protecting methods, perception of privacy as well as societal and legal consequences. In addition to a tutorial overview, it also presents lines for further development where improvements are most urgently needed.
