Mohammad Hassan Vali

Sparsely Supervised Diffusion

Feb 02, 2026

Abstract: Diffusion models have shown remarkable success across a wide range of generative tasks. However, they often suffer from spatially inconsistent generation, arguably due to the inherent locality of their denoising mechanisms. This can yield samples that are locally plausible but globally inconsistent. To mitigate this issue, we propose sparsely supervised learning for diffusion models, a simple yet effective masking strategy that can be implemented with only a few lines of code. Interestingly, the experiments show that it is safe to mask up to 98% of pixels during diffusion model training. Our method delivers competitive FID scores across experiments and, most importantly, avoids training instability on small datasets. Moreover, the masking strategy reduces memorization and promotes the use of essential contextual information during generation.
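As a rough illustration of what masking most pixels out of the training loss could look like, the sketch below computes a mean squared error over a small random subset of positions. The abstract does not say how the mask is drawn or where it is applied, so the uniform random mask, the flat pixel list, and the names `sparse_masked_mse` and `keep_fraction` are all assumptions made for this example.

```python
import random


def sparse_masked_mse(pred, target, keep_fraction=0.02, rng=None):
    """Mean squared error over a random subset of positions.

    keep_fraction=0.02 supervises 2% of positions, i.e. masks 98%.
    The uniform random mask is an assumption, not the paper's recipe.
    """
    rng = rng or random.Random(0)
    n = len(pred)
    n_keep = max(1, round(keep_fraction * n))
    # Indices of the positions that still contribute to the loss.
    kept = rng.sample(range(n), n_keep)
    loss = sum((pred[i] - target[i]) ** 2 for i in kept) / n_keep
    return loss, kept


# Toy "image" flattened to 100 pixels: every prediction is off by 1.
loss, kept = sparse_masked_mse([0.0] * 100, [1.0] * 100)
print(loss, len(kept))
```

In a real training loop the same idea would be applied to the denoising loss tensor, with a fresh mask drawn per sample.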

Self-Attention Decomposition For Training Free Diffusion Editing

Oct 26, 2025

Abstract: Diffusion models achieve remarkable fidelity in image synthesis, yet precise control over their outputs for targeted editing remains challenging. A key step toward controllability is to identify interpretable directions in the model's latent representations that correspond to semantic attributes. Existing approaches for finding interpretable directions typically rely on sampling large sets of images or training auxiliary networks, which limits efficiency. We propose an analytical method that derives semantic editing directions directly from the pretrained parameters of diffusion models, requiring neither additional data nor fine-tuning. Our insight is that self-attention weight matrices encode rich structural information about the data distribution learned during training. By computing the eigenvectors of these weight matrices, we obtain robust and interpretable editing directions. Experiments demonstrate that our method produces high-quality edits across multiple datasets while reducing editing time by 60% relative to current benchmarks.
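To make the idea of reading a direction off a weight matrix concrete, here is a minimal sketch that finds the dominant eigenvector of a small symmetric matrix by power iteration and shifts a feature vector along it. Which attention matrices are used, how they are combined, and where the shift is applied are not stated in the abstract; the toy matrix, the symmetric form, and the helper names `top_eigenvector` and `shift_along` are assumptions for illustration only.

```python
import math


def matvec(m, v):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def top_eigenvector(m, iters=200):
    """Dominant eigenvector of a symmetric matrix via power iteration."""
    n = len(m)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iters):
        w = matvec(m, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


def shift_along(z, direction, strength):
    """Move a feature vector along a direction (hypothetical edit step)."""
    return [zi + strength * di for zi, di in zip(z, direction)]


# Toy stand-in for a symmetric matrix built from attention weights.
toy_matrix = [[2.0, 0.0], [0.0, 1.0]]
direction = top_eigenvector(toy_matrix)
edited = shift_along([0.0, 0.0], direction, strength=2.0)
print(direction, edited)
```

Because the direction comes from the parameters alone, no image sampling or auxiliary training is needed in this sketch, which mirrors the efficiency argument in the abstract.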

DiVeQ: Differentiable Vector Quantization Using the Reparameterization Trick

Sep 30, 2025

Abstract: Vector quantization is common in deep models, yet its hard assignments block gradients and hinder end-to-end training. We propose DiVeQ, which treats quantization as adding an error vector that mimics the quantization distortion, keeping the forward pass hard while letting gradients flow. We also present a space-filling variant (SF-DiVeQ) that assigns to a curve constructed by the lines connecting codewords, resulting in less quantization error and full codebook usage. Both methods train end-to-end without requiring auxiliary losses or temperature schedules. On VQ-VAE compression and VQGAN generation across various data sets, they improve reconstruction and sample quality over alternative quantization approaches.
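The "quantization as an added error vector" view can be sketched for the forward pass alone: the quantized output is the input plus the vector that carries it to its nearest codeword. The sketch below shows only this decomposition. How DiVeQ constructs the error vector so that gradients flow requires an autodiff framework and details not given in the abstract, so none of that is attempted here; the function names are hypothetical.

```python
def nearest_codeword(z, codebook):
    """Index and value of the codeword closest to z (squared Euclidean)."""
    dists = [sum((zi - ci) ** 2 for zi, ci in zip(z, c)) for c in codebook]
    idx = dists.index(min(dists))
    return idx, codebook[idx]


def quantize_as_additive_error(z, codebook):
    """Hard quantization written as z plus an error vector."""
    idx, c = nearest_codeword(z, codebook)
    # Error vector that moves z exactly onto its nearest codeword.
    error = [ci - zi for zi, ci in zip(z, c)]
    z_q = [zi + ei for zi, ei in zip(z, error)]
    return idx, error, z_q


codebook = [[0.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
idx, error, z_q = quantize_as_additive_error([0.25, 0.75], codebook)
print(idx, error, z_q)
```

The forward output equals the selected codeword, so the assignment stays hard; the trainable part of the method lies in how that error term is treated during backpropagation.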

Privacy Disclosure of Similarity in Speech and Language Processing

Aug 07, 2025

Abstract: Speaker, author, and other biometric identification applications often compare a sample's similarity to a database of templates to determine the identity. Given that data may be noisy and similarity measures can be inaccurate, such a comparison may not reliably identify the true identity as the most similar. Still, even the similarity rank based on an inaccurate similarity measure can disclose private information about the true identity. We propose a methodology for quantifying the privacy disclosure of such a similarity rank by estimating its probability distribution. It is based on determining the histogram of the similarity rank of the true speaker, or when data is scarce, modeling the histogram with the beta-binomial distribution. We express the disclosure in terms of entropy (bits), such that the disclosures from independent features are additive. Our experiments demonstrate that all tested speaker and author characterizations contain personally identifying information (PII) that can aid in identification, with embeddings from speaker recognition algorithms containing the most information, followed by phone embeddings, linguistic embeddings, and fundamental frequency. Our initial experiments show that the disclosure of PII increases with the length of test samples, but it is bounded by the length of database templates. The proposed metric, similarity rank disclosure, offers a way to compare the disclosure of PII between biometric features and merge them to aid identification. It can thus aid in the holistic evaluation of threats to privacy in speech and other biometric technologies.
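One way to picture an entropy-based disclosure measure is to compare the entropy of the observed rank histogram with the entropy of a uniform rank, which is what an uninformative feature would produce. The sketch below does exactly that. The abstract does not give the paper's formula, so treating disclosure as this entropy reduction is an assumption, as are the function names; the beta-binomial fit for scarce data is not shown.

```python
import math


def entropy_bits(counts):
    """Shannon entropy (in bits) of a histogram given as raw counts."""
    total = sum(counts)
    probs = [c / total for c in counts if c > 0]
    return sum(-p * math.log2(p) for p in probs)


def rank_entropy_reduction(rank_counts):
    """Bits by which the rank histogram falls below a uniform rank.

    Illustrative measure only: 0 bits means the true identity's rank is
    uniformly spread (nothing learned); log2(N) bits means the rank is
    always the same (fully identifying among N templates).
    """
    n_ranks = len(rank_counts)
    return math.log2(n_ranks) - entropy_bits(rank_counts)


# Histogram of the true speaker's rank over 4 database templates.
print(rank_entropy_reduction([4, 0, 0, 0]))  # rank always 1
print(rank_entropy_reduction([1, 1, 1, 1]))  # rank uniformly spread
```

Expressing the result in bits is what makes contributions from independent features additive, as the abstract notes.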

Compressing 3D Gaussian Splatting by Noise-Substituted Vector Quantization

Apr 08, 2025

Abstract: 3D Gaussian Splatting (3DGS) has demonstrated remarkable effectiveness in 3D reconstruction, achieving high-quality results with real-time radiance field rendering. However, a key challenge is the substantial storage cost: reconstructing a single scene typically requires millions of Gaussian splats, each represented by 59 floating-point parameters, resulting in approximately 1 GB of memory. To address this challenge, we propose a compression method that builds separate attribute codebooks and stores only discrete code indices. Specifically, we employ a noise-substituted vector quantization technique to jointly train the codebooks and model features, ensuring consistency between gradient descent optimization and parameter discretization. Our method reduces memory consumption by around $45\times$ while maintaining competitive reconstruction quality on standard 3D benchmark scenes. Experiments on different codebook sizes show the trade-off between compression ratio and image quality. Furthermore, the trained compressed model remains fully compatible with popular 3DGS viewers and enables faster rendering, making it well-suited for practical applications.
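The storage figures in the abstract can be checked with a short back-of-the-envelope calculation. The sketch below assumes 32-bit floats (4 bytes per parameter) and a scene of 4 million splats; neither number is stated in the abstract, which only says "millions" of splats and 59 parameters each.

```python
def raw_size_bytes(num_splats, params_per_splat=59, bytes_per_param=4):
    """Uncompressed size of a 3DGS scene, assuming 32-bit floats."""
    return num_splats * params_per_splat * bytes_per_param


def compressed_size_bytes(raw_bytes, ratio=45):
    """Size after applying the reported compression ratio."""
    return raw_bytes / ratio


num_splats = 4_000_000  # assumed scene size for illustration
raw = raw_size_bytes(num_splats)
print(f"raw:        {raw / 1e6:.0f} MB")
print(f"compressed: {compressed_size_bytes(raw) / 1e6:.1f} MB")
```

Under these assumptions each splat takes 236 bytes, so a few million splats land close to the 1 GB quoted above, and a 45-fold reduction brings the scene down to roughly 20 MB.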

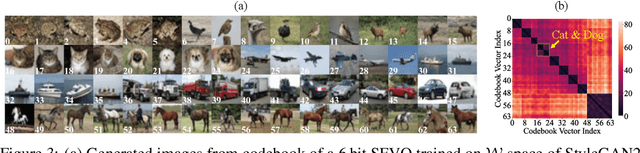

Unsupervised Panoptic Interpretation of Latent Spaces in GANs Using Space-Filling Vector Quantization

Oct 27, 2024

Abstract: Generative adversarial networks (GANs) learn a latent space whose samples can be mapped to real-world images. Such latent spaces are difficult to interpret. Some earlier supervised methods aim to create an interpretable latent space or discover interpretable directions, but they require data labels or annotated synthesized samples for training. In contrast, we propose using a modification of vector quantization called space-filling vector quantization (SFVQ), which quantizes the data on a piece-wise linear curve. SFVQ can capture the underlying morphological structure of the latent space and thus make it interpretable. We apply this technique to model the latent space of pretrained StyleGAN2 and BigGAN networks on various datasets. Our experiments show that the SFVQ curve yields a general interpretable model of the latent space that determines which part of the latent space corresponds to which generative factors. Furthermore, we demonstrate that each line of SFVQ's curve can potentially refer to an interpretable direction for applying intelligible image transformations. We also show that the points located on an SFVQ line can be used for controllable data augmentation.
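Quantizing onto a piece-wise linear curve, as opposed to onto isolated codewords, can be sketched as projecting a point onto every line segment between consecutive codewords and keeping the closest projection. The sketch below assumes the codewords are already ordered along the curve and covers only this assignment step, not how SFVQ learns the codewords; the function names are hypothetical.

```python
def project_to_segment(p, a, b):
    """Closest point to p on the line segment from a to b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    denom = sum(x * x for x in ab)
    t = 0.0 if denom == 0 else sum(x * y for x, y in zip(ap, ab)) / denom
    t = min(1.0, max(0.0, t))  # clamp so the point stays on the segment
    return [ai + t * x for ai, x in zip(a, ab)]


def quantize_to_curve(p, codewords):
    """Map p to the nearest point on the curve through the codewords.

    Returns the index of the chosen segment and the projected point.
    """
    best = None
    for i in range(len(codewords) - 1):
        q = project_to_segment(p, codewords[i], codewords[i + 1])
        d = sum((pi - qi) ** 2 for pi, qi in zip(p, q))
        if best is None or d < best[0]:
            best = (d, i, q)
    return best[1], best[2]


curve = [[0.0, 0.0], [2.0, 0.0], [2.0, 2.0]]
print(quantize_to_curve([1.0, 0.5], curve))
```

The returned segment index is what makes the curve usable for interpretation: each segment is a candidate direction, and points along it give intermediate samples.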
