Tochukwu Onyeogulu

Identification of Cognitive Workload during Surgical Tasks with Multimodal Deep Learning

Sep 12, 2022

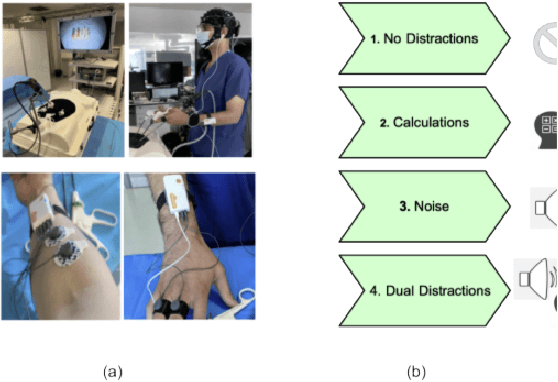

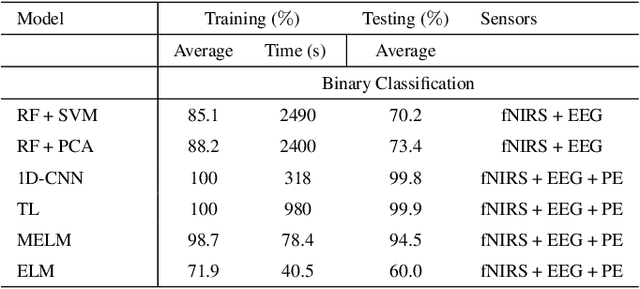

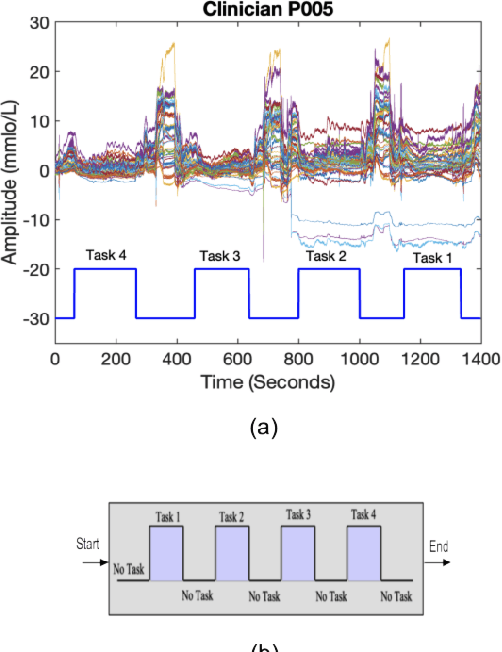

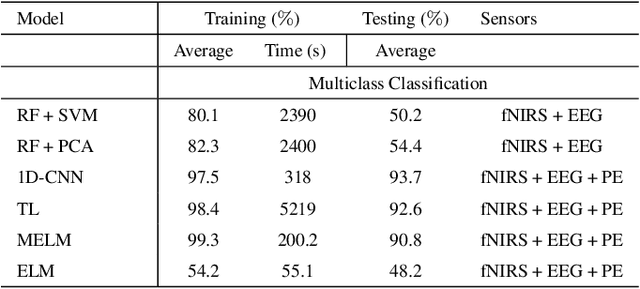

Abstract: In operating rooms (ORs), activities usually differ from those of other typical working environments. In particular, surgeons are frequently exposed to multiple psycho-organizational constraints that may have negative repercussions on their health and performance. This is commonly attributed to an increase in the associated Cognitive Workload (CWL) that results from dealing with unexpected and repetitive tasks, as well as large amounts of information and potentially risky cognitive overload. In this paper, a cascade of two machine learning approaches is suggested for the multimodal recognition of CWL in four different surgical tasks. First, a model based on the concept of transfer learning is used to identify whether a surgeon is experiencing any CWL. Second, a Convolutional Neural Network (CNN) uses this information to identify the different types of CWL associated with each surgical task. The suggested multimodal approach considers adjacent signals from electroencephalogram (EEG), functional near-infrared spectroscopy (fNIRS) and pupil diameter. The concatenation of signals allows complex correlations in terms of time (temporal) and channel location (spatial) to be captured. Data collection is performed by the Multi-sensing AI Environment for Surgical Task & Role Optimisation platform (MAESTRO) developed at HARMS Lab. To compare the performance of the proposed methodology, a number of state-of-the-art machine learning techniques have been implemented. The tests show that the proposed model has a precision of 93%.
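The two ideas in this abstract, concatenating signals across modalities and cascading a detector into a classifier, can be illustrated with a minimal sketch. It is not the authors' implementation. The function names, the channels-by-samples layout, and the gating rule (the second stage runs only when the first stage reports CWL) are assumptions made for illustration, and the sketch assumes all signals have already been resampled to a common length.

```python
from typing import Callable, List, Optional

# A window is a list of channels, each a list of time samples (assumed layout).
Window = List[List[float]]


def concat_modalities(eeg: Window, fnirs: Window, pupil: Window) -> Window:
    """Stack the channels of all modalities into one (channels x time) window.

    Assumes each modality has at least one channel and that every channel
    was resampled to the same number of samples beforehand.
    """
    n_samples = len(eeg[0])
    for name, signal in (("eeg", eeg), ("fnirs", fnirs), ("pupil", pupil)):
        if any(len(channel) != n_samples for channel in signal):
            raise ValueError(f"{name} channels must all have {n_samples} samples")
    return eeg + fnirs + pupil


def cascade_predict(
    window: Window,
    detect_cwl: Callable[[Window], bool],    # hypothetical stage 1: any CWL present?
    classify_task: Callable[[Window], int],  # hypothetical stage 2: which task's CWL?
) -> Optional[int]:
    """Run stage 2 only if stage 1 reports workload; otherwise return None."""
    if not detect_cwl(window):
        return None
    return classify_task(window)
```

In practice the two callables would wrap trained models; here they are placeholders, so any function with a matching signature can be passed in.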

Situation Awareness for Automated Surgical Check-listing in AI-Assisted Operating Room

Sep 12, 2022

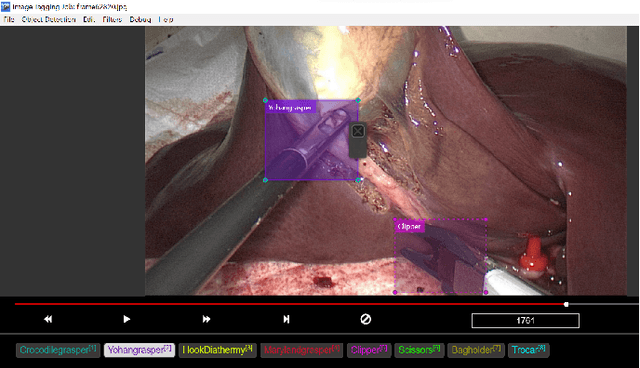

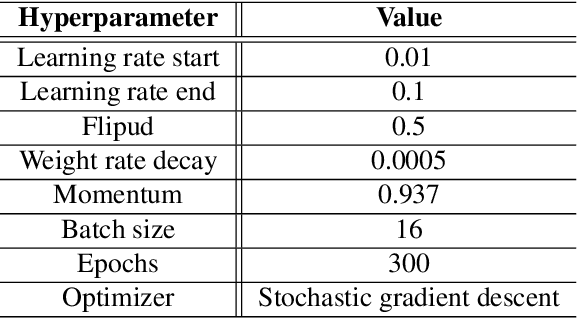

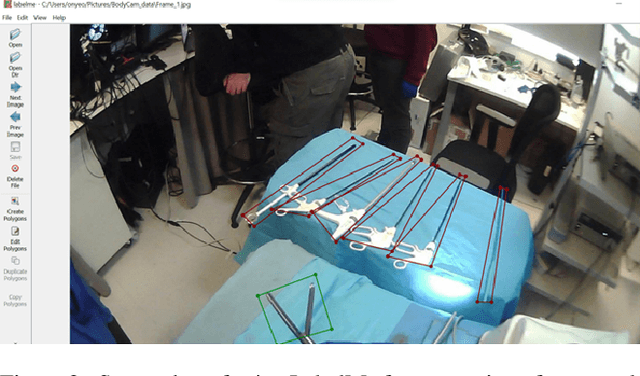

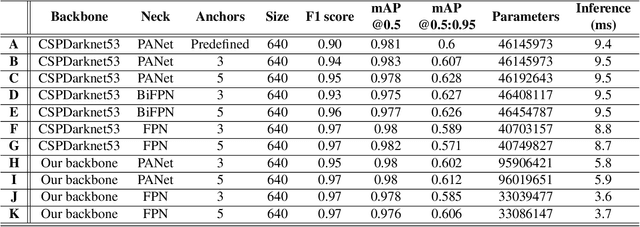

Abstract: A growing number of surgical procedures are performed using minimally invasive surgery (MIS). This is due to its many benefits, such as minimal post-operative problems, less bleeding, minor scarring, and a speedy recovery. However, the constrained field of view, small operating room, and indirect viewing of the operating scene in MIS could lead to surgical tools colliding and potentially harming human organs or tissues. Therefore, using an endoscopic video feed to detect and monitor surgical instruments in real time can considerably reduce MIS problems and increase the accuracy and success rates of surgical procedures. In this paper, a set of improvements made to the YOLOv5 object detector to enhance the detection of surgical instruments was investigated, analyzed, and evaluated. To do this, we performed performance-based ablation studies, explored the impact of altering the backbone, neck, and anchor structural elements of the YOLOv5 model, and annotated a unique endoscope dataset. Additionally, we compared the effectiveness of our ablation investigations with that of four additional state-of-the-art (SOTA) object detectors (YOLOv7, YOLOR, Scaled-YOLOv4 and YOLOv3-SPP). In experiments on our new endoscope dataset, our top refined model surpassed all of the benchmark models, including the original YOLOv5, except for YOLOv3-SPP, which had the same model performance of 98.3% in mAP and a similar inference speed.
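The mAP figure quoted above rests on intersection over union (IoU), the standard overlap measure used to decide whether a predicted box matches a ground-truth box. The following is a minimal sketch of that building block only, not the paper's evaluation code. The `(x1, y1, x2, y2)` corner format and the function name are assumptions.

```python
from typing import Tuple

# Axis-aligned box as (x1, y1, x2, y2) with x1 <= x2 and y1 <= y2 (assumed format).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    # Width and height of the overlap region, clamped to zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    # Degenerate boxes with zero area yield zero overlap by convention here.
    return inter / union if union > 0 else 0.0
```

A detection is typically counted as correct when its IoU with a ground-truth box exceeds a chosen threshold; mAP then averages precision over recall levels and classes.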
