Tingxun Lv

Adversarial Contrastive Self-Supervised Learning

Feb 26, 2022

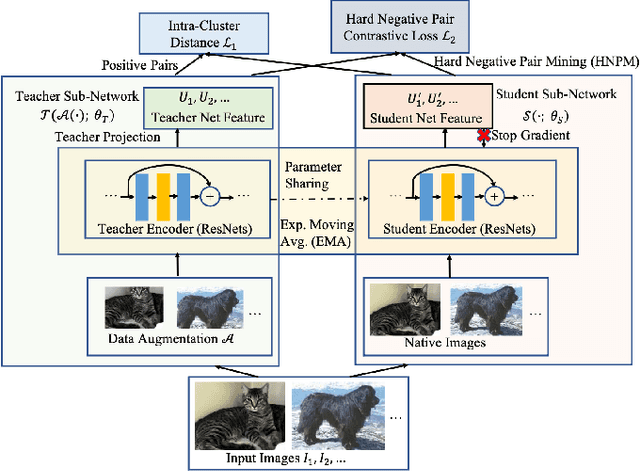

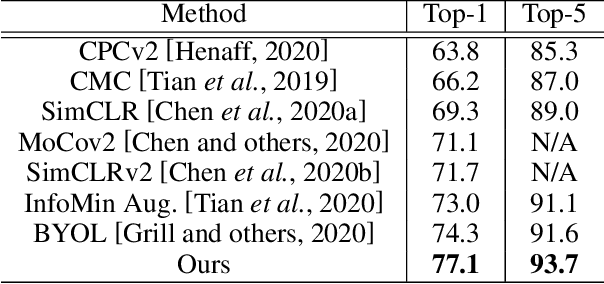

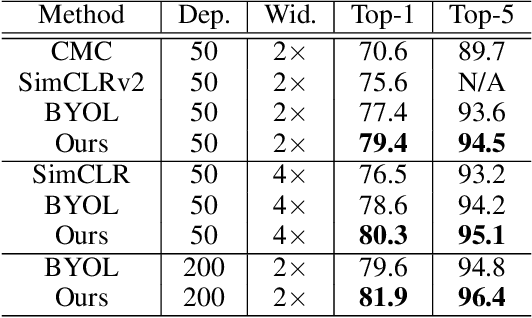

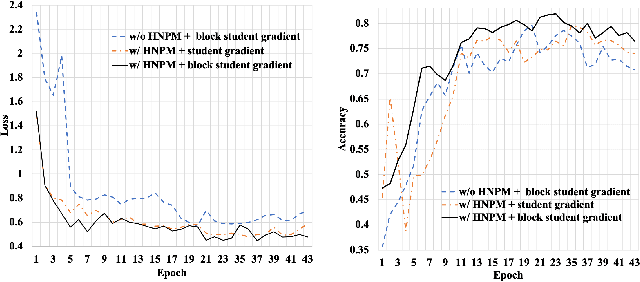

Abstract: Recently, learning from vast amounts of unlabeled data, especially self-supervised learning, has emerged and attracted widespread attention. Self-supervised learning followed by supervised fine-tuning on a few labeled examples can significantly improve label efficiency and outperform standard supervised training using fully annotated data. In this work, we present a novel self-supervised deep learning paradigm based on online hard negative pair mining. Specifically, we design a student-teacher network to generate multiple views of the data for self-supervised learning and integrate hard negative pair mining into the training. We then derive a new triplet-like loss that considers both positive sample pairs and mined hard negative sample pairs. Extensive experiments on ILSVRC-2012 demonstrate the effectiveness of the proposed method and its components.
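
The idea of a triplet-like loss with online hard negative mining can be illustrated with a minimal sketch. This is not the paper's formulation: the function names, the use of cosine similarity, the hinge form, and the `margin` value are all assumptions chosen for illustration. For each sample, the positive pair is the student and teacher embeddings of the same sample, and the hard negative is the teacher embedding of a different sample in the batch that is most similar to the student embedding.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def hard_negative_triplet_loss(student, teacher, margin=0.5):
    """Illustrative triplet-like loss (hypothetical, not the paper's exact loss).

    student[i] and teacher[i] are embeddings of two views of sample i.
    The batch must contain at least two samples so a negative exists.
    """
    n = len(student)
    total = 0.0
    for i in range(n):
        # Positive pair: two views of the same sample.
        pos = cosine(student[i], teacher[i])
        # Online hard negative: the most similar embedding of another sample.
        neg = max(cosine(student[i], teacher[j]) for j in range(n) if j != i)
        # Hinge: penalize when the hard negative is within `margin` of the positive.
        total += max(0.0, margin + neg - pos)
    return total / n


# Views agree and samples are orthogonal, so no triplet violates the margin.
aligned = hard_negative_triplet_loss([[1.0, 0.0], [0.0, 1.0]],
                                     [[1.0, 0.0], [0.0, 1.0]])
# Views are swapped, so each hard negative is more similar than the positive.
swapped = hard_negative_triplet_loss([[1.0, 0.0], [0.0, 1.0]],
                                     [[0.0, 1.0], [1.0, 0.0]])
```

In this sketch only the single hardest negative per sample contributes to the loss, which is one common way to mine negatives within a batch; the paper may select or weight negatives differently.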

Shifted Chunk Transformer for Spatio-Temporal Representational Learning

Aug 27, 2021

Abstract: Spatio-temporal representational learning has been widely adopted in various fields such as action recognition, video object segmentation, and action anticipation. Previous spatio-temporal representational learning approaches primarily employ ConvNets or sequential models, e.g., LSTM, to learn the intra-frame and inter-frame features. Recently, Transformer models have come to dominate the study of natural language processing (NLP), image classification, etc. However, pure-Transformer based spatio-temporal learning can be prohibitively costly in memory and computation when extracting fine-grained features from a tiny patch. To tackle the training difficulty and enhance the spatio-temporal learning, we construct a shifted chunk Transformer with pure self-attention blocks. Leveraging the recent efficient Transformer design in NLP, this shifted chunk Transformer can learn hierarchical spatio-temporal features from a local tiny patch to a global video clip. Our shifted self-attention can also effectively model complicated inter-frame variances. Furthermore, we build a clip encoder based on Transformer to model long-term temporal dependencies. We conduct thorough ablation studies to validate each component and hyper-parameter in our shifted chunk Transformer, and it outperforms previous state-of-the-art approaches on Kinetics-400, Kinetics-600, UCF101, and HMDB51. Code and trained models will be released.