Tingji Zhang

OpenAttack: An Open-source Textual Adversarial Attack Toolkit

Sep 19, 2020

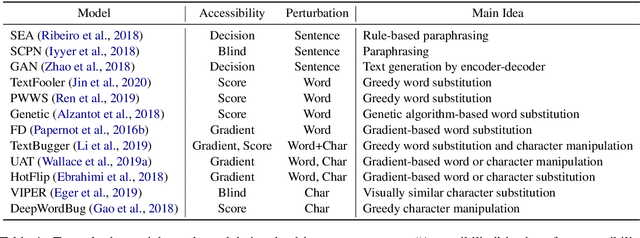

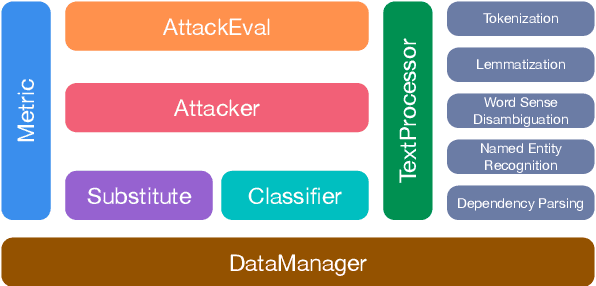

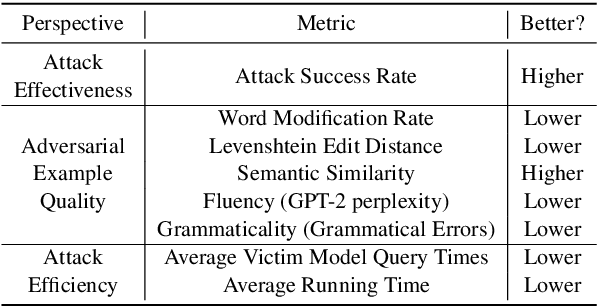

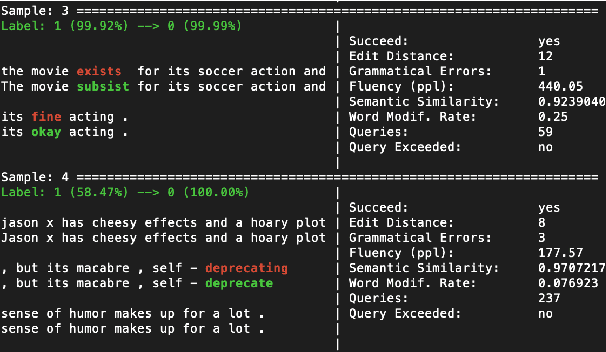

Abstract: Textual adversarial attacking has received wide and increasing attention in recent years. Various attack models have been proposed, but they differ widely from one another and are implemented with different programming frameworks and settings. These differences hinder quick use and fair comparison of attack models. In this paper, we present an open-source textual adversarial attack toolkit named OpenAttack. It currently has 12 typical attack models built in, which together cover all attack types. Its highly inclusive modular design not only supports quick use of existing attack models, but also provides great flexibility and extensibility. OpenAttack has broad uses, including comparing and evaluating attack models, measuring the robustness of a victim model, assisting in the development of new attack models, and adversarial training. Source code, built-in models, and documentation can be obtained at https://github.com/thunlp/OpenAttack.
