Tianzhe Wang

M3C: A Framework towards Convergent, Flexible, and Unsupervised Learning of Mixture Graph Matching and Clustering

Oct 27, 2023

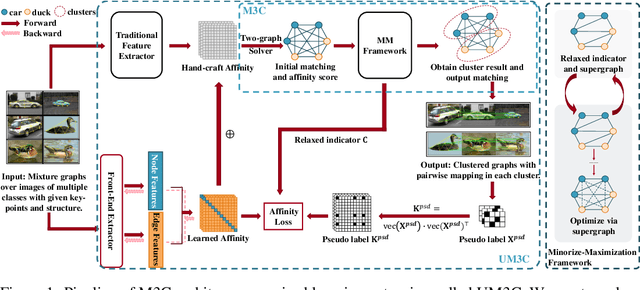

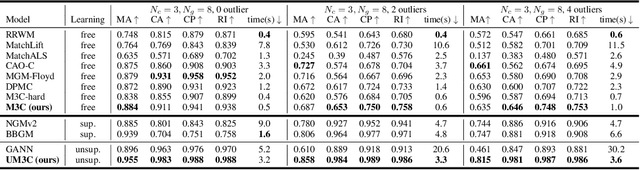

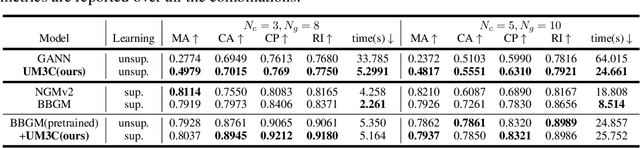

Abstract: Existing graph matching methods typically assume that graphs share similar structures and are matchable. However, these assumptions do not align with real-world applications. This work addresses a more realistic scenario where graphs exhibit diverse modes, requiring graph grouping before or along with matching, a task termed mixture graph matching and clustering. We introduce Minorize-Maximization Matching and Clustering (M3C), a learning-free algorithm that guarantees theoretical convergence through the Minorize-Maximization framework and offers enhanced flexibility via relaxed clustering. Building on M3C, we develop UM3C, an unsupervised model that incorporates novel edge-wise affinity learning and pseudo label selection. Extensive experimental results on public benchmarks demonstrate that our method outperforms state-of-the-art graph matching and mixture graph matching and clustering approaches in both accuracy and efficiency. Source code will be made publicly available.
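The convergence claim rests on the generic Minorize-Maximization argument, which can be written out in a few lines. The abstract does not give M3C's actual objective or surrogate, so the symbols below (an objective $f$, a surrogate $g$, iterates $x_t$) are assumed notation for the general framework only.

```latex
\begin{aligned}
& g(x \mid x_t) \le f(x) \quad \forall x, \qquad g(x_t \mid x_t) = f(x_t), \\
& x_{t+1} = \arg\max_x \, g(x \mid x_t), \\
& f(x_{t+1}) \;\ge\; g(x_{t+1} \mid x_t) \;\ge\; g(x_t \mid x_t) \;=\; f(x_t).
\end{aligned}
```

The objective value therefore never decreases from one iteration to the next, and if $f$ is bounded above, the sequence of objective values converges.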

Learning Universe Model for Partial Matching Networks over Multiple Graphs

Oct 19, 2022

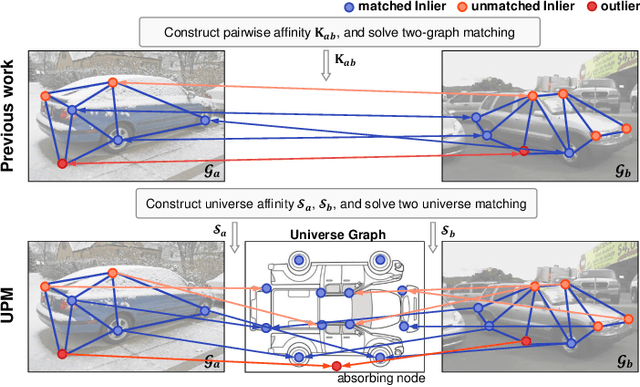

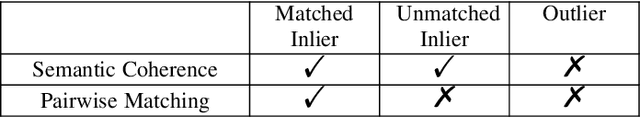

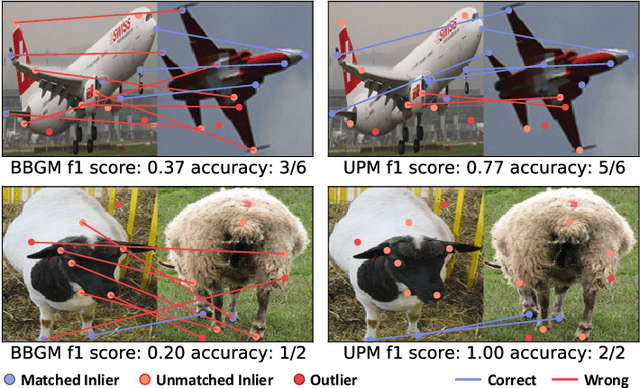

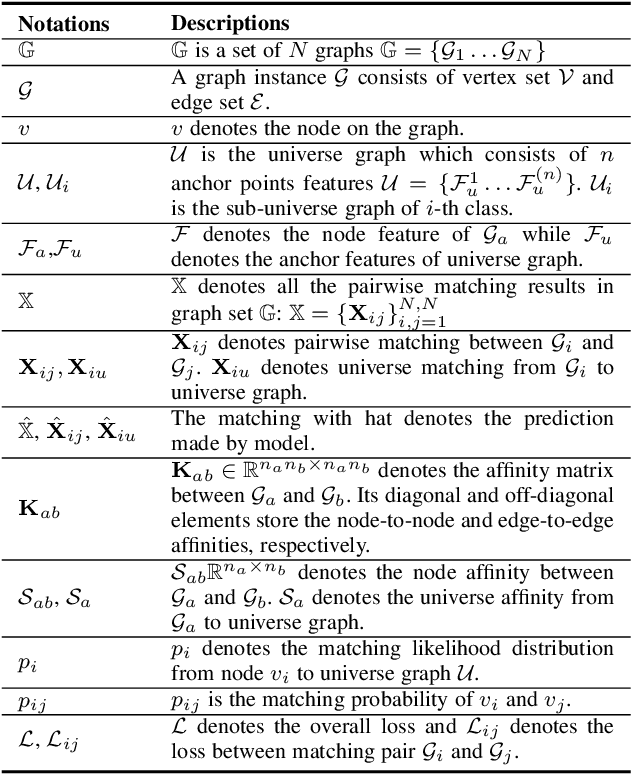

Abstract: We consider the general setting for partial matching of two or multiple graphs, in the sense that not necessarily all the nodes in one graph can find their correspondences in another graph and vice versa. We take a universe matching perspective to this ubiquitous problem, whereby each node is either matched to an anchor in a virtual universe graph or regarded as an outlier. Such a universe matching scheme enjoys a few important merits, which have not been adopted in existing learning-based graph matching (GM) literature. First, the subtle logic for inlier matching and outlier detection can be clearly modeled, which is otherwise less convenient to handle in the pairwise matching scheme. Second, it enables end-to-end learning, especially universe-level affinity metric learning for inlier matching and loss design for gathering outliers together. Third, the resulting matching model can easily handle newly arriving graphs under online matching, or even graphs from categories different from those in the training set. To the best of our knowledge, this is the first deep learning network that can cope with two-graph matching, multiple-graph matching, online matching, and mixture graph matching simultaneously. Extensive experimental results show the state-of-the-art performance of our method in these settings.
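A minimal sketch of the universe matching idea, not the paper's network: it assumes each graph's result is already given as a list mapping every node to a universe anchor index, or to `None` for an outlier, and that each anchor is used at most once per graph. The function name and data layout are hypothetical. Two nodes from different graphs are then treated as matched when they share an anchor.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical representation: entry i is the universe anchor index assigned
# to node i, or None when node i is regarded as an outlier.
UniverseAssignment = List[Optional[int]]


def pairwise_from_universe(
    a: UniverseAssignment, b: UniverseAssignment
) -> List[Tuple[int, int]]:
    """Return (i, j) pairs where node i of graph A and node j of graph B
    are assigned to the same universe anchor."""
    # Index graph B's inlier nodes by their anchor.
    anchor_to_b: Dict[int, int] = {}
    for j, anchor in enumerate(b):
        if anchor is not None:
            anchor_to_b[anchor] = j

    # A node of graph A is matched only if it is an inlier and its anchor
    # is also occupied in graph B; everything else stays unmatched.
    pairs: List[Tuple[int, int]] = []
    for i, anchor in enumerate(a):
        if anchor is not None and anchor in anchor_to_b:
            pairs.append((i, anchor_to_b[anchor]))
    return pairs


graph_a = [0, 2, None, 1]   # node 2 is an outlier
graph_b = [2, None, 0]      # node 1 is an outlier; anchor 1 is unused
print(pairwise_from_universe(graph_a, graph_b))
```

Under this representation a newly arriving graph only needs its own node-to-anchor assignment; its matches to every earlier graph follow from the shared anchors.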

APQ: Joint Search for Network Architecture, Pruning and Quantization Policy

Jun 15, 2020

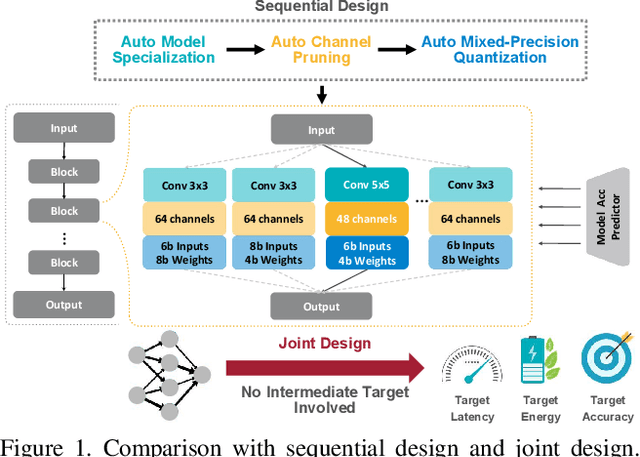

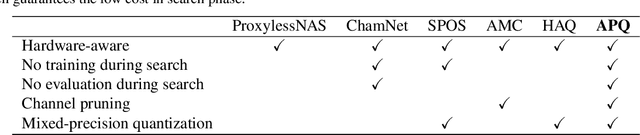

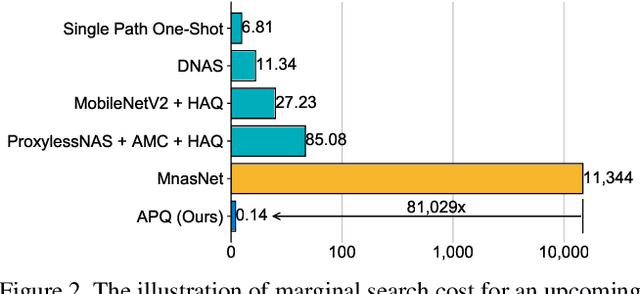

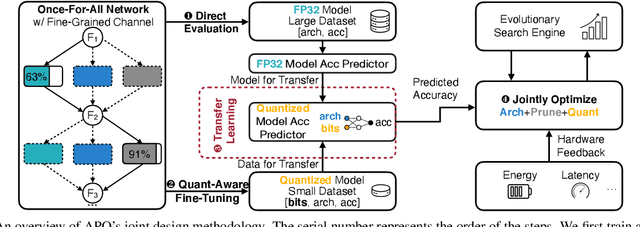

Abstract: We present APQ for efficient deep learning inference on resource-constrained hardware. Unlike previous methods that separately search the neural architecture, pruning policy, and quantization policy, we optimize them in a joint manner. To deal with the larger design space it brings, a promising approach is to train a quantization-aware accuracy predictor to quickly get the accuracy of the quantized model and feed it to the search engine to select the best fit. However, training this quantization-aware accuracy predictor requires collecting a large number of quantized <model, accuracy> pairs, which involves quantization-aware finetuning and thus is highly time-consuming. To tackle this challenge, we propose to transfer the knowledge from a full-precision (i.e., fp32) accuracy predictor to the quantization-aware (i.e., int8) accuracy predictor, which greatly improves the sample efficiency. Besides, collecting the dataset for the fp32 accuracy predictor only requires evaluating neural networks sampled from a pretrained once-for-all network, without any training cost, which is highly efficient. Extensive experiments on ImageNet demonstrate the benefits of our joint optimization approach. At the same accuracy, APQ reduces the latency/energy by 2x/1.3x over MobileNetV2+HAQ. Compared to the separate optimization approach (ProxylessNAS+AMC+HAQ), APQ achieves 2.3% higher ImageNet accuracy while reducing GPU hours and CO2 emissions by orders of magnitude, pushing the frontier for environmentally friendly green AI. The code and video are publicly available.
