Tian Tsong Ng

Tracking objects using 3D object proposals

Dec 19, 2017

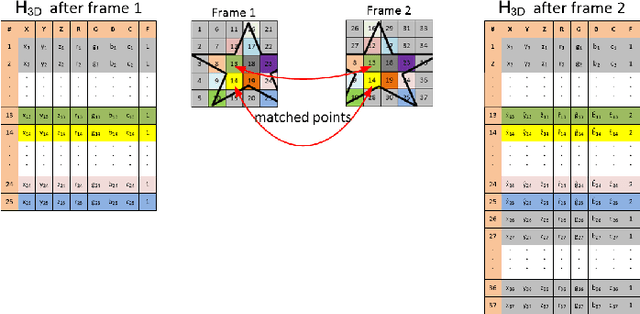

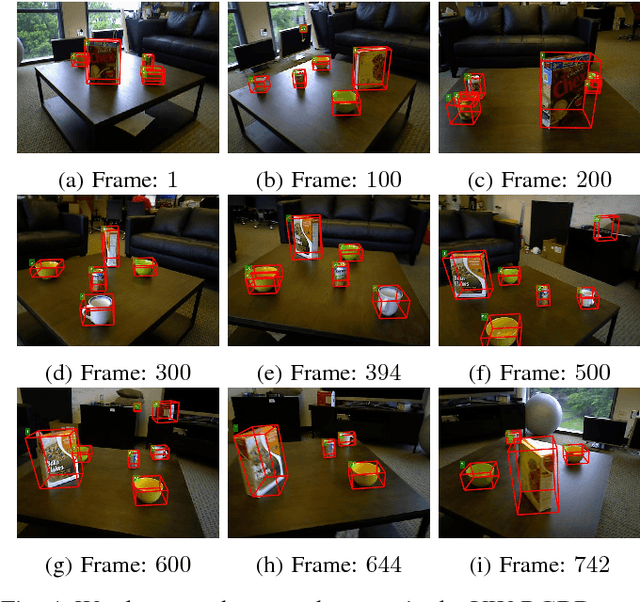

Abstract: 3D object proposals, quickly detected regions in a 3D scene that likely contain an object of interest, are an effective approach to improving the computational efficiency and accuracy of the object detection framework. In this work, we propose a novel online method that uses our previously developed 3D object proposals, in an RGB-D video sequence, to match and track static objects in the scene using shape matching. Our main observation is that depth images provide important information about the geometry of the scene that is often ignored in object matching techniques. Our method takes less than a second in MATLAB on the UW-RGBD scene dataset on a single-threaded CPU and thus has the potential to be used in low-power chips in Unmanned Aerial Vehicles (UAVs), quadcopters, and drones.

* 4 pages, 4 figures, published in APSIPA 2017

Locating 3D Object Proposals: A Depth-Based Online Approach

Sep 08, 2017

Abstract: 2D object proposals, quickly detected regions in an image that likely contain an object of interest, are an effective approach for improving the computational efficiency and accuracy of object detection in color images. In this work, we propose a novel online method that generates 3D object proposals in an RGB-D video sequence. Our main observation is that depth images provide important information about the geometry of the scene. Diverging from the traditional goal of 2D object proposals, which is to provide high recall (many 2D bounding boxes near potential objects), we aim for precise 3D proposals. We leverage per-frame depth information and multi-view scene information to obtain accurate 3D object proposals. Efficient but robust registration enables us to combine multiple frames of a scene in near real time and generate 3D bounding boxes for potential 3D regions of interest. Using standard metrics, such as Precision-Recall curves and F-measure, we show that the proposed approach is significantly more accurate than the current state-of-the-art techniques. Our online approach can be integrated into SLAM-based video processing for quick 3D object localization. Our method takes less than a second in MATLAB on the UW-RGBD scene dataset on a single-threaded CPU and thus has the potential to be used in low-power chips in Unmanned Aerial Vehicles (UAVs), quadcopters, and drones.

* 14 pages, 12 figures, journal

Calibration of depth cameras using denoised depth images

Sep 08, 2017

Abstract: Depth sensing devices have created various new applications in scientific and commercial research with the advent of Microsoft Kinect and PMD (Photon Mixing Device) cameras. Most of these applications require the depth cameras to be pre-calibrated. However, traditional calibration methods using a checkerboard do not work very well for depth cameras due to the low image resolution. In this paper, we propose a depth calibration scheme which excels in estimating camera calibration parameters when only a handful of corners and calibration images are available. We exploit the noise properties of PMD devices to denoise depth measurements and perform camera calibration using the denoised depth as an additional set of measurements. Our synthetic and real experiments show that our depth denoising and depth-based calibration scheme provides significantly better results than traditional calibration methods.

* 5 pages, 3 figures, conference
