Thomas Ward

SUrgical PRediction GAN for Events Anticipation

May 10, 2021

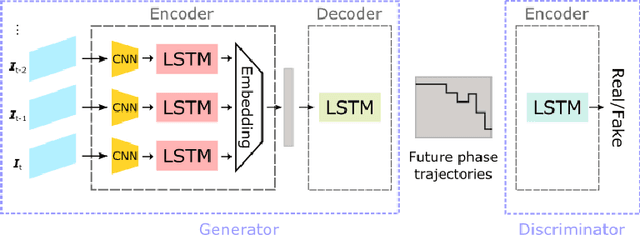

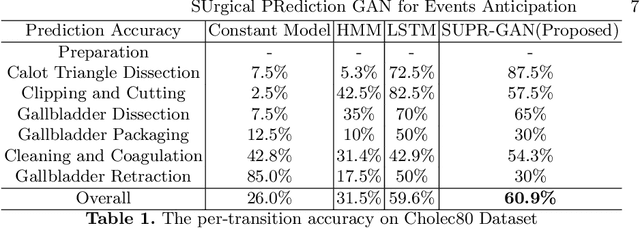

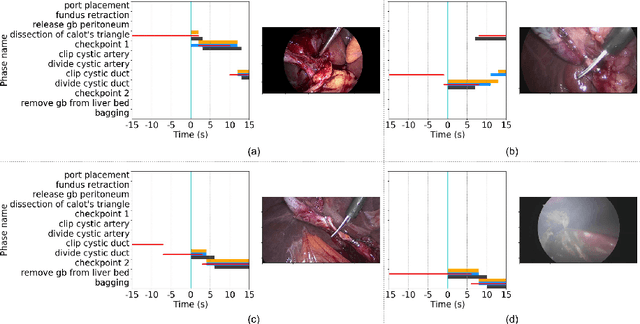

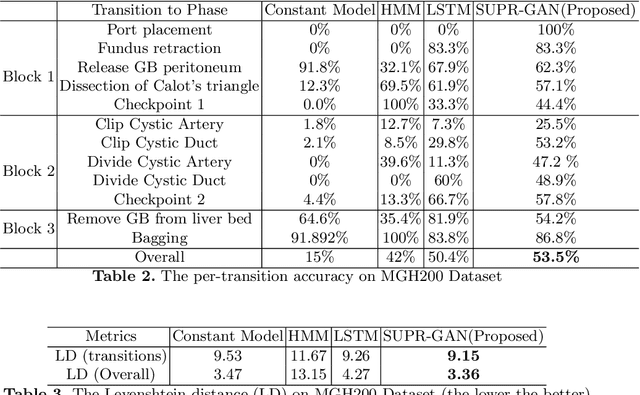

Abstract: Comprehension of surgical workflow is the foundation upon which computers build the understanding of surgery. In this work, we moved beyond just the identification of surgical phases to predict future surgical phases and the transitions between them. We used a novel GAN formulation that sampled the future surgical phase trajectory, conditioned on past laparoscopic video frames, and compared it to state-of-the-art approaches for surgical video analysis and alternative prediction methods. We demonstrated its effectiveness in inferring and predicting the progress of laparoscopic cholecystectomy videos. We quantified the horizon-accuracy trade-off and explored average performance as well as performance on the more difficult, and clinically important, transitions between phases. Lastly, we surveyed surgeons to evaluate the plausibility of these predicted trajectories.

Aggregating Long-Term Context for Learning Surgical Workflows

Sep 11, 2020

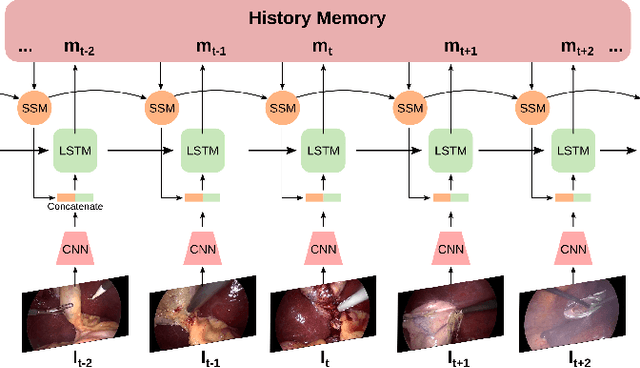

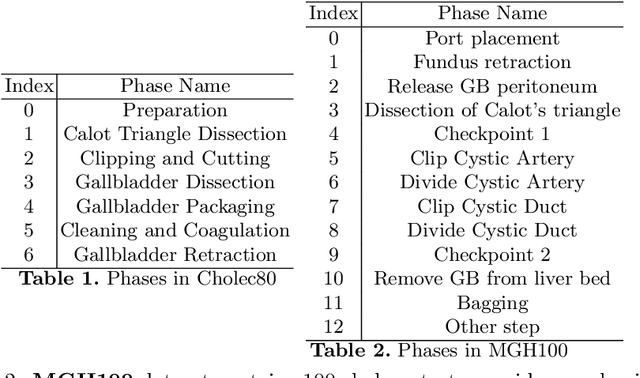

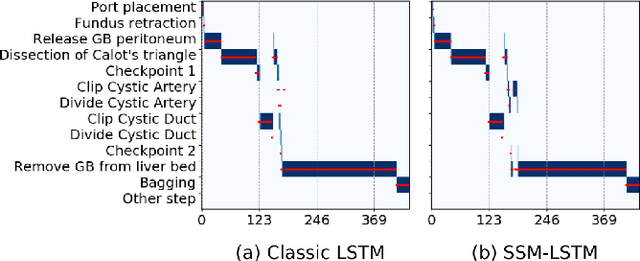

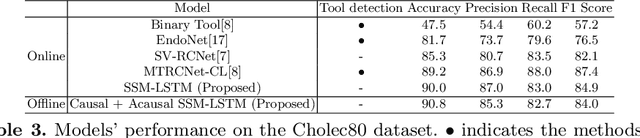

Abstract: Analyzing surgical workflow is crucial for computers to understand surgeries. Deep learning techniques have recently been widely applied to recognize surgical workflows. Many of the existing temporal neural network models are limited in their capability to handle long-term dependencies in the data, instead relying upon the strong performance of the underlying per-frame visual models. We propose a new temporal network structure that leverages task-specific network representations to collect long-term sufficient statistics that are propagated by a sufficient statistics model (SSM). We apply our approach within an LSTM backbone for the task of surgical phase recognition and explore several choices for propagated statistics. We demonstrate superior results over existing state-of-the-art segmentation and novel segmentation techniques on two laparoscopic cholecystectomy datasets: the already published Cholec80 dataset and MGH100, a novel dataset with more challenging, yet clinically meaningful, segment labels.
