Thanh-Hai Phung

A SAM-based Solution for Hierarchical Panoptic Segmentation of Crops and Weeds Competition

Sep 24, 2023

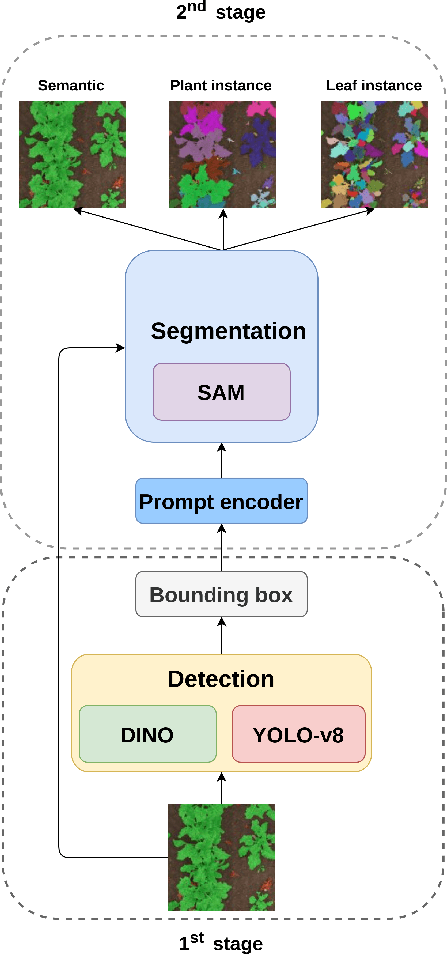

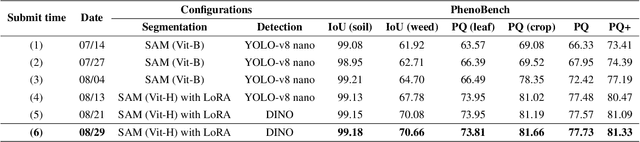

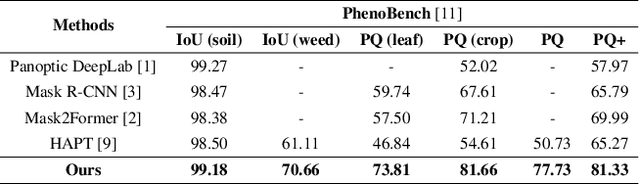

Abstract: Panoptic segmentation in agriculture is an advanced computer vision technique that provides a comprehensive understanding of field composition. It supports tasks such as crop and weed segmentation, plant panoptic segmentation, and leaf instance segmentation, all aimed at addressing challenges in agriculture. To explore the application of panoptic segmentation in agriculture, the 8th Workshop on Computer Vision in Plant Phenotyping and Agriculture (CVPPA) hosted a challenge on hierarchical panoptic segmentation of crops and weeds using the PhenoBench dataset. To tackle the tasks presented in this competition, we propose an approach that combines the Segment Anything Model (SAM) for instance segmentation with prompt input from object detection models. Specifically, we integrated two notable object detection approaches, namely DINO and YOLO-v8. Our best-performing model achieved a PQ+ score of 81.33 based on the evaluation metrics of the competition.
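For orientation on the reported metric, the following is a minimal sketch of the standard panoptic quality (PQ) computation for a single class, assuming masks are given as sets of pixel coordinates. The abstract does not define PQ+, so the competition's variant is not reproduced here; the function names `iou` and `panoptic_quality` and the toy masks are illustrative assumptions, not the authors' evaluation code.

```python
def iou(mask_a, mask_b):
    """Intersection over union of two masks given as sets of (row, col) pixels."""
    union = len(mask_a | mask_b)
    return len(mask_a & mask_b) / union if union else 0.0


def panoptic_quality(pred_masks, gt_masks, iou_threshold=0.5):
    """Standard PQ for one class: sum of matched IoUs / (TP + 0.5*FP + 0.5*FN).

    A prediction matches a ground-truth segment when their IoU exceeds
    iou_threshold. With non-overlapping segments and a threshold of 0.5,
    each segment can take part in at most one match.
    """
    matched_gt = set()
    iou_sum = 0.0
    true_positives = 0
    for pred in pred_masks:
        for index, gt in enumerate(gt_masks):
            if index in matched_gt:
                continue
            value = iou(pred, gt)
            if value > iou_threshold:
                matched_gt.add(index)
                iou_sum += value
                true_positives += 1
                break
    false_positives = len(pred_masks) - true_positives
    false_negatives = len(gt_masks) - true_positives
    denominator = true_positives + 0.5 * false_positives + 0.5 * false_negatives
    return iou_sum / denominator if denominator else 0.0


# Toy example (hypothetical data): one good match, one spurious prediction,
# and one missed ground-truth segment.
gt_example = [{(0, 0), (0, 1), (1, 0), (1, 1)}, {(5, 5), (5, 6)}]
pred_example = [{(0, 0), (0, 1), (1, 0)}, {(9, 9)}]
pq_example = panoptic_quality(pred_example, gt_example)
```

In the toy example the single match has an IoU of 0.75, and the unmatched prediction and unmatched ground-truth segment each count as half an error in the denominator, so both segmentation quality and detection quality lower the score.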

Mask or Non-Mask? Robust Face Mask Detector via Triplet-Consistency Representation Learning

Oct 01, 2021

Abstract: In the absence of vaccines or medicines to stop COVID-19, one of the effective methods to slow the spread of the coronavirus and reduce the overloading of healthcare is to wear a face mask. Nevertheless, mandating the use of face masks or coverings in public areas requires additional human resources, and the monitoring work is tedious and attention-intensive. To automate the monitoring process, one promising solution is to leverage existing object detection models to detect faces with or without masks. Security officers then do not have to stare at the monitoring devices or crowds, and only have to deal with the alerts triggered by the detection of faces without masks. Existing object detection models usually focus on designing CNN-based network architectures for extracting discriminative features. However, the training datasets for face mask detection are small, while the difference between faces with and without masks is subtle. Therefore, in this paper, we propose a face mask detection framework that uses a context attention module to give the feed-forward convolutional neural network effective attention by adapting its attention maps for feature refinement. Moreover, we further propose an anchor-free detector with Triplet-Consistency Representation Learning, which integrates the consistency loss and the triplet loss to deal with the small-scale training data and the similarity between masks and occlusions. Extensive experimental results show that our method outperforms the other state-of-the-art methods. The source code is released as a public download to improve public health at https://github.com/wei-1006/MaskFaceDetection.
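The abstract names a triplet loss and a consistency loss but does not give their formulas. As a rough illustration only, the sketch below uses the standard margin-based triplet loss and, as an assumption, a mean squared error between predictions on two views of the same input as the consistency term. The function names, the `margin` and `weight` parameters, and the way the two terms are summed are all hypothetical and may differ from the paper's formulation.

```python
import math


def l2_distance(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet loss: pull the positive closer than the negative by a margin."""
    return max(0.0, l2_distance(anchor, positive) - l2_distance(anchor, negative) + margin)


def consistency_loss(pred_view_a, pred_view_b):
    """Assumed consistency term: mean squared difference between two predictions."""
    return sum((a - b) ** 2 for a, b in zip(pred_view_a, pred_view_b)) / len(pred_view_a)


def combined_loss(anchor, positive, negative, pred_view_a, pred_view_b,
                  margin=1.0, weight=1.0):
    """Hypothetical weighted sum of the two terms."""
    return (triplet_loss(anchor, positive, negative, margin)
            + weight * consistency_loss(pred_view_a, pred_view_b))


# Toy embeddings (hypothetical): a far negative yields zero triplet loss,
# while a negative as close as the positive is penalised by the full margin.
easy_triplet = triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
hard_triplet = triplet_loss([0.0, 0.0], [0.0, 1.0], [1.0, 0.0])
view_gap = consistency_loss([0.2, 0.8], [0.4, 0.6])
```

The triplet term only contributes when a negative sample (for example, an occluded face) sits too close to the anchor in embedding space, which is one way to read the abstract's concern about the similarity between masks and occlusions.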
