Tarek Echekki

North Carolina State University

Probabilistic transfer learning methodology to expedite high fidelity simulation of reactive flows

May 17, 2024

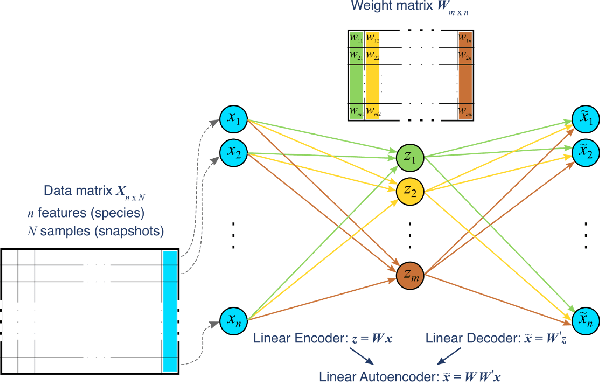

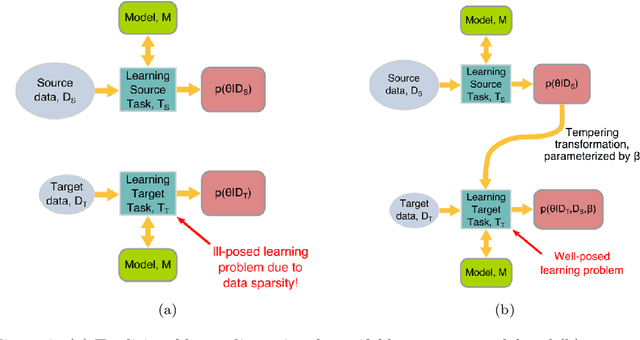

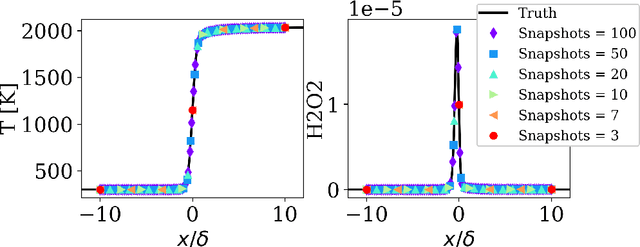

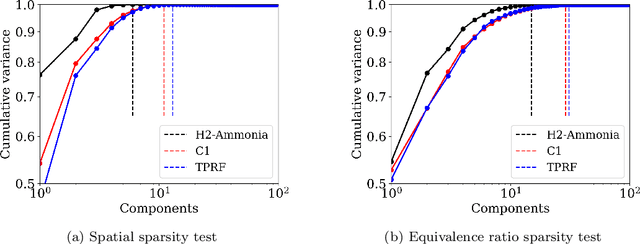

Abstract: Reduced-order models based on the transport of a lower-dimensional manifold representation of the thermochemical state, such as Principal Component (PC) transport and Machine Learning (ML) techniques, have been developed to reduce the computational cost associated with Direct Numerical Simulations (DNS) of reactive flows. Both PC transport and ML normally require an abundance of data to exhibit sufficient predictive accuracy, and such data might not be available due to the prohibitive cost of DNS or experimental data acquisition. To alleviate such difficulties, similar data from an existing dataset or domain (source domain) can be used to train ML models, potentially resulting in adequate predictions in the domain of interest (target domain). This study presents a novel probabilistic transfer learning (TL) framework to enhance the trust in ML models in correctly predicting the thermochemical state in a lower-dimensional manifold and a sparse data setting. The framework uses Bayesian neural networks and autoencoders to reduce the dimensionality of the state space and diffuse the knowledge from the source to the target domain. The new framework is applied to one-dimensional freely propagating flame solutions under different data sparsity scenarios. The results reveal that there is an optimal amount of knowledge to be transferred, which depends on the amount of data available in the target domain and the similarity between the domains. TL can reduce the reconstruction error by one order of magnitude for cases with large sparsity. The new framework required one-tenth of the target-domain data to reproduce the same error as in the abundant data scenario. Furthermore, comparisons with a state-of-the-art deterministic TL strategy show that the probabilistic method can require one quarter of the data to achieve the same reconstruction error.
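The idea of an adjustable amount of transferred knowledge can be illustrated with a toy example. The sketch below is not the paper's Bayesian neural network and autoencoder framework; it assumes a plain Bayesian linear regression in which the target-domain prior is centred on a source-domain solution, and a hypothetical variance parameter `tau` sets how strongly the source is trusted. All weights and data are synthetic.

```python
import numpy as np

def gaussian_posterior(X, y, prior_mean, prior_cov, noise_var):
    """Posterior N(mean, cov) over weights w for y = X w + Gaussian noise."""
    prior_prec = np.linalg.inv(prior_cov)
    post_cov = np.linalg.inv(prior_prec + X.T @ X / noise_var)
    post_mean = post_cov @ (prior_prec @ prior_mean + X.T @ y / noise_var)
    return post_mean, post_cov

rng = np.random.default_rng(0)
noise_var = 0.01
w_source_true = np.array([1.0, -2.0, 0.5])   # hypothetical source-domain weights
w_target_true = np.array([1.2, -1.8, 0.4])   # similar, but not identical, target

# Abundant source data, fitted under a broad zero-mean prior
Xs = rng.normal(size=(200, 3))
ys = Xs @ w_source_true + rng.normal(scale=noise_var**0.5, size=200)
src_mean, _ = gaussian_posterior(Xs, ys, np.zeros(3), 100.0 * np.eye(3), noise_var)

# Sparse target data: two samples for three unknowns
Xt = rng.normal(size=(2, 3))
yt = Xt @ w_target_true + rng.normal(scale=noise_var**0.5, size=2)

# tau is the prior variance around the source solution:
# small tau = strong transfer, large tau = weak transfer
for tau in (1e-4, 1e-1, 1e2):
    mean, cov = gaussian_posterior(Xt, yt, src_mean, tau * np.eye(3), noise_var)
    err = np.linalg.norm(mean - w_target_true)
    std = np.sqrt(np.diag(cov)).mean()
    print(f"tau={tau:g}  error={err:.3f}  mean posterior std={std:.3f}")
```

Besides a point estimate, the posterior covariance gives an uncertainty measure, which is the kind of information a probabilistic approach adds over a deterministic one.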

Transfer learning for predicting source terms of principal component transport in chemically reactive flow

Dec 01, 2023

Abstract: The objective of this study is to evaluate whether the number of requisite training samples can be reduced with the use of various transfer learning models for predicting, for example, the chemical source terms of the data-driven reduced-order model that represents the homogeneous ignition process of a hydrogen/air mixture. Principal component analysis is applied to reduce the dimensionality of the hydrogen/air mixture in composition space. Artificial neural networks (ANNs) are used to tabulate the reaction rates of principal components, and subsequently, a system of ordinary differential equations is solved. As the number of training samples decreases at the target task (i.e., for T0 > 1000 K and various phi), the reduced-order model fails to predict the ignition evolution of a hydrogen/air mixture. Three transfer learning strategies are then applied to the training of the ANN model with a sparse dataset. The performance of the reduced-order model with a sparse dataset is found to be remarkably enhanced if the training of the ANN model is restricted by a regularization term that controls the degree of knowledge transfer from source to target tasks. To this end, a novel transfer learning method is introduced, parameter control via partial initialization and regularization (PaPIR), whereby the amount of knowledge transferred is systematically adjusted for the initialization and regularization of the ANN model in the target task. It is found that an additional performance gain can be achieved by changing the initialization scheme of the ANN model in the target task when the task similarity between source and target tasks is relatively low.
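A regularization term that controls the degree of knowledge transfer can be sketched on a linear model. The example below is not PaPIR and does not use an ANN; it assumes a penalty on the distance between the target weights and a set of hypothetical pre-trained source weights, with the illustrative parameter `lam` setting the strength of that pull. The data are synthetic.

```python
import numpy as np

def fit_with_source_penalty(X, y, w_source, lam):
    """Minimise ||X w - y||^2 + lam * ||w - w_source||^2 in closed form."""
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    b = X.T @ y + lam * w_source
    return np.linalg.solve(A, b)

rng = np.random.default_rng(0)
w_target_true = np.array([1.0, -2.0, 0.5])   # synthetic ground truth for the target task
w_source = np.array([0.8, -1.5, 0.7])        # hypothetical weights learned on the source task

# Sparse target data: four noisy samples for three parameters
X = rng.normal(size=(4, 3))
y = X @ w_target_true + 0.1 * rng.normal(size=4)

# lam = 0 ignores the source; a very large lam returns the source weights
for lam in (0.0, 1.0, 1e6):
    w = fit_with_source_penalty(X, y, w_source, lam)
    print(f"lam={lam:g}  w={np.round(w, 3)}")
```

Between the two extremes, intermediate values of `lam` blend the sparse target data with the source solution, which is the trade-off the regularized strategies in the abstract tune.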

A Framework for Combustion Chemistry Acceleration with DeepONets

Apr 06, 2023

Abstract: A combustion chemistry acceleration scheme is developed based on deep operator networks (DeepONets). The scheme is based on the identification of combustion reaction dynamics through a modified DeepONet architecture such that the solutions of thermochemical scalars are projected to new solutions in small and flexible time increments. The approach is designed to efficiently implement chemistry acceleration without the need for computationally expensive integration of stiff chemistry. An additional framework of latent-space dynamics identification with the modified DeepONet is also proposed, which enhances the computational efficiency and widens the applicability of the proposed scheme. The scheme is demonstrated on cases ranging from the simple chemical kinetics of hydrogen oxidation to the more complex chemical kinetics of n-dodecane high- and low-temperature oxidation. The proposed framework accurately learns the chemical kinetics and efficiently reproduces species and temperature temporal profiles corresponding to each application. In addition, a very large speed-up with a great extrapolation capability is also observed with the proposed scheme.
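For readers unfamiliar with the architecture, the following is a minimal sketch of a standard (unmodified) DeepONet forward pass, assuming the branch network takes the current thermochemical state and the trunk network takes the time increment. The layer sizes, the multi-output reshaping, and the function names are illustrative assumptions, and the weights are random rather than trained.

```python
import numpy as np

def init_mlp(sizes, rng):
    """Random weights for a fully connected network with the given layer sizes."""
    return [(rng.normal(scale=1.0 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(x, layers):
    """tanh hidden layers followed by a linear output layer."""
    for W, b in layers[:-1]:
        x = np.tanh(x @ W + b)
    W, b = layers[-1]
    return x @ W + b

def deeponet_step(state, dt, branch, trunk, n_scalars, p):
    """Predict the state after dt as a branch-trunk inner product."""
    b = mlp(state, branch).reshape(-1, n_scalars, p)  # (batch, n_scalars, p)
    t = mlp(dt, trunk)                                # (batch, p)
    return np.einsum("bsp,bp->bs", b, t)              # (batch, n_scalars)

rng = np.random.default_rng(0)
n_scalars, p = 3, 16   # e.g. temperature plus two species (illustrative)
branch = init_mlp([n_scalars, 32, n_scalars * p], rng)
trunk = init_mlp([1, 32, p], rng)

state = rng.uniform(size=(5, n_scalars))    # five normalised initial states
dt = rng.uniform(0.0, 1.0, size=(5, 1))     # one normalised time increment each
next_state = deeponet_step(state, dt, branch, trunk, n_scalars, p)
print(next_state.shape)
```

Once trained, a single forward pass of this kind would replace a stiff ODE integration over the interval `dt`, which is where the speed-up described in the abstract would come from.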

Generalized Joint Probability Density Function Formulation in Turbulent Combustion using DeepONet

Apr 05, 2021

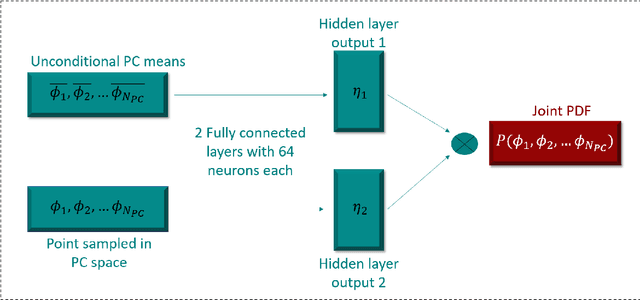

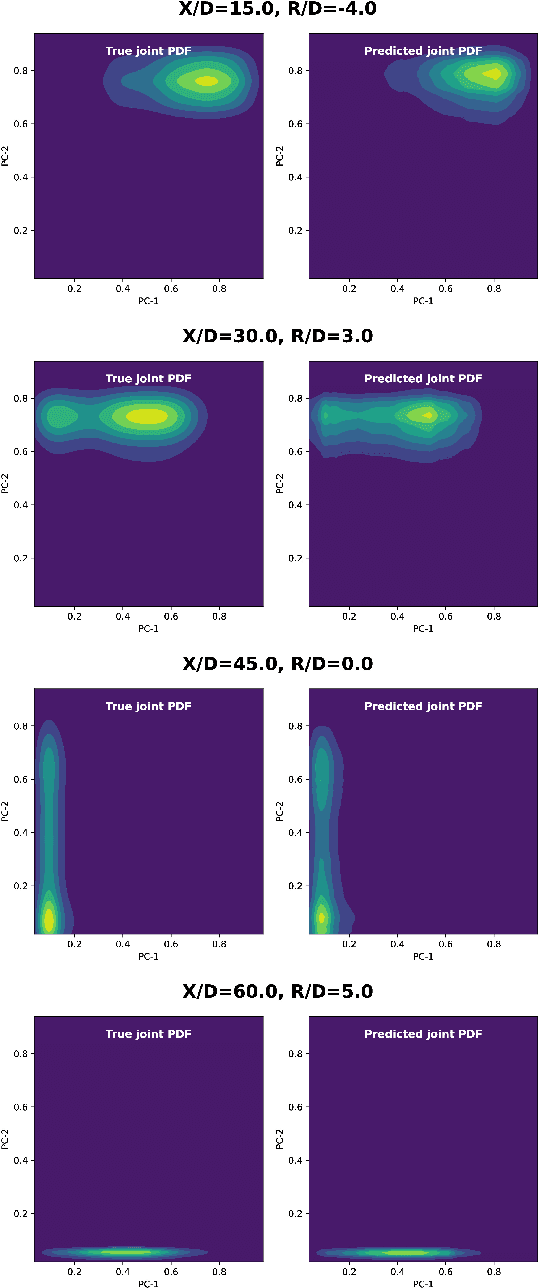

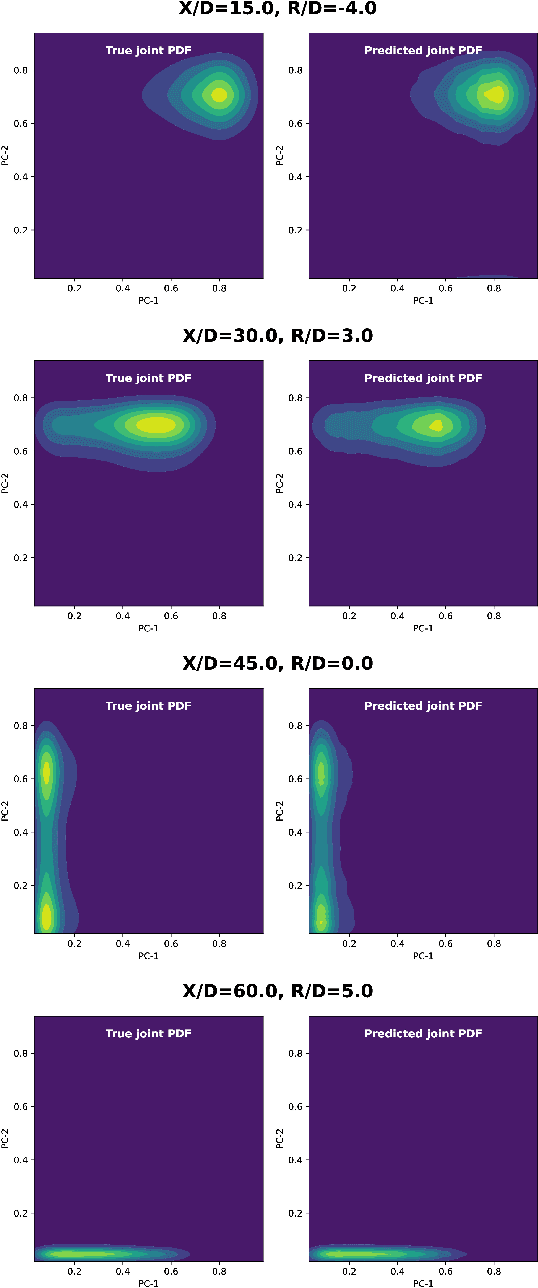

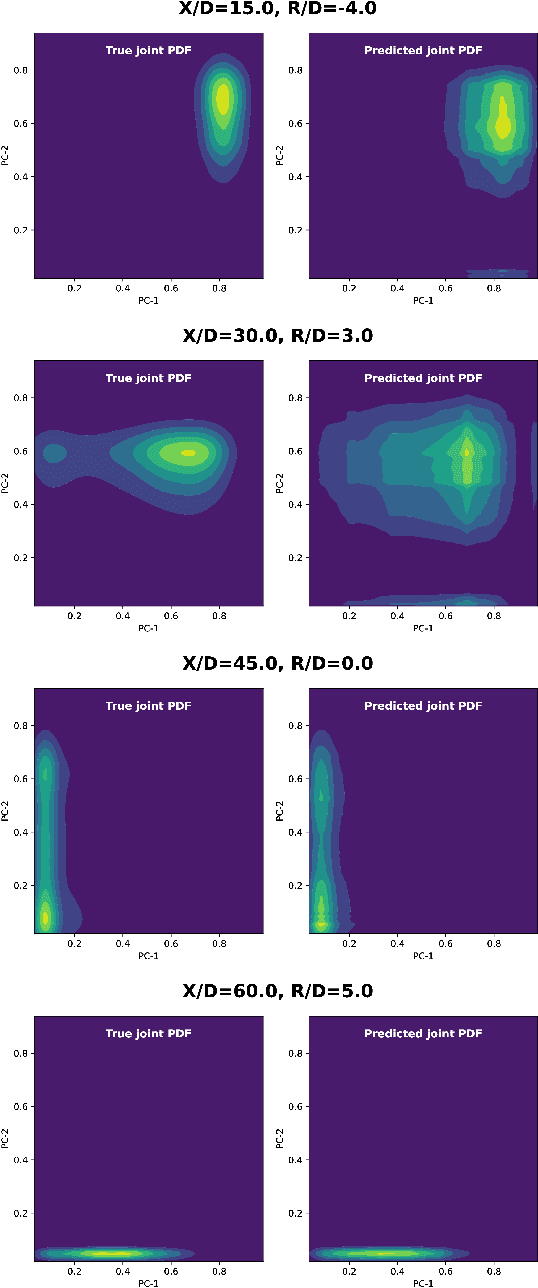

Abstract: Joint probability density function (PDF)-based models in turbulent combustion provide direct closure for turbulence-chemistry interactions. The joint PDFs capture the turbulent flame dynamics at different spatial locations; hence, it is crucial to represent them accurately. The joint PDFs are parameterized on the unconditional means of thermo-chemical state variables, which can be high dimensional. Thus, accurate construction of joint PDFs at various spatial locations may require an exorbitant amount of data. In a previous work, we introduced a framework that alleviated data requirements by constructing joint PDFs in a lower dimensional space using principal component analysis (PCA) in conjunction with Kernel Density Estimation (KDE). However, constructing the principal component (PC) joint PDFs is still computationally expensive, as they must be calculated at each spatial location in the turbulent flame. In this work, we propose the concept of a generalized joint PDF model using the Deep Operator Network (DeepONet). The DeepONet is a machine learning model that is parameterized on the unconditional means of PCs at a given spatial location and discrete PC coordinates, and it predicts the joint probability density value for the corresponding PC coordinate. We demonstrate the accuracy and generalizability of the DeepONet on the Sandia flames D, E and F. The DeepONet is trained on the PC joint PDFs observed in flame E and yields excellent predictions of joint PDF shapes at different spatial locations of flames D and F, which are not seen during training.
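The PCA-plus-KDE construction referred to as previous work can be sketched as follows. This is a minimal illustration on synthetic data, assuming two retained PCs, standardised PC scores, and a fixed Gaussian kernel bandwidth; none of these choices are taken from the paper, and the DeepONet stage is not shown.

```python
import numpy as np

def pca_project(X, n_components):
    """Project centred data onto its leading principal components."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T

def kde_gaussian(samples, points, bandwidth):
    """Isotropic Gaussian kernel density estimate evaluated at `points`."""
    d = samples.shape[1]
    diff = points[:, None, :] - samples[None, :, :]      # (n_points, n_samples, d)
    sq = np.sum(diff**2, axis=-1) / bandwidth**2
    norm = (2.0 * np.pi * bandwidth**2) ** (d / 2.0)
    return np.exp(-0.5 * sq).mean(axis=1) / norm

rng = np.random.default_rng(0)
# Synthetic stand-in for thermochemical samples at one location:
# six correlated scalars driven by two latent factors
latent = rng.normal(size=(300, 2))
X = latent @ rng.normal(size=(2, 6)) + 0.05 * rng.normal(size=(300, 6))

scores = pca_project(X, n_components=2)
scores /= scores.std(axis=0)                 # standardise each PC (assumption)

# Evaluate the joint PDF of the two PCs on a regular grid
axis = np.linspace(-5.0, 5.0, 61)
dx = axis[1] - axis[0]
g1, g2 = np.meshgrid(axis, axis, indexing="ij")
grid = np.column_stack([g1.ravel(), g2.ravel()])
pdf = kde_gaussian(scores, grid, bandwidth=0.3).reshape(61, 61)

total = pdf.sum() * dx * dx                  # should be close to 1
print(f"integral of joint PDF over grid: {total:.3f}")
```

The cost noted in the abstract is visible here: the kernel sum runs over every sample for every grid point, and it would have to be repeated at each spatial location.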

An Efficient Machine-Learning Approach for PDF Tabulation in Turbulent Combustion Closure

May 18, 2020

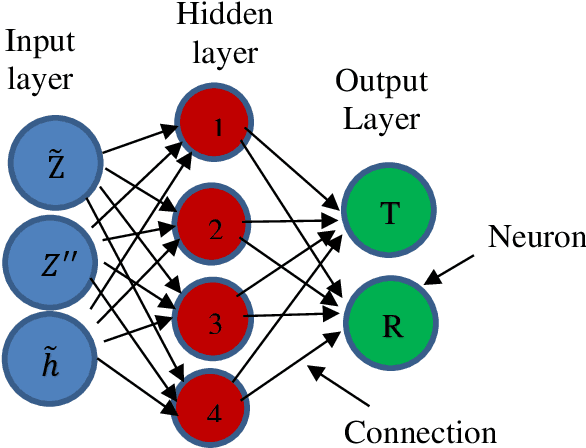

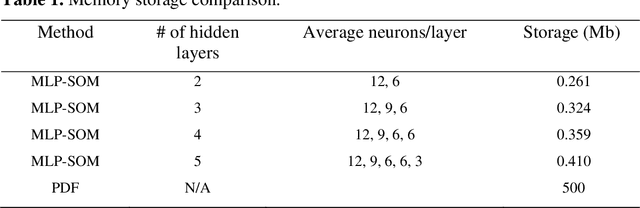

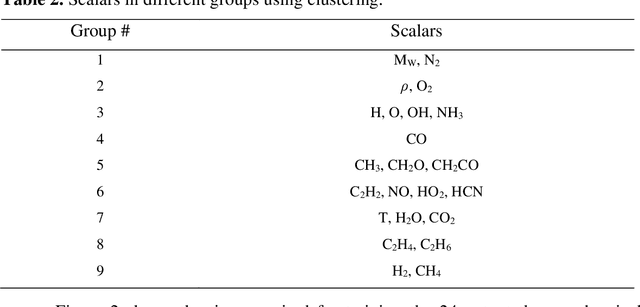

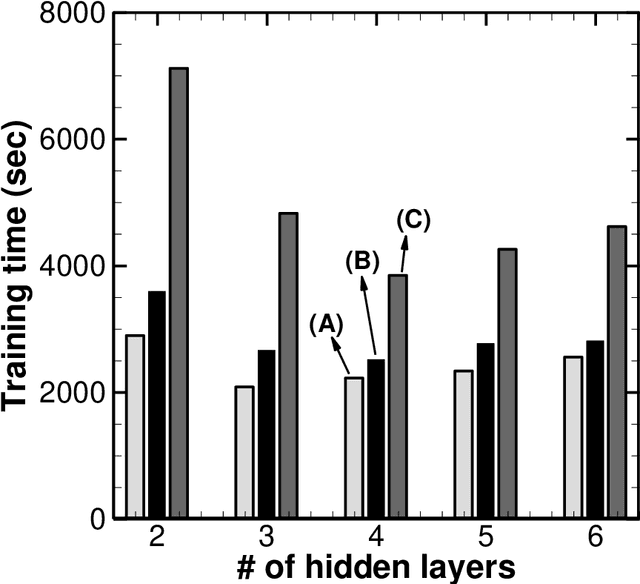

Abstract: Probability density function (PDF) based turbulent combustion modelling is limited by the need to store multi-dimensional PDF tables that can take up large amounts of memory. A significant saving in storage can be achieved by using various machine-learning techniques that represent the thermo-chemical quantities of a PDF table using mathematical functions. These functions can be computationally more expensive than the existing interpolation methods used for thermo-chemical quantities. More importantly, the training time can amount to a considerable portion of the simulation time. In this work, we address these issues by introducing an adaptive training algorithm that relies on multi-layer perceptron (MLP) neural networks for regression and self-organizing maps (SOMs) for clustering the data so that each cluster is tabulated by a different network. The algorithm is designed to address both the multi-dimensionality of the PDF table and the computational efficiency of the proposed algorithm. SOM clustering divides the PDF table into several parts based on similarities in the data. Each cluster of data is trained using an MLP algorithm on simple network architectures to generate local functions for thermo-chemical quantities. The algorithm is validated for the so-called DLR-A turbulent jet diffusion flame using both RANS and LES simulations, and the results of the PDF tabulation are compared to the standard linear interpolation method. The comparison yields very good agreement between the two tabulation techniques and establishes the MLP-SOM approach as a viable method for PDF tabulation.
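The cluster-then-fit idea can be sketched with a small self-organizing map followed by one local regressor per cluster. To keep the example short, linear least-squares fits stand in for the MLPs, so this is not the MLP-SOM algorithm itself; the two table inputs, the tabulated quantity, and the SOM settings are all hypothetical.

```python
import numpy as np

def train_som(data, n_nodes, n_iters, rng, lr0=0.5):
    """Train a 1-D chain SOM; returns node positions in input space."""
    nodes = data[rng.choice(len(data), n_nodes, replace=False)].copy()
    chain = np.arange(n_nodes)
    for t in range(n_iters):
        frac = t / n_iters
        lr = lr0 * (1.0 - frac)                          # decaying learning rate
        sigma = max(0.5 * n_nodes * (1.0 - frac), 0.5)   # shrinking neighbourhood
        x = data[rng.integers(len(data))]
        bmu = np.argmin(np.sum((nodes - x) ** 2, axis=1))  # best-matching unit
        h = np.exp(-((chain - bmu) ** 2) / (2.0 * sigma**2))
        nodes += lr * h[:, None] * (x - nodes)
    return nodes

def assign(data, nodes):
    """Label each sample with the index of its nearest SOM node."""
    d2 = np.sum((data[:, None, :] - nodes[None, :, :]) ** 2, axis=-1)
    return np.argmin(d2, axis=1)

rng = np.random.default_rng(0)
X = rng.uniform(size=(2000, 2))                              # hypothetical table inputs
y = np.exp(-8.0 * (X[:, 0] - 0.5) ** 2) * (1.0 - X[:, 1])    # stand-in tabulated quantity

nodes = train_som(X, n_nodes=6, n_iters=5000, rng=rng)
labels = assign(X, nodes)
A = np.column_stack([X, np.ones(len(X))])                    # linear model with intercept

# One global fit versus one local fit per SOM cluster
global_coef, *_ = np.linalg.lstsq(A, y, rcond=None)
global_rmse = np.sqrt(np.mean((A @ global_coef - y) ** 2))

pred = np.empty_like(y)
for k in np.unique(labels):
    mask = labels == k
    coef, *_ = np.linalg.lstsq(A[mask], y[mask], rcond=None)
    pred[mask] = A[mask] @ coef
local_rmse = np.sqrt(np.mean((pred - y) ** 2))

print(f"global RMSE={global_rmse:.4f}  per-cluster RMSE={local_rmse:.4f}")
```

Splitting the table lets each local model stay simple, which is the motivation the abstract gives for training small networks on individual clusters.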
