Tanvir Islam

TLoRA: Tri-Matrix Low-Rank Adaptation of Large Language Models

Apr 25, 2025

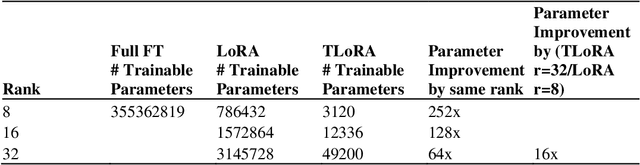

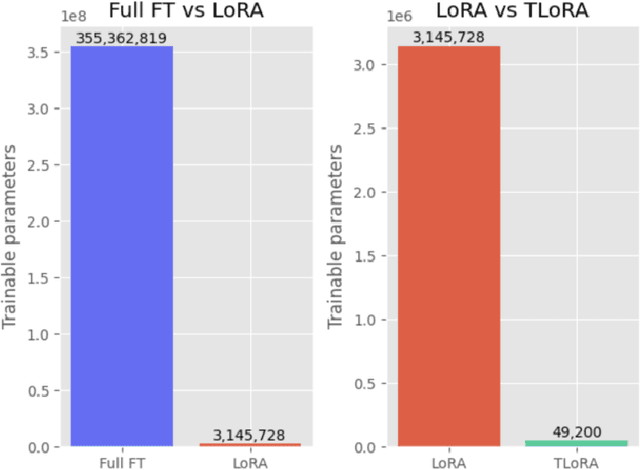

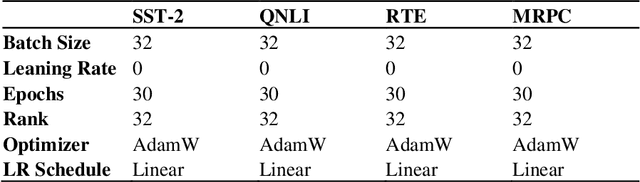

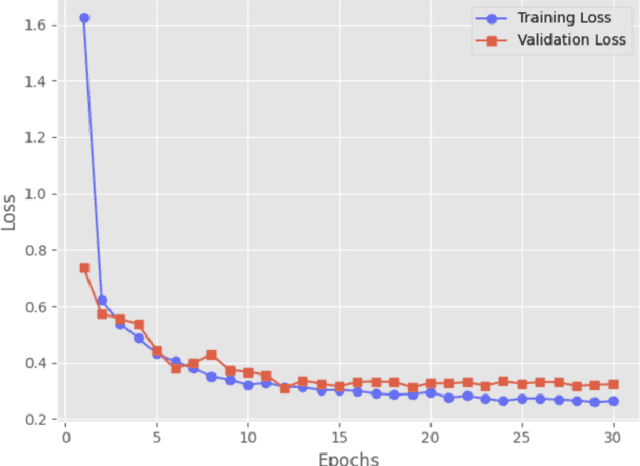

Abstract: We propose TLoRA, a novel tri-matrix low-rank adaptation method that decomposes weight updates into three matrices: two fixed random matrices and one trainable matrix, combined with a learnable, layer-wise scaling factor. This tri-matrix design enables TLoRA to achieve highly efficient parameter adaptation while introducing minimal additional computational overhead. Through extensive experiments on the GLUE benchmark, we demonstrate that TLoRA achieves comparable performance to existing low-rank methods such as LoRA and Adapter-based techniques, while requiring significantly fewer trainable parameters. Analyzing the adaptation dynamics, we observe that TLoRA exhibits Gaussian-like weight distributions, stable parameter norms, and scaling factor variability across layers, further highlighting its expressive power and adaptability. Additionally, we show that TLoRA closely resembles LoRA in its eigenvalue distributions, parameter norms, and cosine similarity of updates, underscoring its ability to effectively approximate LoRA's adaptation behavior. Our results establish TLoRA as a highly efficient and effective fine-tuning method for LLMs, offering a significant step forward in resource-efficient model adaptation.
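The tri-matrix update described in the abstract can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the authors' implementation: the abstract does not say which of the three matrices is trainable, so the choice of the small middle matrix `B`, the zero initialization, the scaling of the random matrices, and the function names `init_tlora`, `tlora_forward`, and `trainable_params` are all assumptions.

```python
import numpy as np

def init_tlora(d_out, d_in, rank, seed=0):
    """Create the three factors of the update dW = alpha * A @ B @ C."""
    rng = np.random.default_rng(seed)
    # Fixed random matrices: sampled once and never updated (scaling is an assumption).
    A = rng.standard_normal((d_out, rank)) / np.sqrt(rank)
    C = rng.standard_normal((rank, d_in)) / np.sqrt(d_in)
    # Assumed trainable factor: a small rank x rank matrix, zero-initialized
    # so that the adapted layer starts out identical to the frozen layer.
    B = np.zeros((rank, rank))
    alpha = 1.0  # learnable scaling factor, one per layer
    return A, B, C, alpha

def tlora_forward(x, W0, A, B, C, alpha):
    """Frozen linear layer plus the scaled low-rank path.

    x has shape (batch, d_in) and W0 has shape (d_out, d_in). The product
    is evaluated right to left so the full d_out x d_in update is never built.
    """
    low_rank = ((x @ C.T) @ B.T) @ A.T
    return x @ W0.T + alpha * low_rank

def trainable_params(d_out, d_in, rank):
    """Trainable parameter counts per layer: (this sketch, standard LoRA)."""
    tri_matrix = rank * rank + 1      # B plus the scalar alpha
    lora = rank * (d_in + d_out)      # both LoRA factors are trained
    return tri_matrix, lora
```

Under these assumptions, a 768 x 768 layer at rank 8 trains 65 values in the tri-matrix form versus 12,288 for LoRA at the same rank, which is the kind of reduction the abstract refers to.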

Extended Histogram-based Outlier Score (EHBOS)

Feb 08, 2025

Abstract: Histogram-Based Outlier Score (HBOS) is a widely used outlier or anomaly detection method known for its computational efficiency and simplicity. However, its assumption of feature independence limits its ability to detect anomalies in datasets where interactions between features are critical. In this paper, we propose the Extended Histogram-Based Outlier Score (EHBOS), which enhances HBOS by incorporating two-dimensional histograms to capture dependencies between feature pairs. This extension allows EHBOS to identify contextual and dependency-driven anomalies that HBOS fails to detect. We evaluate EHBOS on 17 benchmark datasets, demonstrating its effectiveness and robustness across diverse anomaly detection scenarios. EHBOS outperforms HBOS on several datasets, particularly those where feature interactions are critical in defining the anomaly structure, achieving notable improvements in ROC AUC. These results highlight that EHBOS can be a valuable extension to HBOS, with the ability to model complex feature dependencies. EHBOS offers a powerful new tool for anomaly detection, particularly in datasets where contextual or relational anomalies play a significant role.
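To illustrate why two-dimensional histograms can catch anomalies that per-feature histograms miss, here is a minimal NumPy sketch. It is not the paper's algorithm: the abstract does not specify how EHBOS bins, normalizes, or combines its terms, so equal-width bins, summed negative log-densities over all feature pairs, and the names `hbos_scores` and `pairwise_scores` are assumptions made for illustration.

```python
import numpy as np
from itertools import combinations

def _bin_index(values, edges):
    """Map each value to the index of the histogram bin that contains it."""
    inner = edges[1:-1]  # interior edges only, so indices run 0..bins-1
    return np.clip(np.digitize(values, inner), 0, len(edges) - 2)

def hbos_scores(X, bins=10, eps=1e-12):
    """Per-feature (1-D) histogram score: higher means more anomalous."""
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    scores = np.zeros(n)
    for j in range(d):
        counts, edges = np.histogram(X[:, j], bins=bins)
        density = counts / n
        scores -= np.log(density[_bin_index(X[:, j], edges)] + eps)
    return scores

def pairwise_scores(X, bins=10, eps=1e-12):
    """Feature-pair (2-D) histogram score (assumed combination rule: a sum)."""
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    scores = np.zeros(n)
    for i, j in combinations(range(d), 2):
        counts, x_edges, y_edges = np.histogram2d(X[:, i], X[:, j], bins=bins)
        density = counts / n
        xi = _bin_index(X[:, i], x_edges)
        yi = _bin_index(X[:, j], y_edges)
        scores -= np.log(density[xi, yi] + eps)
    return scores

# Toy data: two perfectly correlated features plus one point that breaks
# the correlation while staying inside the normal range of each feature.
x = np.linspace(-3.0, 3.0, 200)
X_demo = np.vstack([np.column_stack([x, x]), [[2.0, -2.0]]])
top_pairwise = int(np.argmax(pairwise_scores(X_demo)))
top_hbos = int(np.argmax(hbos_scores(X_demo)))
```

In this toy setup the appended point (index 200) sits alone in its 2-D cell, so the pairwise score ranks it first, while each of its coordinates falls in a well-populated 1-D bin and the per-feature score does not single it out.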

Human Behavior-based Personalized Meal Recommendation and Menu Planning Social System

Aug 12, 2023

Abstract: Traditional dietary recommendation systems are primarily nutrition- or health-aware and ignore how people feel about food. Human affects vary when it comes to food cravings, and not all foods are appealing in all moods. A questionnaire-based and preference-aware meal recommendation system can be a solution. However, automated recognition of social affects toward different foods, combined with menu planning that considers both nutritional demand and social affect, has some significant benefits over questionnaire-based and preference-aware meal recommendations. A patient with severe illness, a person in a coma, or patients with locked-in syndrome and amyotrophic lateral sclerosis (ALS) cannot express their meal preferences. Therefore, the proposed framework includes a social-affective computing module that recognizes affect toward different meals, where the person's affect is detected using electroencephalography (EEG) signals. EEG makes it possible to capture brain signals and analyze them to anticipate affect toward a food. In this study, we have used a 14-channel wireless Emotiv EPOC+ to measure affectivity for different food items. A hierarchical ensemble method built on multiple feature extraction methods is applied to predict affectivity, and TOPSIS (Technique for Order of Preference by Similarity to Ideal Solution) is used to generate a food list based on the predicted affectivity. In addition to the meal recommendation, an automated menu planning approach is also proposed considering a person's energy intake requirement, affectivity, and nutritional values of the different menus. A bin-packing algorithm is used for the personalized menu planning of breakfast, lunch, dinner, and snacks. The experimental findings reveal that the suggested affective computing, meal recommendation, and menu planning algorithms perform well across a variety of assessment parameters.
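The TOPSIS ranking step mentioned in the abstract can be sketched as follows. This shows the standard TOPSIS procedure with vector normalization, not the paper's exact configuration: the criteria, weights, and the example values in `foods` are hypothetical, and the function assumes no criterion column is all zeros and that the alternatives are not all identical.

```python
import numpy as np

def topsis(matrix, weights, benefit):
    """Rank alternatives by relative closeness to the ideal solution.

    matrix:  (n_alternatives, n_criteria) decision matrix
    weights: importance of each criterion (normalized to sum to 1 here)
    benefit: True where larger is better, False where smaller is better
    Returns closeness values in [0, 1]; higher means closer to the ideal.
    """
    M = np.asarray(matrix, dtype=float)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    benefit = np.asarray(benefit, dtype=bool)

    # Vector-normalize each criterion column, then apply the weights.
    V = (M / np.linalg.norm(M, axis=0)) * w

    # Ideal and anti-ideal points, taken per criterion.
    ideal = np.where(benefit, V.max(axis=0), V.min(axis=0))
    anti = np.where(benefit, V.min(axis=0), V.max(axis=0))

    # Euclidean distance of every alternative to both reference points.
    d_pos = np.linalg.norm(V - ideal, axis=1)
    d_neg = np.linalg.norm(V - anti, axis=1)
    return d_neg / (d_pos + d_neg)

# Hypothetical example: three food items scored on two made-up criteria,
# both treated as "larger is better".
foods = [[0.9, 0.8],
         [0.5, 0.5],
         [0.1, 0.2]]
closeness = topsis(foods, weights=[0.6, 0.4], benefit=[True, True])
ranking = np.argsort(-closeness)  # indices of foods, best first
```

An item that is best on every criterion coincides with the ideal point and gets closeness 1, while an item that is worst on every criterion gets closeness 0; everything else falls in between and is ordered accordingly.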

Personalization of Stress Mobile Sensing using Self-Supervised Learning

Aug 04, 2023

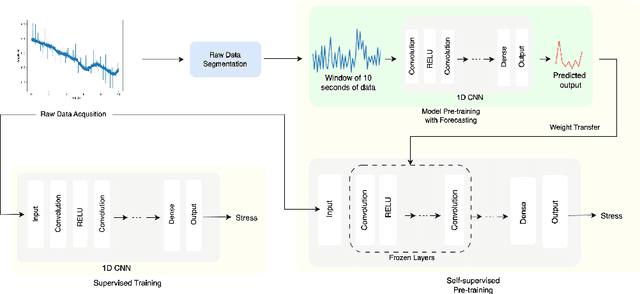

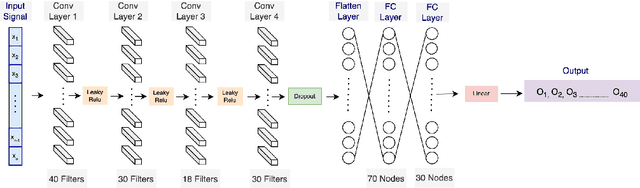

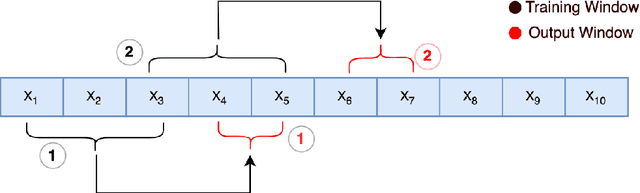

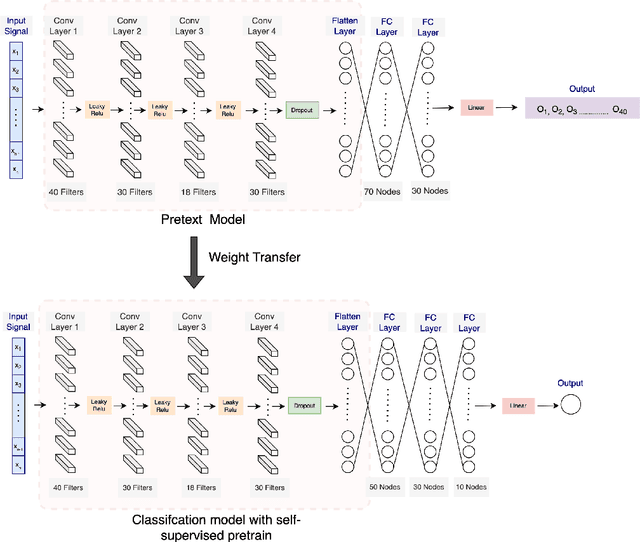

Abstract: Stress is widely recognized as a major contributor to a variety of health issues. Stress prediction using biosignal data recorded by wearables is a key area of study in mobile sensing research because real-time stress prediction can enable digital interventions to immediately react at the onset of stress, helping to avoid many psychological and physiological symptoms such as heart rhythm irregularities. Electrodermal activity (EDA) is often used to measure stress. However, major challenges with the prediction of stress using machine learning include the subjectivity and sparseness of the labels, a large feature space, relatively few labels, and a complex nonlinear and subjective relationship between the features and outcomes. To tackle these issues, we examine the use of model personalization: training a separate stress prediction model for each user. To allow the neural network to learn the temporal dynamics of each individual's baseline biosignal patterns, thus enabling personalization with very few labels, we pre-train a 1-dimensional convolutional neural network (CNN) using self-supervised learning (SSL). We evaluate our method using the Wearable Stress and Affect Detection (WESAD) dataset. We fine-tune the pre-trained networks to the stress prediction task and compare against equivalent models without any self-supervised pre-training. We discover that embeddings learned using our pre-training method outperform supervised baselines with significantly fewer labeled data points: the models trained with SSL require less than 30% of the labels to match the performance of equivalent models without personalized SSL. This personalized learning method can enable precision health systems which are tailored to each subject and require few annotations by the end user, thus allowing for the mobile sensing of increasingly complex, heterogeneous, and subjective outcomes such as stress.

Personalized Prediction of Recurrent Stress Events Using Self-Supervised Learning on Multimodal Time-Series Data

Jul 07, 2023

Abstract: Chronic stress can significantly affect physical and mental health. The advent of wearable technology allows for the tracking of physiological signals, potentially leading to innovative stress prediction and intervention methods. However, challenges such as label scarcity and data heterogeneity render stress prediction difficult in practice. To counter these issues, we have developed a multimodal personalized stress prediction system using wearable biosignal data. We employ self-supervised learning (SSL) to pre-train the models on each subject's data, allowing the models to learn the baseline dynamics of the participant's biosignals prior to fine-tuning on the stress prediction task. We test our model on the Wearable Stress and Affect Detection (WESAD) dataset, demonstrating that our SSL models outperform non-SSL models while utilizing less than 5% of the annotations. These results suggest that our approach can personalize stress prediction to each user with minimal annotations. This paradigm has the potential to enable personalized prediction of a variety of recurring health events using complex multimodal data streams.
