Tania Panayiotou

Adaptive Autopilot: Constrained DRL for Diverse Driving Behaviors

Jul 02, 2024

Abstract: In pursuit of autonomous vehicles, achieving human-like driving behavior is vital. This study introduces adaptive autopilot (AA), a framework utilizing constrained deep reinforcement learning (C-DRL). AA aims to safely emulate human driving to reduce the necessity for driver intervention. Focusing on the car-following scenario, the process involves (i) extracting data from the highD natural driving study and categorizing it into three driving styles using a rule-based classifier; (ii) employing deep neural network (DNN) regressors to predict human-like acceleration across styles; and (iii) using C-DRL, specifically the soft actor-critic Lagrangian technique, to learn human-like safe driving policies. Results indicate effectiveness in each step: the rule-based classifier distinguishes driving styles, the regressor model predicts acceleration more accurately than traditional car-following models, and the C-DRL agents learn optimal policies for human-like driving across styles.
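As a rough illustration of the Lagrangian idea behind constrained DRL, the sketch below shows a projected dual-ascent update of a Lagrange multiplier and the penalized reward it induces. It is a generic sketch and not the paper's implementation: the function names, the learning rate, and the cost limit are all assumptions made for illustration, and the actor-critic networks are omitted entirely.

```python
def update_multiplier(lam, avg_cost, cost_limit, lr=0.05):
    """One projected dual-ascent step on the Lagrange multiplier.

    The multiplier grows while the average safety cost exceeds the limit
    and shrinks otherwise; it is clipped at zero so it stays non-negative.
    All argument names and defaults are hypothetical.
    """
    return max(0.0, lam + lr * (avg_cost - cost_limit))


def penalized_reward(reward, cost, lam):
    """Reward seen by the policy once the constraint is folded in."""
    return reward - lam * cost


if __name__ == "__main__":
    lam = 0.0
    # Hypothetical per-episode average costs, compared against a limit of 0.1.
    for avg_cost in [0.3, 0.25, 0.15, 0.05]:
        lam = update_multiplier(lam, avg_cost, cost_limit=0.1)
        print(f"avg_cost={avg_cost:.2f} -> lambda={lam:.4f}")
```

The multiplier acts as an adaptive penalty weight: unsafe behavior raises it, which makes the cost term weigh more heavily in subsequent policy updates.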

Edge-Assisted ML-Aided Uncertainty-Aware Vehicle Collision Avoidance at Urban Intersections

Apr 22, 2024

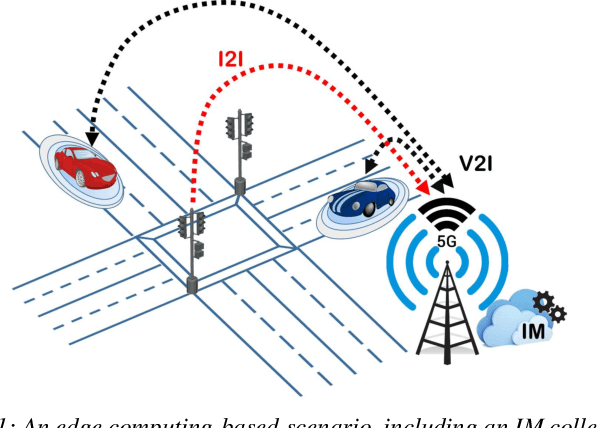

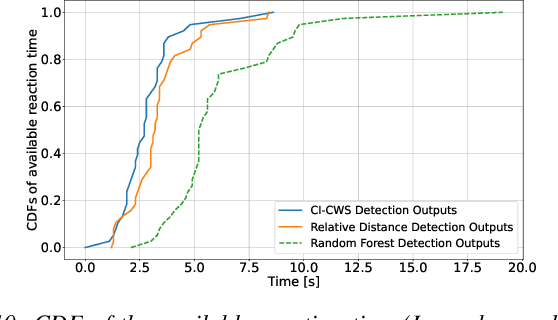

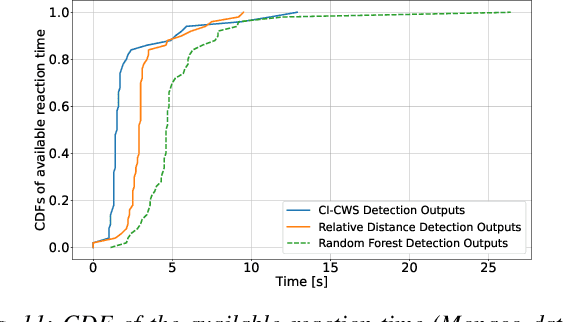

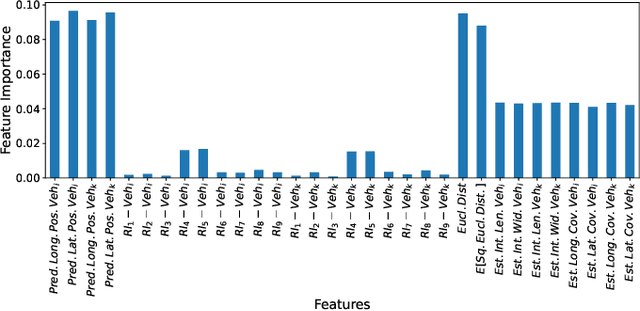

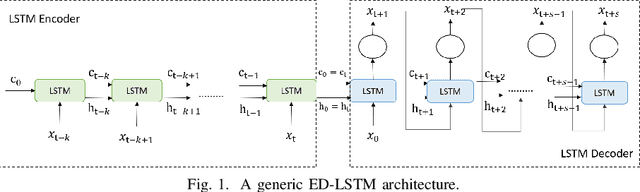

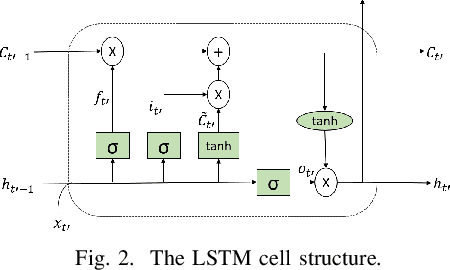

Abstract: Intersection crossing represents one of the most dangerous sections of the road infrastructure, and Connected Vehicles (CVs) can serve as a revolutionary solution to the problem. In this work, we present a novel framework that preemptively detects collisions at urban crossroads, exploiting the Multi-access Edge Computing (MEC) platform of 5G networks. At the MEC, an Intersection Manager (IM) collects information from both vehicles and the road infrastructure to create a holistic view of the area of interest. Based on the historical data collected, the IM leverages the capabilities of an encoder-decoder recurrent neural network to predict, with high accuracy, the vehicles' future trajectories. However, because accuracy alone is not a sufficient measure of how much a model can be trusted, trajectory predictions are additionally associated with a measure of uncertainty to support confident collision forecasting and avoidance. Hence, contrary to any other approach in the state of the art, an uncertainty-aware collision prediction framework is developed that is shown to detect well in advance (and with high reliability) whether two vehicles are on a collision course. Subsequently, collision detection triggers a number of alarms that signal the colliding vehicles to brake. Under real-world settings, thanks to the preemptive capabilities of the proposed approach, all the simulated imminent dangers are averted.
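One way to picture uncertainty-aware collision checking is sketched below. It is a simplified sketch under stated assumptions, not the framework from the abstract: it assumes each predicted position carries a single isotropic standard deviation, and the safety radius, the factor `k`, and all function names are hypothetical.

```python
import math


def collision_risk(pos_a, pos_b, sigma_a, sigma_b, safety_radius=2.0, k=2.0):
    """Flag a possible collision between two predicted positions.

    The predicted distance is shrunk by k combined standard deviations,
    so more uncertain predictions raise an alarm earlier (an assumption
    of this sketch, not a rule taken from the paper).
    """
    dist = math.dist(pos_a, pos_b)
    margin = k * math.sqrt(sigma_a ** 2 + sigma_b ** 2)
    return dist - margin < safety_radius


def first_alarm_step(traj_a, traj_b, sig_a, sig_b, **kwargs):
    """Return the first prediction step that raises an alarm, or None."""
    steps = zip(traj_a, traj_b, sig_a, sig_b)
    for t, (pa, pb, sa, sb) in enumerate(steps):
        if collision_risk(pa, pb, sa, sb, **kwargs):
            return t
    return None


if __name__ == "__main__":
    # Hypothetical predicted trajectories (metres) for two vehicles.
    traj_a = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
    traj_b = [(10.0, 10.0), (10.0, 5.0), (10.0, 1.0)]
    sigmas = [0.5, 0.5, 0.5]
    print(first_alarm_step(traj_a, traj_b, sigmas, sigmas))
```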

Multi-Step Traffic Prediction for Multi-Period Planning in Optical Networks

Apr 12, 2024

Abstract: A multi-period planning framework is proposed that exploits multi-step ahead traffic predictions to address service overprovisioning and improve adaptability to traffic changes, while ensuring the necessary quality-of-service (QoS) levels. An encoder-decoder deep learning model is initially leveraged for multi-step ahead prediction by analyzing real traffic traces. This information is then exploited by multi-period planning heuristics to efficiently utilize available network resources while minimizing undesired service disruptions (caused by lightpath re-allocations), with these heuristics outperforming a single-step ahead prediction approach.

Machine Learning for Real-Time Anomaly Detection in Optical Networks

Jun 19, 2023

Abstract: This work proposes a real-time anomaly detection scheme that leverages the multi-step ahead prediction capabilities of encoder-decoder (ED) deep learning models with recurrent units. Specifically, an encoder-decoder is used to model soft-failure evolution over a long future horizon (i.e., for several days ahead) by analyzing past quality-of-transmission (QoT) observations. This information is subsequently used for real-time anomaly detection (e.g., of attack incidents), as the knowledge of how the QoT is expected to evolve allows capturing unexpected network behavior. For anomaly detection, a statistical hypothesis testing scheme is used, alleviating the limitations of the supervised learning (SL) and unsupervised learning (UL) schemes usually applied for this purpose. Indicatively, the proposed scheme eliminates the need for labeled anomalies, required when SL is applied, and the need to analyze entire datasets online to identify abnormal instances (i.e., UL). Overall, it is shown that by utilizing QoT evolution information, the proposed approach can effectively detect abnormal deviations in real time. Importantly, it is shown that the information concerning soft-failure evolution (i.e., QoT predictions) is essential to accurately detect anomalies.
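The abstract does not say which hypothesis test is used, so the sketch below assumes a simple z-test on the residual between observed and predicted QoT. The residual statistics are estimated from anomaly-free data, and a new observation is flagged when its residual is unlikely under that model. The function names, the sample values, and the critical value of 3.0 are illustrative assumptions.

```python
import statistics


def fit_residual_model(predicted, observed):
    """Estimate mean and standard deviation of prediction residuals
    from a period assumed to be free of anomalies."""
    residuals = [o - p for p, o in zip(predicted, observed)]
    return statistics.mean(residuals), statistics.stdev(residuals)


def is_anomaly(pred, obs, mu, sigma, z_crit=3.0):
    """Reject the 'normal operation' hypothesis when the standardized
    residual exceeds the critical value (hypothetical default: 3.0)."""
    z = (obs - pred - mu) / sigma
    return abs(z) > z_crit


if __name__ == "__main__":
    # Hypothetical QoT values; predictions would come from the ED model.
    predicted = [10.0, 10.0, 10.0, 10.0, 10.0]
    observed = [10.1, 9.9, 10.0, 10.2, 9.8]
    mu, sigma = fit_residual_model(predicted, observed)
    print(is_anomaly(10.0, 10.1, mu, sigma))
    print(is_anomaly(10.0, 8.0, mu, sigma))
```

Because the test only needs predictions and a residual model, it requires neither labeled anomalies nor a pass over the full dataset at detection time, which matches the motivation given in the abstract.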

Modeling Soft-Failure Evolution for Triggering Timely Repair with Low QoT Margins

Aug 30, 2022

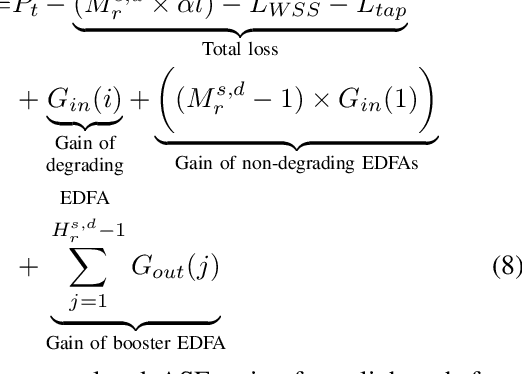

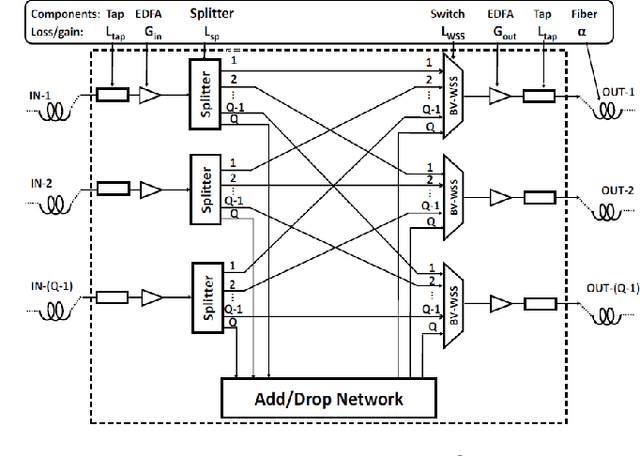

Abstract: In this work, the capabilities of an encoder-decoder learning framework are leveraged to predict soft-failure evolution over a long future horizon. This enables the triggering of timely repair actions with low quality-of-transmission (QoT) margins before a costly hard failure occurs, ultimately reducing the frequency of repair actions and associated operational expenses. Specifically, it is shown that the proposed scheme is capable of triggering a repair action several days prior to the expected day of a hard failure, contrary to soft-failure detection schemes utilizing rule-based fixed QoT margins, which may lead either to premature repair actions (i.e., several months before the event of a hard failure) or to repair actions that are taken too late (i.e., after the hard failure has occurred). Both frameworks are evaluated and compared for a lightpath established in an elastic optical network, where soft-failure evolution can be modeled by analyzing bit-error-rate information monitored at the coherent receivers.

Centralized and Distributed Machine Learning-Based QoT Estimation for Sliceable Optical Networks

Sep 27, 2019

Abstract: Dynamic network slicing has emerged as a promising and fundamental framework for meeting 5G's diverse use cases. As machine learning (ML) is expected to play a pivotal role in the efficient control and management of these networks, in this work we examine the ML-based Quality-of-Transmission (QoT) estimation problem under the dynamic network slicing context, where each slice has to meet a different QoT requirement. We examine ML-based QoT frameworks with the aim of finding QoT models that are fine-tuned according to the diverse QoT requirements. Centralized and distributed frameworks are examined and compared according to their accuracy and training time. We show that the distributed QoT models outperform the centralized QoT model, especially as the number of diverse QoT requirements increases.
