Tammy Riklin Raviv

HyperFusion: A Hypernetwork Approach to Multimodal Integration of Tabular and Medical Imaging Data for Predictive Modeling

Mar 20, 2024

Abstract: The integration of diverse clinical modalities such as medical imaging and the tabular data obtained from patients' Electronic Health Records (EHRs) is a crucial aspect of modern healthcare. The integrative analysis of multiple sources can provide a comprehensive understanding of a patient's condition and can enhance diagnoses and treatment decisions. Deep Neural Networks (DNNs) consistently showcase outstanding performance in a wide range of multimodal tasks in the medical domain. However, the complex endeavor of effectively merging medical imaging with clinical, demographic and genetic information represented as numerical tabular data remains a highly active and ongoing research pursuit. We present a novel framework based on hypernetworks to fuse clinical imaging and tabular data by conditioning the image processing on the EHR's values and measurements. This approach aims to leverage the complementary information present in these modalities to enhance the accuracy of various medical applications. We demonstrate the strength and the generality of our method on two different brain Magnetic Resonance Imaging (MRI) analysis tasks, namely, brain age prediction conditioned by the subject's sex, and multiclass Alzheimer's Disease (AD) classification conditioned by tabular data. We show that our framework outperforms both single-modality models and state-of-the-art MRI-tabular data fusion methods. The code, enclosed with this manuscript, will be made publicly available.
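The conditioning idea in this abstract can be illustrated with a minimal sketch. It assumes the simplest possible setting: a single linear "primary" layer acting on image features, whose weights and bias are generated from the tabular vector by a linear hypernetwork. The class name `HyperLinear`, the dimensions, and the initialization are hypothetical and are not taken from the paper, whose actual architecture is not described here.

```python
import random


def linear(weights, bias, x):
    """Apply y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


class HyperLinear:
    """Hypothetical primary linear layer whose parameters come from a hypernetwork.

    The hypernetwork here is itself a single linear map from the tabular
    vector to the flattened weights and bias of the primary layer.
    """

    def __init__(self, tab_dim, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        n_params = out_dim * in_dim + out_dim  # primary weights + primary bias
        self.h_w = [[rng.gauss(0.0, 0.1) for _ in range(tab_dim)]
                    for _ in range(n_params)]
        self.h_b = [0.0] * n_params

    def generate(self, tabular):
        """Map a tabular vector to the primary layer's (weights, bias)."""
        flat = linear(self.h_w, self.h_b, tabular)
        split = self.out_dim * self.in_dim
        weights = [flat[r * self.in_dim:(r + 1) * self.in_dim]
                   for r in range(self.out_dim)]
        return weights, flat[split:]

    def forward(self, image_features, tabular):
        """Process image features with parameters conditioned on tabular data."""
        weights, bias = self.generate(tabular)
        return linear(weights, bias, image_features)


layer = HyperLinear(tab_dim=2, in_dim=3, out_dim=2)
features = [1.0, 2.0, 3.0]
out_a = layer.forward(features, [1.0, 0.0])  # e.g. one value of a binary attribute
out_b = layer.forward(features, [0.0, 1.0])  # e.g. the other value
```

The same image features yield different outputs for different tabular inputs, because the tabular data selects the parameters that process the image rather than being concatenated to it.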

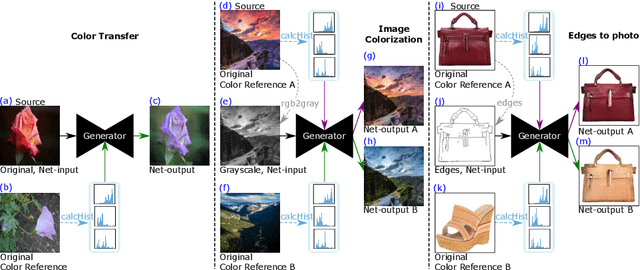

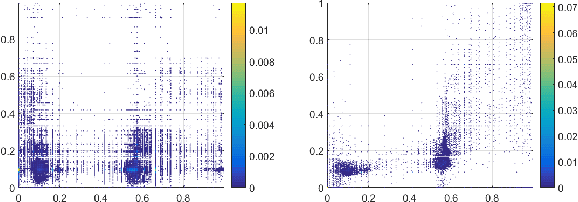

DeepHist: Differentiable Joint and Color Histogram Layers for Image-to-Image Translation

May 06, 2020

Abstract: We present DeepHist, a novel Deep Learning framework for augmenting a network with histogram layers, and demonstrate its strength by addressing image-to-image translation problems. Specifically, given an input image and a reference color distribution, we aim to generate an output image with the structural appearance (content) of the input (source) yet with the colors of the reference. The key idea is a new technique for a differentiable construction of joint and color histograms of the output images. We further define a color distribution loss based on the Earth Mover's Distance between the output's and the reference's color histograms and a Mutual Information loss based on the joint histograms of the source and the output images. Promising results are shown for the tasks of color transfer, image colorization and edges $\rightarrow$ photo, where the color distribution of the output image is controlled. Comparisons to Pix2Pix and CycleGAN are shown.
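To make the notion of a differentiable histogram and a histogram-based loss concrete, here is a minimal sketch under stated assumptions. It uses triangular kernels for soft binning and the cumulative-difference form of the 1D Earth Mover's Distance; the abstract does not specify the kernel or the exact loss, so the functions `soft_histogram` and `emd_1d` are illustrative names, not the paper's implementation.

```python
def soft_histogram(values, bins=4):
    """Soft histogram of values in [0, 1].

    Each value contributes to nearby bins with a triangular weight, so the
    bin counts vary continuously with the input (unlike hard binning).
    The triangular kernel is an assumption made for this sketch.
    """
    width = 1.0 / bins
    centers = [(k + 0.5) * width for k in range(bins)]
    hist = [0.0] * bins
    for v in values:
        for k, c in enumerate(centers):
            hist[k] += max(0.0, 1.0 - abs(v - c) / width)
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def emd_1d(p, q):
    """1D Earth Mover's Distance between two normalized histograms.

    With unit distance between adjacent bins, this equals the sum of
    absolute differences between the two cumulative distributions.
    """
    cdf_diff, total = 0.0, 0.0
    for pi, qi in zip(p, q):
        cdf_diff += pi - qi
        total += abs(cdf_diff)
    return total


output_hist = soft_histogram([0.125, 0.25, 0.4])
reference_hist = soft_histogram([0.8, 0.875, 0.9])
color_loss = emd_1d(output_hist, reference_hist)
```

In a training setting the same computation would be written with an automatic-differentiation library so that the loss gradient flows back to the pixel values.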

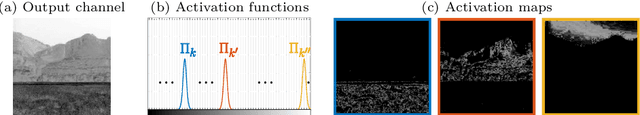

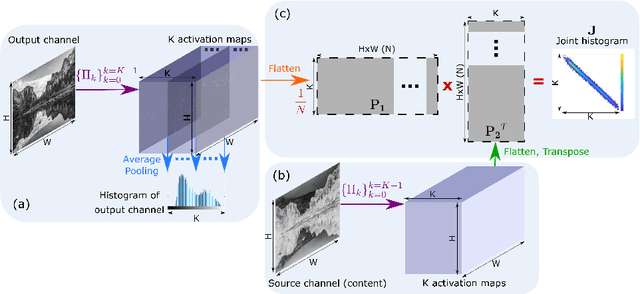

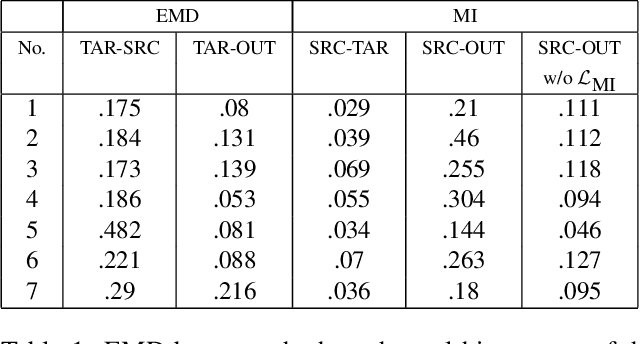

Hue-Net: Intensity-based Image-to-Image Translation with Differentiable Histogram Loss Functions

Dec 12, 2019

Abstract: We present the Hue-Net - a novel Deep Learning framework for Intensity-based Image-to-Image Translation. The key idea is a new technique termed network augmentation which allows a differentiable construction of intensity histograms from images. We further introduce differentiable representations of (1D) cyclic and joint (2D) histograms and use them for defining loss functions based on cyclic Earth Mover's Distance (EMD) and Mutual Information (MI). While the Hue-Net can be applied to several image-to-image translation tasks, we choose to demonstrate its strength on color transfer problems, where the aim is to paint a source image with the colors of a different target image. Note that the desired output image does not exist and therefore cannot be used for supervised pixel-to-pixel learning. This is accomplished by using the HSV color-space and defining an intensity-based loss that is built on the EMD between the cyclic hue histograms of the output and the target images. To enforce color-free similarity between the source and the output images, we define a semantic-based loss by a differentiable approximation of the MI of these images. The incorporation of histogram loss functions in addition to an adversarial loss enables the construction of semantically meaningful and realistic images. Promising results are presented for different datasets.
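The two quantities named in this abstract, a cyclic EMD between hue histograms and the MI of a joint histogram, can be sketched as follows. This is a minimal illustration assuming already-normalized histograms; the cyclic EMD uses the known median-shift formulation for circular histograms, which may differ from the exact differentiable form used in the paper. The function names are hypothetical.

```python
import math
from statistics import median


def cyclic_emd(p, q):
    """EMD between two normalized histograms defined on a circle (e.g. hue).

    The cumulative difference is shifted by its median, which minimizes the
    total transport cost when the first and last bins are neighbors.
    """
    diffs, running = [], 0.0
    for pi, qi in zip(p, q):
        running += pi - qi
        diffs.append(running)
    mu = median(diffs)
    return sum(abs(d - mu) for d in diffs)


def mutual_information(joint):
    """Mutual information (in nats) of a normalized 2D joint histogram."""
    px = [sum(row) for row in joint]          # marginal over rows
    py = [sum(col) for col in zip(*joint)]    # marginal over columns
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            if pxy > 0:
                mi += pxy * math.log(pxy / (px[i] * py[j]))
    return mi


# Mass in the first vs. the last hue bin: one step apart on the circle.
hue_loss = cyclic_emd([1, 0, 0, 0], [0, 0, 0, 1])
# Perfectly dependent intensities carry log(2) nats of shared information.
shared = mutual_information([[0.5, 0.0], [0.0, 0.5]])
```

On a line the two histograms in the first example would be three bins apart; treating hue as cyclic reduces the distance to a single step, which is why the cyclic form matters for HSV hue.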

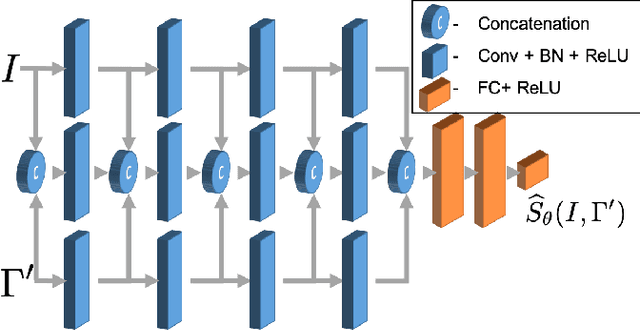

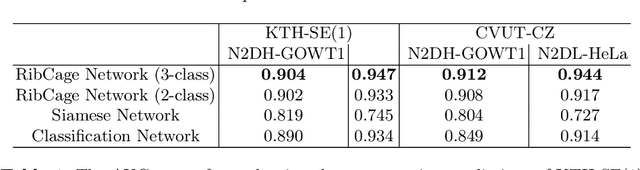

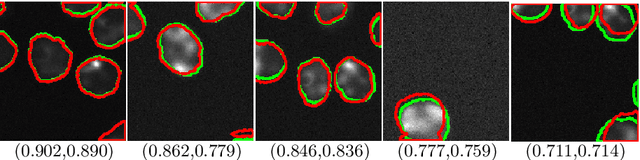

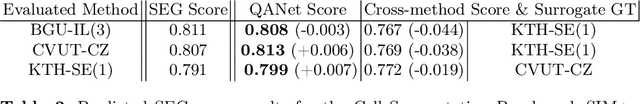

QANet - Quality Assurance Network for Microscopy Cell Segmentation

Apr 23, 2019

Abstract: Tools and methods for automatic image segmentation are rapidly developing, each with its own strengths and weaknesses. While these methods are designed to be as general as possible, there are no guarantees for their performance on new data. The choice between methods is usually based on benchmark performance, whereas the data in the benchmark can be significantly different from that of the user. We introduce a novel Deep Learning method which, given an image and a proposed corresponding segmentation, estimates the Intersection over Union measure (IoU) with respect to the unknown ground truth. We refer to this method as a Quality Assurance Network - QANet. The QANet is designed to give the user an estimate of the segmentation quality on the user's own private data without the need for human inspection or labelling. It is based on the RibCage Network architecture, originally proposed as a discriminator in an adversarial network framework. Promising IoU prediction results are demonstrated based on the Cell Segmentation Benchmark.
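For reference, the quantity the network estimates can be computed directly when a ground-truth mask is available. The sketch below assumes binary masks stored as nested lists; the function name `iou` and the convention of returning 1.0 for two empty masks are choices made for this illustration, not details from the paper.

```python
def iou(predicted, truth):
    """Intersection over Union of two binary masks given as nested lists.

    Returns 1.0 when both masks are empty (a convention assumed here).
    """
    intersection, union = 0, 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return intersection / union if union else 1.0


proposed = [[1, 1, 0],
            [0, 1, 0]]
ground_truth = [[1, 0, 0],
                [0, 1, 1]]
score = iou(proposed, ground_truth)  # 2 shared pixels out of 4 covered
```

A network such as the one described would be trained to regress this score from the image and the proposed mask alone, so that it can be applied where no ground truth exists.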

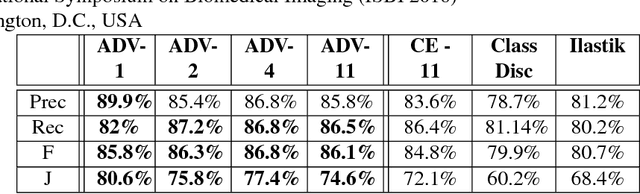

Microscopy Cell Segmentation via Adversarial Neural Networks

Sep 13, 2018

Abstract: We present a novel method for cell segmentation in microscopy images which is inspired by the Generative Adversarial Neural Network (GAN) approach. Our framework is built on a pair of competing artificial neural networks with a unique architecture, termed Rib Cage, which are trained simultaneously and together define a min-max game resulting in an accurate segmentation of a given image. Our approach has two main strengths: similar to the GAN, the method does not require a formulation of a loss function for the optimization process, and this allows training on a limited amount of annotated data in a weakly supervised manner. Promising segmentation results on real fluorescent microscopy data are presented. The code is freely available at: https://github.com/arbellea/DeepCellSeg.git

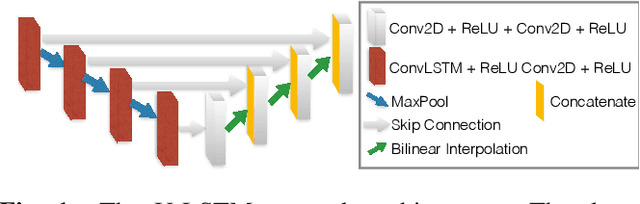

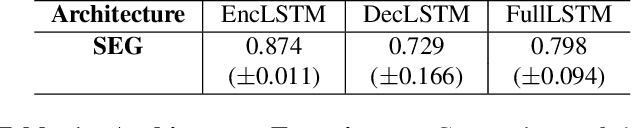

Microscopy Cell Segmentation via Convolutional LSTM Networks

May 29, 2018

Abstract: Live cell microscopy sequences exhibit complex spatial structures and complicated temporal behaviour, making their analysis a challenging task. Considering the cell segmentation problem, which plays a significant role in the analysis, the spatial properties of the data can be captured using Convolutional Neural Networks (CNNs). Recent approaches show promising segmentation results using convolutional encoder-decoders such as the U-Net. Nevertheless, these methods are limited by their inability to incorporate temporal information, which can facilitate segmentation of individual touching cells or of cells that are partially visible. In order to accommodate cell dynamics, we propose a novel segmentation approach which integrates Convolutional Long Short Term Memory (C-LSTM) with the U-Net. The network's unique architecture allows it to capture multi-scale, compact, spatio-temporal encoding in the C-LSTM's memory units. Promising results, surpassing the state-of-the-art, are presented. The code is freely available at: TBD

Fiber-Flux Diffusion Density for White Matter Tracts Analysis: Application to Mild Anomalies Localization in Contact Sports Players

Sep 18, 2017

Abstract: We present the concept of fiber-flux density for locally quantifying white matter (WM) fiber bundles. By combining scalar diffusivity measures (e.g., fractional anisotropy) with fiber-flux measurements, we define new local descriptors called Fiber-Flux Diffusion Density (FFDD) vectors. Applying each descriptor throughout fiber bundles allows along-tract coupling of a specific diffusion measure with geometrical properties, such as fiber orientation and coherence. A key step in the proposed framework is the construction of an FFDD dissimilarity measure for sub-voxel alignment of fiber bundles, based on the fast marching method (FMM). The obtained aligned WM tract-profiles enable meaningful inter-subject comparisons and group-wise statistical analysis. We demonstrate our method using two different datasets of contact sports players. Along-tract pairwise comparison as well as group-wise analysis, with respect to non-player healthy controls, reveal significant and spatially-consistent FFDD anomalies. Comparing our method with along-tract FA analysis shows improved sensitivity to subtle structural anomalies in football players over standard FA measurements.
