Mor Avi-Aharon

DeepHist: Differentiable Joint and Color Histogram Layers for Image-to-Image Translation

May 06, 2020

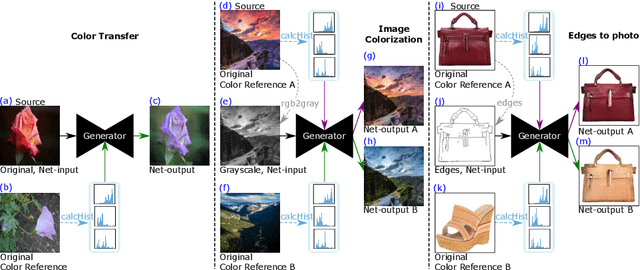

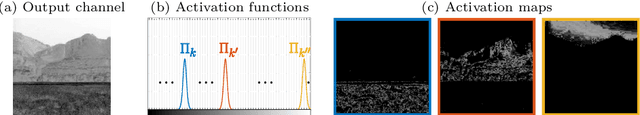

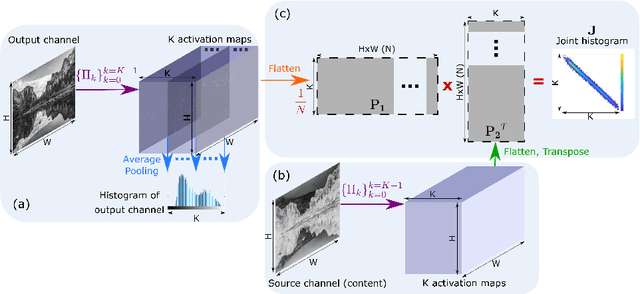

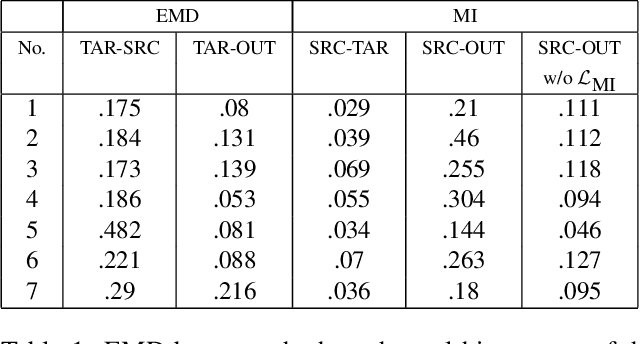

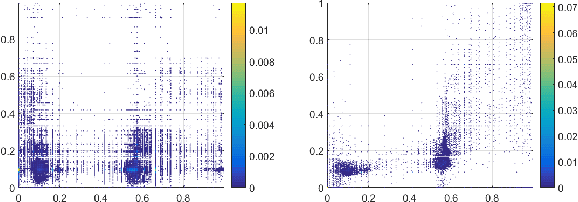

Abstract: We present DeepHist, a novel deep learning framework for augmenting a network with histogram layers, and demonstrate its strength on image-to-image translation problems. Specifically, given an input image and a reference color distribution, we aim to generate an output image with the structural appearance (content) of the input (source) but with the colors of the reference. The key idea is a new technique for the differentiable construction of joint and color histograms of the output images. We further define a color distribution loss based on the Earth Mover's Distance between the color histograms of the output and the reference, and a Mutual Information loss based on the joint histograms of the source and output images. Promising results are shown for the tasks of color transfer, image colorization, and edges $\rightarrow$ photo, where the color distribution of the output image is controlled. Comparisons to Pix2Pix and CycleGAN are shown.
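The abstract does not say how the histograms are made differentiable, so the following is only a minimal sketch of one common approach: each value contributes to a bin through a smooth window (here a difference of two sigmoids) instead of a hard count, and the Earth Mover's Distance between two 1D histograms is computed from their cumulative sums. The function names, the sigmoid kernel, the `sharpness` parameter, and the bin count are all assumptions made for illustration, not details taken from the paper. Plain Python is used for readability; in a deep learning framework the same arithmetic would be written with tensor operations so that gradients can flow to the pixel values.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def soft_histogram(values, num_bins=8, sharpness=50.0):
    """Smooth histogram of values in [0, 1], normalized to sum to 1.

    Each value adds sigmoid(s * (v - left)) - sigmoid(s * (v - right))
    to a bin, a smooth stand-in for the hard "is v inside this bin" test.
    The kernel choice is an assumption, not the paper's stated method.
    """
    width = 1.0 / num_bins
    hist = [0.0] * num_bins
    for v in values:
        for k in range(num_bins):
            left, right = k * width, (k + 1) * width
            hist[k] += sigmoid(sharpness * (v - left)) - sigmoid(sharpness * (v - right))
    total = sum(hist)
    return [h / total for h in hist]


def emd_1d(p, q):
    """EMD between two normalized 1D histograms, in units of bins.

    For 1D histograms this equals the sum of absolute differences
    between the two cumulative distributions.
    """
    cum, total = 0.0, 0.0
    for a, b in zip(p, q):
        cum += a - b
        total += abs(cum)
    return total


# Hypothetical pixel intensities for an output and a reference image.
output_hist = soft_histogram([0.10, 0.12, 0.15])
reference_hist = soft_histogram([0.90, 0.88])
color_loss = emd_1d(output_hist, reference_hist)
```

Unlike a bin-by-bin comparison, this distance grows with how far the mass has to move, so a dark output is penalized more against a bright reference than against a mid-tone one.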

Hue-Net: Intensity-based Image-to-Image Translation with Differentiable Histogram Loss Functions

Dec 12, 2019

Abstract: We present Hue-Net, a novel deep learning framework for intensity-based image-to-image translation. The key idea is a new technique, termed network augmentation, which allows a differentiable construction of intensity histograms from images. We further introduce differentiable representations of cyclic (1D) and joint (2D) histograms and use them to define loss functions based on cyclic Earth Mover's Distance (EMD) and Mutual Information (MI). While Hue-Net can be applied to several image-to-image translation tasks, we choose to demonstrate its strength on color transfer problems, where the aim is to paint a source image with the colors of a different target image. Note that the desired output image does not exist and therefore cannot be used for supervised pixel-to-pixel learning. Color transfer is instead accomplished by working in the HSV color space and defining an intensity-based loss built on the EMD between the cyclic hue histograms of the output and target images. To enforce color-free similarity between the source and output images, we define a semantic-based loss using a differentiable approximation of the MI of these images. Incorporating histogram loss functions in addition to an adversarial loss enables the construction of semantically meaningful and realistic images. Promising results are presented for different datasets.
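To make the two histogram-based quantities concrete, here is a small sketch that assumes the normalized histograms have already been built. It uses a standard closed form for the EMD on a circle with unit bin spacing (shift the cumulative differences by their median) and the usual definition of MI from a joint histogram. Whether Hue-Net computes these quantities in exactly this way is not stated in the abstract; the function names and the `eps` stabilizer are illustrative assumptions.

```python
import math
import statistics


def cyclic_emd(p, q):
    """EMD between two normalized histograms whose bins lie on a circle.

    Hue wraps around, so the first and last bins are neighbors. With unit
    spacing between adjacent bins, the distance is the minimum over a
    constant shift mu of sum(|C_k - mu|), where C_k are the cumulative
    differences; the minimum is reached at the median of C.
    """
    cum, running = [], 0.0
    for a, b in zip(p, q):
        running += a - b
        cum.append(running)
    mu = statistics.median(cum)
    return sum(abs(c - mu) for c in cum)


def mutual_information(joint, eps=1e-12):
    """MI (in nats) from a normalized 2D joint histogram.

    eps keeps the logarithm finite for empty bins; adding it rather than
    branching on zero keeps the expression smooth.
    """
    px = [sum(row) for row in joint]        # marginal over rows
    py = [sum(col) for col in zip(*joint)]  # marginal over columns
    mi = 0.0
    for i, row in enumerate(joint):
        for j, pxy in enumerate(row):
            mi += pxy * math.log((pxy + eps) / (px[i] * py[j] + eps))
    return mi


# Hypothetical hue histograms: all mass in the first vs. the last bin.
first_bin = [1.0] + [0.0] * 7
last_bin = [0.0] * 7 + [1.0]
hue_loss = cyclic_emd(first_bin, last_bin)

# Hypothetical joint histogram of source and output intensities.
structure_score = mutual_information([[0.5, 0.0], [0.0, 0.5]])
```

The two hue histograms in the example are one bin apart on the circle, whereas a non-cyclic EMD would treat them as seven bins apart. That wrap-around is the reason a cyclic distance suits hue. A loss that rewards structural similarity would typically minimize the negative of the MI value.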
