Takahiro Toizumi

CURVE: CLIP-Utilized Reinforcement Learning for Visual Image Enhancement via Simple Image Processing

May 29, 2025

Abstract: Low-Light Image Enhancement (LLIE) is crucial for improving both human perception and computer vision tasks. This paper addresses two challenges in zero-reference LLIE: obtaining perceptually 'good' images using the Contrastive Language-Image Pre-Training (CLIP) model and maintaining computational efficiency for high-resolution images. We propose CLIP-Utilized Reinforcement learning-based Visual image Enhancement (CURVE). CURVE employs a simple image processing module that adjusts global image tone based on a Bézier curve and estimates its processing parameters iteratively. The estimator is trained by reinforcement learning with rewards designed using CLIP text embeddings. Experiments on low-light and multi-exposure datasets demonstrate the performance of CURVE in terms of enhancement quality and processing speed compared to conventional methods.
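As a rough illustration of the tone-adjustment idea described in this abstract, the sketch below maps intensities through a Bézier curve written in Bernstein form and applies a sequence of such curves one after another. The function names, the cubic degree, and the control values are assumptions made for illustration only; the abstract does not specify the parameterization, and the learned estimator and CLIP-based reward are not modeled here.

```python
from math import comb


def bezier_tone_curve(x, control_points):
    """Map an intensity x in [0, 1] through a Bezier curve in Bernstein form.

    control_points are the curve's output values (hypothetical parameters);
    fixing the first to 0 and the last to 1 keeps black and white unchanged.
    """
    n = len(control_points) - 1
    return sum(
        p * comb(n, k) * (x ** k) * ((1.0 - x) ** (n - k))
        for k, p in enumerate(control_points)
    )


def enhance(pixels, params_per_step):
    """Apply one global tone curve per iteration to every pixel.

    params_per_step stands in for the parameters an estimator would
    predict at each step; here they are simply supplied by the caller.
    """
    out = list(pixels)
    for control_points in params_per_step:
        out = [
            min(1.0, max(0.0, bezier_tone_curve(v, control_points)))
            for v in out
        ]
    return out


# Example: two brightening steps on a dark, flattened grayscale image.
brighten = [0.0, 0.6, 0.9, 1.0]
dark_image = [0.0, 0.05, 0.25, 1.0]
enhanced = enhance(dark_image, [brighten, brighten])
```

Because the curve is global, the cost per step is one polynomial evaluation per pixel, which is consistent with the efficiency motivation stated in the abstract.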

Recognition-Oriented Low-Light Image Enhancement based on Global and Pixelwise Optimization

Jan 08, 2025

Abstract: In this paper, we propose a novel low-light image enhancement method aimed at improving the performance of recognition models. Despite recent advances in deep learning, the recognition of images under low-light conditions remains a challenge. Although existing low-light image enhancement methods have been developed to improve image visibility for human vision, they do not specifically focus on enhancing recognition model performance. Our proposed low-light image enhancement method consists of two key modules: the Global Enhance Module, which adjusts the overall brightness and color balance of the input image, and the Pixelwise Adjustment Module, which refines image features at the pixel level. These modules are trained to enhance input images so that downstream recognition model performance improves. Notably, the proposed method can be applied as a frontend filter to improve low-light recognition performance without requiring retraining of downstream recognition models. Experimental results demonstrate the effectiveness of our method, which improves the performance of pretrained recognition models under low-light conditions.

ERUP-YOLO: Enhancing Object Detection Robustness for Adverse Weather Condition by Unified Image-Adaptive Processing

Nov 05, 2024

Abstract: We propose an image-adaptive object detection method for adverse weather conditions such as fog and low light. Our framework employs differentiable preprocessing filters to perform image enhancement suitable for downstream object detection. Our framework introduces two differentiable filters: a Bézier curve-based pixel-wise (BPW) filter and a kernel-based local (KBL) filter. These filters unify the functions of classical image processing filters and improve object detection performance. We also propose a domain-agnostic data augmentation strategy using the BPW filter. Our method does not require data-specific customization of the filter combinations, parameter ranges, or data augmentation. We evaluate our proposed approach, called Enhanced Robustness by Unified Image Processing (ERUP)-YOLO, by applying it to the YOLOv3 detector. Experiments on adverse weather datasets demonstrate that our proposed filters match or exceed the expressiveness of conventional methods and that ERUP-YOLO achieves superior performance in a wide range of adverse weather conditions, including fog and low-light conditions.

Adaptive Deep Iris Feature Extractor at Arbitrary Resolutions

Jul 11, 2024

Abstract: This paper proposes a deep feature extractor for iris recognition at arbitrary resolutions. Resolution degradation reduces the recognition performance of deep learning models trained on high-resolution images. Using images of various resolutions for training can improve the model's robustness, but it sacrifices recognition performance for high-resolution images. To achieve higher recognition performance at various resolutions, we propose a method of resolution-adaptive feature extraction with automatically switching networks. Our framework includes resolution expert modules specialized for different resolution degradations, including down-sampling and out-of-focus blurring. The framework automatically switches between them depending on the degradation condition of an input image. Lower-resolution experts are trained by knowledge distillation from the high-resolution expert in such a manner that both experts can extract common identity features. We applied our framework to three conventional neural network models. The experimental results show that our method enhances the recognition performance of the conventional methods at low resolution while maintaining their performance at high resolution.

Improving Low-Light Image Recognition Performance Based on Image-adaptive Learnable Module

Jan 12, 2024

Abstract: In recent years, significant progress has been made in image recognition technology based on deep neural networks. However, improving recognition performance under low-light conditions remains a significant challenge. This study addresses the enhancement of recognition model performance in low-light conditions. We propose an image-adaptive learnable module that applies appropriate image processing to input images, and a hyperparameter predictor that forecasts the optimal parameters used in the module. Our proposed approach enhances recognition performance under low-light conditions and can be easily integrated as a front-end filter without the need to retrain existing recognition models designed for low-light conditions. Experiments demonstrate that our proposed method contributes to enhancing image recognition performance under low-light conditions.

Segmentation-free Direct Iris Localization Networks

Oct 19, 2022

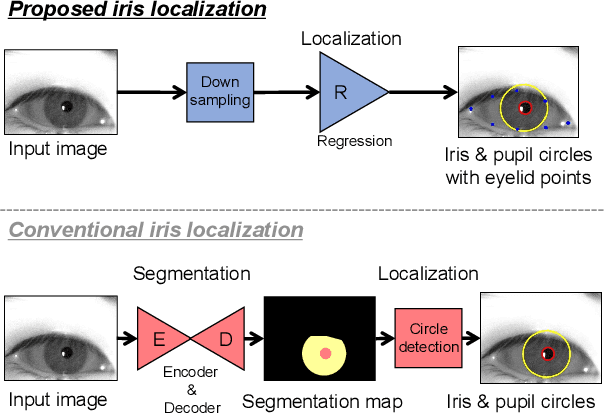

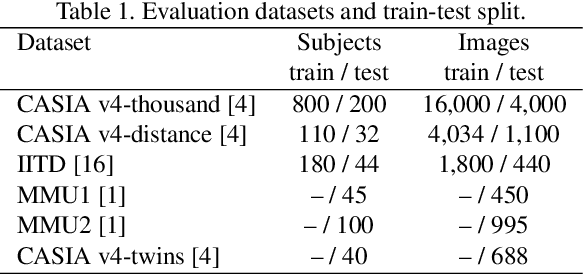

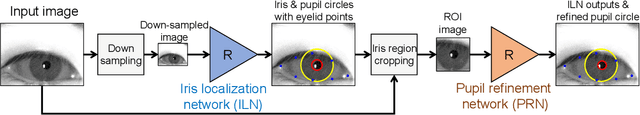

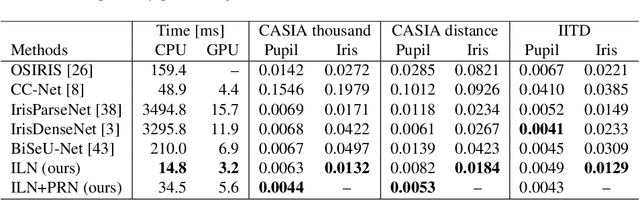

Abstract: This paper proposes an efficient iris localization method that uses neither iris segmentation nor circle fitting. Conventional iris localization methods first extract iris regions by using semantic segmentation methods such as U-Net. Afterward, the inner and outer iris circles are localized using a traditional circle fitting algorithm. However, this approach requires high-resolution encoder-decoder networks for iris segmentation, which makes it computationally expensive. In addition, traditional circle fitting tends to be sensitive to noise in input images and to fitting parameters, which degrades iris recognition performance. To solve these problems, we propose an iris localization network (ILN) that can directly localize pupil and iris circles with eyelid points from a low-resolution iris image. We also introduce a pupil refinement network (PRN) to improve the accuracy of pupil localization. Experimental results show that the combination of ILN and PRN runs in 34.5 ms for one iris image on a CPU, and its localization performance outperforms conventional iris segmentation methods. In addition, generalized evaluation results show that the proposed method is more robust to datasets from different domains than other segmentation methods. Furthermore, we confirm that the proposed ILN and PRN improve iris recognition accuracy.

Fast Eye Detector Using Metric Learning for Iris on The Move

Feb 22, 2022

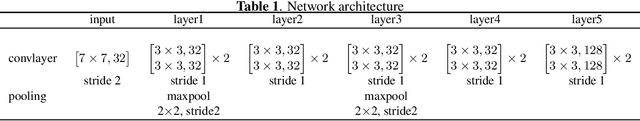

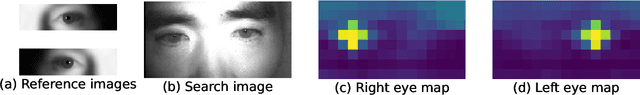

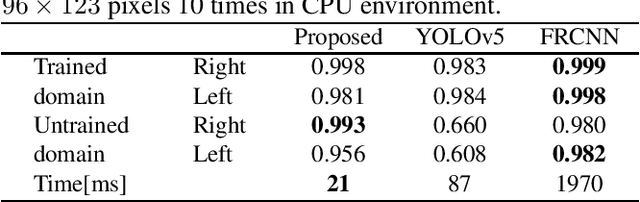

Abstract: This paper proposes a fast eye detection method based on fully-convolutional Siamese networks for iris recognition. The iris on the move system needs to capture high-resolution iris images from a moving subject for iris recognition. Capturing images that contain both eyes at a high frame rate therefore increases the chance of successful iris imaging. In order to output the authentication result in real time, the system requires a fast eye detector that extracts the left and right eye regions from the image. Our method extracts features of a partial face image and a reference eye image using Siamese network frameworks. Similarity heat maps of both eyes are created by calculating the spatial cosine similarity between the extracted features. In addition, we use CosFace as a loss function for training to discriminate the left and right eyes with high accuracy even with a shallow network. Experimental results show that our method trained with CosFace is fast and accurate compared with conventional generic object detection methods.
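The similarity heat map mentioned in this abstract can be illustrated with a minimal sketch. It assumes, purely for illustration, that the reference eye has been reduced to a single feature vector and that the face features form a small grid of vectors; the names `similarity_heat_map` and `argmax_2d` are hypothetical, and the Siamese feature extractors and the CosFace training are not modeled.

```python
from math import sqrt


def cosine(u, v, eps=1e-8):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def similarity_heat_map(feature_map, reference):
    """Compare the reference vector with the feature at every grid cell.

    feature_map is a list of rows, each row a list of feature vectors
    (a stand-in for the features of a partial face image).
    """
    return [[cosine(vec, reference) for vec in row] for row in feature_map]


def argmax_2d(heat_map):
    """Return (row, col) of the most similar location."""
    best = max(
        (value, r, c)
        for r, row in enumerate(heat_map)
        for c, value in enumerate(row)
    )
    return best[1], best[2]


# Toy 2x2 grid of 2-D features and a reference "eye" feature.
features = [
    [[0.0, 1.0], [1.0, 1.0]],
    [[2.0, 0.0], [-1.0, 0.0]],
]
reference_eye = [1.0, 0.0]
heat = similarity_heat_map(features, reference_eye)
eye_location = argmax_2d(heat)
```

Cosine similarity ignores feature magnitude, so the peak depends only on the direction of the feature vectors, which matches the angular formulation that CosFace uses during training.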

Rollable Latent Space for Azimuth Invariant SAR Target Recognition

Apr 20, 2018

Abstract: This paper proposes a rollable latent space (RLS) for azimuth-invariant synthetic aperture radar (SAR) target recognition. Scarce labeled data and limited viewing directions are critical issues in SAR target recognition. The RLS is a designed space in which rolling of latent features corresponds to 3D rotation of an object. Thus, latent features of an arbitrary view can be inferred from those of different views. This characteristic further enables us to augment data from limited views in the RLS. RLS-based classifiers with and without data augmentation and a conventional classifier trained with target front shots are evaluated on untrained target back shots. Results show that the RLS-based classifier with augmentation improves accuracy by 30% compared to the conventional classifier.
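One way to picture the rolling operation in this abstract is as a circular shift of a latent vector. The sketch below assumes, for illustration only, that each latent slot corresponds to one azimuth bin of equal width; the abstract does not state the actual layout of the RLS, and the names `roll` and `augment_views` are hypothetical.

```python
def roll(latent, shift):
    """Circularly shift a latent vector by `shift` slots."""
    n = len(latent)
    shift %= n
    return latent[-shift:] + latent[:-shift]


def augment_views(latent):
    """Generate one rolled latent per azimuth bin from a single view.

    Under the stated assumption, a shift of k slots stands for a rotation
    of k * 360 / len(latent) degrees.
    """
    return [roll(latent, k) for k in range(len(latent))]


# A toy 4-slot latent: a shift of 2 slots would stand for a 180-degree
# rotation, for example a back shot inferred from a front shot.
front = [0.9, 0.1, 0.0, 0.3]
back = roll(front, 2)
all_views = augment_views(front)
```

Augmenting in latent space this way produces training samples for unseen viewing directions without requiring additional labeled SAR images, which is the use the abstract describes.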
