Tahir Hassan

Fair Distillation: Teaching Fairness from Biased Teachers in Medical Imaging

Nov 18, 2024

Abstract: Deep learning has achieved remarkable success in image classification and segmentation tasks. However, fairness concerns persist, as models often exhibit biases that disproportionately affect demographic groups defined by sensitive attributes such as race, gender, or age. Existing bias-mitigation techniques, including Subgroup Re-balancing, Adversarial Training, and Domain Generalization, aim to balance accuracy across demographic groups, but often fail to simultaneously improve overall accuracy, group-specific accuracy, and fairness due to conflicts among these interdependent objectives. We propose the Fair Distillation (FairDi) method, a novel fairness approach that decomposes these objectives by leveraging biased "teacher" models, each optimized for a specific demographic group. These teacher models then guide the training of a unified "student" model, which distills their knowledge to maximize overall and group-specific accuracies, while minimizing inter-group disparities. Experiments on medical imaging datasets show that FairDi achieves significant gains in both overall and group-specific accuracy, along with improved fairness, compared to existing methods. FairDi is adaptable to various medical tasks, such as classification and segmentation, and provides an effective solution for equitable model performance.
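To make the three objectives named in the abstract concrete, here is a minimal sketch of how overall accuracy, group-specific accuracy, and an inter-group disparity could be computed for a classifier. It is not taken from the paper: the function names `group_accuracies` and `fairness_summary` are hypothetical, and using the max-minus-min accuracy gap as the disparity measure is an assumption, since the abstract does not say which fairness metric FairDi reports.

```python
from collections import defaultdict


def group_accuracies(y_true, y_pred, groups):
    """Return a dict mapping each group label to its accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def fairness_summary(y_true, y_pred, groups):
    """Summarize overall accuracy, per-group accuracy, and the accuracy gap.

    The gap (best group accuracy minus worst group accuracy) is an
    illustrative disparity measure, not necessarily the paper's metric.
    """
    per_group = group_accuracies(y_true, y_pred, groups)
    overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
    gap = max(per_group.values()) - min(per_group.values())
    return {"overall": overall, "per_group": per_group, "gap": gap}


# Toy labels, predictions, and group membership (made up for illustration).
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 1]
groups = ["a", "a", "a", "b", "b", "b"]
summary = fairness_summary(y_true, y_pred, groups)
```

In this toy input, group `a` is classified perfectly while group `b` has one error, so the summary shows a nonzero gap even though overall accuracy is high. A method targeting all three objectives would aim to raise `overall` and every entry of `per_group` while shrinking `gap`.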

Artificial Image Tampering Distorts Spatial Distribution of Texture Landmarks and Quality Characteristics

Aug 04, 2022

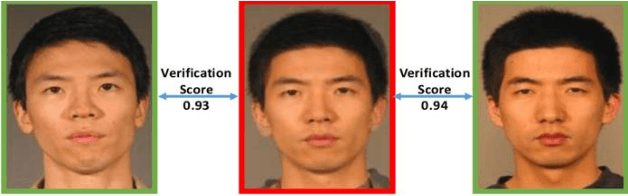

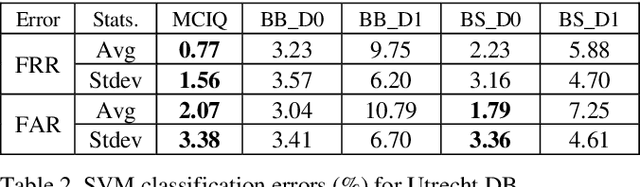

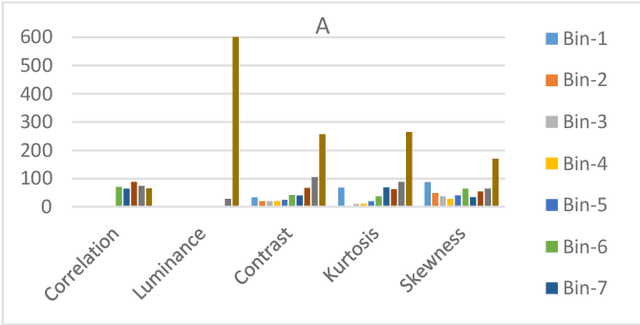

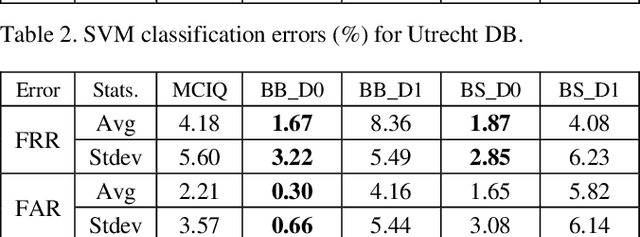

Abstract: Advances in AI-based computer vision have led to significant growth in synthetic image generation and artificial image tampering, with serious implications for unethical exploitation that undermines person identification and could render AI predictions less explainable. Morphing, Deepfake, and other artificial generation of face photographs undermine the reliability of face biometric authentication using different electronic ID documents. Morphed face photographs on e-passports can fool automated border control systems and human guards. This paper extends our previous work on using the persistent homology (PH) of texture landmarks to detect morphing attacks. We demonstrate that artificial image tampering distorts the spatial distribution of texture landmarks (i.e. their PH) as well as that of a set of image quality characteristics. We also demonstrate that the tamper-caused distortion of these two slim feature vectors provides significant potential for building explainable (handcrafted) tamper detectors with low error rates that are suitable for implementation on constrained devices.
