T. Daniel Loveless

Assessing Adversarial Replay and Deep Learning-Driven Attacks on Specific Emitter Identification-based Security Approaches

Aug 07, 2023

Abstract: Specific Emitter Identification (SEI) detects, characterizes, and identifies emitters by exploiting distinct, inherent, and unintentional features in their transmitted signals. Since its introduction, a significant amount of SEI work has been conducted; however, most of it assumes the emitters are passive and that their identifying signal features are immutable and challenging to mimic. This assumption suggests the emitters are unwilling or unable to develop and implement effective SEI countermeasures. However, Deep Learning (DL) has been shown capable of learning emitter-specific features directly from raw in-phase and quadrature (IQ) signal samples, and Software-Defined Radios (SDRs) can manipulate those features. Based on these capabilities, it is fair to question the ease with which an emitter can effectively mimic the SEI features of another emitter or manipulate its own to hinder or defeat SEI. This work considers SEI mimicry using three signal-feature mimicking countermeasures; off-the-shelf DL; two SDRs of different size, weight, power, and cost (SWaP-C); handcrafted and DL-based SEI processes; and a coffee shop deployment. Our results show that off-the-shelf DL algorithms and SDRs enable SEI mimicry; however, adversary success is hindered by the use of decoy emitter preambles, the use of a denoising autoencoder, and SDR SWaP-C constraints.

Improving RF-DNA Fingerprinting Performance in an Indoor Multipath Environment Using Semi-Supervised Learning

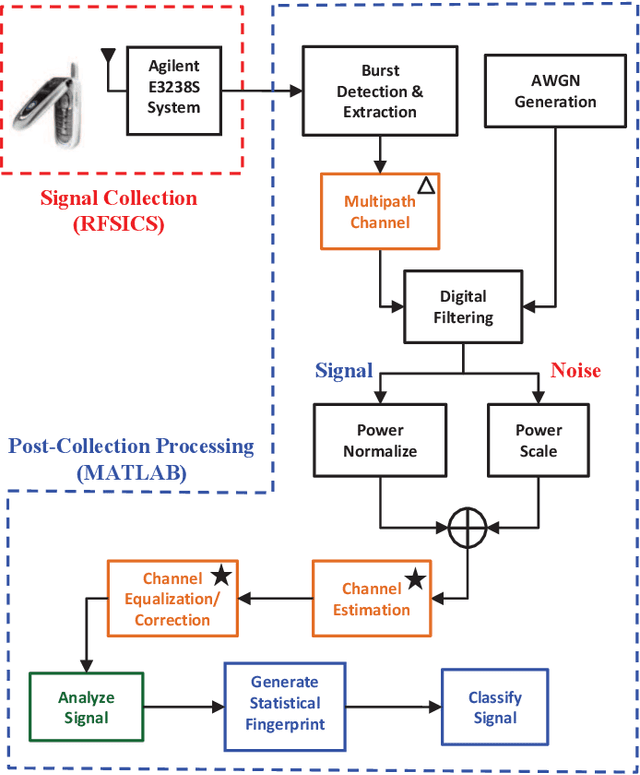

Apr 02, 2023

Abstract: The number of Internet of Things (IoT) deployments is expected to reach 75.4 billion by 2025. Roughly 70% of all IoT devices employ weak or no encryption, putting them and their connected infrastructure at risk of attack by devices that are wrongly authenticated or not authenticated at all. A physical layer security approach, known as Specific Emitter Identification (SEI), has been proposed and is being pursued as a viable IoT security mechanism. SEI is advantageous because it is a passive technique that exploits inherent and distinct features that are unintentionally added to the signal by the IoT Radio Frequency (RF) front-end. Because SEI passively exploits unintentional signal features, it requires no modification of the IoT device, which makes it ideal for existing and future IoT deployments. Despite the amount of SEI research conducted, some challenges must be addressed before SEI is a viable IoT security approach. One challenge is the extraction of SEI features from signals collected under multipath fading conditions. Multipath corrupts the inherent SEI features used to discriminate one IoT device from another, thus degrading authentication performance and increasing the chance of attack. This work presents two semi-supervised Deep Learning (DL) equalization approaches and compares their performance with the current state of the art. The two approaches are the Conditional Generative Adversarial Network (CGAN) and the Joint Convolutional Auto-Encoder and Convolutional Neural Network (JCAECNN). Both approaches learn the channel distribution to enable multipath correction while simultaneously preserving the features exploited by SEI. CGAN and JCAECNN performance is assessed using a Rayleigh fading channel under degrading signal-to-noise ratio (SNR), up to thirty-two IoT devices, and two publicly available signal sets. The JCAECNN improves SEI performance by 10% beyond that of the current state of the art.
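The multipath condition described above can be simulated in a few lines. The sketch below is a hypothetical model, not the channel configuration used in the paper: it passes complex IQ samples through a tapped-delay-line Rayleigh fading channel and adds white Gaussian noise at a chosen SNR. The exponential power-delay profile, one-sample path spacing, block-fading assumption, and the function name `rayleigh_multipath` are all illustrative assumptions.

```python
import cmath
import math
import random


def rayleigh_multipath(samples, num_paths, snr_db, rng):
    """Pass complex IQ samples through an L-path Rayleigh channel and add AWGN.

    Hypothetical model: one-sample spacing between paths, an exponentially
    decaying power-delay profile normalized to unit total power, and taps
    held constant over the burst (block fading).
    """
    powers = [math.exp(-l) for l in range(num_paths)]
    total = sum(powers)
    powers = [p / total for p in powers]
    # Each tap is zero-mean circular complex Gaussian, so its magnitude is Rayleigh.
    taps = [complex(rng.gauss(0.0, math.sqrt(p / 2)), rng.gauss(0.0, math.sqrt(p / 2)))
            for p in powers]
    # Tapped-delay-line (FIR) convolution, truncated to the input length.
    faded = [sum(taps[l] * samples[n - l] for l in range(num_paths) if n - l >= 0)
             for n in range(len(samples))]
    # Scale white complex Gaussian noise to the requested SNR.
    signal_power = sum(abs(s) ** 2 for s in faded) / len(faded)
    noise_power = signal_power / 10 ** (snr_db / 10)
    sigma = math.sqrt(noise_power / 2)
    received = [s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for s in faded]
    return received, taps


# Example: a random QPSK burst through a two-path channel at 18 dB SNR.
rng = random.Random(0)
tx = [cmath.exp(1j * (math.pi / 4 + math.pi / 2 * rng.randrange(4))) for _ in range(64)]
rx, h = rayleigh_multipath(tx, num_paths=2, snr_db=18.0, rng=rng)
```

Sweeping `snr_db` downward reproduces the "degrading SNR" style of evaluation, and each received sample is a mix of the current and delayed symbols, which is the distortion an equalizer must undo before fingerprint features are extracted.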

Preprint: Radio Identity Verification-based IoT Security Using RF-DNA Fingerprints and SVM

May 19, 2020

Abstract: It is estimated that the number of IoT devices will reach 75 billion in the next five years. Most of the devices currently deployed, and those yet to be deployed, lack sufficient security to protect themselves and their networks from attack by malicious IoT devices that masquerade as authorized devices to circumvent digital authentication approaches. This work presents a physical (PHY) layer IoT authentication approach that addresses this critical security need using feature-reduced Radio Frequency-Distinct Native Attributes (RF-DNA) fingerprints and Support Vector Machines (SVM). Using RF-DNA fingerprints whose features are selected with the Relief-F algorithm, this work demonstrates 100% (i) authorized ID verification across three trials of six randomly chosen radios at signal-to-noise ratios (SNRs) of 6 dB or greater, and (ii) rejection of rogue-radio ID spoofing attacks at SNRs of 3 dB or greater.
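The abstract names the Relief-F algorithm as the way fingerprint features are selected. As a rough illustration, the sketch below implements the standard Relief-F weighting rule (k nearest same-class "hits" and prior-weighted nearest "misses" from every other class) in plain Python. The function name `relief_f`, the range-normalized L1 distance, and the toy two-feature data are assumptions for illustration, not the authors' implementation.

```python
import random


def relief_f(X, y, n_iter, k, rng):
    """Return one Relief-F relevance weight per feature (higher = more useful).

    X: list of equal-length feature vectors (e.g., RF-DNA fingerprints).
    y: list of class labels (e.g., radio IDs), one per vector.
    """
    n, d = len(X), len(X[0])
    classes = sorted(set(y))
    prior = {c: y.count(c) / n for c in classes}
    # Per-feature ranges so every feature difference lies in [0, 1].
    span = [(max(r[j] for r in X) - min(r[j] for r in X)) or 1.0 for j in range(d)]

    def diff(j, a, b):
        return abs(X[a][j] - X[b][j]) / span[j]

    def nearest(i, c):
        # The k instances of class c closest to instance i (range-normalized L1).
        cand = [m for m in range(n) if y[m] == c and m != i]
        cand.sort(key=lambda m: sum(diff(j, i, m) for j in range(d)))
        return cand[:k]

    w = [0.0] * d
    for _ in range(n_iter):
        i = rng.randrange(n)
        hits = nearest(i, y[i])
        misses = {c: nearest(i, c) for c in classes if c != y[i]}
        for j in range(d):
            # Penalize features that vary among same-class neighbors.
            w[j] -= sum(diff(j, i, h) for h in hits) / (n_iter * max(len(hits), 1))
            # Reward features that separate i from each other class,
            # weighted by that class's prior (the multi-class Relief-F rule).
            for c, ms in misses.items():
                scale = prior[c] / (1.0 - prior[y[i]])
                w[j] += scale * sum(diff(j, i, m) for m in ms) / (n_iter * max(len(ms), 1))
    return w


# Toy data: feature 0 tracks the radio ID, feature 1 is pure noise.
rng = random.Random(1)
y = [radio for radio in (0, 1, 2) for _ in range(15)]
X = [[radio + rng.uniform(-0.2, 0.2), rng.uniform(0.0, 1.0)] for radio in y]
weights = relief_f(X, y, n_iter=40, k=3, rng=random.Random(2))
```

A feature-reduced fingerprint would then keep only the highest-weighted features before training the SVM; how many to keep is a tuning choice the abstract does not specify.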

Preprint: Using RF-DNA Fingerprints To Classify OFDM Transmitters Under Rayleigh Fading Conditions

May 06, 2020

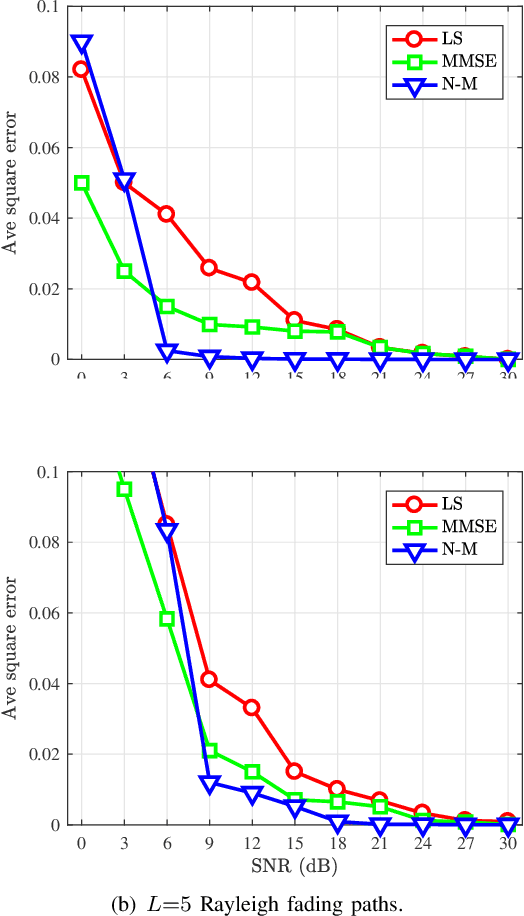

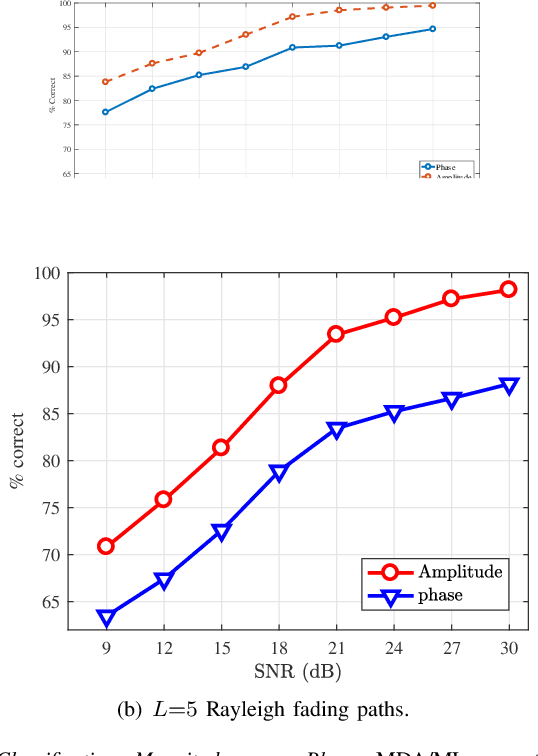

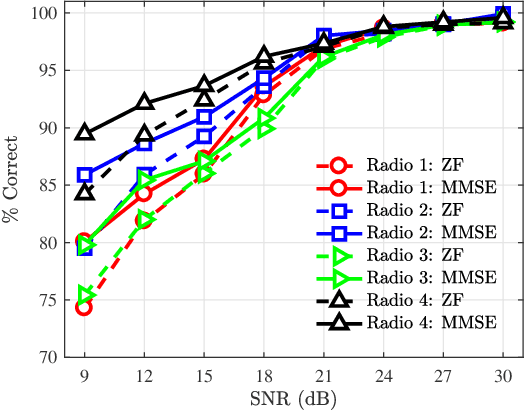

Abstract: The Internet of Things (IoT) is a collection of Internet-connected devices capable of interacting with the physical world and computer systems. It is estimated that the IoT will consist of approximately fifty billion devices by the year 2020. Beyond the sheer numbers, the need for IoT security is exacerbated by the fact that many edge devices employ weak or no encryption of the communication link; almost 70% of IoT devices are estimated to use no encryption at all. Previous research has suggested using Specific Emitter Identification (SEI), a physical layer technique, to augment bit-level security mechanisms such as encryption. The work presented here integrates a Nelder-Mead-based approach for estimating the Rayleigh fading channel coefficients prior to the SEI approach known as RF-DNA fingerprinting. The performance of this estimator is assessed under degrading signal-to-noise ratio (SNR) and compared with least squares (LS) and minimum mean squared error (MMSE) channel estimators. Additionally, this work presents classification results using RF-DNA fingerprints extracted from received signals that have undergone Rayleigh fading channel correction using MMSE equalization. This work also performs radio discrimination using RF-DNA fingerprints generated from the normalized magnitude-squared and phase responses of Gabor coefficients, together with two classifiers. Discrimination of four 802.11a Wi-Fi radios achieves an average percent correct classification of 90% or better at SNRs of 18 dB or greater for a two-path Rayleigh fading channel and 21 dB or greater for a five-path channel.
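The LS channel estimation and MMSE equalization steps mentioned above can be sketched per OFDM subcarrier. The sketch below is a textbook one-tap frequency-domain formulation, not the authors' code, and it omits their Nelder-Mead estimator entirely: it forms an LS channel estimate from a known pilot, then applies either zero-forcing (LS) or MMSE equalization. The two-path channel taps, the 16-subcarrier size, the BPSK pilot, the noiseless received signal, and all function names are hypothetical.

```python
import cmath
import math


def channel_frequency_response(taps, n_subcarriers):
    """DFT of the channel impulse response: H[k] = sum_l h[l] e^{-j 2 pi k l / N}."""
    return [sum(h * cmath.exp(-2j * math.pi * k * l / n_subcarriers)
                for l, h in enumerate(taps))
            for k in range(n_subcarriers)]


def ls_channel_estimate(y_pilot, x_pilot):
    """Least-squares estimate per subcarrier from a known pilot: H_hat[k] = Y[k] / X[k]."""
    return [y / x for y, x in zip(y_pilot, x_pilot)]


def ls_equalize(y, h_hat):
    """Zero-forcing (LS) one-tap equalizer: X_hat[k] = Y[k] / H_hat[k]."""
    return [yk / hk for yk, hk in zip(y, h_hat)]


def mmse_equalize(y, h_hat, snr_linear):
    """One-tap MMSE equalizer, assuming unit-power symbols and white noise:
    X_hat[k] = conj(H_hat[k]) Y[k] / (|H_hat[k]|^2 + 1 / SNR)."""
    return [hk.conjugate() * yk / (abs(hk) ** 2 + 1.0 / snr_linear)
            for yk, hk in zip(y, h_hat)]


# Hypothetical two-path channel on 16 subcarriers (not the paper's setup).
N = 16
H = channel_frequency_response([0.8, 0.6j], N)
pilot = [1 if k % 2 == 0 else -1 for k in range(N)]  # known BPSK pilot
data = [cmath.exp(1j * (math.pi / 4 + math.pi / 2 * (k % 4))) for k in range(N)]  # QPSK
y_pilot = [h * x for h, x in zip(H, pilot)]  # noiseless for clarity
y_data = [h * x for h, x in zip(H, data)]

H_hat = ls_channel_estimate(y_pilot, pilot)
x_ls = ls_equalize(y_data, H_hat)
x_mmse = mmse_equalize(y_data, H_hat, snr_linear=10 ** (18 / 10))  # 18 dB
```

At high SNR the MMSE weights approach the zero-forcing result; at low SNR the 1/SNR term keeps deeply faded subcarriers from amplifying noise, which is the usual reason for preferring MMSE under Rayleigh fading.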
