Sven Simon

Multispectral CT Denoising via Simulation-Trained Deep Learning: Experimental Results at the ESRF BM18

Sep 10, 2025

Abstract:Multispectral computed tomography (CT) enables advanced material characterization by acquiring energy-resolved projection data. However, since the incoming X-ray flux is distributed across multiple narrow energy bins, the photon count per bin is greatly reduced compared to standard energy-integrated imaging. This inevitably introduces substantial noise, which either prolongs acquisition to the point that scan durations become infeasible or degrades image quality with strong noise artifacts. To address this challenge, we present a dedicated neural network-based denoising approach tailored for multispectral CT projections acquired at the BM18 beamline of the ESRF. The method exploits redundancies across angular, spatial, and spectral domains through specialized sub-networks combined via stacked generalization and an attention mechanism. Non-local similarities in the angular-spatial domain are leveraged alongside correlations between adjacent energy bands in the spectral domain, enabling robust noise suppression while preserving fine structural details. Training was performed exclusively on simulated data replicating the physical and noise characteristics of the BM18 setup, with validation conducted on CT scans of custom-designed phantoms containing both high-Z and low-Z materials. The denoised projections and reconstructions demonstrate substantial improvements in image quality compared to classical denoising methods and baseline CNN models. Quantitative evaluations confirm that the proposed method achieves superior performance across a broad spectral range, generalizing effectively to real-world experimental data while significantly reducing noise without compromising structural fidelity.
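The link between energy binning and noise can be illustrated with a minimal sketch. It assumes pure Poisson (shot) noise and an equal split of the flux across bins, which is a simplification and not the BM18 noise model; the function name and photon counts are hypothetical.

```python
import math


def per_bin_snr(total_photons: float, num_bins: int) -> float:
    """Shot-noise SNR of one energy bin, assuming the flux is split equally.

    For a Poisson-distributed count with mean N, the standard deviation is
    sqrt(N), so SNR = N / sqrt(N) = sqrt(N).
    """
    photons_per_bin = total_photons / num_bins
    return math.sqrt(photons_per_bin)


# Hypothetical numbers: 10,000 photons per pixel, integrated vs. 100 bins.
snr_integrated = per_bin_snr(1e4, 1)
snr_binned = per_bin_snr(1e4, 100)
print(f"energy-integrated SNR: {snr_integrated:.1f}")
print(f"per-bin SNR (100 bins): {snr_binned:.1f}")
```

Under these assumptions, splitting the flux into K bins lowers the per-bin SNR by a factor of sqrt(K), so recovering the original SNR by exposure alone would take roughly K times longer.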

A GPU-Accelerated Light-field Super-resolution Framework Based on Mixed Noise Model and Weighted Regularization

Jun 09, 2022

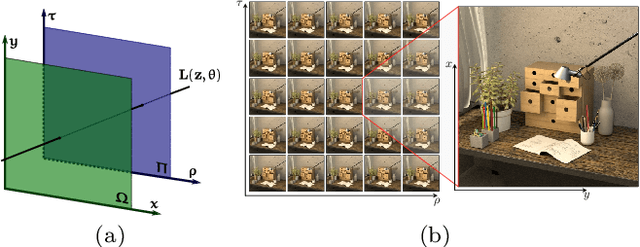

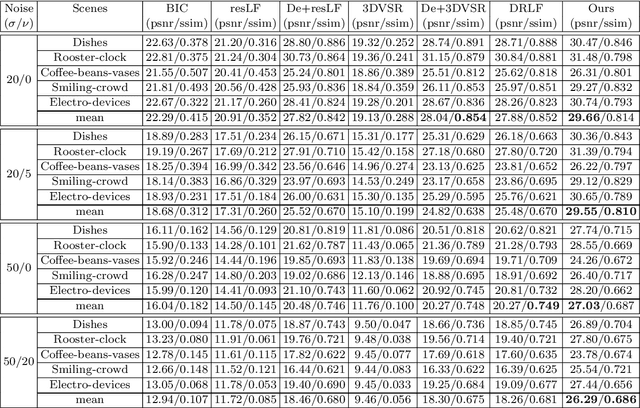

Abstract:This paper presents a GPU-accelerated computational framework for reconstructing high-resolution (HR) light-field (LF) images under mixed Gaussian-impulse noise. The main focus is on developing a high-performance approach that balances processing speed and reconstruction quality. From a statistical perspective, we derive a joint $\ell^1$-$\ell^2$ data fidelity term for penalizing the HR reconstruction error, taking the mixed noise into account. For regularization, we employ the weighted non-local total variation approach, which allows us to effectively realize an LF image prior through a proper weighting scheme. We show that the alternating direction method of multipliers (ADMM) algorithm can be used to reduce the computational complexity, resulting in high-performance parallel computation on the GPU platform. Extensive experiments are conducted on both a synthetic 4D LF dataset and a natural image dataset to validate the proposed SR model's robustness and evaluate the accelerated optimizer's performance. The experimental results show that our approach achieves better reconstruction quality under severe mixed-noise conditions than state-of-the-art approaches. In addition, the proposed approach overcomes the limitation of previous work in handling large-scale SR tasks. While fitting within a single off-the-shelf GPU, the proposed accelerator provides an average speedup of 2.46$\times$ and 1.57$\times$ for $\times 2$ and $\times 3$ SR tasks, respectively. In addition, a speedup of $77\times$ is achieved compared to CPU execution.

Multi-Material Blind Beam Hardening Correction Based on Non-Linearity Adjustment of Projections

Mar 09, 2022

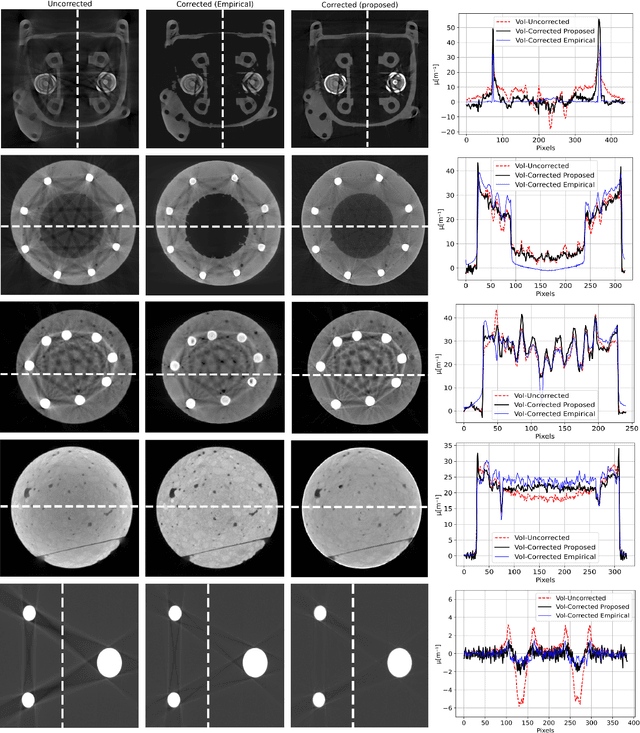

Abstract:Beam hardening (BH) is one of the major artifacts that severely reduce the quality of computed tomography (CT) imaging. In a polychromatic X-ray beam, since low-energy photons are preferentially absorbed, the attenuation of the beam is no longer a linear function of the absorber thickness. Existing BH correction methods either require a known material, which might be infeasible in practice, or require a long computation time. This work proposes a fast and accurate BH correction method that requires no prior knowledge of the materials and corrects first and higher-order BH artifacts. In the first step, a wide sweep of the material is performed based on an experimentally measured look-up table to obtain the closest estimate of the material. Then the non-linearity effect of the BH is corrected by adding the difference between the estimated monochromatic and the polychromatic simulated projections of the segmented image. The estimated monochromatic projection is simulated by selecting the energy from the polychromatic spectrum that produces the lowest mean square error (MSE) with respect to the projection acquired from the scanner. The polychromatic projection is estimated by minimizing the difference between the acquired projection and the weighted sum of simulated polychromatic projections using different spectra with different filtration. To evaluate the proposed BH correction method, we have conducted extensive experiments on real-world CT data. Compared to the state-of-the-art empirical BH correction method, the experiments show that the proposed method can substantially reduce the BH artifacts without prior knowledge of the materials.
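The non-linearity that beam hardening introduces can be shown with a toy example. This is a sketch only: it assumes a two-energy spectrum with made-up weights and attenuation coefficients, and it is not the correction method described in the abstract. The function name `log_projection` is hypothetical.

```python
import math


def log_projection(thickness, weights, mus):
    """Negative log of the transmitted fraction through a homogeneous slab.

    weights: normalized spectral weights (assumed to sum to 1)
    mus:     linear attenuation coefficient per spectral line (1/cm)
    """
    transmitted = sum(w * math.exp(-mu * thickness) for w, mu in zip(weights, mus))
    return -math.log(transmitted)


# Hypothetical two-line spectrum: a strongly and a weakly attenuated energy.
weights, mus = [0.5, 0.5], [1.0, 0.2]

p1 = log_projection(1.0, weights, mus)
p2 = log_projection(2.0, weights, mus)
print(f"p(1 cm) = {p1:.4f}, p(2 cm) = {p2:.4f}, 2 * p(1 cm) = {2 * p1:.4f}")

# A monochromatic beam stays linear in thickness: p(t) = mu * t.
mono = log_projection(2.0, [1.0], [0.5])
print(f"monochromatic p(2 cm) = {mono:.4f}")
```

Doubling the thickness less than doubles the polychromatic log-projection because the surviving beam is dominated by the weakly attenuated energy. Reconstruction assumes a linear relationship, and this mismatch is what produces cupping and streaks.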

Computational Scatter Correction for High-Resolution Flat-Panel CT Based on a Fast Monte Carlo Photon Transport Model

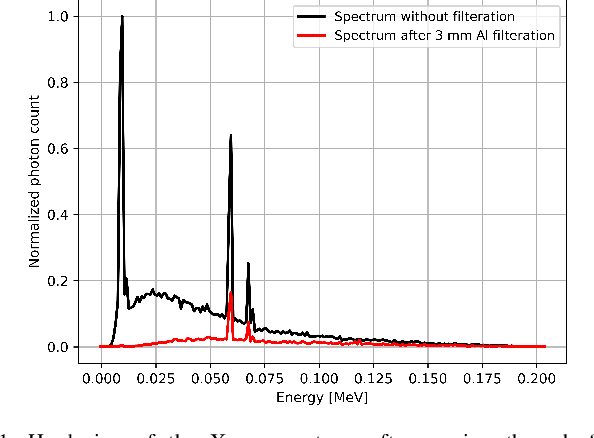

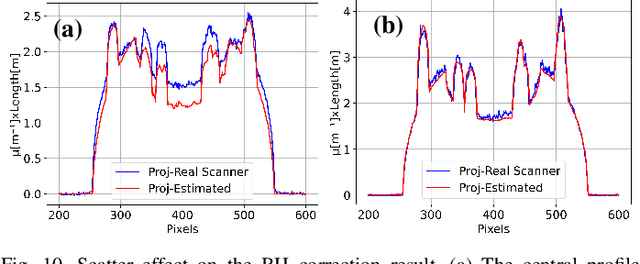

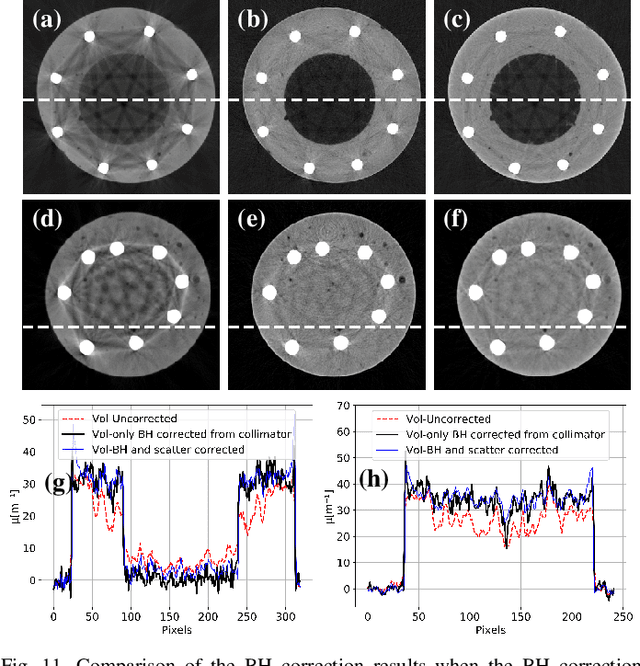

Jan 31, 2022

Abstract:In computed tomography (CT) reconstruction, scattering causes severe quality degradation of the reconstructed CT images by introducing streaks and cupping artifacts, which reduce the detectability of low-contrast objects. Monte Carlo (MC) simulation is considered the most accurate approach for scatter estimation. However, existing MC estimators are computationally expensive, especially for the high-resolution flat-panel CT considered here. In this paper, we propose a fast and accurate photon transport model which describes the physics within the 1 keV to 1 MeV range using multiple controllable key parameters. Based on this model, scatter computation for a single projection can be completed within a few seconds under well-defined model parameters. Smoothing and interpolation are performed on the estimated scatter to accelerate the scatter calculation without substantially compromising accuracy relative to measured near-scatter-free projection images. Combining the scatter estimation with filtered backprojection (FBP), scatter correction is performed effectively in an iterative manner. To evaluate the proposed MC model, we have conducted extensive experiments on simulated data and real-world high-resolution flat-panel CT. Our photon transport model achieved a 202$\times$ speed-up on a four-GPU system compared to the multi-threaded state-of-the-art EGSnrc MC simulator. In addition, it is shown that for real-world high-resolution flat-panel CT, scatter correction with sufficient accuracy is accomplished within one to three iterations using an FBP and a forward projection computed with the proposed fast MC photon transport model.

3DVSR: 3D EPI Volume-based Approach for Angular and Spatial Light field Image Super-resolution

Jan 04, 2022

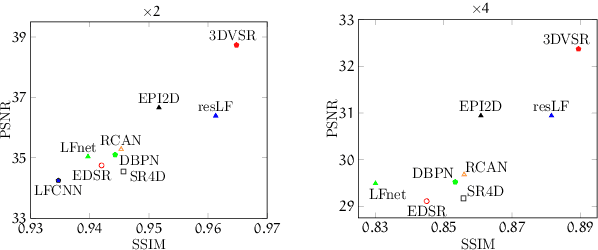

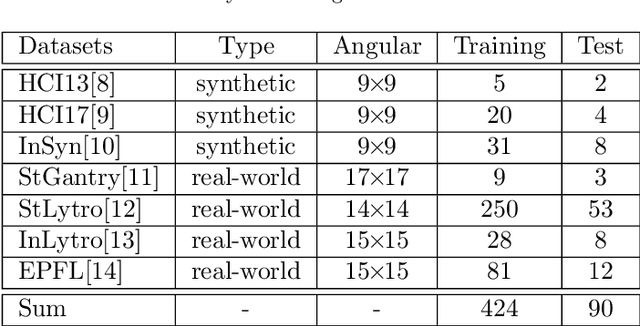

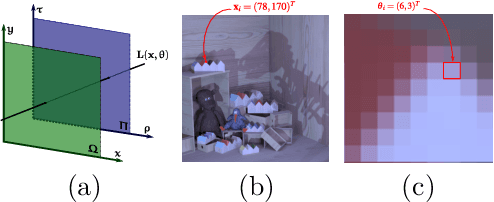

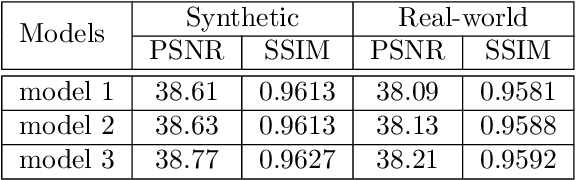

Abstract:Light field (LF) imaging, which captures both spatial and angular information of a scene, is undoubtedly beneficial to numerous applications. Although various techniques have been proposed for LF acquisition, achieving both angularly and spatially high-resolution LF remains a technological challenge. In this paper, a learning-based approach applied to 3D epipolar image (EPI) volumes is proposed to reconstruct high-resolution LF. Through a two-stage super-resolution framework, the proposed approach effectively addresses various LF super-resolution (SR) problems, i.e., spatial SR, angular SR, and angular-spatial SR. While the first stage provides flexible options to up-sample the EPI volume to the desired resolution, the second stage, which consists of a novel EPI volume-based refinement network (EVRN), substantially enhances the quality of the high-resolution EPI volume. An extensive evaluation on 90 challenging synthetic and real-world light field scenes from 7 published datasets shows that the proposed approach outperforms state-of-the-art methods to a large extent for both spatial and angular super-resolution problems, i.e., an average peak signal-to-noise ratio improvement of more than 2.0 dB, 1.4 dB, and 3.14 dB in spatial SR $\times 2$, spatial SR $\times 4$, and angular SR, respectively. The reconstructed 4D light field demonstrates a balanced performance distribution across all perspective images and presents superior visual quality compared to previous works.
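For readers unfamiliar with the metric behind the reported dB gains, the standard definition of peak signal-to-noise ratio can be sketched as follows. The flat-list image representation, the default peak value of 1.0, and the function name are assumptions made for brevity; the paper's exact evaluation protocol is not given in the abstract.

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Images are given as flat sequences of intensities; `peak` is the maximum
    possible intensity (1.0 for normalized images, 255 for 8-bit images).
    """
    if len(reference) != len(estimate):
        raise ValueError("images must have the same number of pixels")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical example: a uniform error of 0.1 on a normalized image.
print(f"{psnr([0.0, 0.0, 0.0, 0.0], [0.1, 0.1, 0.1, 0.1]):.2f} dB")
```

Because the scale is logarithmic, a gain of 3 dB corresponds to roughly halving the mean squared error.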

A Resolution Enhancement Plug-in for Deformable Registration of Medical Images

Dec 30, 2021

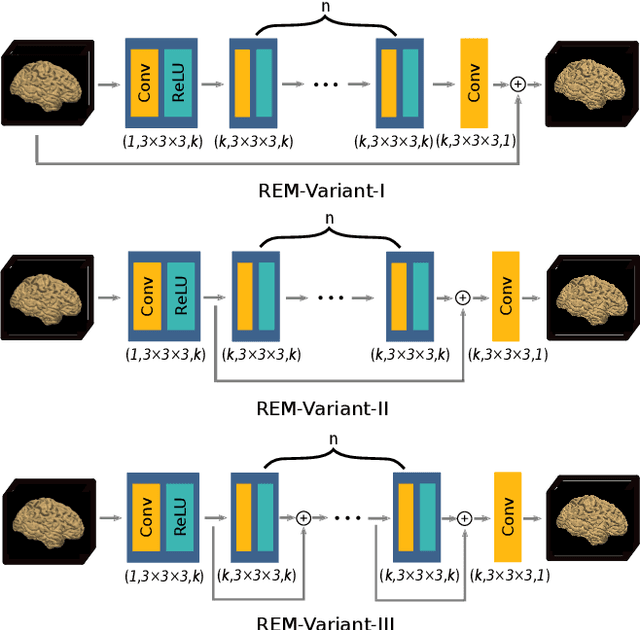

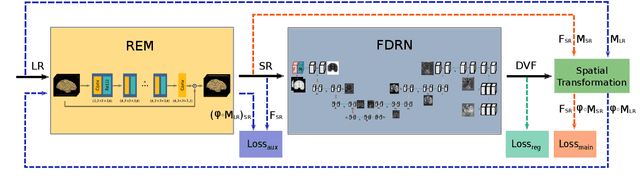

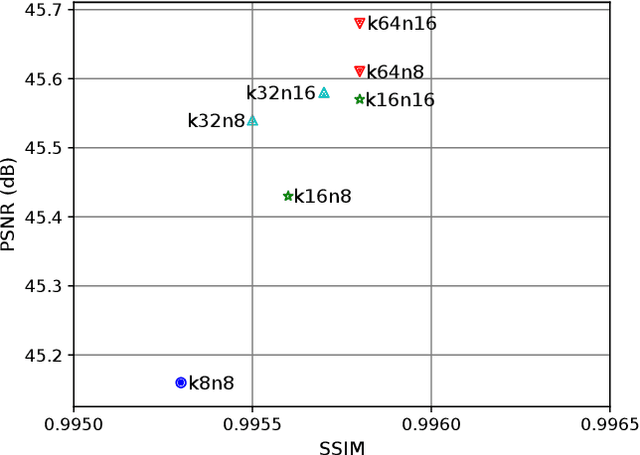

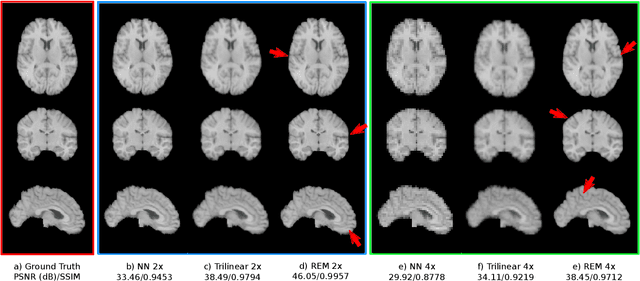

Abstract:Image registration is a fundamental task in medical imaging. Resampling of the intensity values is required during registration, and better spatial resolution with finer and sharper structures can improve the resampling performance and hence the registration accuracy. Super-resolution (SR) is an algorithmic technique aimed at spatial resolution enhancement, which can achieve an image resolution beyond the hardware limitation. In this work, we consider SR as a preprocessing technique and present a CNN-based resolution enhancement module (REM) which can be easily plugged into the registration network in a cascaded manner. Different residual schemes and network configurations of REM are investigated to obtain an effective architecture design. REM is not confined to image registration; it can also be straightforwardly integrated into other vision tasks for enhanced resolution. The proposed REM is thoroughly evaluated for deformable registration on medical images, quantitatively and qualitatively, at different upscaling factors. Experiments on the LPBA40 brain MRI dataset demonstrate that REM not only improves the registration accuracy, especially when the input images suffer from degraded spatial resolution, but also generates resolution-enhanced images which can be exploited for subsequent diagnosis.

FL-MISR: Fast Large-Scale Multi-Image Super-Resolution for Computed Tomography Based on Multi-GPU Acceleration

Aug 09, 2021

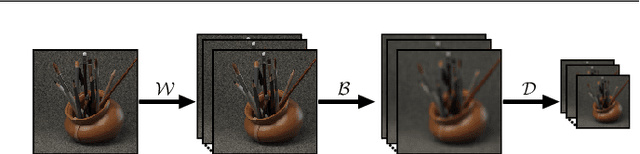

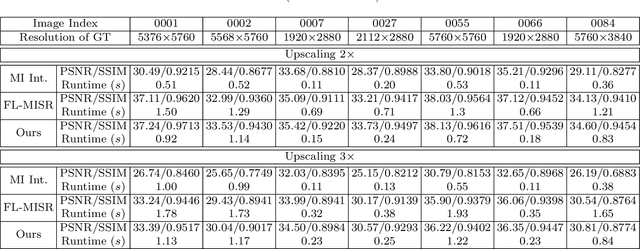

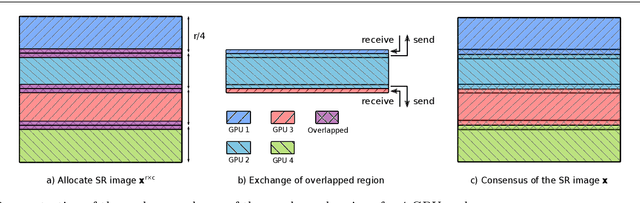

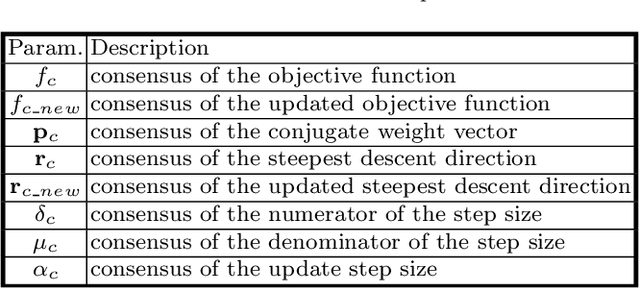

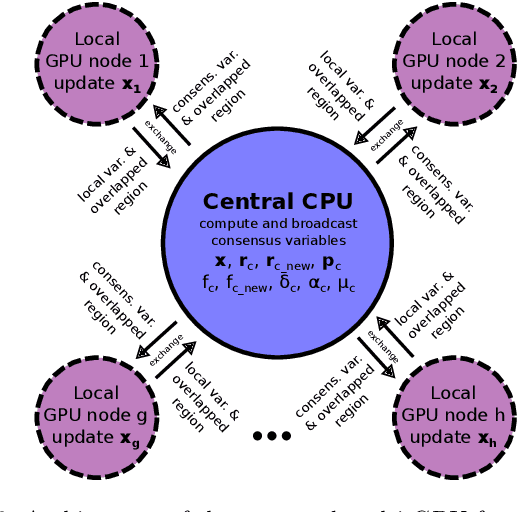

Abstract:Multi-image super-resolution (MISR) usually outperforms single-image super-resolution (SISR) under a proper inter-image alignment by explicitly exploiting the inter-image correlation. However, the large computational demand hinders the deployment of MISR methods in practice. In this work, we propose FL-MISR, a distributed optimization framework based on data parallelism for fast large-scale MISR which supports multi-GPU acceleration. Inter-GPU communication for the exchange of local variables and overlapped regions is enabled to impose a consensus convergence of the distributed task allocated to each GPU node. We have seamlessly integrated FL-MISR into the computed tomography (CT) imaging system by super-resolving multiple projections of the same view acquired by subpixel detector shift. The SR reconstruction is performed on the fly during the CT acquisition such that no additional computation time is introduced. We evaluated FL-MISR quantitatively and qualitatively on multiple objects including aluminium cylindrical phantoms, QRM bar pattern phantoms, and concrete joints. Experiments show that FL-MISR can effectively improve the spatial resolution of CT systems in terms of modulation transfer function (MTF) and visual perception. In addition, compared to a multi-core CPU implementation, FL-MISR achieves a more than 50x speedup on an off-the-shelf 4-GPU system.

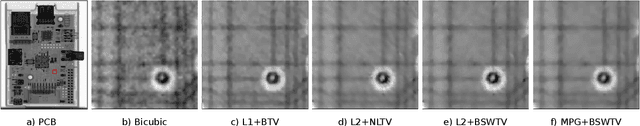

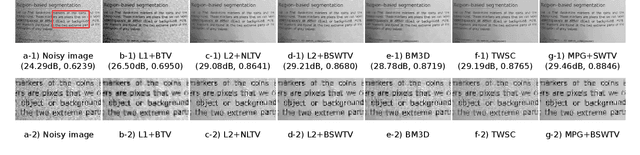

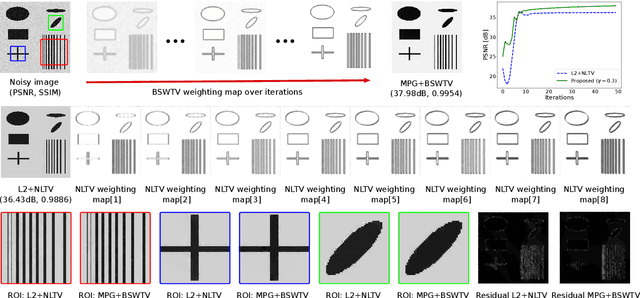

Bilateral Spectrum Weighted Total Variation for Noisy-Image Super-Resolution and Image Denoising

Jun 05, 2021

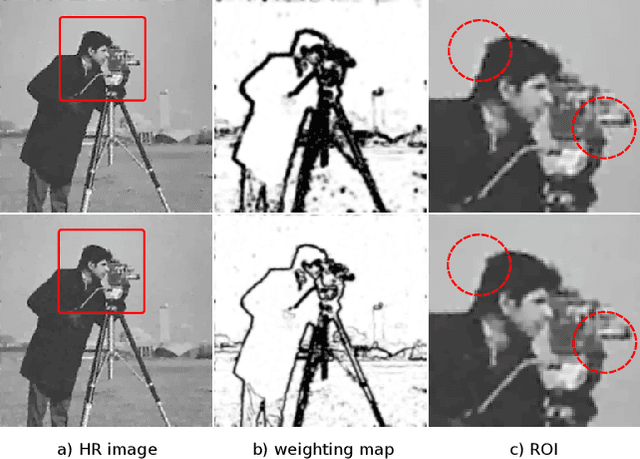

Abstract:In this paper, we propose a regularization technique for noisy-image super-resolution and image denoising. Total variation (TV) regularization is adopted in many image processing applications to preserve local smoothness. However, the TV prior is prone to oversmoothing, staircasing effects, and contrast loss. Nonlocal TV (NLTV) mitigates the contrast loss by adaptively weighting the smoothness based on the similarity measure of image patches. Although it suppresses the noise effectively in flat regions, it might leave residual noise surrounding the edges, especially when the image is not oversmoothed. To address this problem, we propose the bilateral spectrum weighted total variation (BSWTV). Specifically, we apply a locally adaptive shrink coefficient to the image gradients and employ the eigenvalues of the covariance matrix of the weighted image gradients to effectively refine the weighting map and suppress the residual noise. In conjunction with the data fidelity term derived from a mixed Poisson-Gaussian noise model, the objective function is decomposed and solved by the alternating direction method of multipliers (ADMM) algorithm. To remove outliers and improve convergence stability, the weighting map is smoothed by a Gaussian filter with an iteratively decreased kernel width and updated in a momentum-based manner in each ADMM iteration. We benchmark our method against state-of-the-art approaches on public real-world datasets for super-resolution and image denoising. Experiments show that the proposed method obtains outstanding performance for super-resolution and achieves promising results for denoising on real-world images.

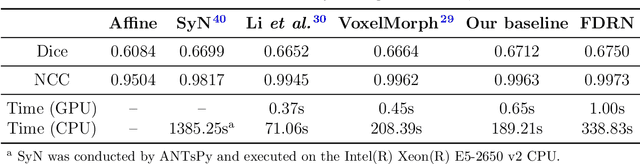

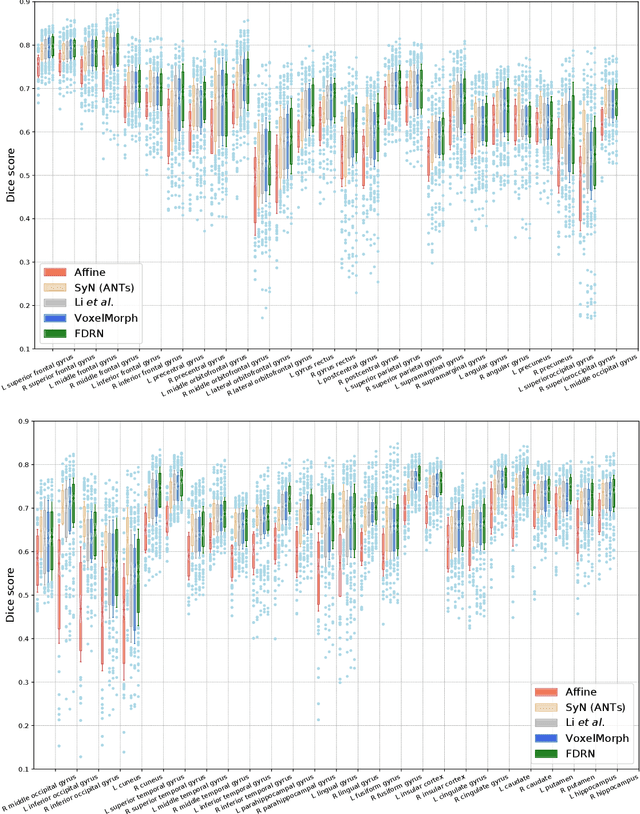

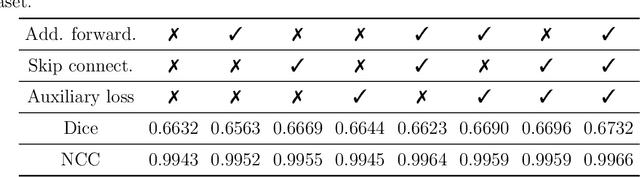

FDRN: A Fast Deformable Registration Network for Medical Images

Nov 04, 2020

Abstract:Deformable image registration is a fundamental task in medical imaging. Due to the large computational complexity of deformable registration of volumetric images, conventional iterative methods usually face a tradeoff between registration accuracy and computation time in practice. To boost the registration performance in both accuracy and runtime, we propose a fast unsupervised convolutional neural network for deformable image registration. Specifically, the proposed FDRN possesses a compact encoder-decoder structure and exploits deep supervision, additive forwarding, and residual learning. We compare FDRN with existing state-of-the-art registration methods on the LPBA40 brain MRI dataset. Experimental results demonstrate that FDRN performs better than the investigated methods qualitatively and quantitatively in Dice score and normalized cross correlation (NCC). In addition, FDRN is a generalized framework for image registration which is not confined to a particular type of medical image or anatomy. It can also be applied to other anatomical structures or CT images.
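The two evaluation metrics named above can be sketched in a few lines. This is an illustrative implementation under stated assumptions: images and masks are flat Python sequences, and NCC is the global zero-mean variant. Registration papers often use a windowed (local) NCC instead, and the abstract does not say which one FDRN uses. Function names are hypothetical.

```python
import math


def dice_score(mask_a, mask_b):
    """Dice overlap 2|A∩B| / (|A| + |B|) of two binary masks (flat 0/1 sequences)."""
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    size_sum = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    # Two empty masks are treated as a perfect match by convention.
    return 2.0 * intersection / size_sum if size_sum else 1.0


def ncc(image_a, image_b):
    """Global zero-mean normalized cross correlation of two flat images."""
    mean_a = sum(image_a) / len(image_a)
    mean_b = sum(image_b) / len(image_b)
    num = sum((a - mean_a) * (b - mean_b) for a, b in zip(image_a, image_b))
    den = math.sqrt(
        sum((a - mean_a) ** 2 for a in image_a)
        * sum((b - mean_b) ** 2 for b in image_b)
    )
    # A constant image has zero variance; report 0.0 instead of dividing by zero.
    return num / den if den else 0.0


# Hypothetical toy data: warped vs. fixed segmentation, and two intensity images.
print(f"Dice: {dice_score([1, 1, 0, 0], [1, 0, 0, 0]):.3f}")
print(f"NCC:  {ncc([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]):.3f}")
```

Dice measures overlap of anatomical labels after warping, while NCC measures intensity agreement and is invariant to linear changes in brightness and contrast.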

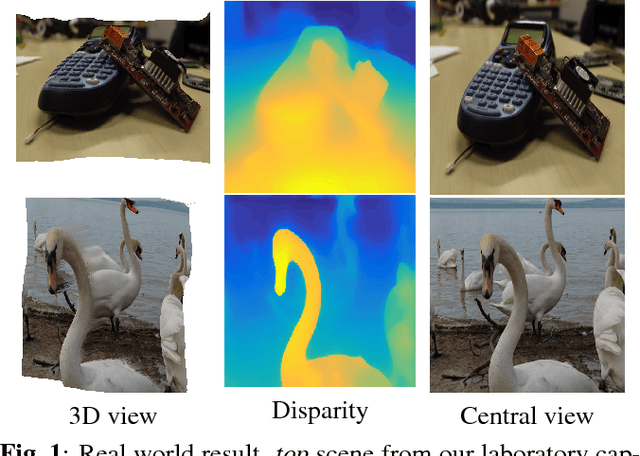

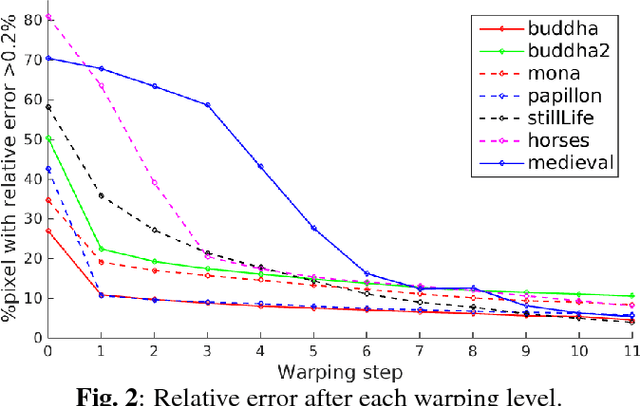

Variational Disparity Estimation Framework for Plenoptic Image

Apr 18, 2018

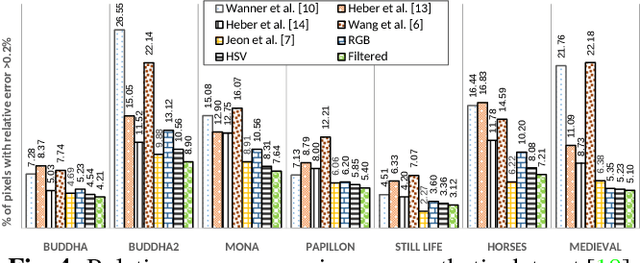

Abstract:This paper presents a computational framework for accurately estimating the disparity map of plenoptic images. The proposed framework is based on the variational principle and provides intrinsic sub-pixel precision. The light-field motion tensor introduced in the framework allows us to combine advanced robust data terms and provides explicit treatment of different color channels. A warping strategy is embedded in our framework to tackle the large displacement problem. We also show that by applying a simple regularization term and guided median filtering, the accuracy of the displacement field in occluded areas can be greatly enhanced. We demonstrate the excellent performance of the proposed framework through extensive comparisons with the Lytro software and contemporary approaches on both synthetic and real-world datasets.
