A GPU-Accelerated Light-field Super-resolution Framework Based on Mixed Noise Model and Weighted Regularization


Jun 09, 2022

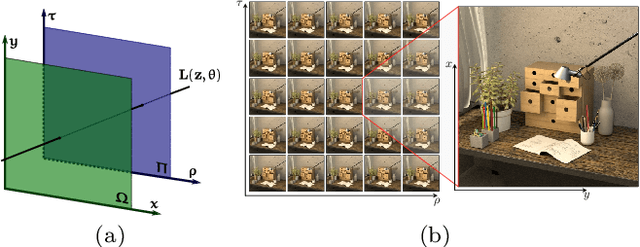

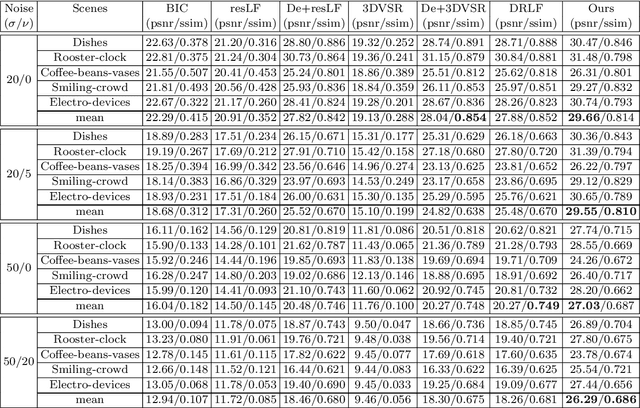

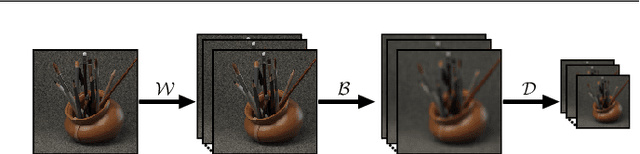

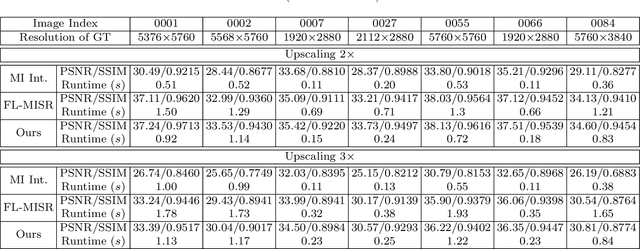

This paper presents a GPU-accelerated computational framework for reconstructing high-resolution (HR) light-field (LF) images under mixed Gaussian-impulse noise. The main focus is on developing a high-performance approach that balances processing speed and reconstruction quality. From a statistical perspective, we derive a joint $\ell^1$-$\ell^2$ data fidelity term that penalizes the HR reconstruction error while accounting for the mixed noise. For regularization, we employ the weighted non-local total variation approach, which allows us to effectively enforce an LF image prior through a proper weighting scheme. We show that the alternating direction method of multipliers (ADMM) can be used to reduce the computational complexity, resulting in high-performance parallel computation on the GPU platform. Extensive experiments are conducted on both a synthetic 4D LF dataset and a natural image dataset to validate the robustness of the proposed super-resolution (SR) model and to evaluate the performance of the accelerated optimizer. The experimental results show that our approach achieves better reconstruction quality under severe mixed-noise conditions than state-of-the-art approaches. In addition, the proposed approach overcomes the limitation of the previous work in handling large-scale SR tasks. While fitting within a single off-the-shelf GPU, the proposed accelerator provides average speedups of 2.46$\times$ and 1.57$\times$ for $\times 2$ and $\times 3$ SR tasks, respectively. In addition, a speedup of $77\times$ is achieved compared to CPU execution.
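To illustrate why an ADMM splitting of a joint $\ell^1$-$\ell^2$ penalty lends itself to parallel execution, the following minimal sketch computes the proximal step for an assumed per-element penalty of the form $\alpha|r| + \tfrac{\beta}{2}r^2$. The abstract does not state the exact fidelity term, so this form, the function names, and the parameters `alpha`, `beta`, and `rho` are illustrative assumptions and not the paper's implementation. The point is only that such a subproblem has a closed-form solution that is independent for each element, so each element could in principle be handled by its own GPU thread.

```python
def prox_joint_l1_l2(v, alpha, beta, rho):
    """Minimize alpha*|r| + (beta/2)*r**2 + (rho/2)*(r - v)**2 over r.

    Assumed penalty form (not taken from the paper). Setting the
    subgradient to zero gives a scaled soft-thresholding rule:
        r = sign(v) * max(rho*|v| - alpha, 0) / (rho + beta)
    """
    shrunk = max(rho * abs(v) - alpha, 0.0) / (rho + beta)
    return shrunk if v >= 0 else -shrunk


def prox_map(values, alpha, beta, rho):
    # Each element is processed independently of the others; this loop is
    # the part that would map onto parallel threads in a GPU kernel.
    return [prox_joint_l1_l2(v, alpha, beta, rho) for v in values]


if __name__ == "__main__":
    residuals = [-3.0, -0.2, 0.0, 0.5, 4.0]  # hypothetical residual values
    print(prox_map(residuals, alpha=1.0, beta=1.0, rho=1.0))
```

With `beta = 0` the rule reduces to ordinary soft-thresholding (the $\ell^1$ proximal operator), and with `alpha = 0` it reduces to a linear shrinkage (the squared-$\ell^2$ proximal operator), which is one way to see how a single update can interpolate between impulse-robust and Gaussian-oriented behavior.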
