
Sven Schlarb

Deep Learning Frameworks Applied For Audio-Visual Scene Classification

Jun 12, 2021

Figures and Tables:

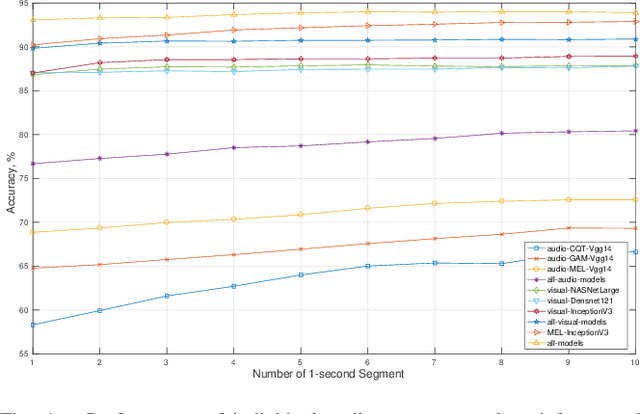

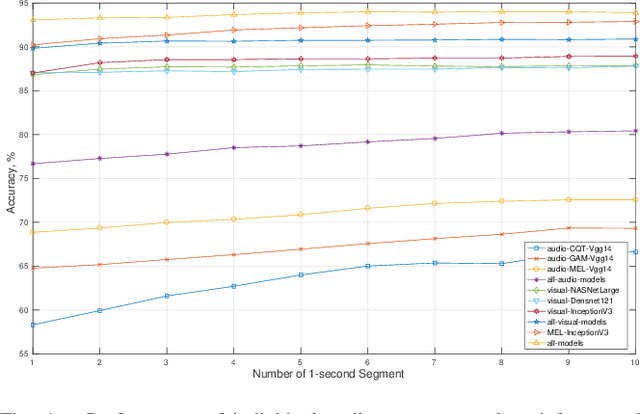

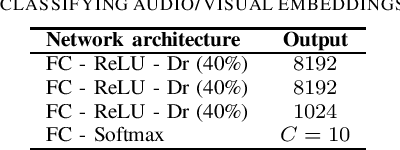

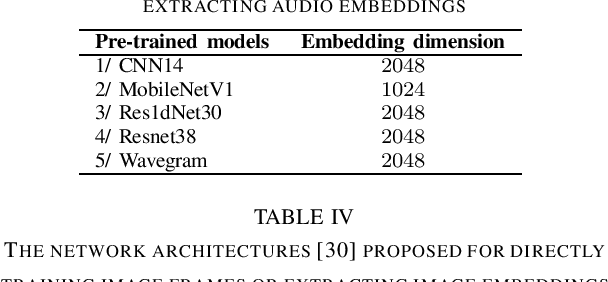

Abstract: In this paper, we present deep learning frameworks for audio-visual scene classification (SC) and show how individual visual and audio features, as well as their combination, affect SC performance. Our extensive experiments, conducted on the DCASE (IEEE AASP Challenge on Detection and Classification of Acoustic Scenes and Events) Task 1B development dataset, achieve best classification accuracies of 82.2%, 91.1%, and 93.9% with audio-only, visual-only, and combined audio-visual input, respectively. The highest classification accuracy of 93.9%, obtained from an ensemble of audio-based and visual-based frameworks, is an improvement of 16.5% over the DCASE baseline.
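The abstract does not say how the audio-based and visual-based frameworks are combined. One common option is late fusion, where each model's per-class probabilities are averaged before picking the top class. The sketch below illustrates that idea only. The function names, the `audio_weight` parameter, the three scene labels, and the probability values are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def fuse_probabilities(
    audio_probs: Sequence[float],
    visual_probs: Sequence[float],
    audio_weight: float = 0.5,  # hypothetical fusion weight, not from the paper
) -> List[float]:
    """Weighted average of two per-class probability vectors (late fusion)."""
    if len(audio_probs) != len(visual_probs):
        raise ValueError("both models must score the same set of classes")
    if not 0.0 <= audio_weight <= 1.0:
        raise ValueError("audio_weight must lie in [0, 1]")
    visual_weight = 1.0 - audio_weight
    return [
        audio_weight * a + visual_weight * v
        for a, v in zip(audio_probs, visual_probs)
    ]


def predict_scene(
    audio_probs: Sequence[float],
    visual_probs: Sequence[float],
    labels: Sequence[str],
    audio_weight: float = 0.5,
) -> str:
    """Return the label with the highest fused probability."""
    fused = fuse_probabilities(audio_probs, visual_probs, audio_weight)
    best_index = max(range(len(fused)), key=fused.__getitem__)
    return labels[best_index]


# Illustrative values only: three scene labels and made-up model outputs.
labels = ["airport", "bus", "park"]
audio = [0.5, 0.3, 0.2]   # the audio model favours "airport"
visual = [0.1, 0.2, 0.7]  # the visual model favours "park"

print(predict_scene(audio, visual, labels))
```

With equal weights the more confident visual prediction wins here. Setting `audio_weight=1.0` reduces the ensemble to the audio model alone, which is a quick way to compare single-modality and fused decisions.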

* 6 pages
