Sven Loncaric

Faculty of Electrical Engineering and Computing, University of Zagreb, Croatia

Generative adversarial network with object detector discriminator for enhanced defect detection on ultrasonic B-scans

Jun 08, 2021

Abstract: Non-destructive testing is a set of techniques for defect detection in materials. While imaging techniques are manifold, ultrasonic imaging is the most widely used. The analysis is mainly performed by human inspectors who manually analyze the recorded images. The low number of defects in real ultrasonic inspections and legal issues concerning data from such inspections make it difficult to obtain good results from automatic ultrasonic image (B-scan) analysis. In this paper, we present a novel deep learning Generative Adversarial Network (GAN) model for generating ultrasonic B-scans with defects in distinct locations. Furthermore, we show that generated B-scans can be used for synthetic data augmentation and can improve the performance of deep convolutional neural network object detectors. Our novel method is demonstrated on a dataset of almost 4000 B-scans with more than 6000 annotated defects. Training on real data yielded a defect detection average precision of 71%. Training only on generated data increased this to 72.1%, and mixing generated and real data achieved 75.7% average precision. We believe that synthetic data generation can generalize to other challenges with limited datasets and could be used for training human personnel.
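The detection results above are reported as average precision (AP), but the abstract does not state the matching protocol. The following is a minimal sketch of one common definition, assuming Pascal VOC-style greedy matching of ranked detections to ground-truth boxes at an IoU threshold of 0.5 and all-point interpolation of the precision-recall curve. The threshold, the box format and the function names are assumptions for illustration, not the paper's evaluation code.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed box format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections: List[Tuple[str, float, Box]],
                      ground_truth: Dict[str, List[Box]],
                      iou_thr: float = 0.5) -> float:
    """VOC-style AP. detections: (image_id, score, box); ground_truth: image_id -> boxes."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp_flags = []
    # Process detections from most to least confident.
    for img, _score, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # True positive only if the best-overlapping defect is not already claimed.
        if best_j >= 0 and best_iou >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True
            tp_flags.append(1)
        else:
            tp_flags.append(0)
    # Precision and recall after each ranked detection.
    precisions, recalls, tp = [], [], 0
    for k, flag in enumerate(tp_flags, start=1):
        tp += flag
        precisions.append(tp / k)
        recalls.append(tp / n_gt)
    # All-point interpolation: precision made non-increasing from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p  # area under the interpolated PR curve
        prev_recall = r
    return ap
```

For example, two annotated defects found by the first and third of three ranked detections, with a false alarm in between, give an AP of 5/6 under these assumptions.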

Microvasculature Segmentation and Inter-capillary Area Quantification of the Deep Vascular Complex using Transfer Learning

Mar 19, 2020

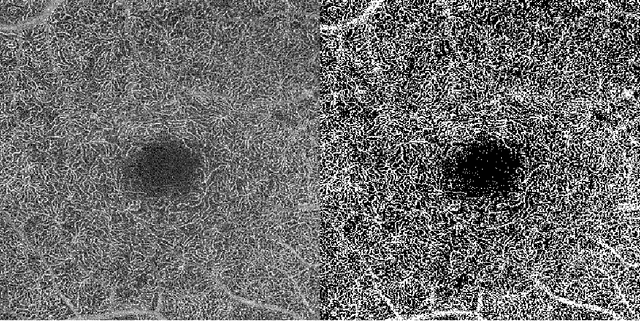

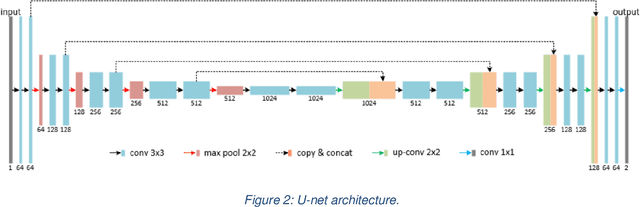

Abstract: Purpose: Optical Coherence Tomography Angiography (OCT-A) permits visualization of the changes to the retinal circulation due to diabetic retinopathy (DR), a microvascular complication of diabetes. We demonstrate accurate segmentation of the vascular morphology of the superficial capillary plexus and deep vascular complex (SCP and DVC) using a convolutional neural network (CNN) for quantitative analysis. Methods: Retinal OCT-A images with a 6x6 mm field of view (FOV) were acquired using a Zeiss PlexElite. Multiple-volume acquisition and averaging enhanced the vessel network contrast used for training the CNN. We used transfer learning from a CNN trained on 76 images from smaller FOVs of the SCP acquired using different OCT systems. Quantitative analysis of perfusion was performed on the automated vessel segmentations in representative patients with DR. Results: The automated segmentations of the OCT-A images maintained the hierarchical branching and lobular morphologies of the SCP and DVC, respectively. The network segmented the SCP with an accuracy of 0.8599 and a Dice index of 0.8618. For the DVC, the accuracy was 0.7986 and the Dice index was 0.8139. The inter-rater comparisons had an accuracy and Dice index of 0.8300 and 0.6700, respectively, for the SCP, and 0.6874 and 0.7416 for the DVC. Conclusions: Transfer learning reduces the number of manually annotated images required while producing high-quality automatic segmentations of the SCP and DVC. Using high-quality training data preserves the characteristic appearance of the capillary networks in each layer. Translational Relevance: Accurate retinal microvasculature segmentation with the CNN results in improved perfusion analysis in diabetic retinopathy.
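The segmentation quality above is summarized as pixel accuracy and Dice index. A minimal NumPy sketch of both metrics on binary vessel masks follows, assuming the segmentation and the reference are same-shaped arrays in which nonzero marks a vessel pixel. The function name is hypothetical; the same comparison applied to two human annotations gives an inter-rater score.

```python
import numpy as np


def segmentation_scores(pred, ref) -> dict:
    """Pixel accuracy and Dice index between two binary vessel masks (hypothetical helper)."""
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    if pred.shape != ref.shape:
        raise ValueError("masks must have the same shape")
    tp = np.count_nonzero(pred & ref)    # vessel in both masks
    fp = np.count_nonzero(pred & ~ref)   # vessel only in the prediction
    fn = np.count_nonzero(~pred & ref)   # vessel only in the reference
    tn = pred.size - tp - fp - fn        # background in both masks
    accuracy = (tp + tn) / pred.size
    denom = 2 * tp + fp + fn
    dice = 2 * tp / denom if denom else 1.0  # both masks empty: perfect agreement
    return {"accuracy": float(accuracy), "dice": float(dice)}


pred = [[1, 1, 0], [0, 1, 0]]
ref = [[1, 0, 0], [0, 1, 1]]
print(segmentation_scores(pred, ref))  # both scores are 2/3 for this toy pair
```

Accuracy counts the (usually large) background class, whereas Dice only rewards overlap of vessel pixels, which is one reason the two figures can diverge, as in the SCP inter-rater comparison.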

Deep learning vessel segmentation and quantification of the foveal avascular zone using commercial and prototype OCT-A platforms

Sep 25, 2019

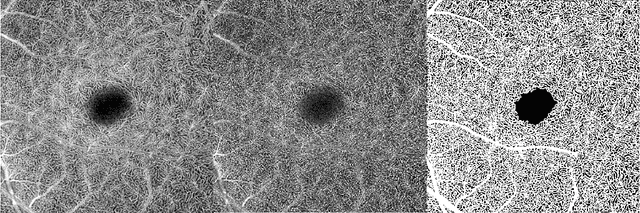

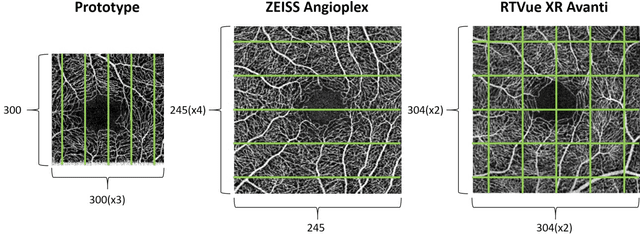

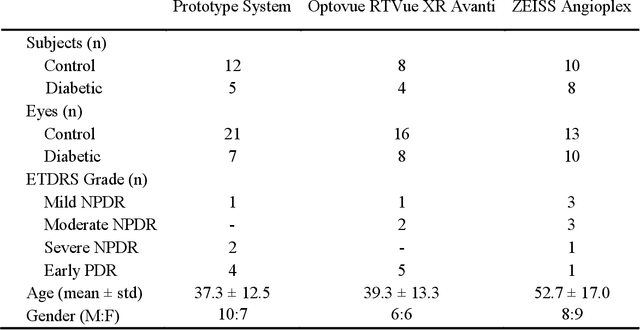

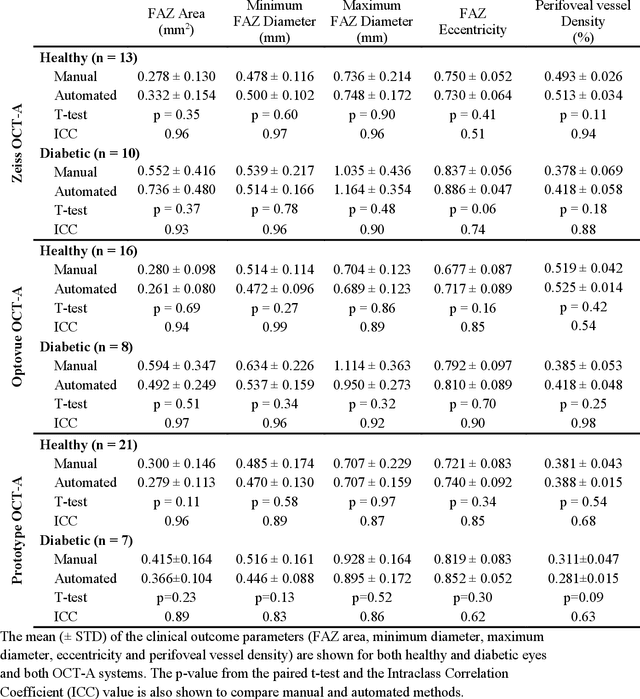

Abstract: Automatic quantification of perifoveal vessel densities in optical coherence tomography angiography (OCT-A) images faces challenges such as variable intra- and inter-image signal-to-noise ratios, projection artefacts from outer vasculature layers, and motion artefacts. This study demonstrates the utility of deep neural networks for automatic quantification of foveal avascular zone (FAZ) parameters and perifoveal vessel density of OCT-A images in healthy and diabetic eyes. OCT-A images of the foveal region were acquired using three OCT-A systems: a 1060nm Swept Source (SS)-OCT prototype, RTVue XR Avanti (Optovue Inc., Fremont, CA), and the ZEISS Angioplex (Carl Zeiss Meditec, Dublin, CA). Automated segmentation was then performed using a deep neural network (DNN). Four FAZ morphometric parameters (area, min/max diameter, and eccentricity) and perifoveal vessel density were used as outcome measures. The accuracy, sensitivity and specificity of the DNN vessel segmentations were comparable across all three device platforms. No significant difference between the means of the measurements from automated and manual segmentations was found for any of the outcome measures on any system. The intraclass correlation coefficient (ICC) was also good (> 0.51) for all measurements. Automated deep learning vessel segmentation of OCT-A may be suitable for both commercial and research purposes for better quantification of the retinal circulation.
