Supratik Moulik

DropConnect Is Effective in Modeling Uncertainty of Bayesian Deep Networks

Jun 07, 2019

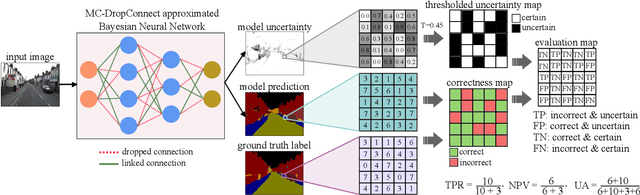

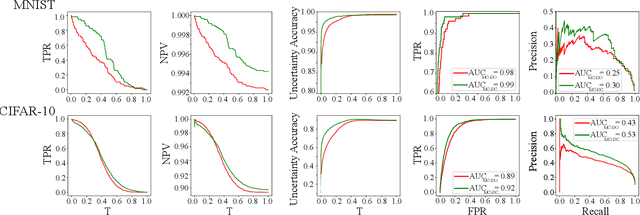

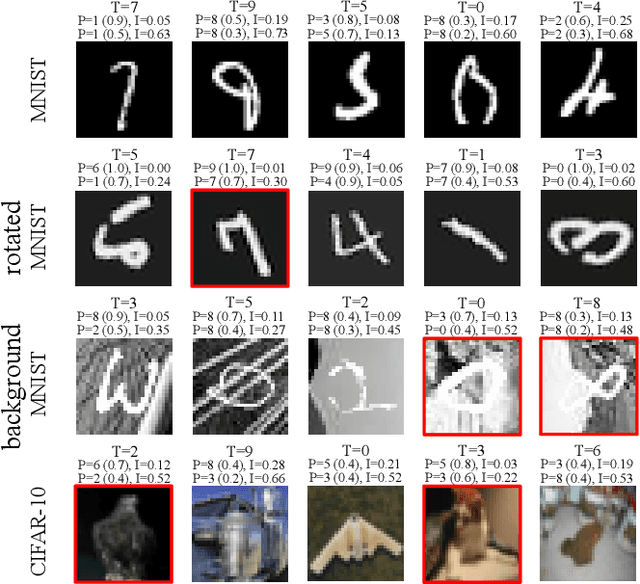

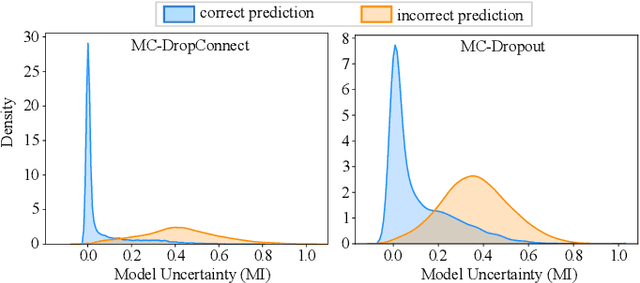

Abstract: Deep neural networks (DNNs) have achieved state-of-the-art performance in many important domains, including medical diagnosis, security, and autonomous driving. In these domains, where safety is critical, an erroneous decision can have serious consequences. While perfect prediction accuracy is not always achievable, recent work on Bayesian deep networks shows that it is possible to know when DNNs are more likely to make mistakes. Knowing what DNNs do not know is desirable to increase the safety of deep learning technology in sensitive applications. Bayesian neural networks attempt to address this challenge. However, traditional approaches are computationally intractable and do not scale well to large, complex neural network architectures. In this paper, we develop a theoretical framework to approximate Bayesian inference for DNNs by imposing a Bernoulli distribution on the model weights. This method, called MC-DropConnect, gives us a tool to represent the model uncertainty with little change in the overall model structure or computational cost. We extensively validate the proposed algorithm on multiple network architectures and datasets for classification and semantic segmentation tasks. We also propose new metrics to quantify the uncertainty estimates, which enables an objective comparison between MC-DropConnect and prior approaches. Our empirical results demonstrate that the proposed framework yields significant improvements in both prediction accuracy and uncertainty estimation quality compared to the state of the art.
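The idea of placing a Bernoulli distribution on the weights can be illustrated with a small NumPy sketch. This is not the authors' implementation: the two-layer network, the function name `mc_dropconnect_predict`, the `keep_prob` and `n_samples` parameters, the rescaling by `1 / keep_prob`, and the use of predictive entropy as the uncertainty score are all assumptions made for illustration. Each stochastic forward pass draws a fresh binary mask over the weights, and the softmax outputs are averaged across passes.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over the last axis.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def mc_dropconnect_predict(x, W1, b1, W2, b2, keep_prob=0.5, n_samples=100, rng=None):
    """Hypothetical helper: Monte Carlo averaging over Bernoulli weight masks."""
    rng = np.random.default_rng() if rng is None else rng
    probs = np.empty((n_samples, x.shape[0], W2.shape[1]))
    for t in range(n_samples):
        # Sample one Bernoulli(keep_prob) mask entry per weight (not per unit).
        M1 = rng.random(W1.shape) < keep_prob
        M2 = rng.random(W2.shape) < keep_prob
        # Assumption: rescale by 1 / keep_prob to preserve expected activations.
        h = np.maximum(0.0, x @ (W1 * M1) / keep_prob + b1)
        probs[t] = softmax(h @ (W2 * M2) / keep_prob + b2)
    mean = probs.mean(axis=0)
    # Assumption: predictive entropy of the averaged distribution as uncertainty.
    entropy = -(mean * np.log(mean + 1e-12)).sum(axis=-1)
    return mean, entropy

# Usage with random (untrained) weights, purely to show the shapes involved.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))              # 4 inputs, 8 features
W1, b1 = rng.normal(size=(8, 16)), np.zeros(16)
W2, b2 = rng.normal(size=(16, 3)), np.zeros(3)
mean, entropy = mc_dropconnect_predict(x, W1, b1, W2, b2, n_samples=50, rng=rng)
print(mean.shape, entropy.shape)
```

In this sketch, a higher entropy value marks an input on which the sampled networks disagree more, which is one common way to flag predictions that are more likely to be wrong.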

Lung Cancer Screening Using Adaptive Memory-Augmented Recurrent Networks

Sep 07, 2018

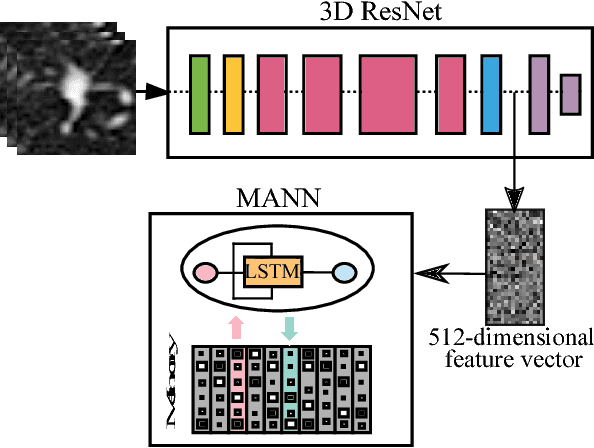

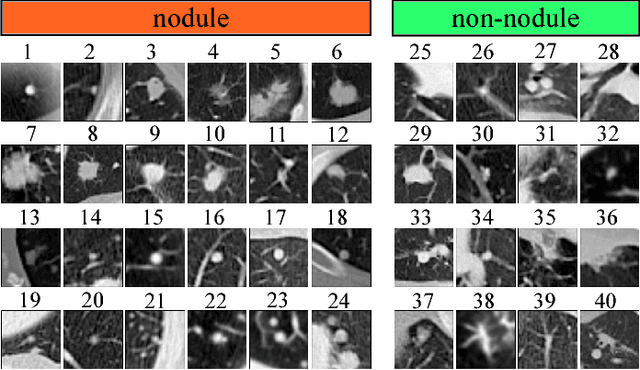

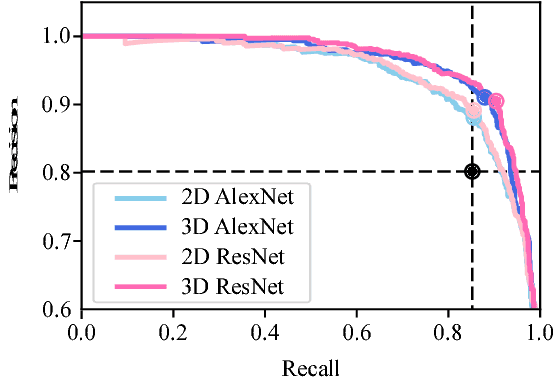

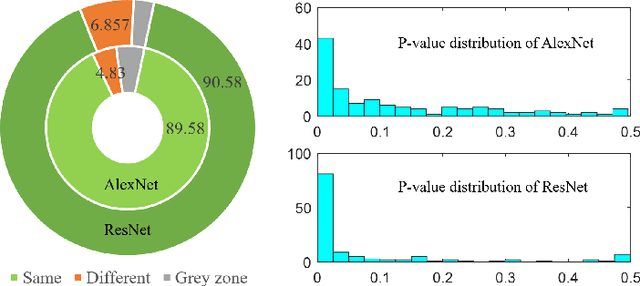

Abstract: In this paper, we investigate the effectiveness of deep learning techniques for lung nodule classification in computed tomography scans. Using fewer than 10,000 training examples, our deep networks perform two times better than standard radiology software. Visualization of the networks' neurons reveals semantically meaningful features that are consistent with clinical knowledge and radiologists' perception. Our paper also proposes a novel framework for rapidly adapting deep networks to radiologists' feedback, or to changes in the data caused by shifts in sensor resolution or patient population. The classification accuracy of our approach remains above 80% while popular deep networks' accuracy is around chance. Finally, we provide an in-depth analysis of our framework by asking a radiologist to examine important network features and perform blind re-labeling of the networks' mistakes.
