Sunwoong Yang

Node Assigned physics-informed neural networks for thermal-hydraulic system simulation: CVH/FL module

Apr 23, 2025

Abstract:Severe accidents (SAs) in nuclear power plants have been analyzed using thermal-hydraulic (TH) system codes such as MELCOR and MAAP. These codes efficiently simulate the progression of SAs, but they still have inherent limitations due to their inconsistent finite difference schemes. The use of empirical schemes incorporating both implicit and explicit formulations inherently induces unidirectional coupling in multi-physics analyses. The objective of this study is to develop a novel numerical method for TH system codes using physics-informed neural networks (PINNs). PINNs have shown strength in solving multi-physics problems owing to an innate feature of neural networks: automatic differentiation. We propose a node-assigned PINN (NA-PINN) that is suitable for control volume approach-based system codes. NA-PINN addresses the issue of spatial governing equation variation by assigning an individual network to each nodalization of the system code, such that spatial information is excluded from both the input and output domains, and each subnetwork learns to approximate a purely temporal solution. In this phase, we evaluated the accuracy of the PINN methods for the hydrodynamic module. In the six-water-tank simulation, PINN and NA-PINN showed maximum absolute errors of 1.678 and 0.007, respectively. It should be noted that only NA-PINN demonstrated acceptable accuracy. To the best of the authors' knowledge, this is the first study to successfully implement a system code using PINN. Our future work involves extending NA-PINN to a multi-physics solver and developing it in a surrogate manner.

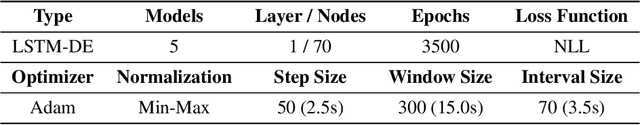

AI-powered Digital Twin of the Ocean: Reliable Uncertainty Quantification for Real-time Wave Height Prediction with Deep Ensemble

Dec 07, 2024

Abstract:Environmental pollution and the depletion of fossil fuels have prompted the need for eco-friendly power generation methods based on renewable energy. However, renewable energy sources often face challenges in providing stable power due to low energy density and non-stationarity. Wave energy converters (WECs), in particular, need reliable real-time wave height prediction to address these issues caused by irregular wave patterns, which can lead to the inefficient and unstable operation of WECs. In this study, we propose an AI-powered reliable real-time wave height prediction model, aiming for both high predictive accuracy and reliable uncertainty quantification (UQ). The proposed architecture, LSTM-DE, integrates long short-term memory (LSTM) networks for temporal prediction with deep ensemble (DE) for robust UQ, achieving accuracy and reliability in wave height prediction. To further enhance the reliability of the predictive models, uncertainty calibration is applied, which has proven to significantly improve the quality of the quantified uncertainty. Based on the real operational data obtained from an oscillating water column-wave energy converter (OWC-WEC) system in Jeju, South Korea, we demonstrate that the proposed LSTM-DE model architecture achieves notable predictive accuracy ($R^2 > 0.9$) while increasing the uncertainty quality by over 50% through a simple calibration technique. Furthermore, a comprehensive parametric study is conducted to explore the effects of key model hyperparameters, offering valuable guidelines for diverse operational scenarios, characterized by differences in wavelength, amplitude, and period. The findings show that the proposed method provides robust and reliable real-time wave height predictions, facilitating a digital twin of the ocean.

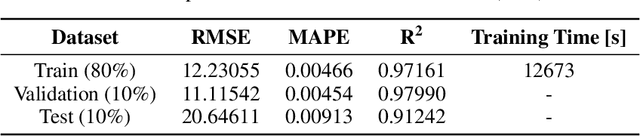

Towards Robust Spatio-Temporal Auto-Regressive Prediction: Adams-Bashforth Time Integration with Adaptive Multi-Step Rollout

Dec 07, 2024

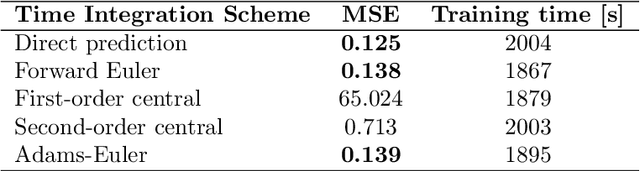

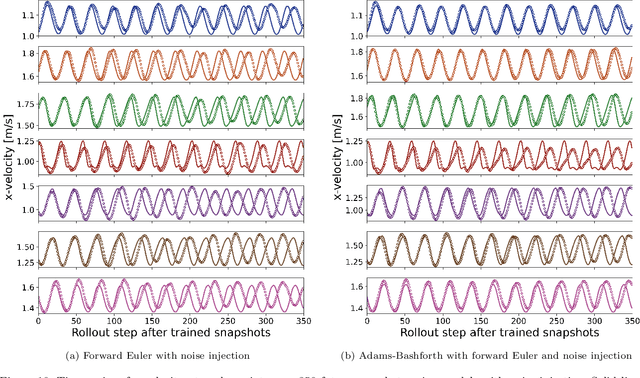

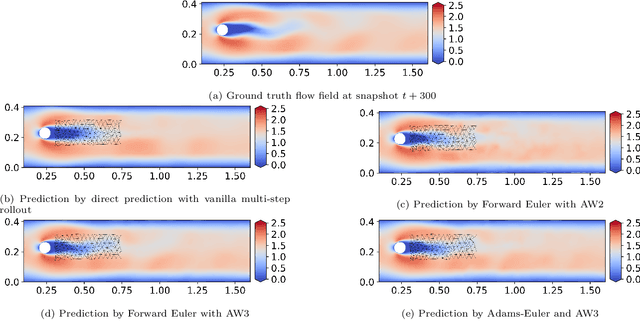

Abstract:This study addresses the critical challenge of error accumulation in spatio-temporal auto-regressive predictions within scientific machine learning models by introducing innovative temporal integration schemes and adaptive multi-step rollout strategies. We present a comprehensive analysis of time integration methods, highlighting the adaptation of the two-step Adams-Bashforth scheme to enhance long-term prediction robustness in auto-regressive models. Additionally, we improve temporal prediction accuracy through a multi-step rollout strategy that incorporates multiple future time steps during training, supported by three newly proposed approaches that dynamically adjust the importance of each future step. By integrating the Adams-Bashforth scheme with adaptive multi-step strategies, our graph neural network-based auto-regressive model accurately predicts 350 future time steps, even under practical constraints such as limited training data and minimal model capacity -- achieving an error of only 1.6% compared to the vanilla auto-regressive approach. Moreover, our framework demonstrates an 83% improvement in rollout performance over the noise injection method, a standard technique for enhancing long-term rollout performance. Its effectiveness is further validated in more challenging scenarios with truncated meshes, showcasing its adaptability and robustness in practical applications. This work introduces a versatile framework for robust long-term spatio-temporal auto-regressive predictions, effectively mitigating error accumulation across various model types and engineering disciplines.
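As a rough illustration of the two-step Adams-Bashforth update mentioned in this abstract, the sketch below rolls out a scalar ODE $du/dt = f(u)$ with $u_{n+1} = u_n + \Delta t\,(\tfrac{3}{2} f_n - \tfrac{1}{2} f_{n-1})$. It is not the authors' implementation: in the paper the time derivative would presumably come from a trained graph neural network, whereas here `f` is a hypothetical closed-form function, and the names `ab2_rollout` and `euler_rollout` as well as the forward Euler bootstrap step are assumptions made for the example.

```python
import math


def euler_rollout(f, u0, dt, n_steps):
    """Baseline: forward Euler rollout of du/dt = f(u)."""
    us = [u0]
    for _ in range(n_steps):
        us.append(us[-1] + dt * f(us[-1]))
    return us


def ab2_rollout(f, u0, dt, n_steps):
    """Two-step Adams-Bashforth rollout of du/dt = f(u).

    Assumes n_steps >= 1. The scheme needs two past derivatives,
    so the first step is bootstrapped with forward Euler (an assumption).
    """
    us = [u0]
    f_prev = f(u0)
    us.append(u0 + dt * f_prev)  # bootstrap step
    for _ in range(n_steps - 1):
        f_curr = f(us[-1])
        # u_{n+1} = u_n + dt * (3/2 f_n - 1/2 f_{n-1})
        us.append(us[-1] + dt * (1.5 * f_curr - 0.5 * f_prev))
        f_prev = f_curr
    return us


# Hypothetical test problem: du/dt = -u with u(0) = 1, exact solution exp(-t).
decay = lambda u: -u
ab2 = ab2_rollout(decay, 1.0, 0.01, 100)
euler = euler_rollout(decay, 1.0, 0.01, 100)
ab2_error = abs(ab2[-1] - math.exp(-1.0))
euler_error = abs(euler[-1] - math.exp(-1.0))
```

On this toy problem the Adams-Bashforth rollout ends closer to the exact value than forward Euler at the same step size, which gives a small-scale intuition for why a higher-order update can slow error accumulation over long rollouts.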

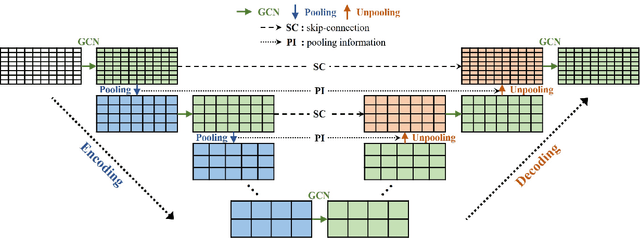

Enhancing Graph U-Nets for Mesh-Agnostic Spatio-Temporal Flow Prediction

Jun 06, 2024

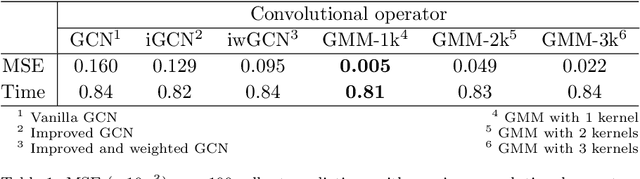

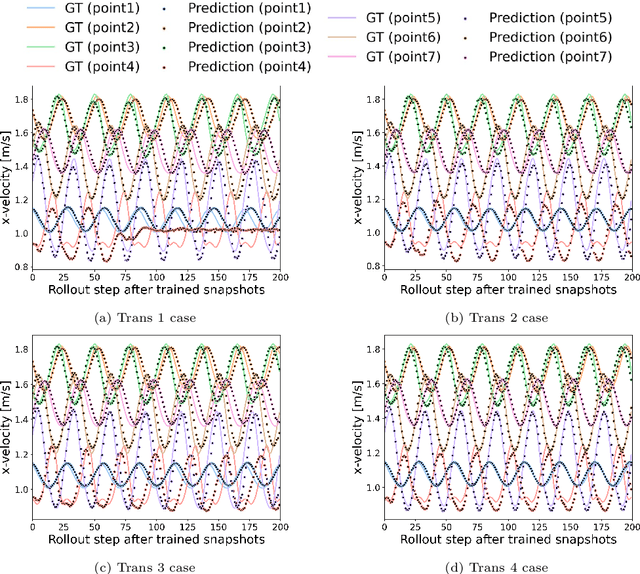

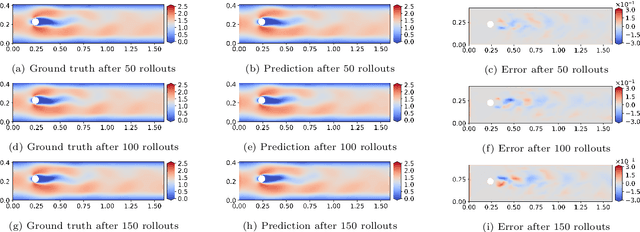

Abstract:This study aims to overcome the conventional deep-learning approaches based on convolutional neural networks, whose applicability to complex geometries and unstructured meshes is limited due to their inherent mesh dependency. We propose novel approaches to improve mesh-agnostic spatio-temporal prediction of transient flow fields using graph U-Nets, enabling accurate prediction on diverse mesh configurations. Key enhancements to the graph U-Net architecture, including the Gaussian mixture model convolutional operator and noise injection approaches, provide increased flexibility in modeling node dynamics: the former reduces prediction error by 95% compared to conventional convolutional operators, while the latter improves long-term prediction robustness, resulting in an error reduction of 86%. We also investigate transductive and inductive-learning perspectives of graph U-Nets with proposed improvements. In the transductive setting, they effectively predict quantities for unseen nodes within the trained graph. In the inductive setting, they successfully perform in mesh scenarios with different vortex-shedding periods, showing 98% improvement in predicting the future flow fields compared to a model trained without the inductive settings. It is found that graph U-Nets without pooling operations, i.e. without reducing and restoring the node dimensionality of the graph data, perform better in inductive settings due to their ability to learn from the detailed structure of each graph. Meanwhile, we also discover that the choice of normalization technique significantly impacts graph U-Net performance.

Towards Reliable Uncertainty Quantification via Deep Ensembles in Multi-output Regression Task

Apr 14, 2023

Abstract:Deep ensemble is a simple and straightforward approach for approximating Bayesian inference and has been successfully applied to many classification tasks. This study aims to comprehensively investigate this approach in the multi-output regression task to predict the aerodynamic performance of a missile configuration. By scrutinizing the effect of the number of neural networks used in the ensemble, an obvious trend toward underconfidence in estimated uncertainty is observed. In this context, we propose the deep ensemble framework that applies the post-hoc calibration method, and its improved uncertainty quantification performance is demonstrated. It is compared with Gaussian process regression, the most prevalent model for uncertainty quantification in engineering, and is proven to have superior performance in terms of regression accuracy, reliability of estimated uncertainty, and training efficiency. Finally, the impact of the suggested framework on the results of Bayesian optimization is examined, showing that whether or not the deep ensemble is calibrated can result in completely different exploration characteristics. This framework can be seamlessly applied and extended to any regression task, as no special assumptions have been made for the specific problem used in this study.
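To make the deep ensemble idea in this abstract concrete, the sketch below combines the Gaussian predictions of several ensemble members into a single predictive mean and variance, using the usual mixture formulas $\mu_* = \frac{1}{M}\sum_i \mu_i$ and $\sigma_*^2 = \frac{1}{M}\sum_i (\sigma_i^2 + \mu_i^2) - \mu_*^2$. The abstract does not say which post-hoc calibration method is used, so the second function is only an assumed stand-in: a single scale factor for the predicted standard deviation, fitted on held-out data. The function names are hypothetical.

```python
import math


def combine_ensemble(means, variances):
    """Merge per-member Gaussian predictions for one output into a mixture mean/variance."""
    m = len(means)
    mu = sum(means) / m
    # Mixture variance: average of (member variance + member mean squared) minus mu squared.
    var = sum(v + mu_i ** 2 for mu_i, v in zip(means, variances)) / m - mu ** 2
    return mu, var


def std_scale_factor(y_true, mu_pred, var_pred):
    """Assumed calibration step (not necessarily the authors' method).

    Returns the factor s that minimizes the Gaussian negative log-likelihood on
    held-out data when every predicted standard deviation is multiplied by s.
    s < 1 means the model was underconfident; s > 1 means overconfident.
    """
    z_sq = [(y - mu) ** 2 / v for y, mu, v in zip(y_true, mu_pred, var_pred)]
    return math.sqrt(sum(z_sq) / len(z_sq))


# Two hypothetical ensemble members predicting the same scalar output.
mu_star, var_star = combine_ensemble([1.0, 3.0], [0.5, 0.5])

# Hypothetical validation targets against ensemble predictions.
s = std_scale_factor([0.0, 2.0], [1.0, 1.0], [0.25, 0.25])
```

In this toy case the combined variance exceeds each member's own variance because the members disagree about the mean; that disagreement is the part of the uncertainty the ensemble adds over a single network.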

Physics-aware Reduced-order Modeling of Transonic Flow via $β$-Variational Autoencoder

May 02, 2022

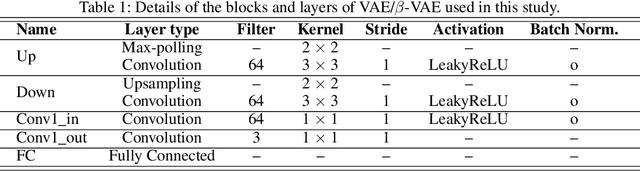

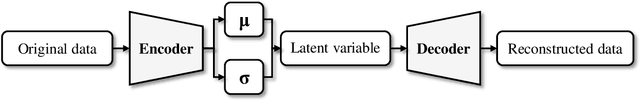

Abstract:Autoencoder-based reduced-order modeling has recently attracted significant attention, owing to the ability to capture underlying nonlinear features. However, its uninterpretable latent variables (LVs) severely undermine the applicability to various physical problems. This study proposes physics-aware reduced-order modeling using a $\beta$-variational autoencoder to address this issue. The presented approach can quantify the rank and independence of LVs, which is validated both quantitatively and qualitatively using various techniques. Accordingly, LVs containing interpretable physical features were successfully identified. It was also verified that these "physics-aware" LVs correspond to the physical parameters that are the generating factors of the dataset, i.e., the Mach number and angle of attack in this study. Moreover, the effects of these physics-aware LVs on the accuracy of reduced-order modeling were investigated, which verified the potential of this method to alleviate the computational cost of the offline stage by excluding physics-unaware LVs.
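For readers unfamiliar with the $\beta$-variational autoencoder objective, the sketch below evaluates a loss of the form reconstruction error plus $\beta$ times the KL divergence between a diagonal Gaussian posterior and a standard normal prior. It also returns the KL term per latent dimension, which is one common way to see which latent variables carry information; whether the authors rank their LVs this way is an assumption, and the squared-error reconstruction term, the default `beta=4.0`, and the function names are illustrative choices only.

```python
import math


def kl_per_dimension(mu, logvar):
    """KL( N(mu, exp(logvar)) || N(0, 1) ) for each latent dimension."""
    return [0.5 * (m * m + math.exp(lv) - 1.0 - lv) for m, lv in zip(mu, logvar)]


def beta_vae_loss(x, x_recon, mu, logvar, beta=4.0):
    """Squared-error reconstruction term plus beta-weighted KL term (illustrative form)."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    kl = sum(kl_per_dimension(mu, logvar))
    return recon + beta * kl


# Hypothetical encoder output with two latent dimensions:
# the first deviates from the prior, the second matches it exactly.
kl_dims = kl_per_dimension([1.0, 0.0], [0.0, 0.0])
loss = beta_vae_loss([1.0, 2.0], [1.0, 1.0], [1.0, 0.0], [0.0, 0.0], beta=4.0)
```

A latent dimension whose KL term stays near zero has a posterior indistinguishable from the prior and therefore encodes nothing about the input, so sorting dimensions by this value gives a simple ranking of active versus inactive latent variables.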

Inverse design optimization framework via a two-step deep learning approach: application to a wind turbine airfoil

Aug 19, 2021

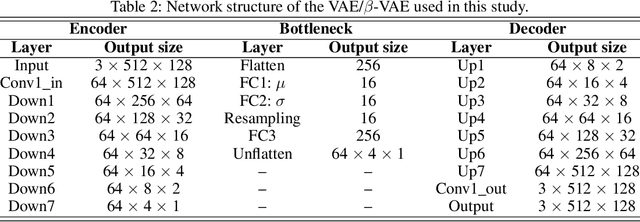

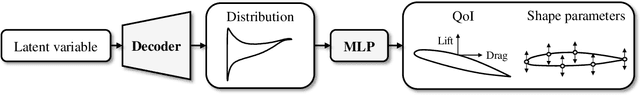

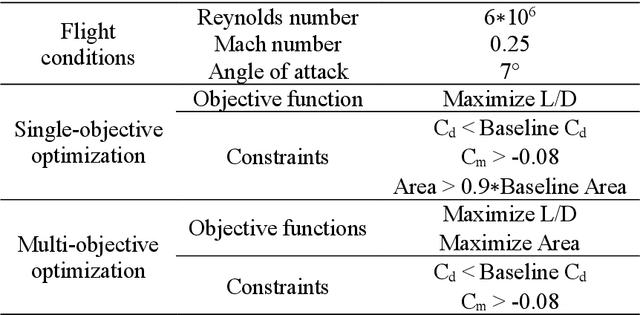

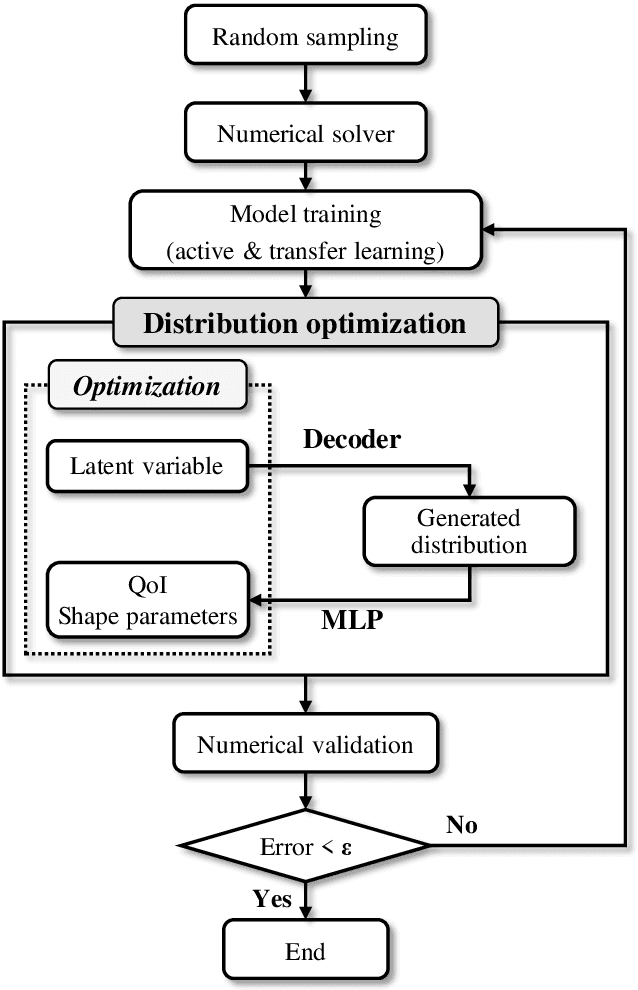

Abstract:Though the inverse approach is computationally efficient in aerodynamic design as the desired target performance distribution is specified, it has some significant limitations that prevent full efficiency from being achieved. First, the iterative procedure should be repeated whenever the specified target distribution changes. Target distribution optimization can be performed to clarify the ambiguity in specifying this distribution, but several additional problems arise in this process such as loss of the representation capacity due to parameterization of the distribution, excessive constraints for a realistic distribution, inaccuracy of quantities of interest due to theoretical/empirical predictions, and the impossibility of explicitly imposing geometric constraints. To deal with these issues, a novel inverse design optimization framework with a two-step deep learning approach is proposed. A variational autoencoder and multi-layer perceptron are used to generate a realistic target distribution and predict the quantities of interest and shape parameters from the generated distribution, respectively. Then, target distribution optimization is performed as the inverse design optimization. The proposed framework applies active learning and transfer learning techniques to improve accuracy and efficiency. Finally, the framework is validated through aerodynamic shape optimizations of the airfoil of a wind turbine blade, where inverse design is actively being applied. The results of the optimizations show that this framework is sufficiently accurate, efficient, and flexible to be applied to other inverse design engineering applications.
