Sunghwa Lee

Toward High Accuracy DME for Alternative Aircraft Positioning: SFOL Pulse Transmission in High-Power DME

Jun 07, 2025

Abstract:The Stretched-FrOnt-Leg (SFOL) pulse is an advanced distance measuring equipment (DME) pulse that offers superior ranging accuracy compared to conventional Gaussian pulses. Successful SFOL pulse transmission has been recently demonstrated from a commercial Gaussian pulse-based DME in low-power mode utilizing digital predistortion (DPD) techniques for power amplifiers. These adjustments were achieved through software modifications, enabling SFOL integration without replacing existing DME infrastructure. However, the SFOL pulse is designed to optimize ranging capabilities by leveraging the effective radiated power (ERP) and pulse shape parameters permitted within DME specifications. Consequently, it operates with narrow margins against these specifications, potentially leading to non-compliance when transmitted in high-power mode. This paper introduces strategies to enable a Gaussian pulse-based DME to transmit the SFOL pulse while adhering to DME specifications in high-power mode. The proposed strategies involve use of a variant of the SFOL pulse and DPD techniques utilizing truncated singular value decomposition, tailored for high-power DME operations. Test results, conducted on a testbed utilizing a commercial Gaussian pulse-based DME, demonstrate the effectiveness of these strategies, ensuring compliance with DME specifications in high-power mode with minimal performance loss. This study enables cost-effective integration of high-accuracy SFOL pulses into existing high-power DME systems, enhancing aircraft positioning precision while ensuring compliance with industry standards.
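The abstract above mentions truncated singular value decomposition but does not say how it enters the DPD computation. As a generic illustration only, and assuming it serves to regularize an ill-conditioned least-squares fit, the sketch below solves `A x ≈ b` while keeping only the largest singular values. The function name `tsvd_solve` and the toy data are hypothetical and are not taken from the paper.

```python
import numpy as np

def tsvd_solve(A, b, rank):
    """Least-squares solution of A x ≈ b using only the `rank` largest singular values."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    k = min(rank, len(s))
    # Project b onto the leading left singular vectors, rescale, map back.
    coeffs = (U[:, :k].T @ b) / s[:k]
    return Vt[:k].T @ coeffs

# Toy ill-conditioned system (illustrative data, not measurements).
rng = np.random.default_rng(0)
A = rng.standard_normal((50, 5))
A[:, 4] = A[:, 3] + 1e-9 * rng.standard_normal(50)  # two nearly dependent columns
x_true = np.array([1.0, -2.0, 0.5, 1.0, 1.0])
b = A @ x_true + 1e-3 * rng.standard_normal(50)

x_full = tsvd_solve(A, b, rank=5)   # keeps the tiny singular value
x_trunc = tsvd_solve(A, b, rank=4)  # discards it

print("norm of full-rank solution:", np.linalg.norm(x_full))
print("norm of truncated solution:", np.linalg.norm(x_trunc))
```

Dropping the smallest singular value trades a small increase in fit residual for a solution that does not amplify noise along the near-dependent direction, which is the usual motivation for truncation.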

IR-UWB Radar-Based Contactless Silent Speech Recognition of Vowels, Consonants, Words, and Phrases

Dec 15, 2023

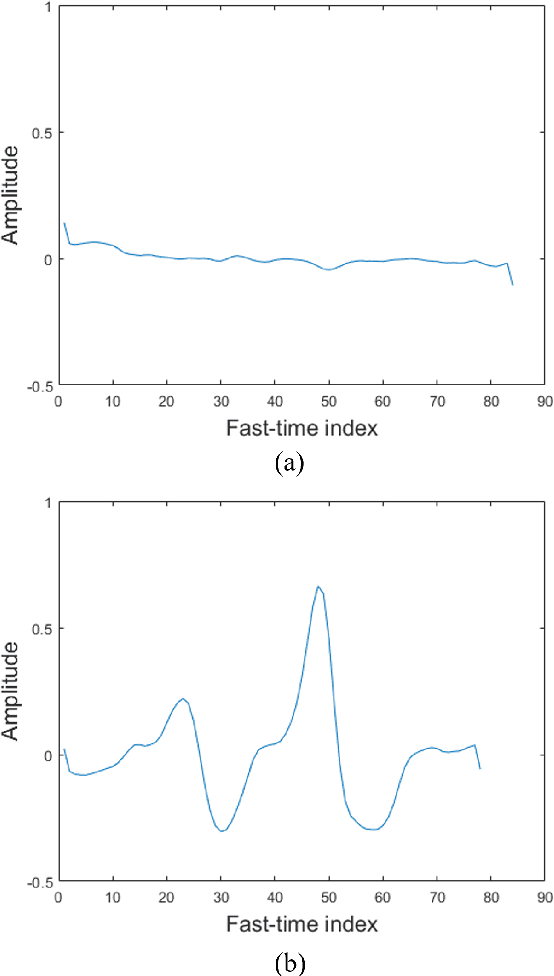

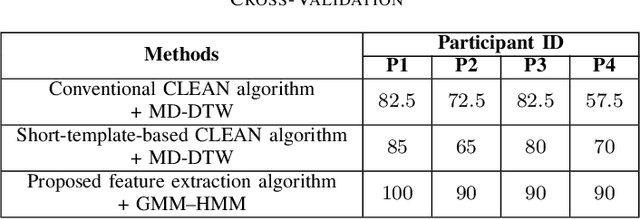

Abstract:Several sensing techniques have been proposed for silent speech recognition (SSR); however, many of these methods require invasive processes or sensor attachment to the skin using adhesive tape or glue, rendering them unsuitable for frequent use in daily life. By contrast, impulse radio ultra-wideband (IR-UWB) radar can operate without physical contact with users' articulators and related body parts, offering several advantages for SSR. These advantages include high range resolution, high penetrability, low power consumption, robustness to external light or sound interference, and the ability to be embedded in space-constrained handheld devices. This study demonstrated IR-UWB radar-based contactless SSR using four types of speech stimuli (vowels, consonants, words, and phrases). To achieve this, a novel speech feature extraction algorithm specifically designed for IR-UWB radar-based SSR is proposed. Each speech stimulus is recognized by applying a classification algorithm to the extracted speech features. Two different algorithms, multidimensional dynamic time warping (MD-DTW) and deep neural network-hidden Markov model (DNN-HMM), were compared for the classification task. Additionally, a favorable radar antenna position, either in front of the user's lips or below the user's chin, was determined to achieve higher recognition accuracy. Experimental results demonstrated the efficacy of the proposed speech feature extraction algorithm combined with DNN-HMM for classifying vowels, consonants, words, and phrases. Notably, this study represents the first demonstration of phoneme-level SSR using contactless radar.
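For readers unfamiliar with MD-DTW, one of the two classifiers compared in the abstract above, here is a minimal sketch of the textbook form: dynamic time warping where each frame is a feature vector and the local cost is the Euclidean distance between frames. The template-matching helper `classify`, the function names, and the toy sequences are assumptions for illustration; the paper's actual features and classifier settings are not given in the abstract.

```python
import numpy as np

def md_dtw_distance(x, y):
    """DTW distance between two sequences of shape (T, D) with Euclidean frame cost."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n, m = len(x), len(y)
    # Pairwise distances between every frame of x and every frame of y.
    local = np.linalg.norm(x[:, None, :] - y[None, :, :], axis=2)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = local[i - 1, j - 1] + min(
                acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1]
            )
    return acc[n, m]

def classify(query, templates):
    """Return the label of the template closest to `query` (hypothetical helper)."""
    return min(templates, key=lambda label: md_dtw_distance(query, templates[label]))

# Toy two-dimensional feature sequences (illustrative only).
templates = {
    "rising": [[0, 0], [1, 1], [2, 2]],
    "falling": [[2, 2], [1, 1], [0, 0]],
}
query = [[0, 0], [0, 0], [1, 1], [2, 2], [2, 2]]  # "rising", spoken more slowly
print(classify(query, templates))
```

Because the warping path may repeat frames, a slower or faster rendition of the same pattern still aligns closely with its template.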

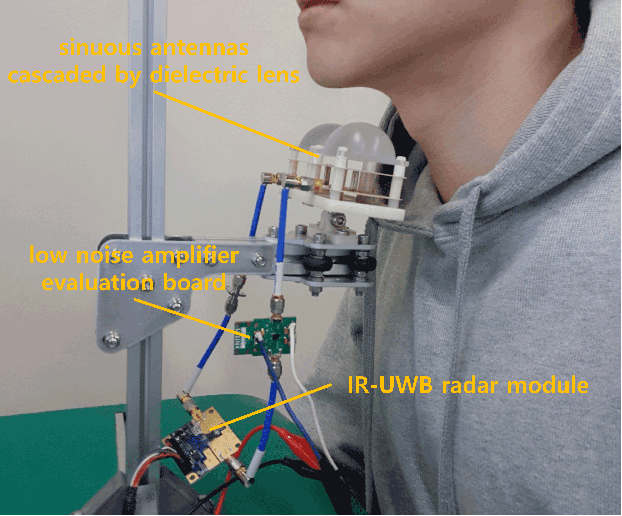

Movement Detection of Tongue and Related Body Parts Using IR-UWB Radar

Sep 05, 2022

Abstract:Because an impulse radio ultra-wideband (IR-UWB) radar can detect targets with high accuracy, work through occluding materials, and operate without contact, it is an attractive hardware solution for building silent speech interfaces, which are non-audio-based speech communication devices. As tongue movement is strongly engaged in pronunciation, detecting its movement is crucial for developing silent speech interfaces. In this study, we attempted to classify the motionless and moving states of an invisible tongue and its related body parts using an IR-UWB radar whose antennas were pointed toward the participant's chin. Using the proposed feature extraction algorithm and a Gaussian mixture model - hidden Markov model, we classified two states of the invisible tongue of four individual participants with a minimum accuracy of 90%.

SFOL DME Pulse Shaping Through Digital Predistortion for High-Accuracy DME

Nov 01, 2021

Abstract:The Stretched-FrOnt-Leg (SFOL) pulse is a high-accuracy distance measuring equipment (DME) pulse developed to support alternative positioning and navigation for aircraft during global navigation satellite system outages. To facilitate the use of the SFOL pulse, it is best to use legacy DMEs that are already deployed to transmit the SFOL pulse, rather than the current Gaussian pulse, through software changes only. When attempting to transmit the SFOL pulse in legacy DMEs, the greatest challenge is the pulse shape distortion caused by the pulse-shaping circuits and power amplifiers in the transmission unit such that the original SFOL pulse shape is no longer preserved. This letter proposes an inverse-learning-based DME digital predistortion method and presents successfully transmitted SFOL pulses from a testbed based on a commercial legacy DME that was designed to transmit Gaussian pulses.
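The general idea behind inverse-learning predistortion can be sketched as follows: fit a model that maps the amplifier's output back to its input, then apply that model to the desired waveform before transmission. The example below is a toy under strong assumptions (a memoryless, real-valued amplifier model `pa` and an odd-order polynomial inverse), all of which are hypothetical; the letter's actual model of the DME transmission unit is not described in the abstract.

```python
import numpy as np

def pa(x):
    # Hypothetical memoryless amplifier with mild compression (assumption).
    return x - 0.2 * x**3

def basis(v):
    # Odd-order polynomial basis for the inverse model (assumption).
    return np.column_stack([v, v**3, v**5])

# Step 1: drive the amplifier and record input/output pairs.
x_train = np.linspace(0.0, 1.0, 200)
y_train = pa(x_train)

# Step 2: learn the inverse mapping, output -> input, by least squares.
coef, *_ = np.linalg.lstsq(basis(y_train), x_train, rcond=None)

def predistort(d):
    """Apply the learned inverse to the desired waveform."""
    return basis(d) @ coef

# Step 3: compare distortion with and without predistortion.
desired = np.linspace(0.0, 0.8, 50)
err_raw = np.max(np.abs(pa(desired) - desired))
err_dpd = np.max(np.abs(pa(predistort(desired)) - desired))
print("max error without DPD:", err_raw)
print("max error with DPD:   ", err_dpd)
```

A practical transmitter would also need to account for memory effects and the pulse-shaping circuits the abstract mentions, which this toy omits.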
