Sultan Rizky Hikmawan Madjid

On the Soft-Subnetwork for Few-shot Class Incremental Learning

Sep 15, 2022

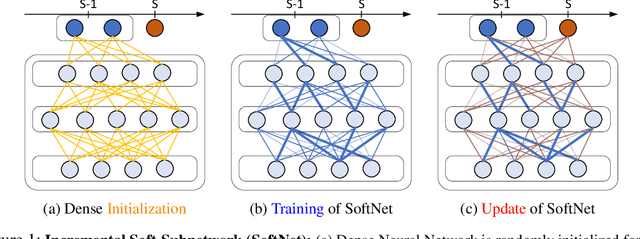

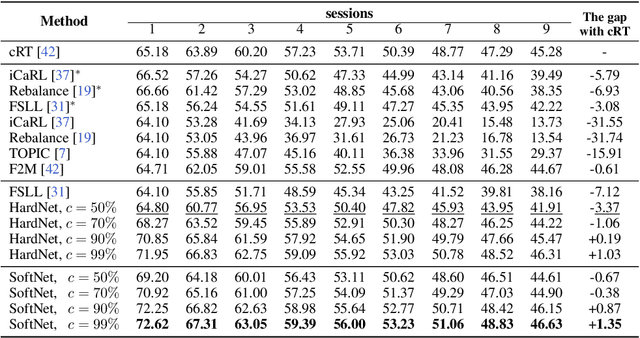

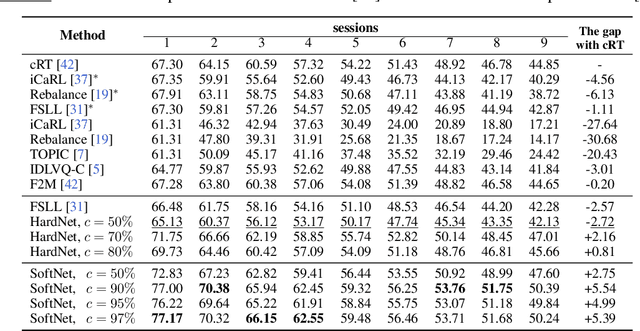

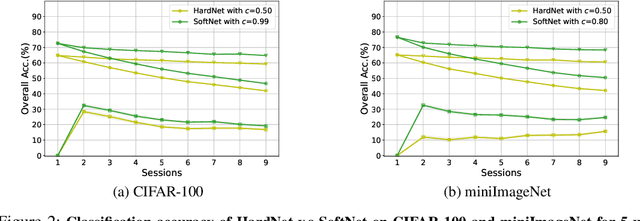

Abstract: Inspired by the Regularized Lottery Ticket Hypothesis (RLTH), which hypothesizes that a dense network contains smooth (non-binary) subnetworks that perform competitively with the dense network itself, we propose a few-shot class incremental learning (FSCIL) method referred to as Soft-SubNetworks (SoftNet). Our objective is to learn a sequence of sessions incrementally, where each session includes only a few training instances per class, while preserving the knowledge of previously learned sessions. SoftNet jointly learns the model weights and adaptive non-binary soft masks at a base training session, in which each mask consists of a major and a minor subnetwork; the former aims to minimize catastrophic forgetting during training, and the latter aims to avoid overfitting to the few samples in each new training session. We provide comprehensive empirical validation demonstrating that SoftNet effectively tackles the few-shot incremental learning problem by surpassing the performance of state-of-the-art baselines on benchmark datasets.
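To make the idea of a non-binary soft mask concrete, here is a minimal sketch and not the authors' implementation. It assumes the major subnetwork is the top fraction of weights ranked by a score and receives a mask value of 1, while the remaining (minor) weights receive values drawn uniformly from [0, 1). The function name `soft_mask`, the `keep_ratio` parameter, and the use of weight magnitude as the score are all hypothetical; in the abstract the masks are learned jointly with the weights.

```python
import random

def soft_mask(scores, keep_ratio, rng):
    """Return a soft mask: 1.0 on the top-scoring (major) entries,
    a uniform value in [0, 1) on the remaining (minor) entries."""
    # Number of entries assigned to the major subnetwork (at least one).
    k = max(1, round(keep_ratio * len(scores)))
    # Indices sorted from highest to lowest score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    major = set(order[:k])
    return [1.0 if i in major else rng.random() for i in range(len(scores))]

rng = random.Random(0)
# Toy flattened weight vector standing in for one layer.
weights = [rng.gauss(0.0, 1.0) for _ in range(20)]
# Assumption: weight magnitude stands in for a learned importance score.
scores = [abs(w) for w in weights]
mask = soft_mask(scores, keep_ratio=0.3, rng=rng)
# Major weights pass through unchanged; minor weights are scaled down.
masked_weights = [w * m for w, m in zip(weights, mask)]
```

In this toy setup, the entries with mask value 1 play the role of the major subnetwork, and the fractional entries play the role of the minor subnetwork.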

CISRNet: Compressed Image Super-Resolution Network

Jan 16, 2022

Abstract: In recent years, a large body of research has been conducted on Single Image Super-Resolution (SISR). However, to the best of our knowledge, few of these studies focus on compressed images. Complicated compression artifacts hinder progress on this problem despite its high practical value. To tackle it, we propose CISRNet, a network that employs a two-stage coarse-to-fine learning framework optimized for the Compressed Image Super-Resolution problem. Specifically, CISRNet consists of two main subnetworks, a coarse network and a refinement network, which employ recursive and residual learning, respectively. Extensive experiments show that, with careful design choices, CISRNet performs favorably against competing Single Image Super-Resolution methods on Compressed Image Super-Resolution tasks.
