Sue Min Cho

Explainable AI for Collaborative Assessment of 2D/3D Registration Quality

Jul 23, 2025

Abstract: As surgery embraces digital transformation--integrating sophisticated imaging, advanced algorithms, and robotics to support and automate complex sub-tasks--human judgment of system correctness remains a vital safeguard for patient safety. This shift introduces new "operator-type" roles tasked with verifying complex algorithmic outputs, particularly at critical junctures of the procedure, such as the intermediary check before drilling or implant placement. A prime example is 2D/3D registration, a key enabler of image-based surgical navigation that aligns intraoperative 2D images with preoperative 3D data. Although registration algorithms have advanced significantly, they occasionally yield inaccurate results. Because even small misalignments can lead to revision surgery or irreversible surgical errors, there is a critical need for robust quality assurance. Current visualization-based strategies alone have been found insufficient to enable humans to reliably detect 2D/3D registration misalignments. In response, we propose the first artificial intelligence (AI) framework trained specifically for 2D/3D registration quality verification, augmented by explainability features that clarify the model's decision-making. Our explainable AI (XAI) approach aims to enhance informed decision-making for human operators by providing a second opinion together with a rationale behind it. Through algorithm-centric and human-centered evaluations, we systematically compare four conditions: AI-only, human-only, human-AI, and human-XAI. Our findings reveal that while explainability features modestly improve user trust and willingness to override AI errors, they do not exceed the standalone AI in aggregate performance. Nevertheless, future work extending both the algorithmic design and the human-XAI collaboration elements holds promise for more robust quality assurance of 2D/3D registration.

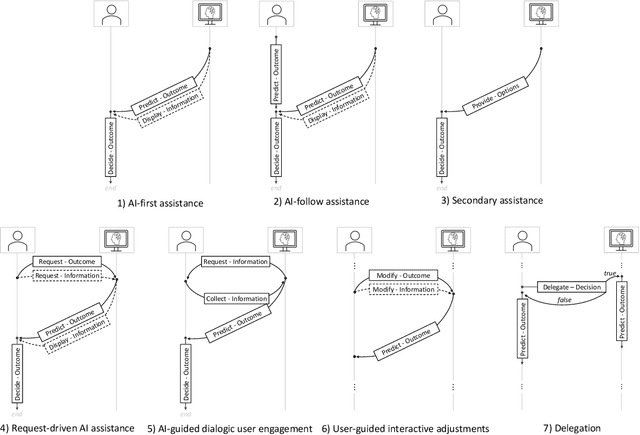

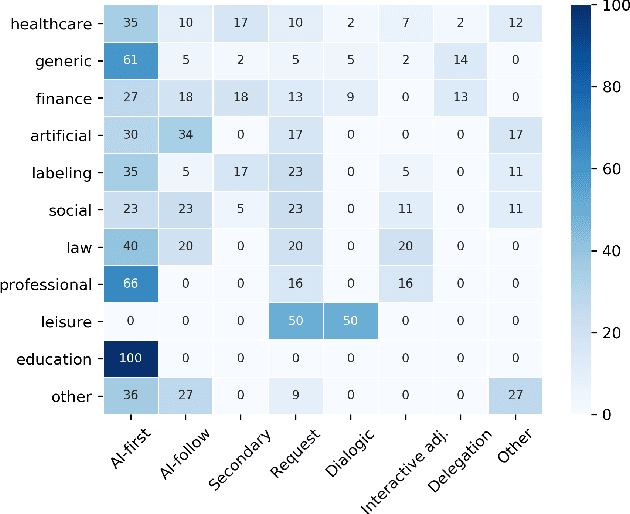

Designing AI Support for Human Involvement in AI-assisted Decision Making: A Taxonomy of Human-AI Interactions from a Systematic Review

Oct 31, 2023

Abstract: Efforts in leveraging Artificial Intelligence (AI) in decision support systems have disproportionately focused on technological advancements, often overlooking the alignment between algorithmic outputs and human expectations. To address this, explainable AI promotes AI development from a more human-centered perspective. Determining what information AI should provide to aid humans is vital; however, how the information is presented, e.g., the sequence of recommendations and the solicitation of interpretations, is equally crucial. This motivates the need to more precisely study Human-AI interaction as a pivotal component of AI-based decision support. While several empirical studies have evaluated Human-AI interactions in multiple application domains in which interactions can take many forms, there is not yet a common vocabulary to describe human-AI interaction protocols. To address this gap, we describe the results of a systematic review of the AI-assisted decision making literature, analyzing 105 selected articles, which grounds the introduction of a taxonomy of interaction patterns that delineate various modes of human-AI interactivity. We find that current interactions are dominated by simplistic collaboration paradigms and report comparatively little support for truly interactive functionality. Our taxonomy serves as a valuable tool to understand how interactivity with AI is currently supported in decision-making contexts and foster deliberate choices of interaction designs.

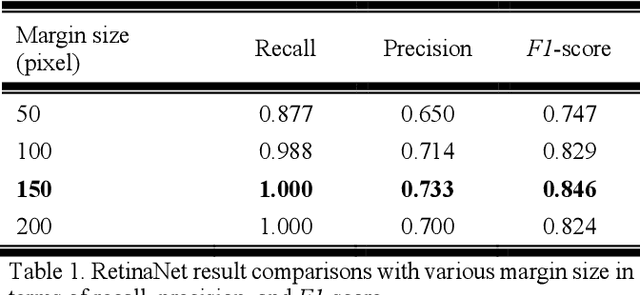

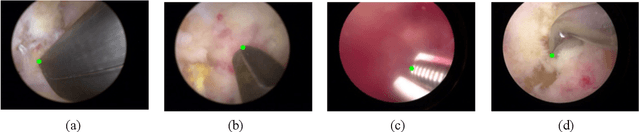

Automatic Tip Detection of Surgical Instruments in Biportal Endoscopic Spine Surgery

Nov 07, 2019

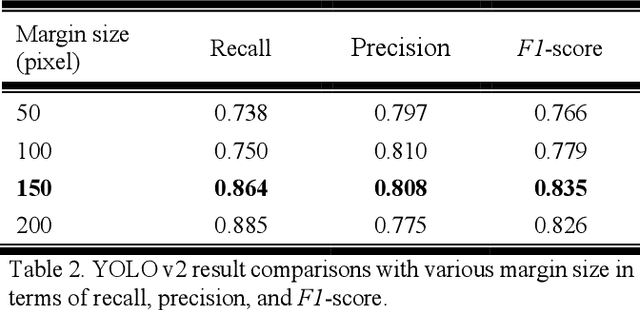

Abstract: Some endoscopic surgeries require a surgeon to hold the endoscope with one hand and the surgical instruments with the other hand to perform the actual surgery with correct vision. Recent technical advances in deep learning and in robotics make it possible to introduce robotic assistance to these endoscopic surgeries. Freeing one of the surgeon's hands would allow the surgeon to operate with both hands and to use more intricate and sophisticated techniques. Recently, deep learning with convolutional neural networks has achieved state-of-the-art results in computer vision. Therefore, the aim of this study is to automatically detect the tip of the instrument, localize a point, and evaluate detection accuracy in biportal endoscopic spine surgery. The localized point could be used as a controller input for robotic endoscopy in these types of endoscopic surgeries.
