Steven Lay

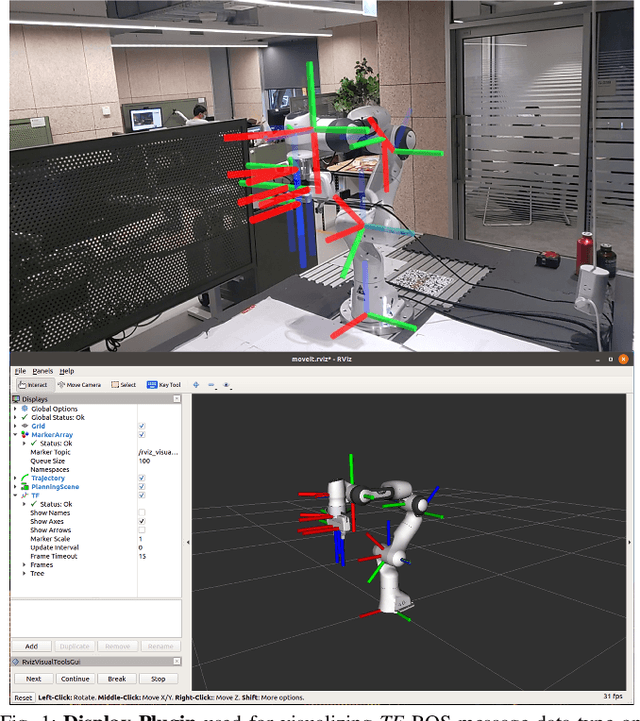

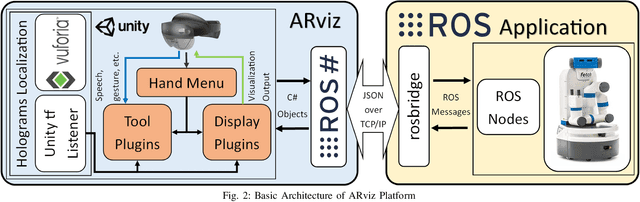

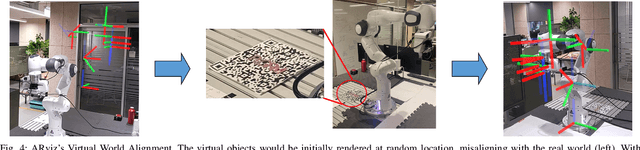

ARviz -- An Augmented Reality-enabled Visualization Platform for ROS Applications

Oct 29, 2021

Abstract: Current robot interfaces such as teach pendants and 2D screen displays used for task visualization and interaction often seem unintuitive and limited in terms of information flow. This compromises task efficiency, as interacting with the interface can distract the user from the task at hand. Augmented Reality (AR) technology offers the capability to create visually rich displays and intuitive interaction elements in situ. In recent years, AR has shown promising potential to enable effective human-robot interaction. We introduce ARviz, a versatile, extendable AR visualization platform built for robot applications developed with the widely used Robot Operating System (ROS) framework. ARviz aims to provide both a universal visualization platform with the capability of displaying any ROS message data type in AR, as well as a multimodal user interface for interacting with robots over ROS. ARviz is built as a platform incorporating a collection of plugins that provide visualization and/or interaction components. Users can also extend the platform by implementing new plugins to suit their needs. We present three use cases as well as two potential use cases to showcase the capabilities and benefits of the ARviz platform for human-robot interaction applications. The open access source code for our ARviz platform is available at: https://github.com/hri-group/arviz.
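The plugin idea described in the abstract can be illustrated with a minimal sketch. This is not ARviz's actual API: the `PluginRegistry` class, the `register` decorator, and the `draw_point` plugin are hypothetical names introduced here, and the message is modeled as a plain dictionary rather than a real ROS message object. It only shows the general pattern of mapping a ROS message type name to a visualization handler that users can extend.

```python
from typing import Any, Callable, Dict


class PluginRegistry:
    """Hypothetical registry mapping ROS message type names to visualizer plugins."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Callable[[Any], str]] = {}

    def register(self, msg_type: str) -> Callable:
        """Decorator that registers a plugin for the given message type name."""
        def decorator(fn: Callable[[Any], str]) -> Callable[[Any], str]:
            self._plugins[msg_type] = fn
            return fn
        return decorator

    def visualize(self, msg_type: str, msg: Any) -> str:
        """Dispatch a message to the plugin registered for its type."""
        if msg_type not in self._plugins:
            raise KeyError(f"no plugin registered for {msg_type}")
        return self._plugins[msg_type](msg)


registry = PluginRegistry()


# A user-defined plugin: describes how a geometry_msgs/Point would be drawn.
# The dictionary layout is an assumption made for this sketch.
@registry.register("geometry_msgs/Point")
def draw_point(msg: dict) -> str:
    return f"sphere at ({msg['x']}, {msg['y']}, {msg['z']})"
```

Calling `registry.visualize("geometry_msgs/Point", {"x": 1, "y": 2, "z": 3})` dispatches to `draw_point`; supporting a new message type only requires registering another function.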

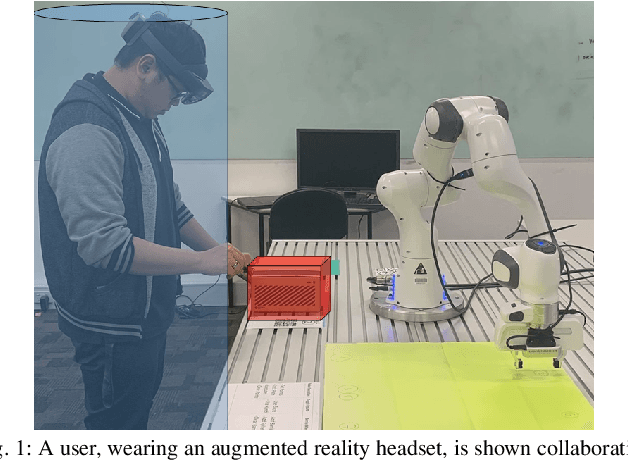

Virtual Barriers in Augmented Reality for Safe and Effective Human-Robot Cooperation in Manufacturing

Apr 12, 2021

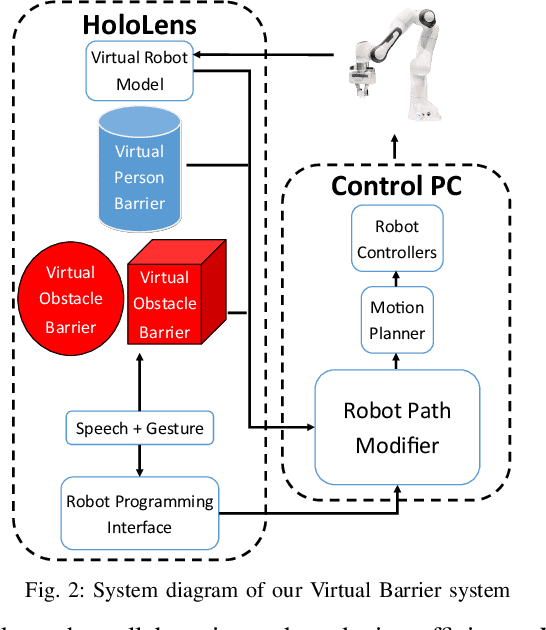

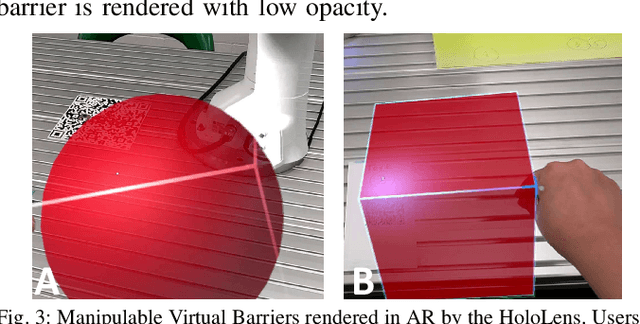

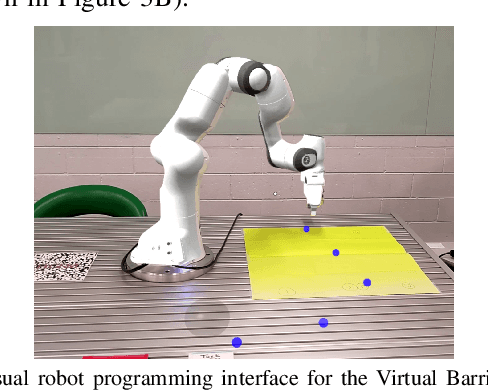

Abstract: Safety is a fundamental requirement in any human-robot collaboration scenario. To ensure the safety of users in such scenarios, we propose a novel Virtual Barrier system facilitated by an augmented reality interface. Our system provides two kinds of Virtual Barriers to ensure safety: 1) a Virtual Person Barrier which encapsulates and follows the user to protect them from colliding with the robot, and 2) Virtual Obstacle Barriers which users can spawn to protect objects or regions that the robot should not enter. To enable effective human-robot collaboration, our system includes an intuitive robot programming interface utilizing speech commands and hand gestures, and features the capability of automatic path re-planning when potential collisions are detected as a result of a barrier intersecting the robot's planned path. We compared our novel system with a standard 2D display interface through a user study, where participants performed a task mimicking an industrial manufacturing procedure. Results show that our system increases the user's sense of safety and task efficiency, and makes the interaction more intuitive.
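The collision check that triggers re-planning can be sketched as follows. The abstract does not state the barrier geometry or the detection method, so this example assumes a spherical barrier and a path given as straight segments between 3D waypoints; the function names `segment_hits_sphere` and `path_blocked` are hypothetical. A planner would call something like `path_blocked` and request a new path when it returns `True`.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def segment_hits_sphere(a: Point, b: Point, center: Point, radius: float) -> bool:
    """Return True if segment a-b comes within `radius` of `center`."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [center[i] - a[i] for i in range(3)]
    ab_len2 = sum(v * v for v in ab)
    if ab_len2 == 0.0:
        t = 0.0  # degenerate segment: a and b coincide
    else:
        # Project the center onto the segment and clamp to its endpoints.
        t = max(0.0, min(1.0, sum(ab[i] * ac[i] for i in range(3)) / ab_len2))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.dist(closest, center) <= radius


def path_blocked(path: Sequence[Point], center: Point, radius: float) -> bool:
    """Return True if any segment of the waypoint path intersects the barrier."""
    return any(
        segment_hits_sphere(p, q, center, radius)
        for p, q in zip(path, path[1:])
    )
```

For a path along the x-axis, `[(0, 0, 0), (1, 0, 0), (2, 0, 0)]`, a barrier of radius 0.5 centered at `(1, 0.2, 0)` blocks the path, while the same barrier centered at `(1, 2, 0)` does not.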
