Stephen Lindsly

RAILS: A Robust Adversarial Immune-inspired Learning System

Jun 27, 2021

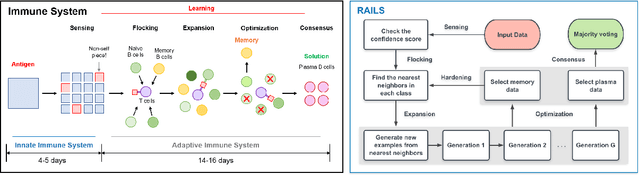

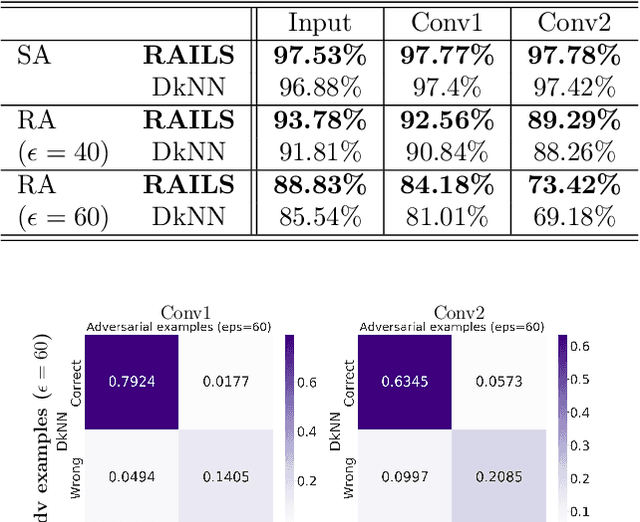

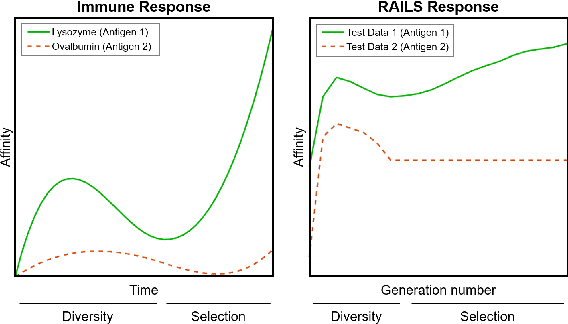

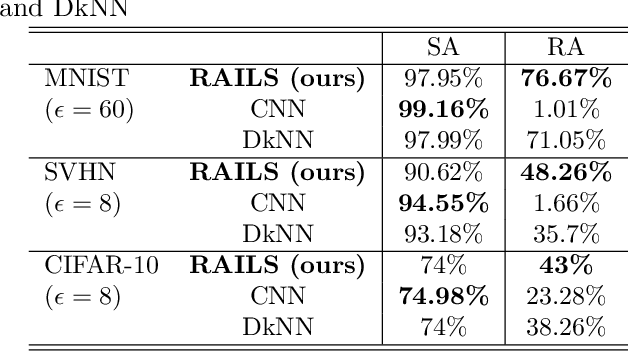

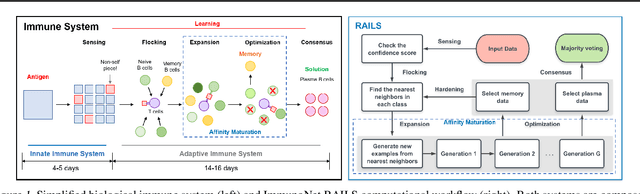

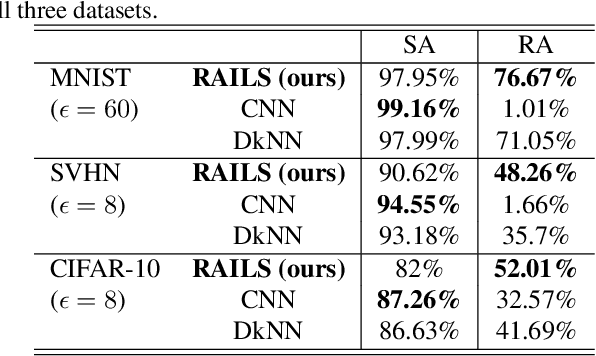

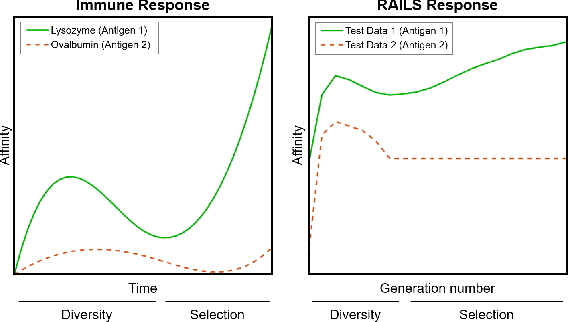

Abstract: Adversarial attacks against deep neural networks (DNNs) are continuously evolving, requiring increasingly powerful defense strategies. We develop a novel adversarial defense framework inspired by the adaptive immune system: the Robust Adversarial Immune-inspired Learning System (RAILS). Initializing a population of exemplars that is balanced across classes, RAILS starts from a uniform label distribution that encourages diversity and debiases a potentially corrupted initial condition. RAILS implements an evolutionary optimization process to adjust the label distribution and achieve specificity towards ground truth. RAILS displays a tradeoff between robustness (diversity) and accuracy (specificity), providing a new immune-inspired perspective on adversarial learning. We empirically validate the benefits of RAILS through several adversarial image classification experiments on the MNIST, SVHN, and CIFAR-10 datasets. For the PGD attack, RAILS is found to improve robustness over existing methods by at least 5.62%, 12.5%, and 10.32%, respectively, without appreciable loss of standard accuracy.
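The initialization step described in the abstract (a class-balanced population with a uniform label distribution) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `balanced_population` and `label_distribution`, the use of Euclidean distance, and the per-class nearest-neighbor selection rule are assumptions made only for illustration, and the evolutionary optimization stage is not shown.

```python
import math
from collections import Counter

def balanced_population(query, data, labels, k):
    """Pick the k nearest exemplars to `query` from each class.

    Assumption: exemplars are chosen by Euclidean distance in input
    space; the abstract does not specify the selection rule.
    """
    by_class = {}
    for x, y in zip(data, labels):
        by_class.setdefault(y, []).append(x)
    population = []
    for y, points in sorted(by_class.items()):
        nearest = sorted(points, key=lambda p: math.dist(p, query))[:k]
        population.extend((p, y) for p in nearest)
    return population

def label_distribution(population):
    """Return the fraction of the population carrying each label."""
    counts = Counter(y for _, y in population)
    total = sum(counts.values())
    return {y: c / total for y, c in counts.items()}

# Toy data: two classes with three 2-D points each (hypothetical values).
data = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
labels = [0, 0, 0, 1, 1, 1]
population = balanced_population((0.2, 0.1), data, labels, k=2)
distribution = label_distribution(population)
```

Because every class contributes the same number of exemplars, the starting label distribution is uniform regardless of how close the query lies to any one class, provided each class has at least `k` examples.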

Immuno-mimetic Deep Neural Networks (Immuno-Net)

Jun 27, 2021

Abstract: Biomimetics has played a key role in the evolution of artificial neural networks. Thus far, in silico metaphors have been dominated by concepts from neuroscience and cognitive psychology. In this paper we introduce a different type of biomimetic model, one that borrows concepts from the immune system, for designing robust deep neural networks. This immuno-mimetic model leads to a new computational biology framework for robustification of deep neural networks against adversarial attacks. Within this Immuno-Net framework we define a robust adaptive immune-inspired learning system (Immuno-Net RAILS) that emulates, in silico, the adaptive biological mechanisms of B-cells that are used to defend a mammalian host against pathogenic attacks. When applied to image classification tasks on benchmark datasets, Immuno-Net RAILS improves the adversarial accuracy of a baseline method, the DkNN-robustified CNN, by as much as 12.5%, without appreciable loss of accuracy on clean data.
