Stefan Wegenkittl

A Modular Test Bed for Reinforcement Learning Incorporation into Industrial Applications

Jun 02, 2023

Abstract: This application paper explores the potential of using reinforcement learning (RL) to address the demands of Industry 4.0, including shorter time-to-market, mass customization, and batch size one production. Specifically, we present a use case in which the task is to transport and assemble goods through a model factory following predefined rules. Each simulation run involves placing a specific number of goods of random color at the entry point. The objective is to transport the goods to the assembly station, where two rivets are installed in each product, connecting the upper part to the lower part. Following the installation of rivets, blue products must be transported to the exit, while green products are to be transported to storage. The study focuses on the application of reinforcement learning techniques to address this problem and improve the efficiency of the production process.

An Architecture for Deploying Reinforcement Learning in Industrial Environments

Jun 02, 2023

Abstract: Industry 4.0 is driven by demands like shorter time-to-market, mass customization of products, and batch size one production. Reinforcement Learning (RL), a machine learning paradigm shown to have great potential for reaching and surpassing human-level performance in numerous complex tasks, allows coping with these demands. In this paper, we present an OPC UA based Operational Technology (OT)-aware RL architecture, which extends the standard RL setting, combining it with the setting of digital twins. Moreover, we define an OPC UA information model allowing for a generalized plug-and-play-like approach for exchanging the RL agent used. Finally, we demonstrate and evaluate the architecture by creating a proof of concept. By solving a toy example, we show that this architecture can be used to determine the optimal policy using a real control system.

* This preprint has not undergone peer review or any post-submission improvements or corrections. The Version of Record of this contribution is published in Computer Aided Systems Theory - EUROCAST 2022 and is available online at https://doi.org/10.1007/978-3-031-25312-6_67

Deep Q-Learning versus Proximal Policy Optimization: Performance Comparison in a Material Sorting Task

Jun 02, 2023

Abstract: This paper presents a comparison between two well-known deep Reinforcement Learning (RL) algorithms: Deep Q-Learning (DQN) and Proximal Policy Optimization (PPO) in a simulated production system. We utilize a Petri Net (PN)-based simulation environment, which was previously proposed in related work. The performance of the two algorithms is compared based on several evaluation metrics, including average percentage of correctly assembled and sorted products, average episode length, and percentage of successful episodes. The results show that PPO outperforms DQN in terms of all evaluation metrics. The study highlights the advantages of policy-based algorithms in problems with high-dimensional state and action spaces. The study contributes to the field of deep RL in the context of production systems by providing insights into the effectiveness of different algorithms and their suitability for different tasks.

Sequential IoT Data Augmentation using Generative Adversarial Networks

Jan 13, 2021

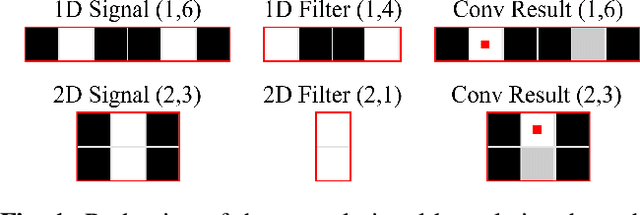

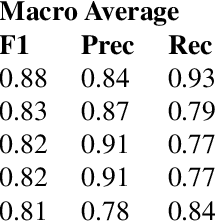

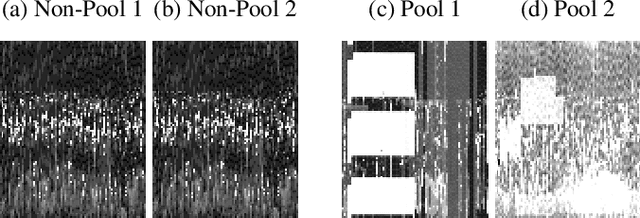

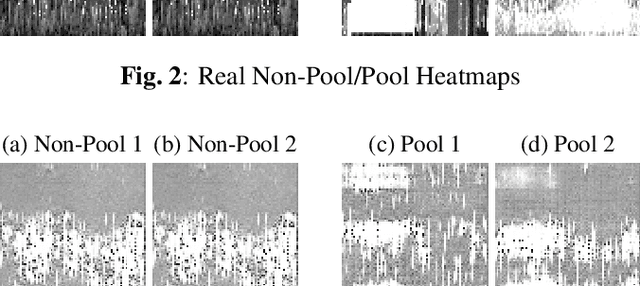

Abstract: Sequential data in industrial applications can be used to train and evaluate machine learning models (e.g. classifiers). Since gathering representative amounts of data is difficult and time-consuming, there is an incentive to generate it from a small ground truth. Data augmentation is a common method to generate more data through a priori knowledge, with one specific method, so-called generative adversarial networks (GANs), enabling data generation from noise. This paper investigates the possibility of using GANs to augment sequential Internet of Things (IoT) data, with an example implementation that generates household energy consumption data with and without swimming pools. The results of the example implementation seem subjectively similar to the original data. In addition to this subjective evaluation, the paper also introduces a quantitative evaluation technique for GANs if labels are provided. The positive results from the evaluation support the initial assumption that generating sequential data from a small ground truth is possible. This means that tedious data acquisition of sequential data can be shortened. In the future, the results of this paper may be included as a tool in machine learning, tackling the small data challenge.
