Sotiris Manitsaris

Beyond Pixels: Leveraging the Language of Soccer to Improve Spatio-Temporal Action Detection in Broadcast Videos

May 14, 2025

Abstract: State-of-the-art spatio-temporal action detection (STAD) methods show promising results for extracting soccer events from broadcast videos. However, when operated in the high-recall, low-precision regime required for exhaustive event coverage in soccer analytics, their lack of contextual understanding becomes apparent: many false positives could be resolved by considering a broader sequence of actions and game-state information. In this work, we address this limitation by reasoning at the game level and improving STAD through the addition of a denoising sequence transduction task. Sequences of noisy, context-free, player-centric predictions are processed alongside clean game-state information using a Transformer-based encoder-decoder model. By modeling extended temporal context and reasoning jointly over team-level dynamics, our method leverages the "language of soccer" (its tactical regularities and inter-player dependencies) to generate "denoised" sequences of actions. This approach improves both precision and recall in low-confidence regimes, enabling more reliable event extraction from broadcast video and complementing existing pixel-based methods.
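
As a rough illustration of how source/target pairs for a denoising transduction task could be built, the sketch below corrupts a clean event sequence into a noisy, detector-like one (missed detections, wrong labels, temporal jitter, spurious events). It is a minimal sketch under assumptions the abstract does not state: the action vocabulary, the `Event` record, the `corrupt` and `to_tokens` helpers, and their probabilities are all hypothetical, whereas the abstract describes noisy predictions coming from an actual STAD model.

```python
import random
from dataclasses import dataclass

# Hypothetical label set; the paper's actual action classes are not listed here.
ACTIONS = ["pass", "reception", "shot", "dribble", "tackle"]


@dataclass(frozen=True)
class Event:
    frame: int   # frame index at which the action occurs
    player: int  # player identifier (assumed 0-21 for two teams of 11)
    action: str  # action label from ACTIONS


def corrupt(events, rng, p_drop=0.1, p_relabel=0.1, p_insert=0.2, max_shift=3):
    """Turn a clean event sequence into a noisy, detector-like one.

    The clean sequence remains the denoising target; this only simulates
    missed detections, wrong labels, temporal jitter and false positives.
    """
    noisy = []
    for ev in events:
        if rng.random() < p_drop:  # missed detection
            continue
        action = ev.action
        if rng.random() < p_relabel:  # wrong action class
            action = rng.choice(ACTIONS)
        frame = max(0, ev.frame + rng.randint(-max_shift, max_shift))  # jitter
        noisy.append(Event(frame, ev.player, action))
        if rng.random() < p_insert:  # spurious false positive shortly after
            noisy.append(Event(ev.frame + rng.randint(1, 10),
                               rng.randrange(22), rng.choice(ACTIONS)))
    # Emit events in temporal order, as a detector would
    return sorted(noisy, key=lambda e: (e.frame, e.player))


def to_tokens(events):
    """Serialize events as 'player:action' tokens for a sequence model."""
    return [f"{e.player}:{e.action}" for e in events]


if __name__ == "__main__":
    clean = [Event(10, 3, "pass"), Event(25, 7, "reception"), Event(40, 7, "shot")]
    noisy = corrupt(clean, random.Random(0))
    print(to_tokens(noisy), "->", to_tokens(clean))
```

Pairs such as (to_tokens(noisy), to_tokens(clean)) could then serve as source and target for a sequence-to-sequence model; the Transformer itself and the game-state inputs are left out of this sketch.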

Game State and Spatio-temporal Action Detection in Soccer using Graph Neural Networks and 3D Convolutional Networks

Feb 21, 2025

Abstract: Soccer analytics relies on two data sources: the positions of the players on the pitch and the sequences of events they perform. With around 2000 ball events per game, annotating them precisely and exhaustively from a monocular video stream remains a tedious and costly manual task. While state-of-the-art spatio-temporal action detection methods show promise for automating this task, they lack contextual understanding of the game. Assuming that professional players' behaviors are interdependent, we hypothesize that incorporating information about surrounding players, such as their position, velocity, and team membership, can enhance purely visual predictions. We propose a spatio-temporal action detection approach that combines visual and game-state information via Graph Neural Networks trained end-to-end with state-of-the-art 3D CNNs, and show that integrating the game state improves the evaluation metrics.
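
The kind of game-state context described above can be illustrated with a single message-passing step over the players on the pitch. This is a minimal sketch, not the paper's architecture: the `Player` record, the distance-based neighborhood (`radius`), the relative feature encoding, and the unweighted mean aggregation are assumptions. A trained GNN would add learned weights and nonlinearities before fusing the result with the 3D CNN's visual features.

```python
import math
from dataclasses import dataclass


@dataclass
class Player:
    x: float   # pitch position (m)
    y: float
    vx: float  # velocity (m/s)
    vy: float
    team: int  # team membership (0 or 1)


def relative_features(p, ref):
    """Features of player p as seen from reference player ref."""
    same_team = 1.0 if p.team == ref.team else 0.0
    return [p.x - ref.x, p.y - ref.y, p.vx, p.vy, same_team]


def message_passing(players, target, radius=20.0):
    """One unweighted mean-aggregation step for the node `target`.

    Neighbors are all other players within `radius` meters (assumed graph).
    Returns the target's own features concatenated with the neighbor mean.
    """
    ref = players[target]
    own = relative_features(ref, ref)
    neigh = [relative_features(p, ref) for i, p in enumerate(players)
             if i != target and math.hypot(p.x - ref.x, p.y - ref.y) <= radius]
    if not neigh:  # isolated player: no context available
        return own + [0.0] * len(own)
    mean = [sum(col) / len(neigh) for col in zip(*neigh)]
    return own + mean
```

Using relative positions keeps the aggregated context invariant to where on the pitch the action happens, which is one plausible design choice among several.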

Deep state-space modeling for explainable representation, analysis, and generation of professional human poses

Apr 13, 2023

Abstract: The analysis of human movement has been studied extensively because of its wide variety of practical applications. Nevertheless, the state of the art still faces scientific challenges in modeling human movement. First, new models that account for the stochasticity of human movement and the physical structure of the human body are required to accurately predict how full-body motion descriptors evolve over time. Second, existing deep learning algorithms that generate human movement lack comprehensible representations of it, so the explainability of their body posture predictions still needs to be improved. This paper addresses these challenges by introducing three novel approaches for creating explainable representations of human movement. In this work, full-body movement is formulated as a state-space model of a dynamic system whose parameters are estimated using deep learning and statistical algorithms. The representations adhere to the structure of the Gesture Operational Model (GOM), which describes movement through its spatial and temporal assumptions. Two of the approaches are deep state-space models that apply a nonlinear network parameterization to provide interpretable posture predictions. The third method trains GOM representations through one-shot training with Kalman filters. This training strategy enables users to model single movements and estimate their mathematical representation with procedures that require less computational power than deep learning algorithms. Finally, two applications of the generated representations are presented: the first is the accurate generation of human movements, and the second is the analysis of body dexterity in professional movements, where dynamic associations between body joints and meaningful motion descriptors are identified.
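
To make the one-shot Kalman filter idea concrete, the sketch below estimates the coefficients of a single motion descriptor modeled, for illustration only, as a second-order autoregressive process x_t = a1·x_{t-1} + a2·x_{t-2}. The full GOM also couples descriptors across joints, which is omitted here; the function names, the random-walk prior on the coefficients, and the noise values `q` and `r` are assumptions.

```python
import math


def kalman_ar2(xs, q=1e-6, r=1e-4, p0=1.0):
    """Estimate [a1, a2] in x_t = a1*x_{t-1} + a2*x_{t-2} + noise with a Kalman filter.

    State: the two coefficients, modeled as a random walk (process noise q).
    Observation: x_t = h_t . theta with h_t = [x_{t-1}, x_{t-2}] (noise r).
    """
    theta = [0.0, 0.0]
    P = [[p0, 0.0], [0.0, p0]]
    for t in range(2, len(xs)):
        h = [xs[t - 1], xs[t - 2]]
        # Predict step: identity transition, covariance grows by q
        P = [[P[0][0] + q, P[0][1]], [P[1][0], P[1][1] + q]]
        # Innovation variance S = h P h^T + r and gain K = P h^T / S
        Ph = [P[0][0] * h[0] + P[0][1] * h[1], P[1][0] * h[0] + P[1][1] * h[1]]
        S = h[0] * Ph[0] + h[1] * Ph[1] + r
        K = [Ph[0] / S, Ph[1] / S]
        # Update step with the prediction error of the current coefficients
        err = xs[t] - (h[0] * theta[0] + h[1] * theta[1])
        theta = [theta[0] + K[0] * err, theta[1] + K[1] * err]
        # Covariance update P = (I - K h) P
        hP = [h[0] * P[0][0] + h[1] * P[1][0], h[0] * P[0][1] + h[1] * P[1][1]]
        P = [[P[i][j] - K[i] * hP[j] for j in range(2)] for i in range(2)]
    return theta


def generate_ar2(theta, x0, x1, n):
    """Roll the estimated model forward from two initial values."""
    xs = [x0, x1]
    while len(xs) < n:
        xs.append(theta[0] * xs[-1] + theta[1] * xs[-2])
    return xs


if __name__ == "__main__":
    # A sinusoid satisfies x_t = 2cos(w) x_{t-1} - x_{t-2} exactly
    w = 0.3
    xs = [math.sin(w * t) for t in range(200)]
    print(kalman_ar2(xs), [2 * math.cos(w), -1.0])
```

A single pass over one recording yields the coefficients, which generate_ar2 can then roll forward to synthesize a trajectory; no gradient-based training is involved, which is consistent with the lower computational cost claimed above.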

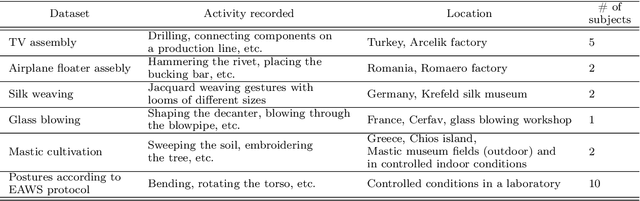

Motion Capture Benchmark of Real Industrial Tasks and Traditional Crafts for Human Movement Analysis

Apr 03, 2023

Abstract: Human movement analysis is a key area of research in robotics, biomechanics, and data science. It encompasses tracking, posture estimation, and movement synthesis. While numerous methodologies have evolved over time, a systematic and quantitative evaluation of these approaches using verifiable ground-truth data of three-dimensional human movement is still required to define the current state of the art. This paper presents seven datasets recorded using inertial-based motion capture. The datasets contain professional gestures carried out by industrial operators and skilled craftsmen, performed in situ under real conditions. The datasets were created for research in human motion modeling, analysis, and generation. The data collection protocols are described in detail, and a preliminary analysis of the collected data is provided as a benchmark. The Gesture Operational Model, a hybrid stochastic-biomechanical approach based on kinematic descriptors, is used to model the dynamics of the experts' movements and to create mathematical representations of their motion trajectories for analyzing them and quantifying the experts' body dexterity. The models enabled the accurate generation of professional human poses and an intuitive description of how body joints cooperate and change over time during the performance of the task.

Computational ergonomics for task delegation in Human-Robot Collaboration: spatiotemporal adaptation of the robot to the human through contactless gesture recognition

Mar 22, 2022

Abstract: The high prevalence of work-related musculoskeletal disorders (WMSDs) could be addressed by optimizing Human-Robot Collaboration (HRC) frameworks for manufacturing applications. In this context, this paper proposes two hypotheses for ergonomically effective task delegation and HRC. The first hypothesis states that it is possible to quantify the ergonomics of professional tasks using motion data from a reduced set of sensors, so that the most dangerous tasks can then be delegated to a collaborative robot. The second hypothesis is that including gesture recognition and spatial adaptation can improve the ergonomics of an HRC scenario by avoiding needless motions that could expose operators to ergonomic risks and by lowering the physical effort required of them. An HRC scenario for a television manufacturing process is optimized to test both hypotheses. For the ergonomic evaluation, motion primitives with known ergonomic risks were modeled in order to detect them in professional tasks and to estimate a risk score based on the European Assembly Worksheet (EAWS). A deep learning gesture recognition module trained with egocentric television assembly data was used to complement the collaboration between the human operator and the robot. Additionally, a skeleton-tracking algorithm provided the robot with information about the operator's pose, allowing it to spatially adapt its motion to the operator's anthropometrics. Three experiments were conducted to determine the effect of gesture recognition and spatial adaptation on the operator's range of motion. The rate of spatial adaptation was used as a key performance indicator (KPI), and a new KPI for measuring the reduction in the operator's motion is presented.
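
A motion-reduction KPI of this kind could, for instance, compare each joint's range of motion with and without spatial adaptation. The abstract does not define the new KPI, so the formula below (percentage reduction of the max-minus-min joint-angle range), the joint names, and the function names are hypothetical illustrations only.

```python
def range_of_motion(angles):
    """Range of motion (max - min) of one joint-angle series, in degrees."""
    return max(angles) - min(angles)


def motion_reduction_pct(baseline, adapted):
    """Hypothetical KPI: per-joint % reduction in range of motion.

    baseline, adapted: dicts mapping a joint name to its angle series,
    recorded without and with spatial adaptation respectively.
    """
    kpi = {}
    for joint, angles in baseline.items():
        rom_base = range_of_motion(angles)
        rom_adapt = range_of_motion(adapted[joint])
        # Guard against joints that did not move in the baseline condition
        kpi[joint] = 100.0 * (rom_base - rom_adapt) / rom_base if rom_base > 0 else 0.0
    return kpi


if __name__ == "__main__":
    baseline = {"shoulder_flexion": [10, 70, 40], "trunk_flexion": [0, 30, 15]}
    adapted = {"shoulder_flexion": [20, 50, 35], "trunk_flexion": [5, 29, 10]}
    print(motion_reduction_pct(baseline, adapted))
```

A positive value means the adapted scenario required a smaller joint excursion than the baseline; a negative value would flag a joint whose motion increased.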

3D Reconstruction of Deformable Revolving Object under Heavy Hand Interaction

Aug 05, 2019

Abstract: We reconstruct a deformable 3D object over time, in the context of a live pottery-making process in which the crafter molds the object. Because the object undergoes heavy hand interaction and is being deformed, classical techniques cannot be applied. We use particle energy optimization to estimate the object profile and exploit the object's radial symmetry to make the reconstruction more robust to both occlusion and noise. Our method works with an unconstrained, scalable setup of one or more depth sensors. We evaluate it on our database (released upon publication) on both a per-frame and a temporal basis and show that it significantly outperforms the state of the art, achieving an average object reconstruction error of 7.60 mm. Further ablation studies demonstrate the effectiveness of our method.
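
The benefit of radial symmetry can be illustrated without the particle energy optimization: if the revolution axis is known, every visible surface point at a given height constrains the same radius, so a robust statistic over points pooled from all visible angles recovers the profile even when part of the object is occluded. The sketch below is that simpler stand-in, not the authors' method; the known vertical axis, the height binning (`bin_height`), and the use of the median to reject hand points are assumptions.

```python
import math
from statistics import median


def radial_profile(points, axis_xy, bin_height=0.01):
    """Estimate r(z) of a solid of revolution from 3D points (meters).

    points: iterable of (x, y, z); axis_xy: (cx, cy) of an assumed vertical axis.
    Returns {bin-center height: median radius} sorted by height.
    """
    cx, cy = axis_xy
    bins = {}
    for x, y, z in points:
        k = int(math.floor(z / bin_height))  # height bin index
        bins.setdefault(k, []).append(math.hypot(x - cx, y - cy))
    # The median pools all visible angles and discards outliers such as hand points
    return {round((k + 0.5) * bin_height, 6): median(rs)
            for k, rs in sorted(bins.items())}
```

Because every angle at a given height votes for the same radius, a sensor that sees only one side of the pot still constrains the full profile, which is the intuition behind the robustness to occlusion claimed above.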