Somenath Kuiry

An ensemble framework approach of hybrid Quantum convolutional neural networks for classification of breast cancer images

Sep 24, 2024

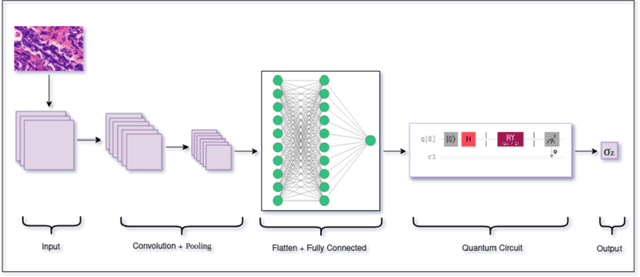

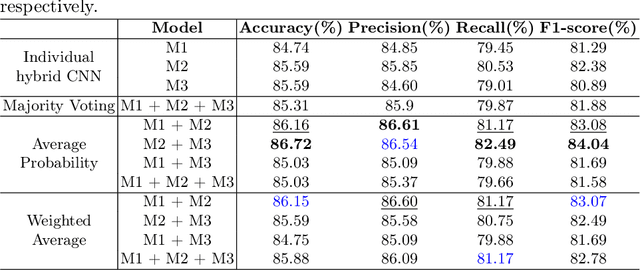

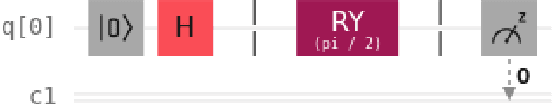

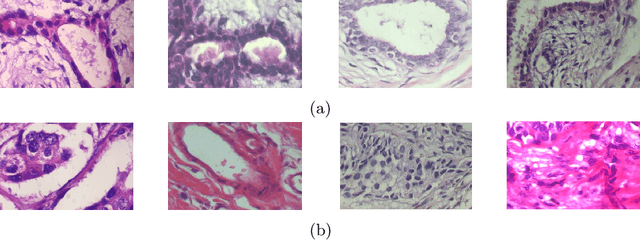

Abstract: Quantum neural networks are deemed suitable replacements for classical neural networks because they can learn and scale up network models using quantum-exclusive phenomena such as superposition and entanglement. However, in the noisy intermediate-scale quantum (NISQ) era, the trainability and expressibility of quantum models are still under investigation. Medical image classification, on the other hand, lends itself well to deep learning, particularly convolutional neural networks. In this paper, we study three hybrid classical-quantum neural network architectures and combine them using standard ensembling techniques on a breast cancer histopathological dataset. The best accuracy obtained by an individual model is 85.59%, whereas the ensemble reaches an accuracy as high as 86.72%, an improvement over both the individual hybrid networks and their classical neural network counterparts.
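The abstract does not say which "standard ensembling techniques" were used. As an illustration only, the sketch below shows two common choices, soft voting (averaging class probabilities) and majority voting, applied to the outputs of three models for a single image. The function names and the probability values are hypothetical and are not taken from the paper.

```python
from collections import Counter


def soft_vote(prob_lists):
    """Average per-class probabilities across models; return (class index, averages)."""
    n_models = len(prob_lists)
    n_classes = len(prob_lists[0])
    avg = [sum(p[c] for p in prob_lists) / n_models for c in range(n_classes)]
    best = max(range(n_classes), key=lambda c: avg[c])
    return best, avg


def majority_vote(prob_lists):
    """Each model votes for its own argmax class; return the most common vote."""
    votes = [max(range(len(p)), key=lambda c: p[c]) for p in prob_lists]
    return Counter(votes).most_common(1)[0][0]


# Hypothetical outputs of three models for one image (classes: 0, 1).
model_probs = [
    [0.90, 0.10],  # model A is very confident in class 0
    [0.45, 0.55],  # model B leans slightly towards class 1
    [0.45, 0.55],  # model C leans slightly towards class 1
]

soft_class, soft_avg = soft_vote(model_probs)
hard_class = majority_vote(model_probs)
print("soft voting:", soft_class, soft_avg)
print("majority voting:", hard_class)
```

In this made-up case the two rules disagree: soft voting follows the one confident model, while majority voting follows the two weakly confident ones. That difference is one reason an ensemble's accuracy can depend on the combination rule chosen.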

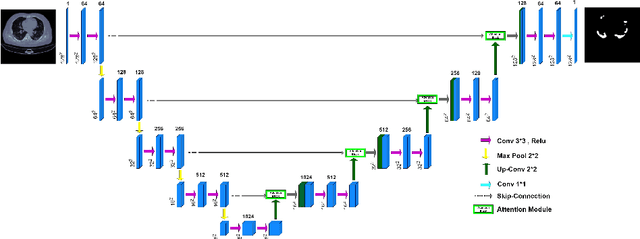

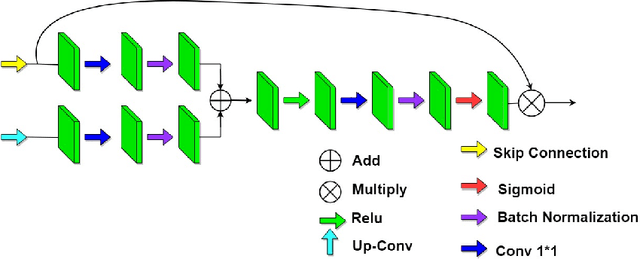

COVID-CT-H-UNet: a novel COVID-19 CT segmentation network based on attention mechanism and Bi-category Hybrid loss

Mar 16, 2024

Abstract: Since 2019, the global COVID-19 outbreak has emerged as a crucial focus in healthcare research. Although RT-PCR is the primary method for COVID-19 detection, its long detection time poses a significant challenge. Consequently, supplementing RT-PCR with the pathological study of COVID-19 through CT imaging has become imperative. The current segmentation approach based on TVLoss enhances the connectivity of afflicted areas. Nevertheless, it tends to misclassify normal pixels between certain adjacent diseased regions as diseased pixels. The typical binary cross-entropy (BCE) based U-shaped network attends to the entire CT image without emphasizing the affected regions, which results in hazy borders and low contrast in the predicted output. In addition, infected pixels make up only a small fraction of a CT image, which makes it difficult for segmentation models to predict them accurately. In this paper, we propose COVID-CT-H-UNet, a COVID-19 CT segmentation network, to solve these problems. To recognize the unaffected pixels between neighbouring diseased regions, extra visual layer information is captured by combining an attention module on the skip connections with the proposed composite function, Bi-category Hybrid Loss. The hazy boundaries and poor contrast caused by the BCE loss in conventional techniques are resolved by this composite function, which concentrates on the pixels in the diseased area. Experiments show that, compared with previous COVID-19 segmentation networks, the segmentation performance of the proposed COVID-CT-H-UNet is greatly improved, and the network may be used to identify and study clinical COVID-19.

Regularizing CNNs using Confusion Penalty Based Label Smoothing for Histopathology Images

Mar 16, 2024

Abstract: Deep learning, particularly Convolutional Neural Networks (CNNs), has been successful in computer vision tasks and medical image analysis. However, modern CNNs can be overconfident, making them difficult to deploy in real-world scenarios. Researchers have proposed regularization techniques such as Label Smoothing (LS), which introduces soft labels for the training data and captures disagreement or lack of confidence in the training phase, making the classifier more regularized. Although LS is simple and effective, traditional LS techniques use a weighted average between the target distribution and a uniform distribution across the classes, which limits both the objective of LS and its performance. This paper introduces a novel LS technique based on the confusion penalty, which treats the model's confusion for each class with more importance than others. We have performed extensive experiments with this technique on well-known CNN architectures using publicly available Colorectal Histology datasets and obtained satisfactory results. We have also compared our findings with the state of the art and shown our method's efficacy with reliability diagrams and t-distributed Stochastic Neighbor Embedding (t-SNE) plots of the feature space.
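For reference, the traditional label smoothing that the abstract describes (a weighted average of the one-hot target and a uniform distribution over the classes) can be sketched as follows. This is the standard baseline only, not the confusion-penalty variant proposed in the paper; the function name `smooth_labels` and the smoothing weight `epsilon=0.1` are illustrative assumptions.

```python
def smooth_labels(one_hot, epsilon=0.1):
    """Traditional label smoothing: (1 - epsilon) * target + epsilon * uniform.

    `epsilon` is an assumed smoothing weight; the uniform distribution
    assigns 1 / K to each of the K classes.
    """
    k = len(one_hot)
    return [(1.0 - epsilon) * y + epsilon / k for y in one_hot]


# One-hot target for class 2 in a hypothetical 4-class problem.
target = [0.0, 0.0, 1.0, 0.0]
smoothed = smooth_labels(target, epsilon=0.1)
print(smoothed)
```

Every wrong class receives the same share, `epsilon / K`, regardless of how often the model actually confuses it with the true class. This uniformity is the limitation the abstract points to.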

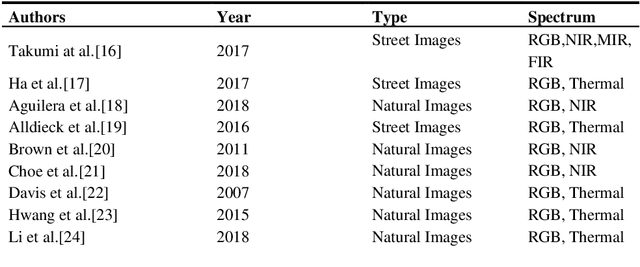

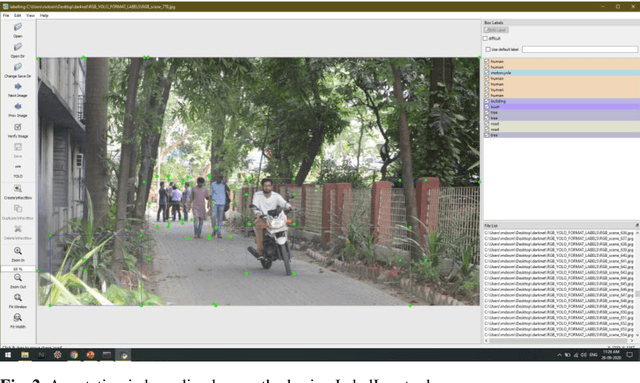

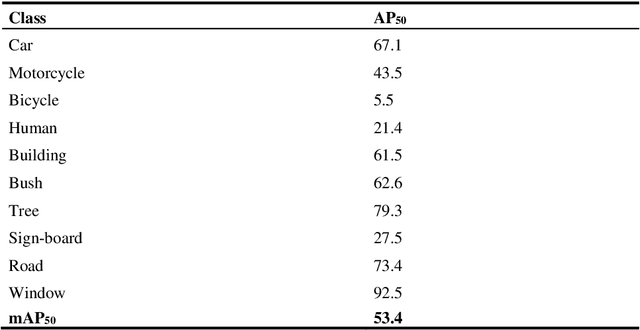

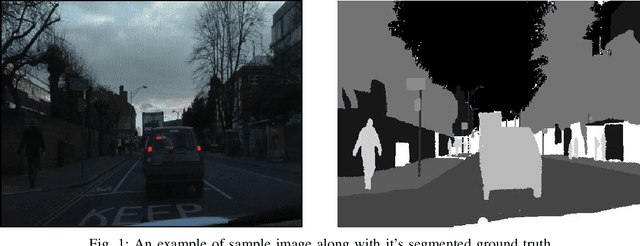

Multispectral Object Detection with Deep Learning

Feb 05, 2021

Abstract: Object detection in natural scenes can be a challenging task. In many real-life situations, the visible spectrum is not suitable for traditional computer vision tasks. Moving outside the visible range, for example to the thermal spectrum or to near-infrared (NIR) images, is much more beneficial in low-visibility conditions, and NIR images are very helpful for understanding an object's material quality. In this work, we use images from both the thermal and NIR spectra for the object detection task. As multispectral data with both thermal and NIR images was not available for the detection task, we had to collect the data ourselves. Data collection is a time-consuming process, and we faced many obstacles that we had to overcome. We train the YOLO v3 network from scratch to detect objects in multispectral images. To avoid overfitting, we also applied data augmentation and tuned the hyperparameters.

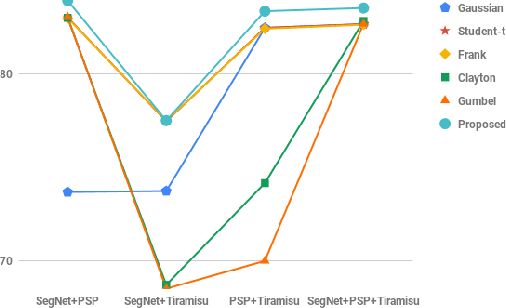

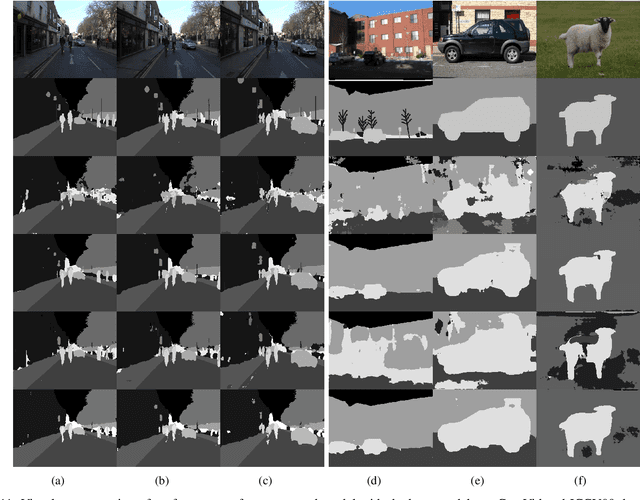

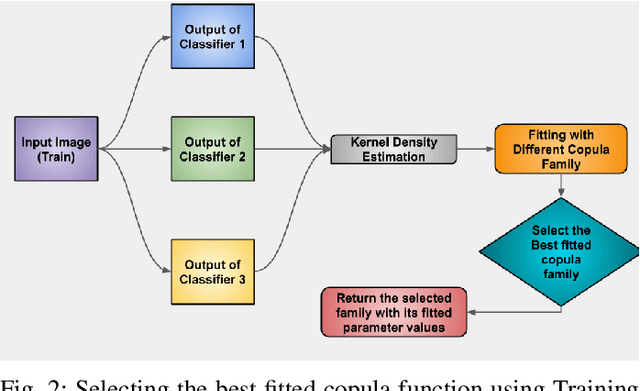

EDC3: Ensemble of Deep-Classifiers using Class-specific Copula functions to Improve Semantic Image Segmentation

Mar 12, 2020

Abstract: Many fusion techniques for image segmentation have been reported in the literature, but they primarily focus on the observed output, belief score, or probability score of the output classes. In the present work, we use the inter-source statistical dependency among different classifiers to ensemble different deep learning techniques for semantic segmentation of images. For this purpose, a class-wise Copula-based ensembling method is proposed for solving the multi-class segmentation problem. Experimentally, the proposed class-specific Copula functions improve semantic image segmentation performance more than the traditionally used single Copula function. The performance is also compared with three state-of-the-art ensembling methods.
