Soeren Klemm

Exploiting the Full Capacity of Deep Neural Networks while Avoiding Overfitting by Targeted Sparsity Regularization

Feb 21, 2020

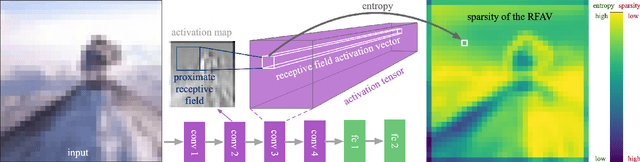

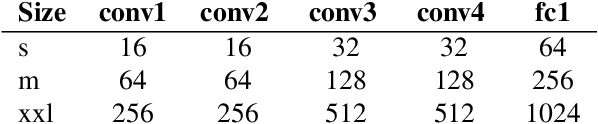

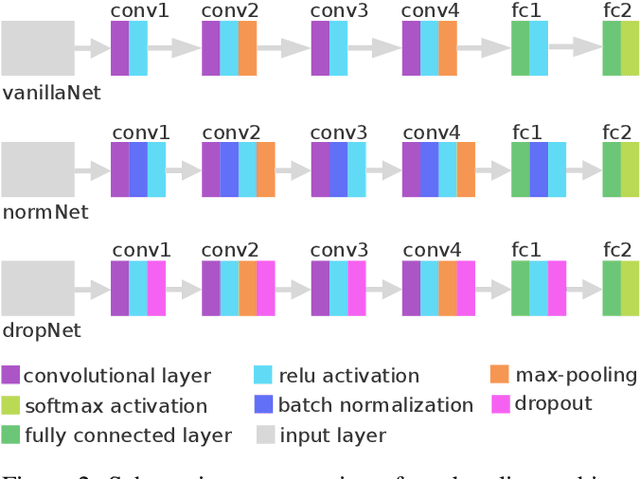

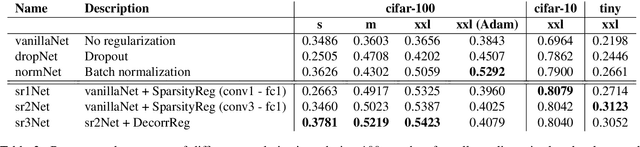

Abstract: Overfitting is one of the most common problems when training deep neural networks on comparatively small datasets. Here, we demonstrate that neural network activation sparsity is a reliable indicator for overfitting, which we utilize to propose novel targeted sparsity visualization and regularization strategies. Based on these strategies, we are able to understand and counteract overfitting caused by activation sparsity and filter correlation in a targeted layer-by-layer manner. Our results demonstrate that targeted sparsity regularization can efficiently be used to regularize well-known datasets and architectures with a significant increase in image classification performance while outperforming both dropout and batch normalization. Ultimately, our study reveals novel insights into the contradicting concepts of activation sparsity and network capacity by demonstrating that targeted sparsity regularization enables salient and discriminative feature learning while exploiting the full capacity of deep models without suffering from overfitting, even when trained excessively.
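
To make the notion of layer-by-layer activation sparsity concrete, here is a minimal sketch that reports, for each layer, the fraction of post-ReLU activations that are zero. This is one common way to quantify sparsity and is an assumption for illustration; the abstract does not state the exact measure used in the paper. The layer names and values are hypothetical.

```python
def relu(x):
    """Rectified linear unit: negative inputs become zero."""
    return x if x > 0.0 else 0.0


def activation_sparsity(activations, eps=0.0):
    """Return the fraction of activations with magnitude <= eps.

    Assumption: "sparsity" means the share of inactive units; the
    paper may define it differently.
    """
    if not activations:
        raise ValueError("activations must be non-empty")
    inactive = sum(1 for a in activations if abs(a) <= eps)
    return inactive / len(activations)


def layerwise_sparsity(layer_outputs):
    """Map each layer name to its activation sparsity."""
    return {name: activation_sparsity(vals) for name, vals in layer_outputs.items()}


# Hypothetical pre-activation values for two layers.
pre_activations = {
    "conv1": [-1.0, 0.5, 2.0, -0.3],
    "conv2": [-2.0, -1.0, -0.5, 0.1],
}
post_activations = {
    name: [relu(v) for v in vals] for name, vals in pre_activations.items()
}
report = layerwise_sparsity(post_activations)
for name, value in report.items():
    print(f"{name}: {value:.2f}")
```

Tracking such a per-layer value over training epochs is one way to see which layers become increasingly inactive, which is the kind of signal the abstract describes as an indicator of overfitting.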

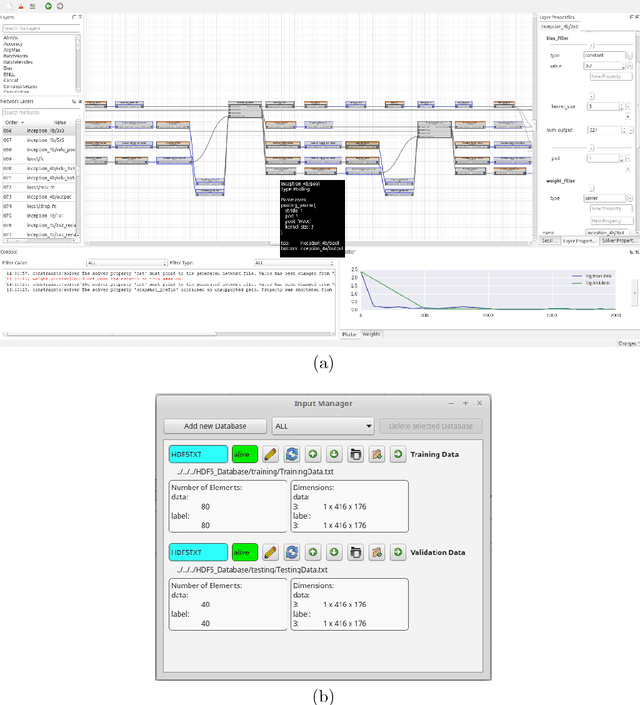

Barista - a Graphical Tool for Designing and Training Deep Neural Networks

Feb 13, 2018

Abstract: In recent years, the importance of deep learning has significantly increased in pattern recognition, computer vision, and artificial intelligence research, as well as in industry. However, despite the existence of multiple deep learning frameworks, there is a lack of comprehensible and easy-to-use high-level tools for the design, training, and testing of deep neural networks (DNNs). In this paper, we introduce Barista, an open-source graphical high-level interface for the Caffe deep learning framework. While Caffe is one of the most popular frameworks for training DNNs, editing prototxt files in order to specify the net architecture and hyperparameters can become a cumbersome and error-prone task. Instead, Barista offers a fully graphical user interface with a graph-based net topology editor and provides an end-to-end training facility for DNNs, which allows researchers to focus on solving their problems without having to write code, edit text files, or manually parse logged data.
