Sirui Lu

Can Theoretical Physics Research Benefit from Language Agents?

Jun 06, 2025

Abstract:Large Language Models (LLMs) are rapidly advancing across diverse domains, yet their application in theoretical physics research is not yet mature. This position paper argues that LLM agents can potentially help accelerate theoretical, computational, and applied physics when properly integrated with domain knowledge and toolbox. We analyze current LLM capabilities for physics -- from mathematical reasoning to code generation -- identifying critical gaps in physical intuition, constraint satisfaction, and reliable reasoning. We envision future physics-specialized LLMs that could handle multimodal data, propose testable hypotheses, and design experiments. Realizing this vision requires addressing fundamental challenges: ensuring physical consistency, and developing robust verification methods. We call for collaborative efforts between physics and AI communities to help advance scientific discovery in physics.

Quantum Private Distributed Learning Through Blind Quantum Computing

Mar 15, 2021

Abstract:Private distributed learning studies the problem of how multiple distributed entities collaboratively train a shared deep network with their private data unrevealed. With the security provided by the protocols of blind quantum computation, the cooperation between quantum physics and machine learning may lead to unparalleled prospects for solving private distributed learning tasks. In this paper, we introduce a quantum protocol for distributed learning that is able to utilize the computational power of the remote quantum servers while keeping the private data safe. For concreteness, we first introduce a protocol for private single-party delegated training of variational quantum classifiers based on blind quantum computing and then extend this protocol to multiparty private distributed learning incorporated with differential privacy. We carry out extensive numerical simulations with different real-life datasets and encoding strategies to benchmark the effectiveness of our protocol. We find that our protocol is robust to experimental imperfections and is secure under the gradient attack after the incorporation of differential privacy. Our results show the potential for handling computationally expensive distributed learning tasks with privacy guarantees, thus providing a valuable guide for exploring quantum advantages from the security perspective in the field of machine learning with real-life applications.

Tensor networks and efficient descriptions of classical data

Mar 11, 2021

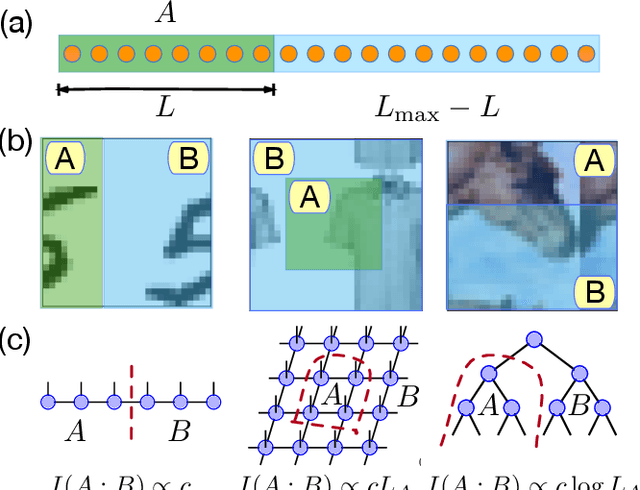

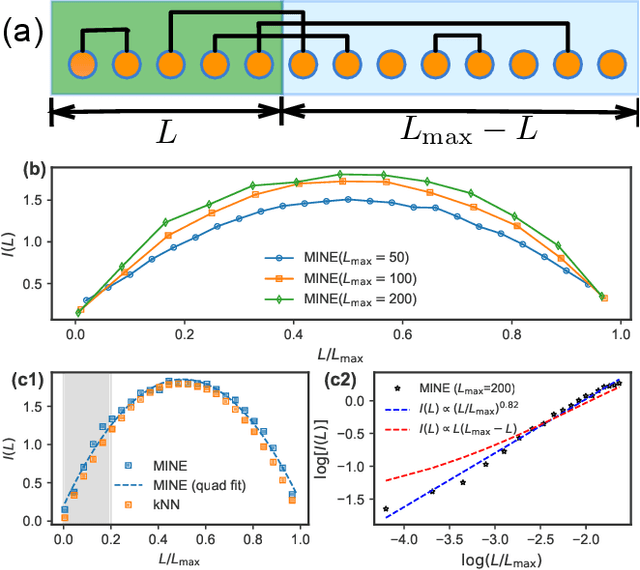

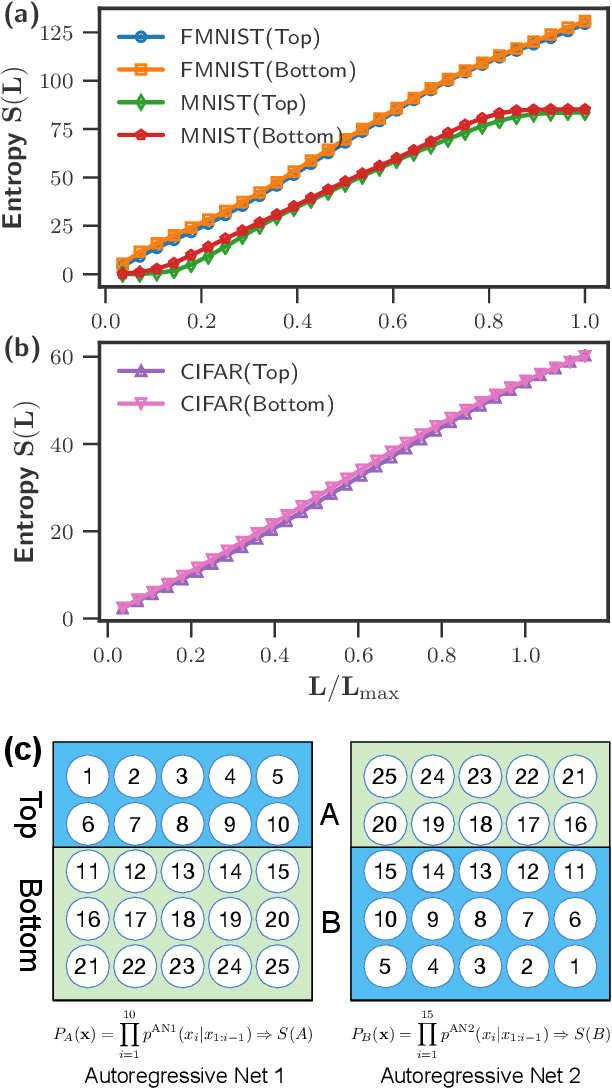

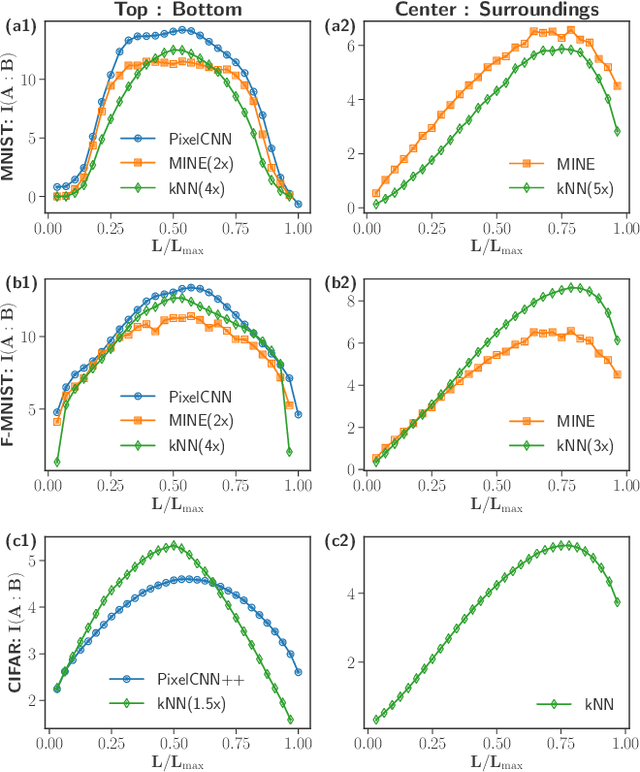

Abstract:We investigate the potential of tensor network based machine learning methods to scale to large image and text data sets. For that, we study how the mutual information between a subregion and its complement scales with the subsystem size $L$, similarly to how it is done in quantum many-body physics. We find that for text, the mutual information scales as a power law $L^\nu$ with a close to volume law exponent, indicating that text cannot be efficiently described by 1D tensor networks. For images, the scaling is close to an area law, hinting that 2D tensor networks such as PEPS could have adequate expressibility. For the numerical analysis, we introduce a mutual information estimator based on autoregressive networks, and we also use convolutional neural networks in a neural estimator method.

Quantum Adversarial Machine Learning

Dec 31, 2019

Abstract:Adversarial machine learning is an emerging field that focuses on studying vulnerabilities of machine learning approaches in adversarial settings and developing techniques accordingly to make learning robust to adversarial manipulations. It plays a vital role in various machine learning applications and has attracted tremendous attention across different communities recently. In this paper, we explore different adversarial scenarios in the context of quantum machine learning. We find that, similar to traditional classifiers based on classical neural networks, quantum learning systems are likewise vulnerable to crafted adversarial examples, independent of whether the input data is classical or quantum. In particular, we find that a quantum classifier that achieves nearly the state-of-the-art accuracy can be conclusively deceived by adversarial examples obtained via adding imperceptible perturbations to the original legitimate samples. This is explicitly demonstrated with quantum adversarial learning in different scenarios, including classifying real-life images (e.g., handwritten digit images in the dataset MNIST), learning phases of matter (such as, ferromagnetic/paramagnetic orders and symmetry protected topological phases), and classifying quantum data. Furthermore, we show that based on the information of the adversarial examples at hand, practical defense strategies can be designed to fight against a number of different attacks. Our results uncover the notable vulnerability of quantum machine learning systems to adversarial perturbations, which not only reveals a novel perspective in bridging machine learning and quantum physics in theory but also provides valuable guidance for practical applications of quantum classifiers based on both near-term and future quantum technologies.
